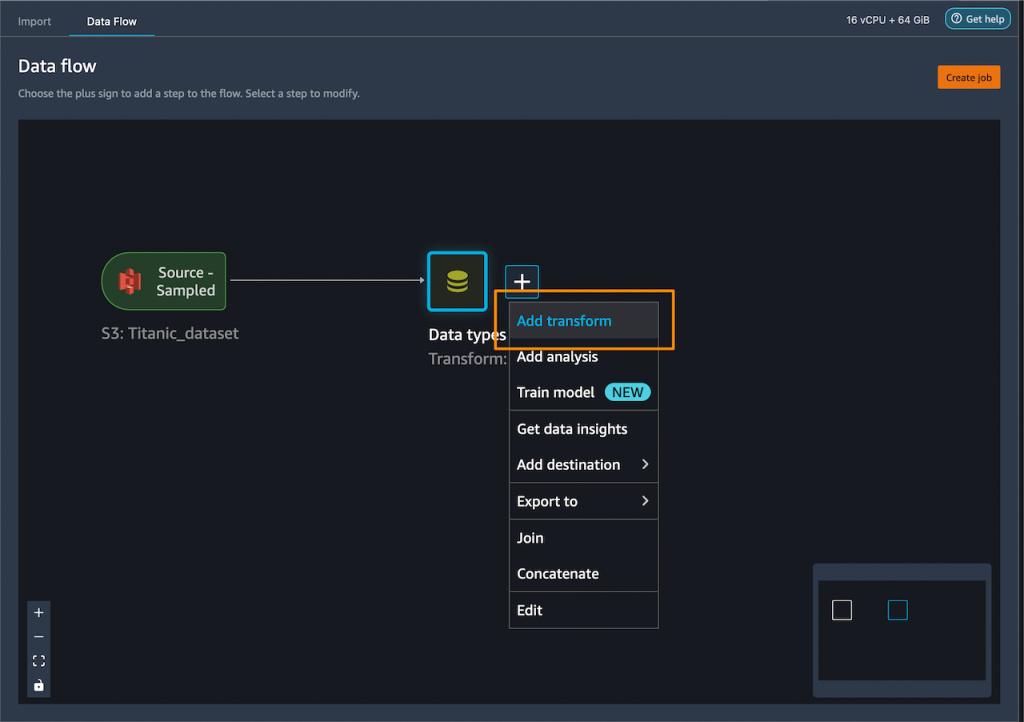

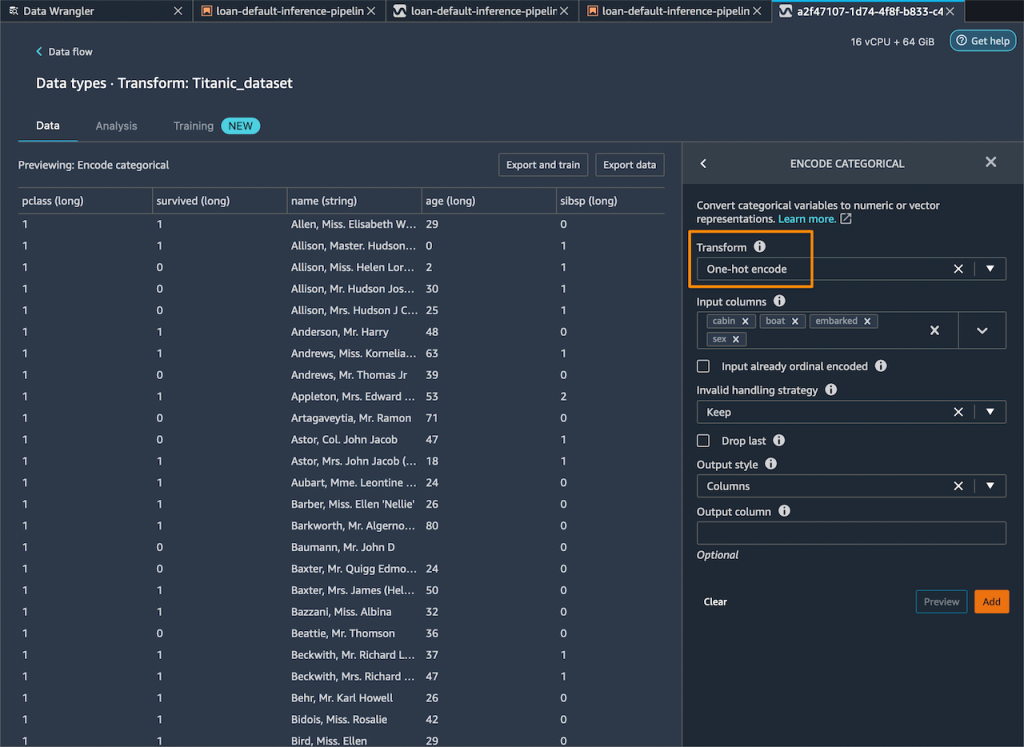

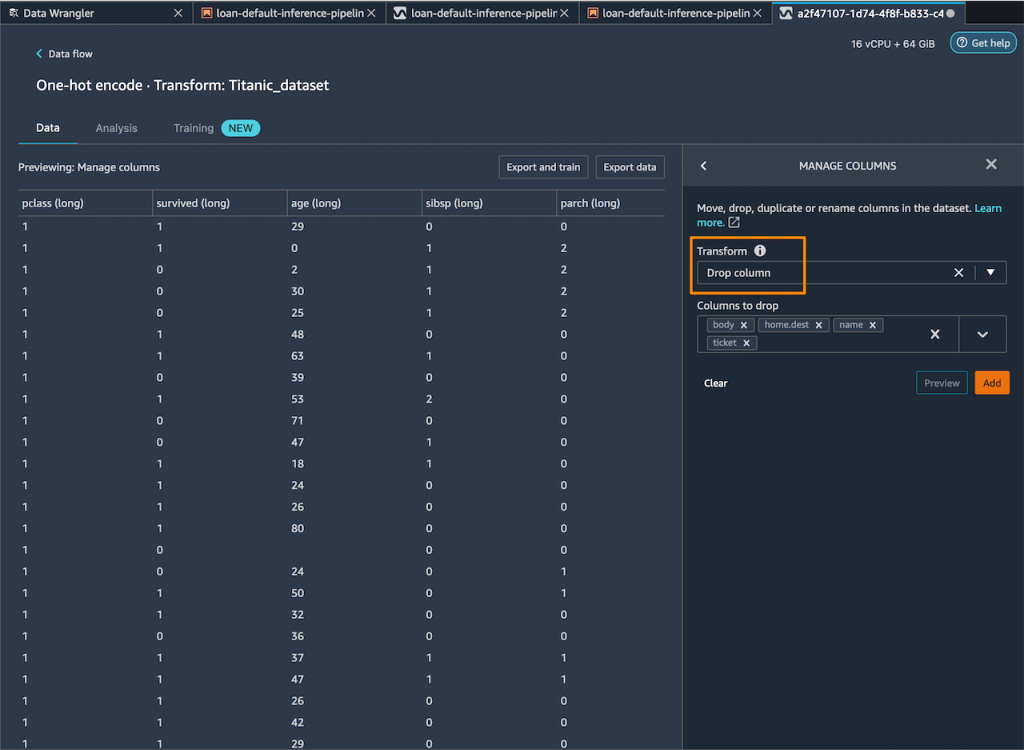

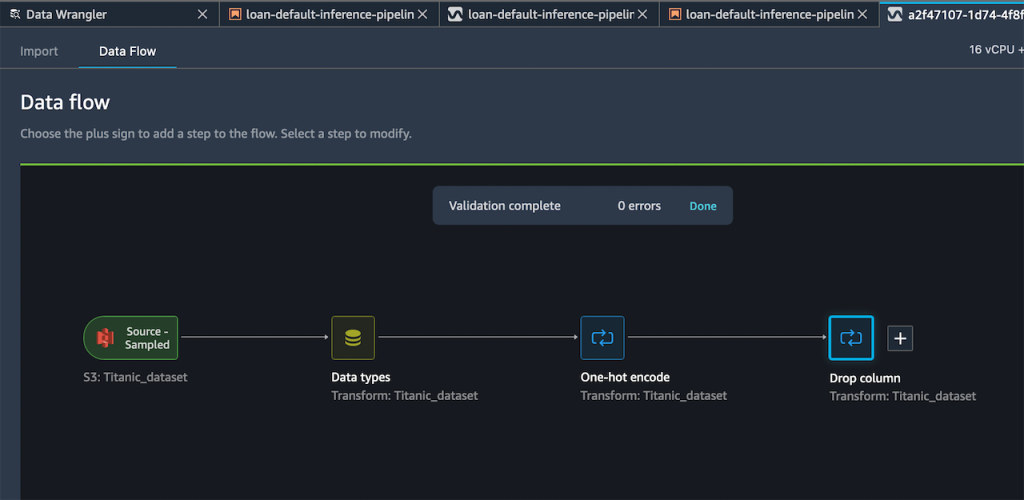

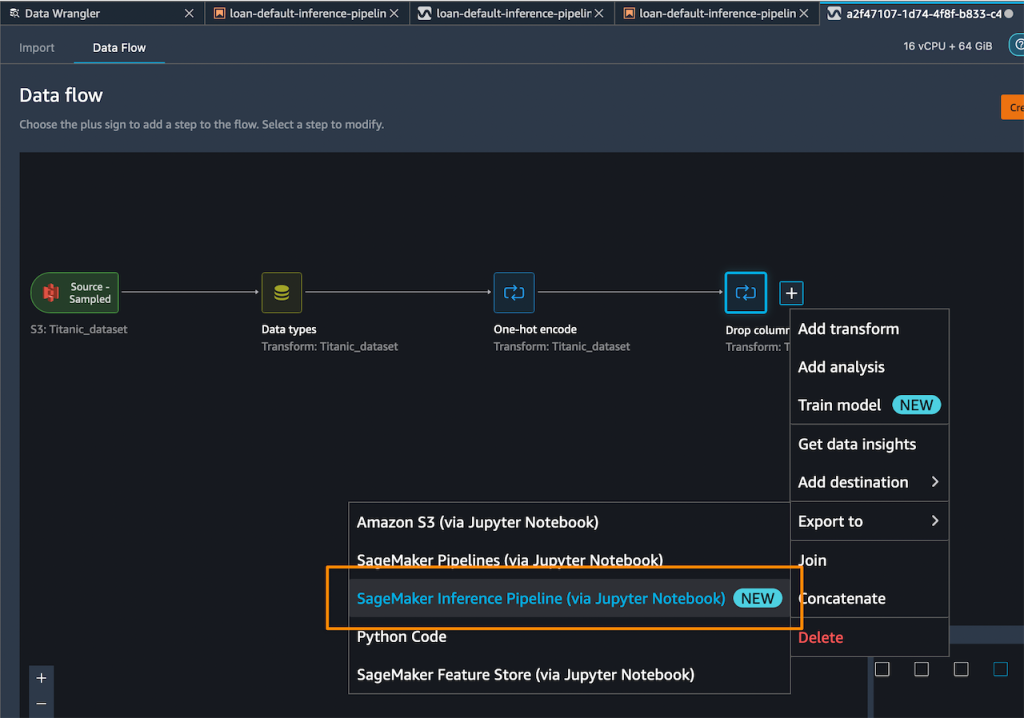

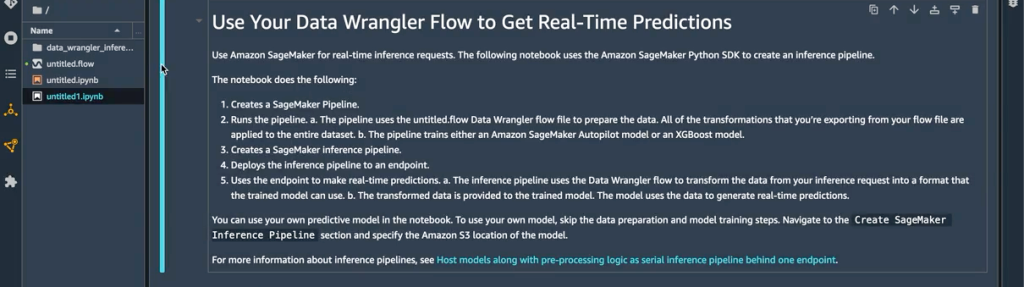

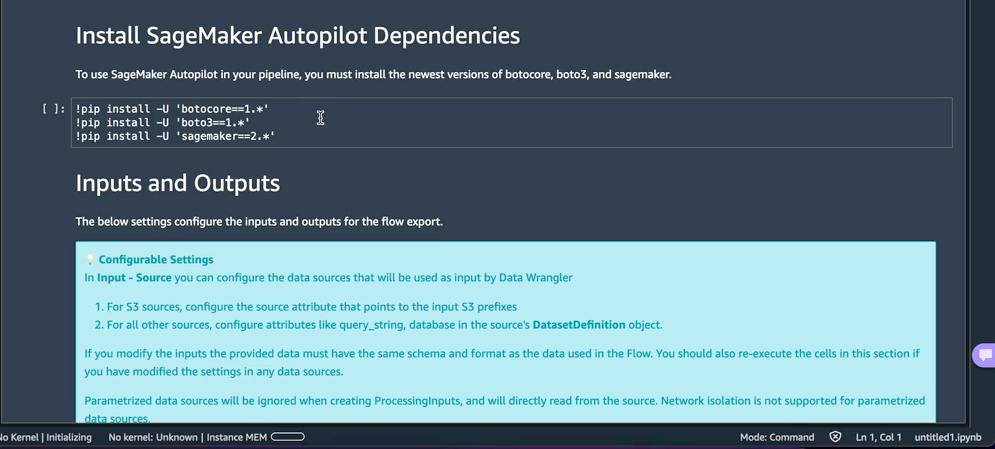

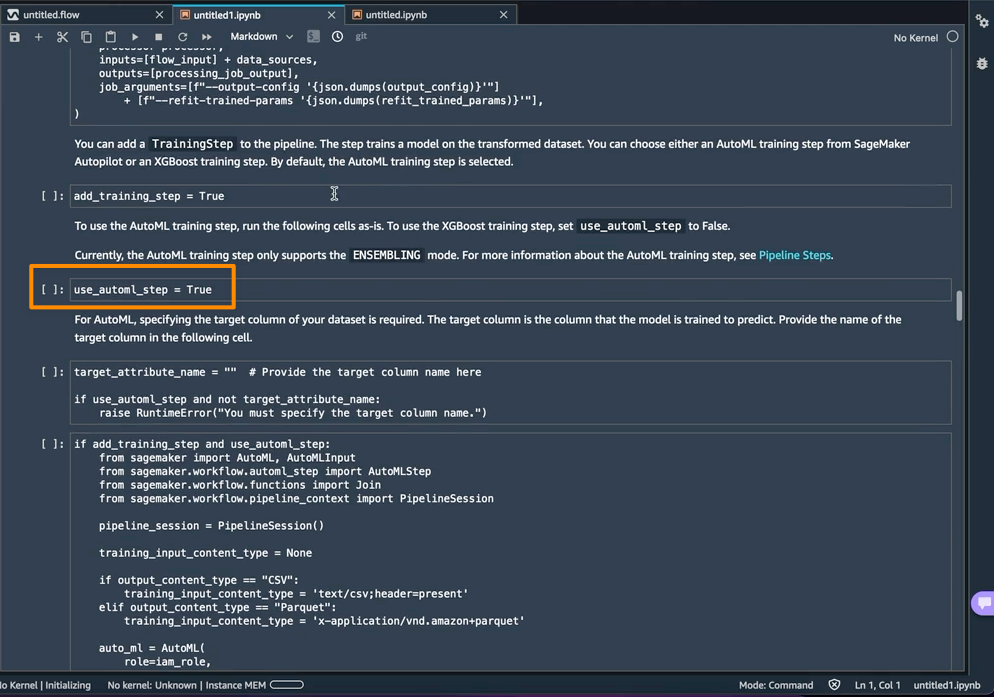

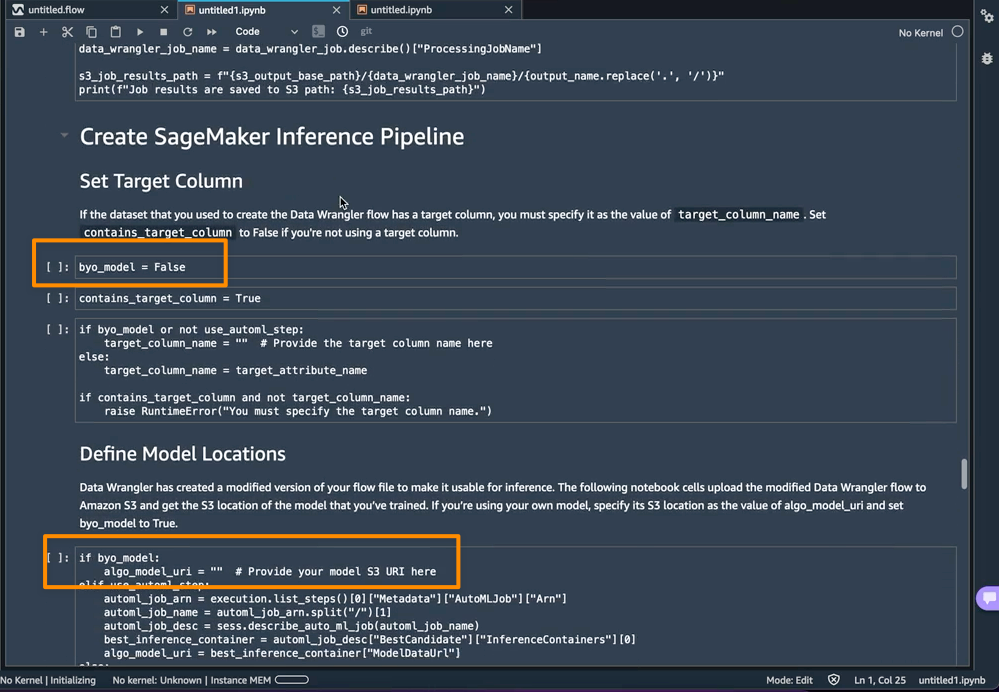

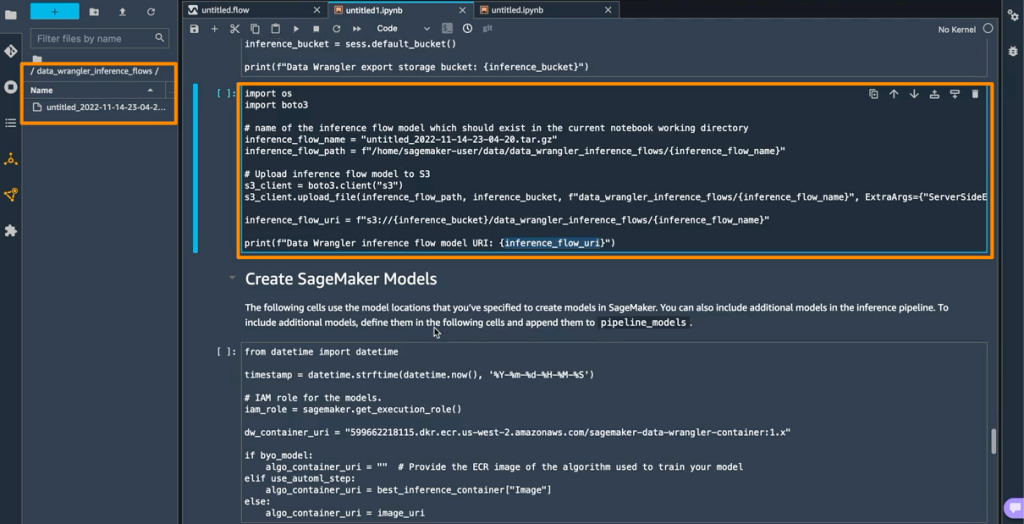

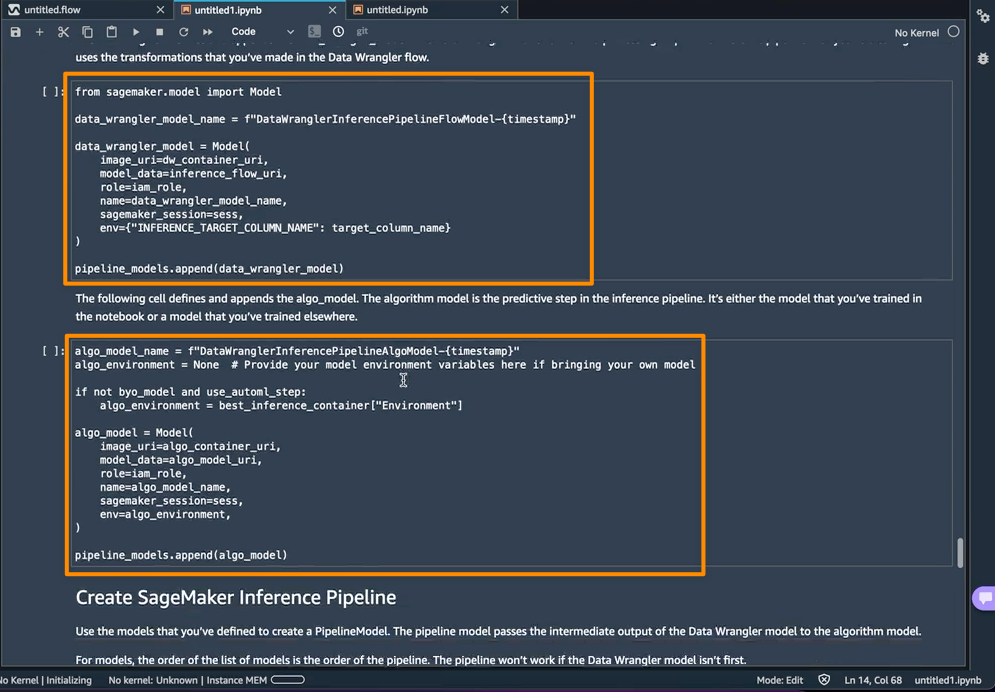

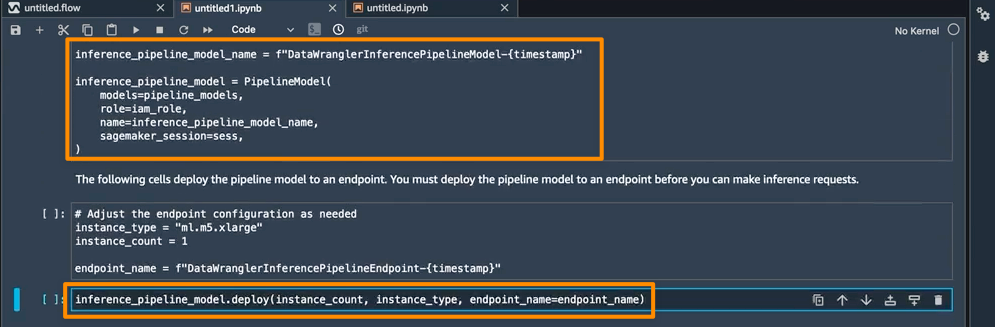

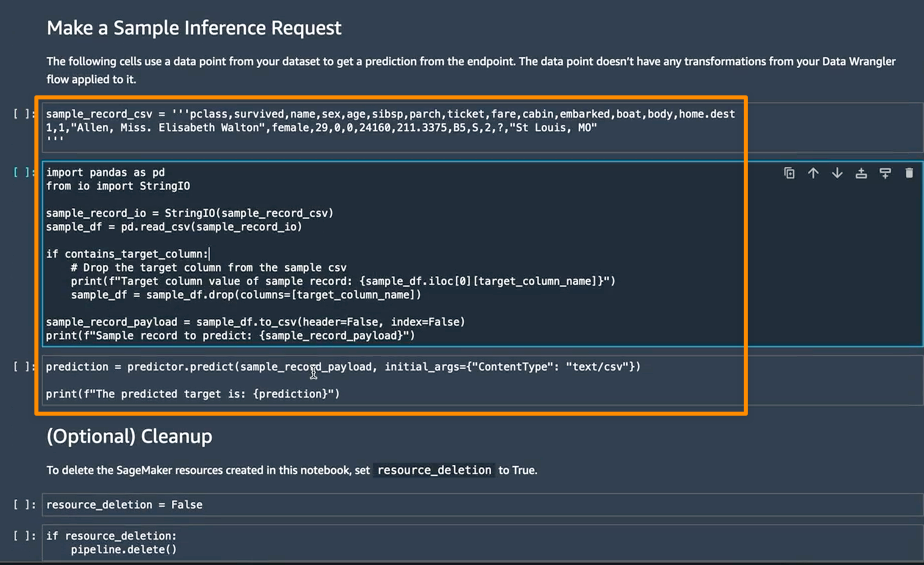

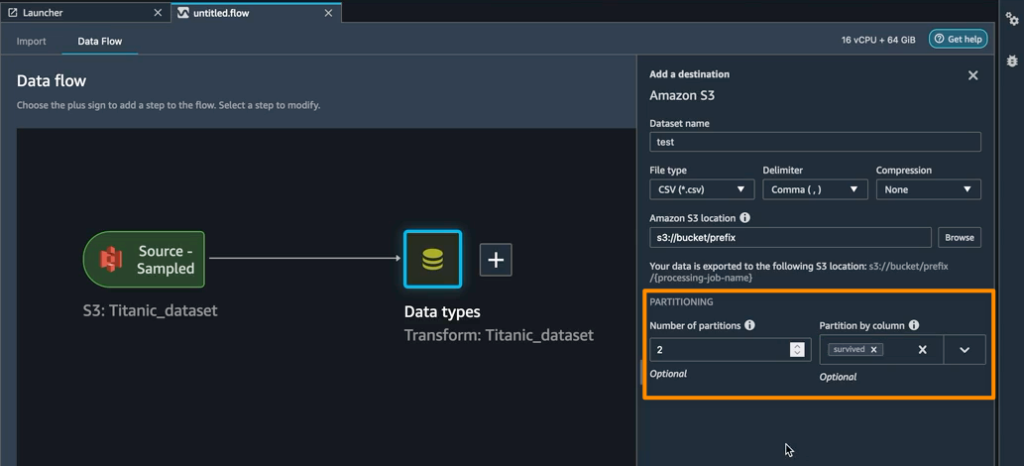

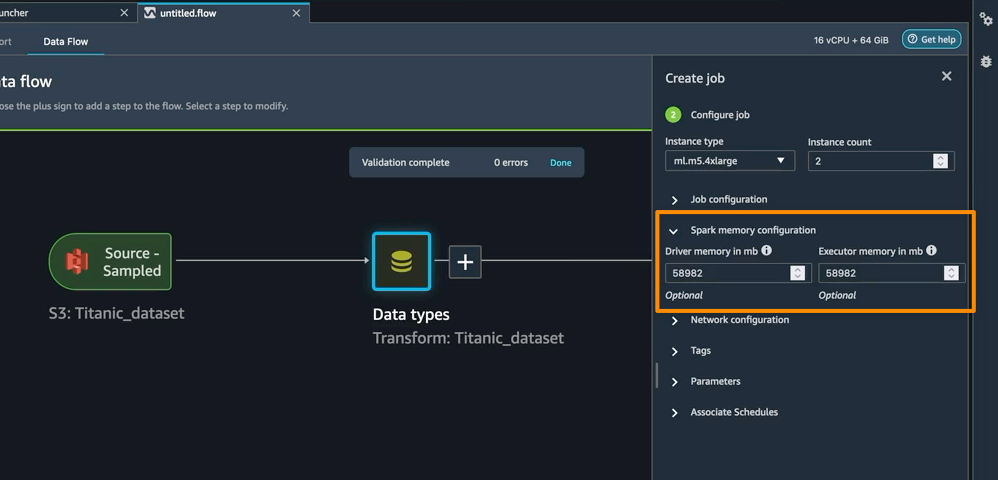

To build machine learning models, machine learning engineers need to develop a data transformation pipeline to prepare the data. Designing this pipeline is time-consuming, and putting it into a production environment requires cross-team collaboration between machine learning engineers, data engineers, and data scientists.

The main objective of [Amazon SageMaker Data Wrangler](https://aws.amazon.com/sagemaker/data-wrangler) is to make data preparation and data processing workloads easy. With SageMaker Data Wrangler, customers can carry out all of the necessary steps of a data preparation workflow in a single visual interface. SageMaker Data Wrangler reduces the time it takes to prototype data processing workloads and deploy them to production, so customers can easily integrate with MLOps production environments.

However, the transformations applied to the customer data for model training also need to be applied to new data during real-time inference. Without support for SageMaker Data Wrangler in a real-time inference endpoint, customers need to write code to replicate the transformations from their flow in a preprocessing script.

### Introducing Support for Real-Time and Batch Inference in Amazon SageMaker Data Wrangler

I’m pleased to share that you can now deploy data preparation flows from SageMaker Data Wrangler for real-time and batch inference. This feature allows you to reuse the data transformation flow that you created in SageMaker Data Wrangler as a step in Amazon SageMaker inference pipelines.

SageMaker Data Wrangler support for real-time and batch inference speeds up your production deployment because there is no need to reimplement the data transformation flow. You can now integrate SageMaker Data Wrangler with [SageMaker inference](https://docs.aws.amazon.com/sagemaker/latest/dg/deploy-model.html). The same data transformation flows created with the point-and-click interface of SageMaker Data Wrangler, containing operations such as [Principal Component Analysis](https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler-transform.html#data-wrangler-transform-dimensionality-reduction) and [one-hot encoding](https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler-transform.html#data-wrangler-transform-cat-encode), will be used to process your data during inference. This means that you don’t have to rebuild the data pipeline for a real-time or batch inference application, and you can get to production faster.

### Get Started with Real-Time and Batch Inference

Let’s see how to use the deployment support in SageMaker Data Wrangler. In this scenario, I have a flow inside SageMaker Data Wrangler. What I need to do is integrate this flow into real-time and batch inference using a SageMaker inference pipeline.

First, I will apply some transformations to the dataset to prepare it for training.

![image.png](https://dev-media.amazoncloud.cn/2c085a52571642b795a1550eed2815ac_image.png)

I add one-hot encoding on the categorical columns to create new features.

![image.png](https://dev-media.amazoncloud.cn/9d13a466ae674ab59be8ff5bfc143aac_image.png)

Then, I drop any remaining string columns that cannot be used during training.

![image.png](https://dev-media.amazoncloud.cn/9165fb0191f249d39bb028dcfcaf011d_image.png)

My resulting flow now has these two transform steps in it.

![image.png](https://dev-media.amazoncloud.cn/bb4377c996c445ae95661839a6ed811e_image.png)

After I’m satisfied with the steps I have added, I can expand the **Export to** menu, where I have the option to export to **SageMaker Inference Pipeline (via Jupyter Notebook)**.

![image.png](https://dev-media.amazoncloud.cn/3572aaadeb7249e2bf234a4cd1557b66_image.png)

I select **Export to SageMaker Inference Pipeline**, and SageMaker Data Wrangler prepares a fully customized Jupyter notebook to integrate the SageMaker Data Wrangler flow with inference. This generated Jupyter notebook performs a few important actions. First, it defines data processing and model training steps in a SageMaker pipeline. Next, it runs the pipeline to process my data with Data Wrangler and uses the processed data to train a model that will generate real-time predictions. Then, it deploys my Data Wrangler flow and trained model to a real-time endpoint as an inference pipeline. Last, it invokes my endpoint to make a prediction.

![image.png](https://dev-media.amazoncloud.cn/09fbcdf5be6a41129fdcd614999ea3c8_image.png)

This feature uses [Amazon SageMaker Autopilot](https://aws.amazon.com/sagemaker/), which makes it easy for me to build ML models. I just need to provide the transformed dataset, which is the output of the SageMaker Data Wrangler step, and select the target column to predict. Amazon SageMaker Autopilot handles the rest, exploring various solutions to find the best model.

![image.png](https://dev-media.amazoncloud.cn/a37cefa4a5fb4617a8f0a3c82bc94bf9_image.png)

Using AutoML from SageMaker Autopilot as a training step is enabled by default in the notebook through the `use_automl_step` variable. When using the AutoML step, I need to define the value of `target_attribute_name`, which is the column of my data I want to predict during inference. Alternatively, I can set `use_automl_step` to `False` if I want to use the XGBoost algorithm to train a model instead.

![image.png](https://dev-media.amazoncloud.cn/1e1b246816644dc79d775636bb2e5414_image.png)

On the other hand, if I would rather use a model I trained outside of this notebook, I can skip directly to the **Create SageMaker Inference Pipeline** section of the notebook. Here, I need to set the value of the `byo_model` variable to `True`. I also need to provide the value of `algo_model_uri`, which is the Amazon Simple Storage Service (Amazon S3) URI where my model is located. When training a model with the notebook, these values are auto-populated.

![image.png](https://dev-media.amazoncloud.cn/62372c6d48e345b2806fb27cd53b4ed0_image.png)

In addition, this feature saves a tarball inside the `data_wrangler_inference_flows` folder on my SageMaker Studio instance. This file is a modified version of the SageMaker Data Wrangler flow, containing the data transformation steps to be applied at inference time. The notebook uploads it to Amazon S3 so that it can be used to create a SageMaker Data Wrangler preprocessing step in the inference pipeline.

![image.png](https://dev-media.amazoncloud.cn/30d22519f2694e748b31db3c669159ee_image.png)

Next, the notebook creates two SageMaker model objects. The first is the SageMaker Data Wrangler model object, held in the variable `data_wrangler_model`, and the second is the model object for the algorithm, held in the variable `algo_model`. The `data_wrangler_model` object processes the incoming data and passes the result to `algo_model` as input for prediction.

![image.png](https://dev-media.amazoncloud.cn/36d02f36d12c48b9a388bc30357126ab_image.png)

The final step in this notebook is to create a SageMaker inference pipeline model and deploy it to an endpoint.

![image.png](https://dev-media.amazoncloud.cn/1ee11f61fc1b4aec80dfe330275f182c_image.png)

Once the deployment is complete, I get an inference endpoint that I can use for prediction. With this feature, the inference pipeline uses the SageMaker Data Wrangler flow to transform the data from my inference request into a format that the trained model can use.

In the next section, I can run individual notebook cells under **Make a Sample Inference Request**. This is helpful for a quick check that the endpoint is working, because it invokes the endpoint with a single data point from my unprocessed data. Data Wrangler automatically places this data point into the notebook, so I don’t have to provide one manually.

![image.png](https://dev-media.amazoncloud.cn/630aac67454e4b66a80bb0f107c2372f_image.png)

### Things to Know

**Enhanced Apache Spark configuration**: In this release of SageMaker Data Wrangler, you can now easily configure how Apache Spark partitions the output of your SageMaker Data Wrangler jobs when saving data to Amazon S3. When adding a destination node, you can set the number of partitions, which corresponds to the number of files that will be written to Amazon S3. You can also specify column names to partition by, so that records with different values in those columns are written to different subdirectories in Amazon S3. You can define the same configuration in the provided notebook.

![image.png](https://dev-media.amazoncloud.cn/1542e66bddb040b8b24b22bc57479e4e_image.png)

You can also define memory configurations for SageMaker Data Wrangler processing jobs as part of the **Create job** workflow. You will find a similar configuration in your notebook.

![image.png](https://dev-media.amazoncloud.cn/260e23dcef174ab6a71b61e59c4e36bb_image.png)

**Availability**: SageMaker Data Wrangler support for real-time and batch inference, as well as the enhanced Apache Spark configuration for data processing workloads, is generally available in all AWS Regions that Data Wrangler currently supports.

To get started with [Amazon SageMaker Data Wrangler](https://aws.amazon.com/sagemaker/data-wrangler) support for real-time and batch inference deployment, visit the [AWS documentation](https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler-data-export.html).

Happy building!
[Donnie](https://donnie.id/)

![89d6442457c51c067e7be46d60afd82.png](https://dev-media.amazoncloud.cn/1e46fee2355f4b9996082d676ef43892_89d6442457c51c067e7be46d60afd82.png)

### Donnie Prakoso

Donnie Prakoso is a software engineer, self-proclaimed barista, and Principal Developer Advocate at AWS. He has more than 17 years of experience in the technology industry, spanning telecommunications, banking, and startups. He now focuses on helping developers understand a variety of technologies so they can turn their ideas into execution. He loves coffee and discussing any topic from microservices to AI/ML.
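
To make the idea behind the inference pipeline more concrete, here is a minimal, self-contained Python sketch of the walkthrough's two transform steps (one-hot encoding, then dropping the remaining string columns) being reused unchanged in front of a model at inference time. It is only a conceptual illustration, not the code that Data Wrangler generates and not the SageMaker API. Every name in it (`fit_one_hot`, `drop_string_columns`, `build_flow`, `toy_model`, `inference_pipeline`) and the sample data are hypothetical.

```python
from typing import Callable, Dict, List, Union

Value = Union[str, int, float]
Record = Dict[str, Value]
Transform = Callable[[Record], Record]


def fit_one_hot(rows: List[Record], column: str) -> Transform:
    """Learn the categories of `column` from training rows and return an encoder."""
    categories = sorted({str(row[column]) for row in rows})

    def encode(row: Record) -> Record:
        # Copy every column except the categorical one, then add indicator columns.
        out: Record = {k: v for k, v in row.items() if k != column}
        for category in categories:
            out[f"{column}_{category}"] = 1 if str(row.get(column)) == category else 0
        return out

    return encode


def drop_string_columns(row: Record) -> Record:
    """Drop any remaining string-valued columns that a numeric model cannot use."""
    return {k: v for k, v in row.items() if not isinstance(v, str)}


def build_flow(training_rows: List[Record], categorical_column: str) -> Transform:
    """Hypothetical stand-in for an exported flow: encode, then drop strings."""
    encode = fit_one_hot(training_rows, categorical_column)

    def flow(row: Record) -> Record:
        return drop_string_columns(encode(row))

    return flow


def toy_model(features: Record) -> float:
    """Hypothetical stand-in for a trained model: sums the numeric features."""
    return float(sum(features.values()))


def inference_pipeline(flow: Transform, model: Callable[[Record], float]):
    """Chain the flow and the model so raw requests are transformed before prediction."""

    def predict(raw_request: Record) -> float:
        return model(flow(raw_request))

    return predict


# Assumed sample data, for illustration only.
training_rows: List[Record] = [
    {"plan": "basic", "note": "call back", "usage": 3.0},
    {"plan": "pro", "note": "n/a", "usage": 7.5},
]

flow = build_flow(training_rows, "plan")
predict = inference_pipeline(flow, toy_model)

raw_request: Record = {"plan": "pro", "note": "new customer", "usage": 2.0}
transformed = flow(raw_request)
prediction = predict(raw_request)

print(transformed)
print(prediction)
```

The point of the sketch is that the caller sends the raw, unprocessed record and the flow fitted at training time does the preparation, which mirrors how the deployed endpoint accepts unprocessed data in the walkthrough above.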

New — Introducing Support for Real-Time and Batch Inference in Amazon SageMaker Data Wrangler
