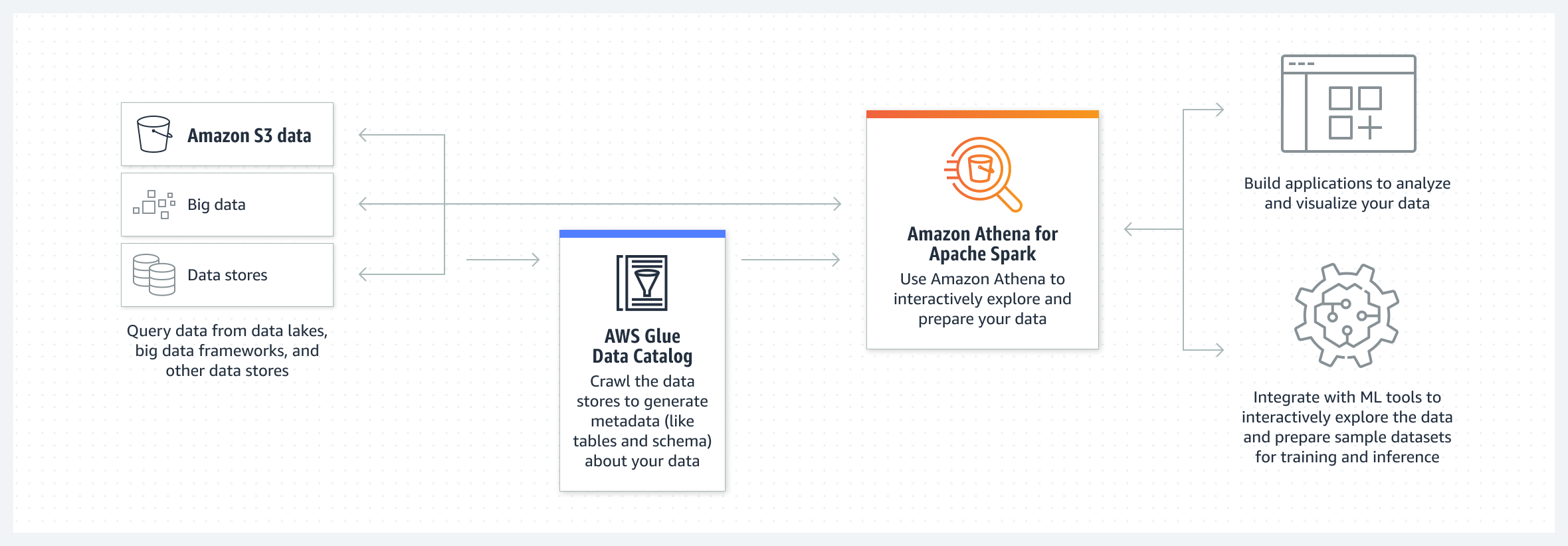

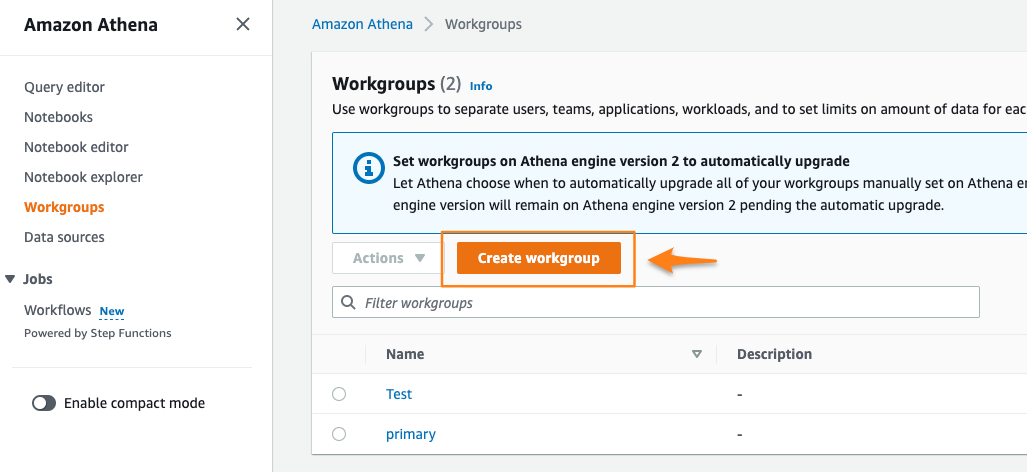

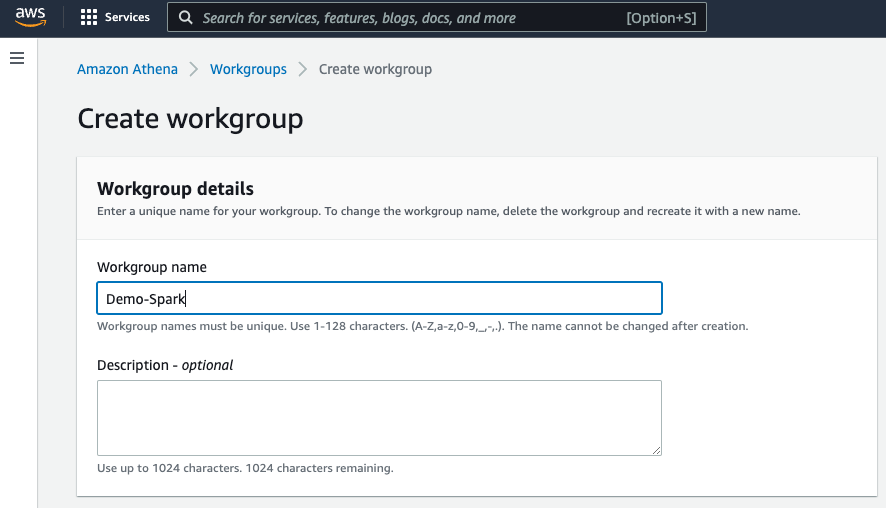

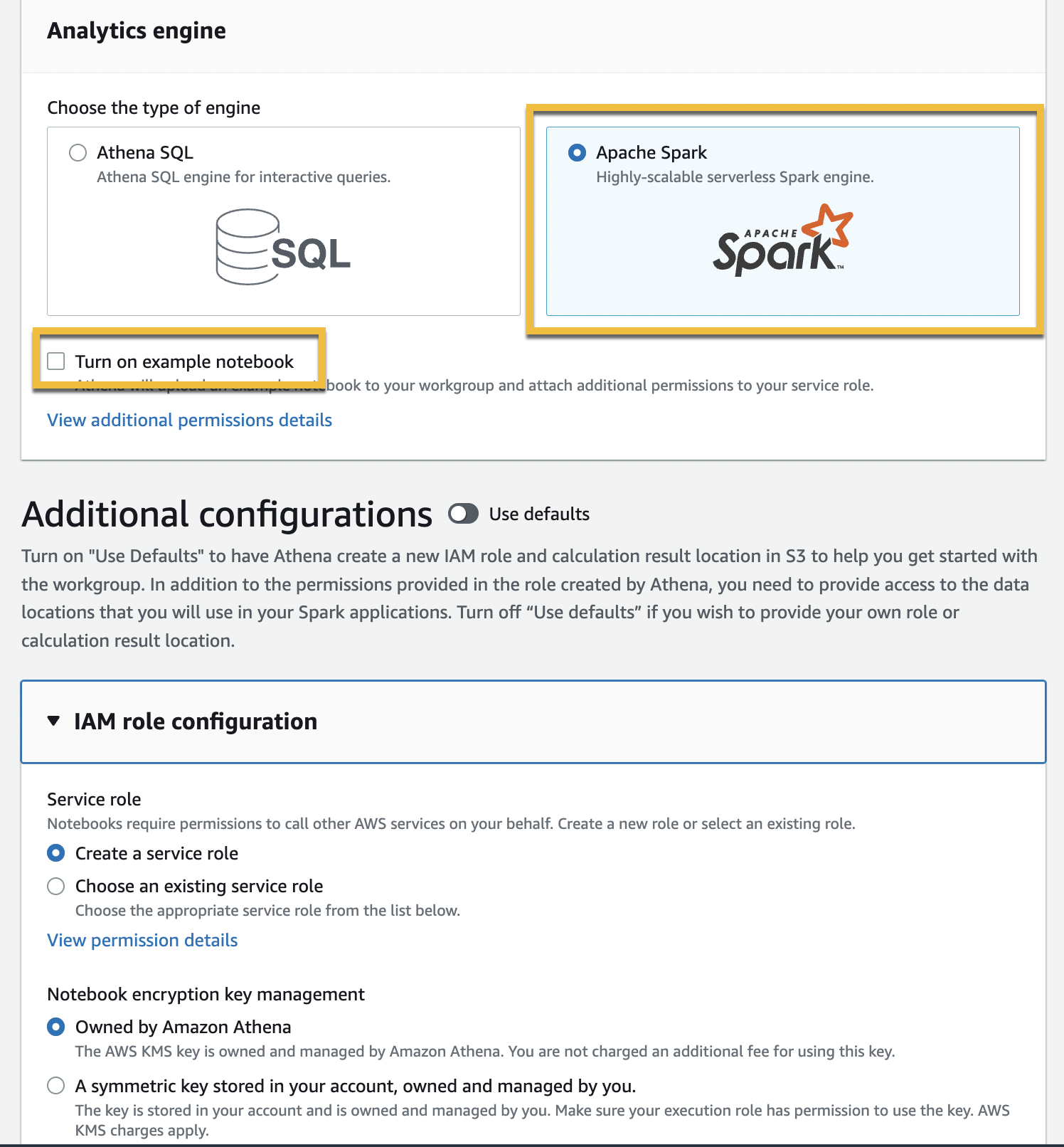

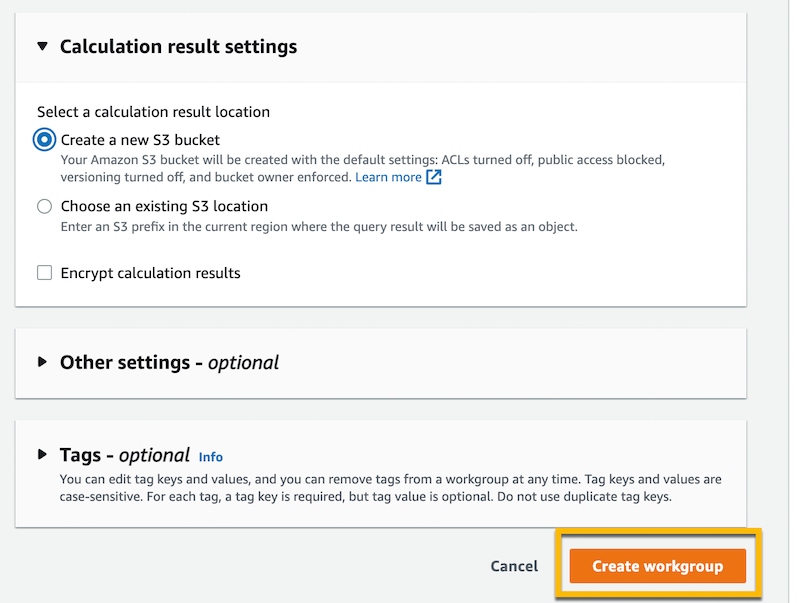

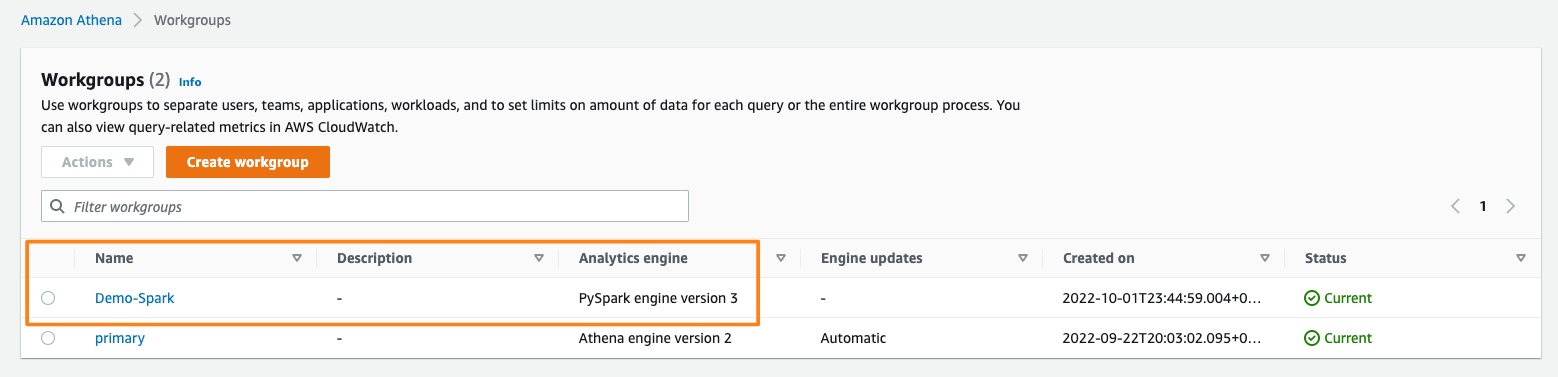

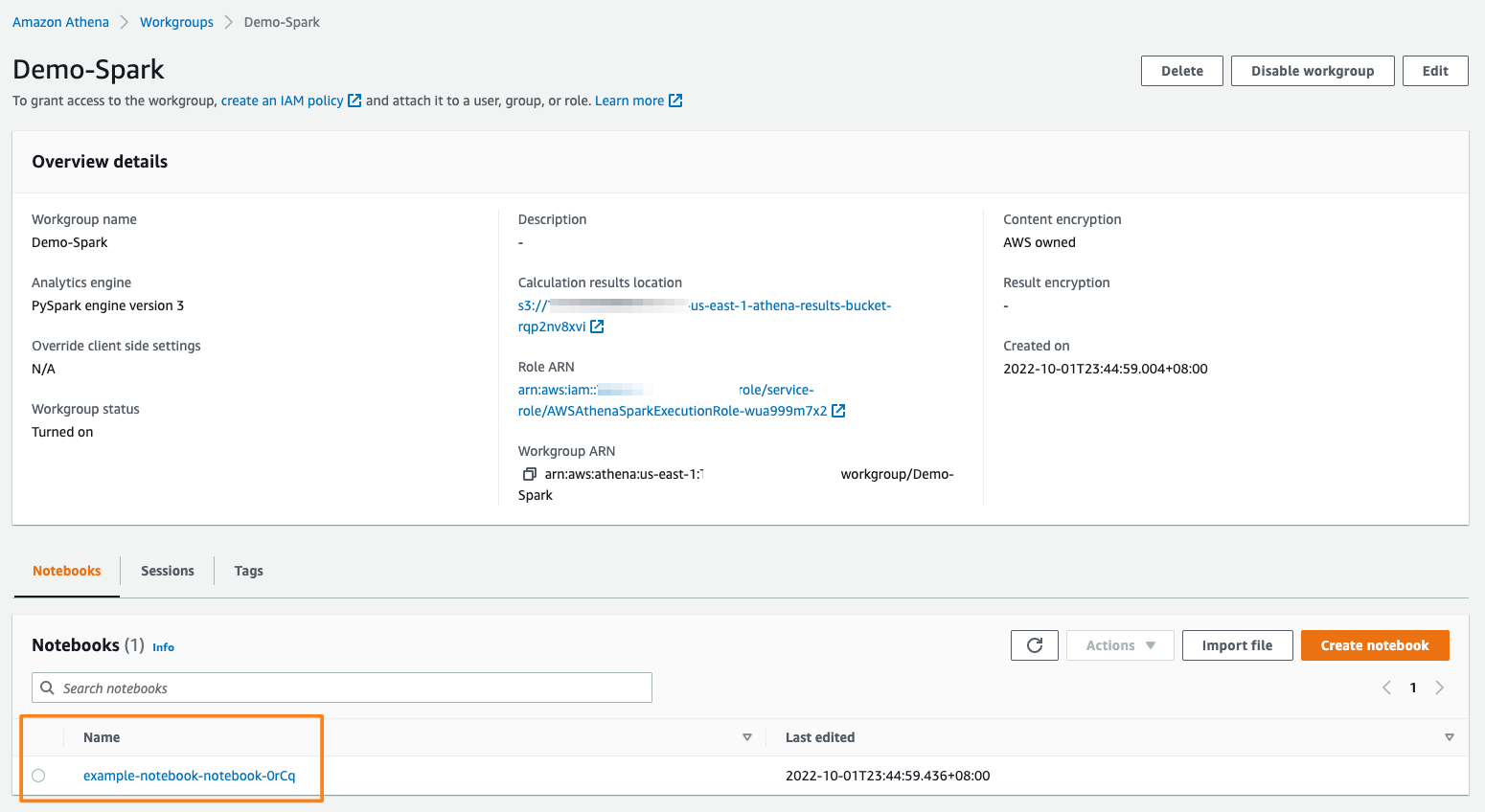

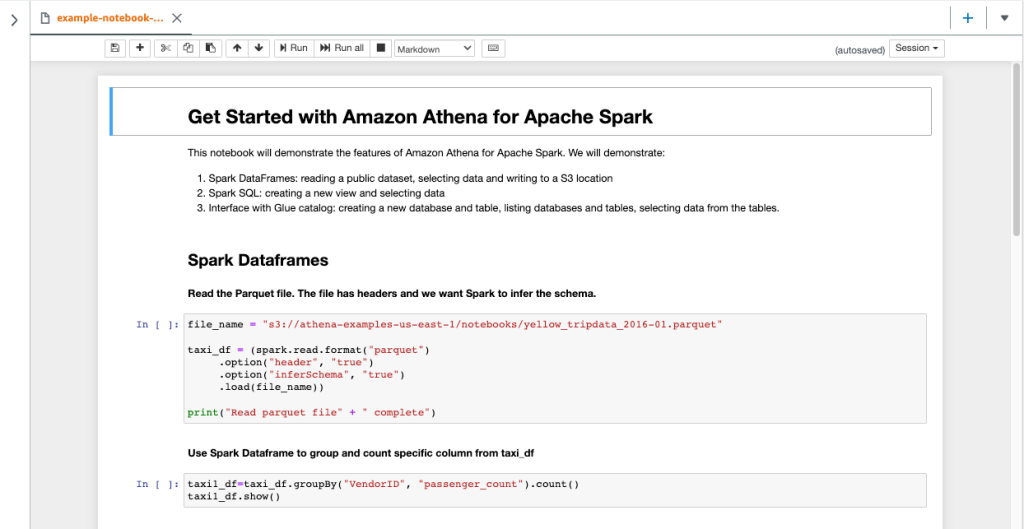

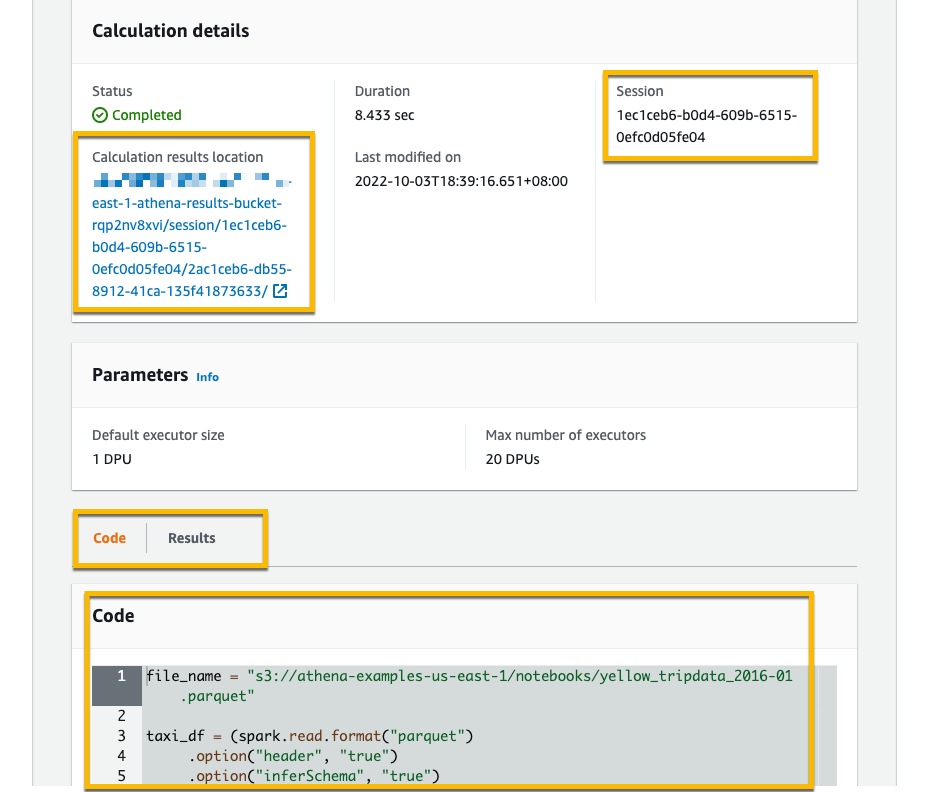

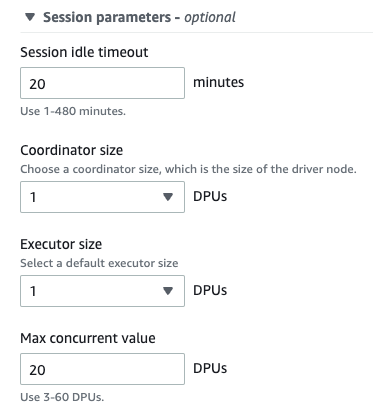

When Jeff Barr first [announced Amazon Athena](https://aws.amazon.com/blogs/aws/amazon-athena-interactive-sql-queries-for-data-in-amazon-s3/) in 2016, it changed my perspective on interacting with data. With [Amazon Athena](https://aws.amazon.com/athena/?trk=229b0019-2a6b-4963-bd60-ee9bbf8c2dcf&sc_channel=el), I can work with my data in just a few steps: creating a table in Athena, loading data using connectors, and querying it with standard ANSI SQL.

Over time, industries such as financial services, healthcare, and retail have needed to run more complex analyses on data of many formats and sizes. To support these analyses, organizations adopted Apache Spark. [Apache Spark](https://aws.amazon.com/big-data/what-is-spark/) is a popular open-source, distributed processing system designed to run fast analytics workloads on data of any size.

However, building the infrastructure to run Apache Spark for interactive applications is not easy. Customers need to provision, configure, and maintain the underlying infrastructure in addition to the applications themselves. They also need to tune resources carefully to avoid slow application startup and paying for idle capacity.

### Introducing Amazon Athena for Apache Spark

Today, I’m pleased to announce **[Amazon Athena for Apache Spark](https://aws.amazon.com/athena/spark/?trk=3da7be85-174e-405a-864e-c34e90bd9d14&sc_channel=el)**. With this feature, we can run Apache Spark workloads, use Jupyter notebooks as the interface for data processing in Athena, and interact with Spark applications programmatically using Athena APIs.
We can start Apache Spark in under a second without having to provision infrastructure manually.

Here’s a quick preview:

![Preview of Amazon Athena for Apache Spark](https://dev-media.amazoncloud.cn/1c8142f8a8a24b07935bb34ea78039c3_Kepler-0.gif)

### How It Works

Because Amazon Athena for Apache Spark is serverless, customers can explore data interactively and gain insights without provisioning and maintaining the resources to run Apache Spark. Customers can build Apache Spark applications using the notebook experience directly in the Athena console, or programmatically using APIs.

The following figure explains how this feature works:

![How Amazon Athena for Apache Spark works](https://dev-media.amazoncloud.cn/17b8008afc8645c1bb80a4cf9d773199_image.png)

In the Athena console, you can now write Spark applications in Python and run them in Jupyter notebooks. From a notebook, customers can query data from various sources, run multiple calculations, and create data visualizations without switching context.

Amazon Athena integrates with the [AWS Glue Data Catalog](https://docs.aws.amazon.com/glue/latest/dg/catalog-and-crawler.html), so customers can work with any data source registered in the catalog, including data in Amazon S3. This makes it possible to build applications that explore, analyze, and visualize data, for example to prepare datasets for machine learning pipelines.

As shown in the preview, a workgroup running the Apache Spark engine initializes resources for interactive workloads in under a second. To make this possible, Amazon Athena for Apache Spark uses [Firecracker](https://aws.amazon.com/blogs/aws/firecracker-lightweight-virtualization-for-serverless-computing/), a lightweight virtualization technology that runs micro virtual machines (microVMs). Firecracker enables near-instant startup and removes the need to maintain warm pools of resources.
For customers, this means interactive data exploration can begin right away, without preparing resources to run Apache Spark.

### Get Started with Amazon Athena for Apache Spark

Let’s see how to use Amazon Athena for Apache Spark. I’ll walk through getting started with this feature step by step.

The first step is to create a [workgroup](https://docs.aws.amazon.com/athena/latest/ug/user-created-workgroups.html). In Athena, workgroups let us separate workloads between users and applications.

To create a workgroup, I select **Create workgroup** from the Athena dashboard.

![Create workgroup in the Athena dashboard](https://dev-media.amazoncloud.cn/bec4a8c49c914dfebe36e065e8d82717_image.png)

On the next page, I enter a name and description for the workgroup.

![Workgroup name and description](https://dev-media.amazoncloud.cn/002c2da97d1c405c92c62cda86f6c0bf_image.png)

On the same page, I choose Apache Spark as the engine for Athena. I also need to specify a **service role** with the permissions that code in the Jupyter notebook will use. Then, I select **Turn on example notebook**, which makes it easy to get started with Apache Spark in Athena. I can also choose to encrypt the notebooks with a key managed by Athena or with a key I have configured in [AWS Key Management Service (AWS KMS)](https://aws.amazon.com/kms/).

![Engine, service role, and encryption settings](https://dev-media.amazoncloud.cn/252948cba294466280293266e3995cd1_image.png)

Next, I specify an [Amazon Simple Storage Service (Amazon S3)](https://aws.amazon.com/s3/) bucket to store calculation results from the notebook. After confirming the configuration, I select **Create workgroup**.

![S3 location for calculation results](https://dev-media.amazoncloud.cn/d3664a9a45c64af3813e9990137af63a_image.png)

The new workgroup now appears in Athena.

![The newly created workgroup](https://dev-media.amazoncloud.cn/45e85967c4654811aafe457f75a15698_image.png)

To see the workgroup’s details, I select its name. Because I selected **Turn on example notebook** when creating the workgroup, an example Jupyter notebook is already available to help me get started.

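The console settings chosen above (Spark engine, service role, S3 location for results, optional KMS key) correspond to fields of the Athena `CreateWorkGroup` API. As a minimal sketch, assuming the AWS SDK for Python (boto3) and assuming that `PySpark engine version 3` is the engine version string that selects Spark (check the Athena API reference before relying on it), a hypothetical helper could assemble the request like this. The workgroup name, role ARN, and bucket below are made-up placeholders, and the sketch does not cover the **Turn on example notebook** option.

```python
from typing import Any, Dict, Optional


def spark_workgroup_request(
    name: str,
    description: str,
    execution_role_arn: str,
    results_s3_uri: str,
    kms_key_arn: Optional[str] = None,
) -> Dict[str, Any]:
    """Build keyword arguments for boto3's Athena create_work_group call.

    Hypothetical helper. Assumption: "PySpark engine version 3" selects Spark.
    """
    if not results_s3_uri.startswith("s3://"):
        raise ValueError("results_s3_uri must be an s3:// URI")

    configuration: Dict[str, Any] = {
        # Choose Apache Spark instead of the SQL engine (assumed value).
        "EngineVersion": {"SelectedEngineVersion": "PySpark engine version 3"},
        # Service role that calculations in the notebook run with.
        "ExecutionRole": execution_role_arn,
        # S3 location where calculation results are written.
        "ResultConfiguration": {"OutputLocation": results_s3_uri},
    }
    if kms_key_arn is not None:
        # Optional: encrypt notebook content with your own AWS KMS key.
        configuration["CustomerContentEncryptionConfiguration"] = {"KmsKey": kms_key_arn}

    return {"Name": name, "Description": description, "Configuration": configuration}


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    request = spark_workgroup_request(
        name="spark-demo",
        description="Workgroup for Athena for Apache Spark",
        execution_role_arn="arn:aws:iam::111122223333:role/ExampleAthenaSparkRole",
        results_s3_uri="s3://example-bucket/athena-spark-results/",
    )
    print(request)
    # With AWS credentials configured, the request could be sent with:
    #   import boto3
    #   boto3.client("athena").create_work_group(**request)
```

Keeping the request as a plain dictionary makes it easy to review or store alongside other infrastructure settings before anything is sent to AWS.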

Besides the example notebook, I can upload existing notebooks from my laptop with **Import file**, or create a new notebook from scratch by selecting **Create notebook**.

![Notebooks in the workgroup](https://dev-media.amazoncloud.cn/eafefa1582364ff0a9c59d59a2314261_image.png)

When I open the example notebook, I can start building my Apache Spark application.

![Example notebook](https://dev-media.amazoncloud.cn/021ddc2ef9004c12a49355b17e1cfe9e_image.png)

Running a notebook automatically creates a session in the workgroup. Each calculation I then run in the notebook is recorded in that session, so I can review any calculation by selecting its **Calculation ID**, which takes me to the **Calculation details** page. There, I can review the **Code** and **Results** of the calculation.

![Calculation details](https://dev-media.amazoncloud.cn/fbef830606dd40c7aaf32cb1c1c3f44d_image.png)

For the session, I can adjust the **Coordinator size** and **Executor size**, which default to 1 data processing unit (DPU). One DPU consists of 4 vCPUs and 16 GB of RAM. Choosing a larger size lets me process complex calculations faster.

![Coordinator and executor size settings](https://dev-media.amazoncloud.cn/067500a12be94f5e8d18a25aead091af_image.png)

### Programmatic API Access

In addition to the Athena console, I can interact with Spark applications in Athena programmatically, using the AWS CLI or an AWS SDK.

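As a minimal sketch of the SDK route, assuming the AWS SDK for Python (boto3), whose Athena client provides `start_session`, `get_session_status`, `start_calculation_execution`, and `get_calculation_execution`, the hypothetical helpers below start a session with the DPU settings used in this post, wait until it is idle, then submit a code block and poll until the calculation finishes. Unlike the CLI example, this session is not attached to a notebook. The helper names, polling interval, timeout, and the state names (taken as assumptions from the Athena API reference) should be verified before use.

```python
import time
from typing import Any, Callable, Dict

# Assumed state names from the Athena API reference.
SESSION_READY = "IDLE"
SESSION_DEAD = {"FAILED", "TERMINATED", "DEGRADED"}
CALCULATION_DONE = {"COMPLETED", "FAILED", "CANCELED"}


def wait_for(
    poll: Callable[[], Dict[str, Any]],
    done: Callable[[str], bool],
    interval: float = 2.0,
    timeout: float = 300.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[str, Any]:
    """Call poll() until done(response["Status"]["State"]) is true, or time out."""
    waited = 0.0
    while True:
        response = poll()
        state = response["Status"]["State"]
        if done(state):
            return response
        if waited >= timeout:
            raise TimeoutError(f"still {state} after {waited:.0f}s")
        sleep(interval)
        waited += interval


def start_idle_session(client: Any, workgroup: str, **wait_kw: Any) -> str:
    """Start a session with the DPU settings from the CLI example; wait until idle."""
    session_id = client.start_session(
        WorkGroup=workgroup,
        Description="Starting session from SDK",
        EngineConfiguration={
            "CoordinatorDpuSize": 1,
            "MaxConcurrentDpus": 20,
            "DefaultExecutorDpuSize": 1,
        },
    )["SessionId"]
    final = wait_for(
        lambda: client.get_session_status(SessionId=session_id),
        lambda state: state == SESSION_READY or state in SESSION_DEAD,
        **wait_kw,
    )
    if final["Status"]["State"] != SESSION_READY:
        raise RuntimeError(f"session {session_id} is {final['Status']['State']}")
    return session_id


def run_code(client: Any, session_id: str, code: str, **wait_kw: Any) -> Dict[str, Any]:
    """Submit a code block and return the final get_calculation_execution response."""
    calc_id = client.start_calculation_execution(
        SessionId=session_id, Description="Demo", CodeBlock=code
    )["CalculationExecutionId"]
    return wait_for(
        lambda: client.get_calculation_execution(CalculationExecutionId=calc_id),
        lambda state: state in CALCULATION_DONE,
        **wait_kw,
    )
```

With AWS credentials configured, `client = boto3.client("athena")` could be passed to `start_idle_session(client, "<WORKGROUP_NAME>")` and then to `run_code(client, session_id, "print(5+6)")`. Passing the client and `sleep` in as parameters keeps the helpers testable without AWS access.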

With the AWS CLI, for example, I can create a workgroup with the `create-work-group` command, create a notebook with `create-notebook`, and start a notebook session with `start-session`.

Programmatic access is useful for tasks such as building reports or computing data without opening the Jupyter notebook.

Using the notebook I created earlier, I can start a session with the following AWS CLI command:

```
$ aws athena start-session \
    --work-group <WORKGROUP_NAME> \
    --engine-configuration '{"CoordinatorDpuSize": 1, "MaxConcurrentDpus": 20, "DefaultExecutorDpuSize": 1, "AdditionalConfigs": {"NotebookId": "<NOTEBOOK_ID>"}}' \
    --notebook-version "Jupyter 1" \
    --description "Starting session from CLI"

{
    "SessionId": "<SESSION_ID>",
    "State": "CREATED"
}
```

Then, I can run a calculation with the `start-calculation-execution` API.

```
$ aws athena start-calculation-execution \
    --session-id "<SESSION_ID>" \
    --description "Demo" \
    --code-block "print(5+6)"

{
    "CalculationExecutionId": "<CALCULATION_EXECUTION_ID>",
    "State": "CREATING"
}
```

Instead of passing code inline, I can also load the `--code-block` value from a Python file by using the AWS CLI `file://` prefix:

```
$ aws athena start-calculation-execution \
    --session-id "<SESSION_ID>" \
    --description "Demo" \
    --code-block file://<PYTHON_FILE>

{
    "CalculationExecutionId": "<CALCULATION_EXECUTION_ID>",
    "State": "CREATING"
}
```

### Pricing and Availability

Amazon Athena for Apache Spark is available today in the following AWS Regions: US East (Ohio), US East (N. Virginia), US West (Oregon), Asia Pacific (Tokyo), and Europe (Ireland). You are charged for the compute you use, measured in data processing units (DPUs) per hour.

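Because usage is measured in DPUs per hour, a rough estimate of consumption is the number of DPUs in use multiplied by the time they run. The sketch below applies this post's definition of a DPU (4 vCPUs and 16 GB of RAM) and the 20-DPU cap from the CLI example. It assumes usage is the plain product of DPUs and time and ignores any minimum billing duration or rounding, so treat the result as an approximation; the usage intervals are hypothetical.

```python
from typing import Iterable, Tuple

# From the post: one DPU provides 4 vCPUs and 16 GB of RAM.
VCPUS_PER_DPU = 4
RAM_GB_PER_DPU = 16


def dpu_capacity(dpus: int) -> Tuple[int, int]:
    """Return (vCPUs, RAM in GB) for a number of DPUs."""
    return dpus * VCPUS_PER_DPU, dpus * RAM_GB_PER_DPU


def dpu_hours(usage: Iterable[Tuple[float, float]]) -> float:
    """Sum DPU-hours over (dpus_in_use, minutes) intervals.

    Assumption: usage is simply DPUs x time; minimums and rounding are ignored.
    """
    return sum(dpus * minutes / 60.0 for dpus, minutes in usage)


if __name__ == "__main__":
    # The CLI example caps the session at MaxConcurrentDpus = 20.
    print(dpu_capacity(20))  # (80, 320)
    # Hypothetical usage: 20 DPUs busy for 30 minutes, then 5 DPUs for an hour.
    print(dpu_hours([(20, 30), (5, 60)]))  # 15.0
```

Multiplying the DPU-hour total by the per-DPU-hour rate for your Region gives a ballpark cost figure.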

For more information, see our [pricing page](https://aws.amazon.com/athena/pricing/).

To get started with this feature, see [Amazon Athena for Apache Spark](https://aws.amazon.com/athena/spark/?trk=3da7be85-174e-405a-864e-c34e90bd9d14&sc_channel=el) to read the documentation, review pricing, and follow the step-by-step walkthrough.

Happy building,

[Donnie](https://twitter.com/donnieprakoso)

![Donnie Prakoso](https://dev-media.amazoncloud.cn/8642d8e2f93c4c618543534316dc77a7_image.png)

### Donnie Prakoso

Donnie Prakoso is a software engineer, self-proclaimed barista, and Principal Developer Advocate at AWS. He has more than 17 years of experience in the technology industry, spanning telecommunications, banking, and startups. He now focuses on helping developers understand a variety of technologies so they can turn their ideas into execution. He loves coffee and discussions on any topic, from microservices to AI/ML.

New — Amazon Athena for Apache Spark


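The Overseas Picks section brings together high-quality technical content related to Amazon Web Services from around the world. “AWS” as mentioned in this content is an abbreviation of “Amazon Web Services” and is not displayed as a trademark on this website.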
