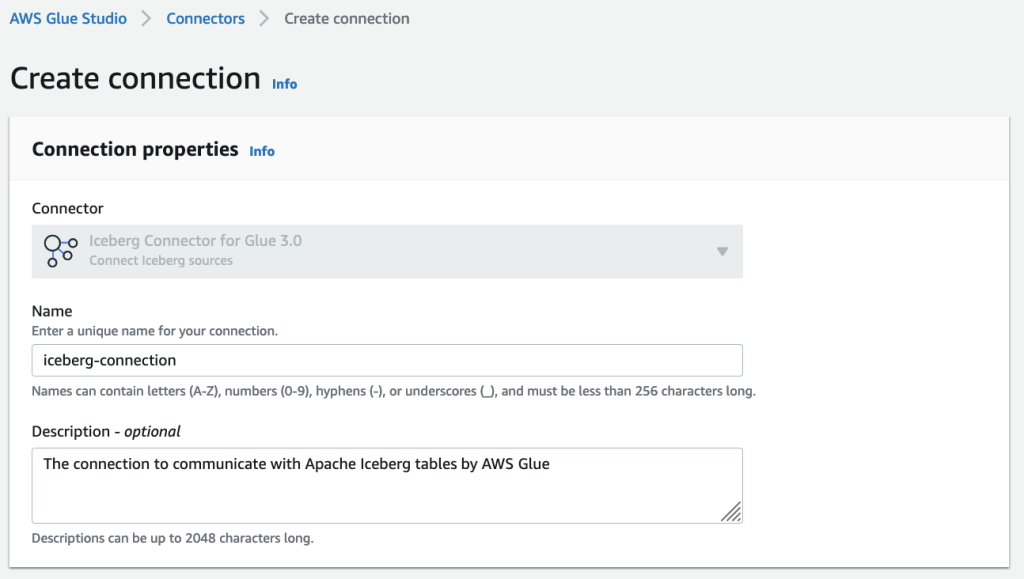

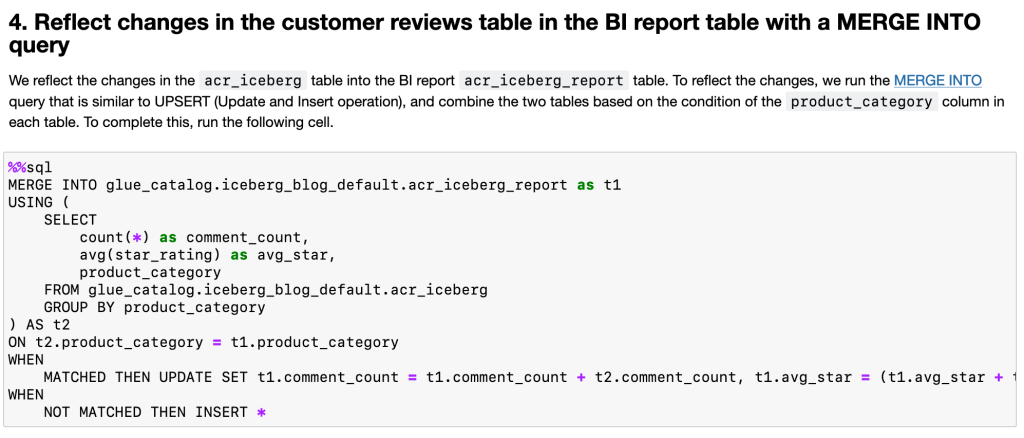

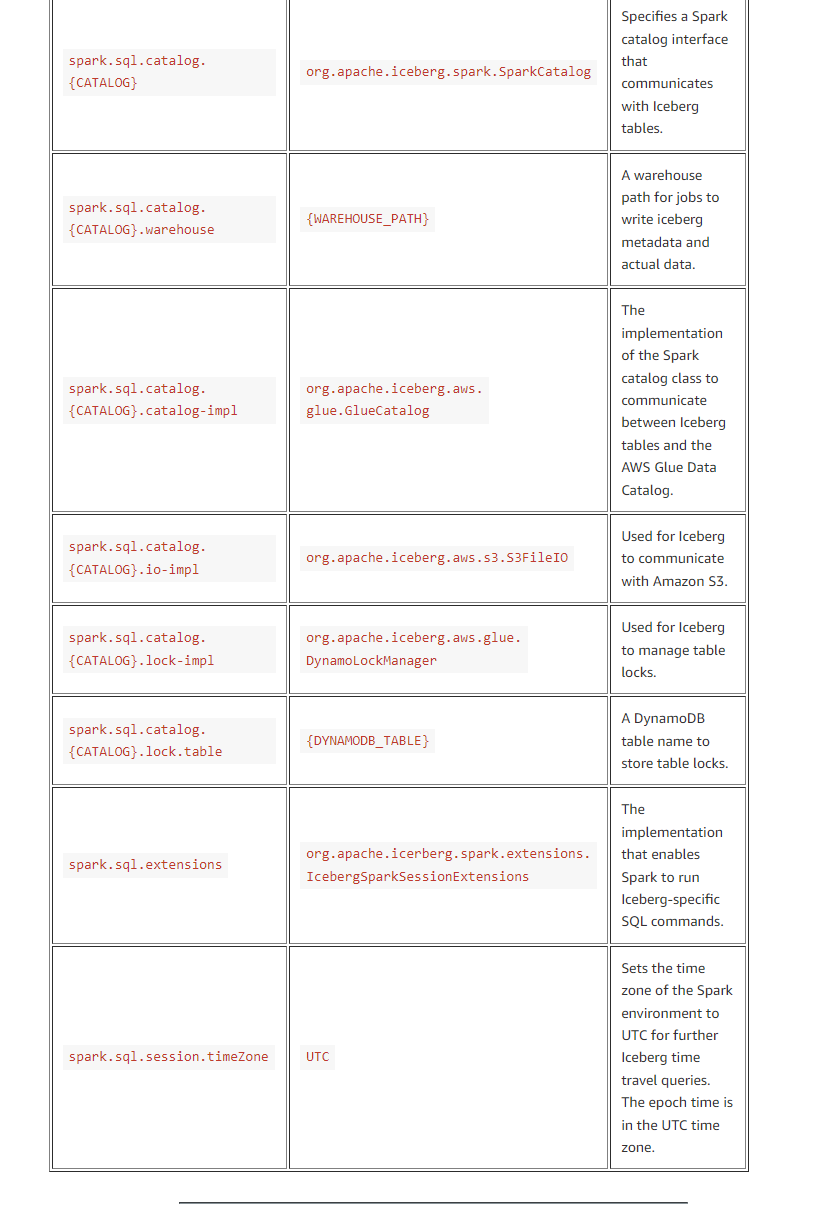

{"value":"Nowadays, many customers have built their data lakes as the core of their data analytic systems. In a typical use case of data lakes, many concurrent queries run to retrieve consistent snapshots of business insights by aggregating query results. A large volume of data constantly comes from different data sources into the data lakes. There is also a common demand to reflect the changes occurring in the data sources into the data lakes. This means that not only inserts but also updates and deletes need to be replicated into the data lakes.\n\n[Apache Iceberg ](https://iceberg.apache.org/) provides the capability of ACID transactions on your data lakes, which allows concurrent queries to add or delete records isolated from any existing queries with read-consistency for queries. Iceberg is an open table format designed for large analytic workloads on huge datasets. You can perform ACID transactions against your data lakes by using simple SQL expressions. It also enables time travel, rollback, hidden partitioning, and schema evolution changes, such as adding, dropping, renaming, updating, and reordering columns.\n\n[AWS Glue](https://aws.amazon.com/glue) is one of the key elements to building data lakes. It extracts data from multiple sources and ingests your data to your data lake built on [Amazon Simple Storage Service](http://aws.amazon.com/s3) (Amazon S3) using both batch and streaming jobs. To expand the accessibility of your AWS Glue extract, transform, and load (ETL) jobs to Iceberg, AWS Glue provides an [Apache Iceberg connector](https://aws.amazon.com/marketplace/pp/prodview-iicxofvpqvsio). The connector allows you to build Iceberg tables on your data lakes and run Iceberg operations such as ACID transactions, time travel, rollbacks, and so on from your AWS Glue ETL jobs.\n\nIn this post, we give an overview of how to set up the Iceberg connector for AWS Glue and configure the relevant resources to use Iceberg with AWS Glue jobs. We also demonstrate how to run typical Iceberg operations on [AWS Glue interactive sessions](https://docs.aws.amazon.com/glue/latest/dg/interactive-sessions-overview.html) with an example use case.\n\n#### **Apache Iceberg connector for AWS Glue**\n\nWith the [Apache Iceberg connector for AWS Glue](https://aws.amazon.com/marketplace/pp/prodview-iicxofvpqvsio), you can take advantage of the following Iceberg capabilities:\n\n- **Basic operations on Iceberg tables** – This includes creating Iceberg tables in the AWS Glue Data Catalog and inserting, updating, and deleting records with ACID transactions in the Iceberg tables\n- **Inserting and updating records** – You can run ```UPSERT``` (update and insert) queries for your Iceberg table\n- **Time travel on Iceberg tables** – You can read a specific version of an Iceberg table from table snapshots that Iceberg manages\n- **Rollback of table versions** – You can revert an Iceberg table back to a specific version of the table\n\nIceberg offers additional useful capabilities such as hidden partitioning; schema evolution with add, drop, update, and rename support; automatic data compaction; and more. For more details about Iceberg, refer to the [Apache Iceberg documentation](https://iceberg.apache.org/docs/latest/).\n\nNext, we demonstrate how the Apache Iceberg connector for AWS Glue works for each Iceberg capability based on an example use case.\n\n#### **Overview of example customer scenario**\n\nLet’s assume that an ecommerce company sells products on their online platform. Customers can buy products and write reviews to each product. Customers can add, update, or delete their reviews at any time. The customer reviews are an important source for analyzing customer sentiment and business trends.\n\nIn this scenario, we have the following teams in our organization:\n\n- **Data engineering team** – Responsible for building and managing data platforms.\n- **Data analyst team** – Responsible for analyzing customer reviews and creating business reports. This team queries the reviews daily, creates a business intelligence (BI) report, and shares it with sales team.\n- **Customer support team** – Responsible for replying to customer inquiries. This team queries the reviews when they get inquiries about the reviews.\n\nOur solution has the following requirements:\n\n- Query scalability is important because the website is huge.\n- Individual customer reviews can be added, updated, and deleted.\n- The data analyst team needs to use both notebooks and ad hoc queries for their analysis.\n- The customer support team sometimes needs to view the history of the customer reviews.\n- Customer reviews can always be added, updated, and deleted, even while one of the teams is querying the reviews for analysis. This means that any result in a query isn’t affected by uncommitted customer review write operations.\n- Any changes in customer reviews that are made by the organization’s various teams need to be reflected in BI reports and query results.\n\nIn this post, we build a data lake of customer review data on top of Amazon S3. To meet these requirements, we introduce Apache Iceberg to enable adding, updating, and deleting records; ACID transactions; and time travel queries. We also use an AWS Glue Studio notebook to integrate and query the data at scale. First, we set up the connector so we can create an AWS Glue connection for Iceberg.\n\n#### **Set up the Apache Iceberg connector and create the Iceberg connection**\n\nWe first set up Apache Iceberg connector for AWS Glue to use Apache Iceberg with AWS Glue jobs. Particularly, in this section, we set up the Apache Iceberg connector for AWS Glue and create an AWS Glue job with the connector. Complete the following steps:\n\n1. Navigate to the [Apache Iceberg connector for AWS Glue page](https://aws.amazon.com/marketplace/pp/prodview-iicxofvpqvsio) in [AWS Marketplace](https://aws.amazon.com/marketplace).\n2. Choose **Continue to Subscribe**.\n\n\n\n3.Review the information under **Terms and Conditions**, and choose **Accept Terms** to continue.\n\n\n\n4.When the subscription is complete, choose **Continue to Configuration**.\n\n\n\n\n\n5.For **Fulfillment option**, choose **Glue 3.0**. (1.0 and 2.0 are also available options.)\n6.For **Software version**, choose the latest software version.\nAs of this writing, 0.12.0-2 is the latest version of the Apache Iceberg connector for AWS Glue.\n\n7.Choose **Continue to Launch**.\n\n\n\n8.Choose **Usage instructions**.\n9.Choose **Activate the Glue connector from AWS Glue Studio**.\nYou’re redirected to AWS Glue Studio.\n\n10.For **Name**, enter a name for your connection (for example, ```iceberg-connection```).\n\n\n\n11.Choose **Create connection and activate connector**.\n\nA message appears that the connection was successfully added, and the connection is now visible on the AWS Glue Studio console.\n\n\n\n#### **Configure resources and permissions**\n\nWe use a provided AWS CloudFormation [template](https://aws-bigdata-blog.s3.amazonaws.com/artifacts/glue-iceberg-connector/IcebergConnectorBlogCFn.yml) to set up Iceberg configuration for AWS Glue. AWS CloudFormation creates the following resources:\n\n- An S3 bucket to store an Iceberg configuration file and actual data\n- An [AWS Lambda](https://aws.amazon.com/lambda/) function to generate an Iceberg configuration file based on parameters provided by a user for the CloudFormation template, and to clean up the resources created through this post\n- [AWS Identity and Access Management](https://aws.amazon.com/iam/) (IAM) roles and policies with necessary permissions\n- An AWS Glue database in the Data Catalog to register Iceberg tables\n\nTo deploy the CloudFormation template, complete the following steps:\n\n1.Choose **Launch Stack**:\n\n[](https://console.aws.amazon.com/cloudformation/home?region=us-east-1#/stacks/new?templateURL=https://aws-bigdata-blog.s3.amazonaws.com/artifacts/glue-iceberg-connector/IcebergConnectorBlogCFn.yml)\n\n2.For **DynamoDBTableName**, enter a name for an Amazon DynamoDB table that is created automatically when AWS Glue creates an Iceberg table.\nThis table is used for an AWS Glue job to obtain a commit lock to avoid concurrently modifying records in Iceberg tables. For more details about commit locking, refer to [DynamoDB for Commit Locking](https://iceberg.apache.org/docs/latest/aws/#dynamodb-for-commit-locking). Note that you shouldn’t specify the name of an existing table.\n\n3.For **IcebergDatabaseName**, enter a name for the AWS Glue database that is created in the Data Catalog and used for registering Iceberg tables.\n4.Choose **Next**.\n\n\n\n5.Select **I acknowledge that AWS CloudFormation might create IAM resources with custom names**.\n6.Choose **Create stack**.\n\n#### **Start an AWS Glue Studio notebook to use Apache Iceberg**\n\nAfter you launch the CloudFormation stack, you create an AWS Glue Studio notebook to perform Iceberg operations. Complete the following steps:\n\n1. Download the [Jupyter notebook file](https://aws-bigdata-blog.s3.amazonaws.com/artifacts/glue-iceberg-connector/iceberg-connector-with-glue.ipynb).\n2. On the AWS Glue console, choose **Jobs** in the navigation pane.\n3. Under **Create job**, select **Jupyter Notebook**.\n\n\n\n4.Select **Upload and edit an existing notebook** and upload ```iceberg-with-glue.ipynb```.\n\n\n\n5. Choose **Create**.\n6. For **Job name**, enter a name.\n7. For **IAM role**, choose **IcebergConnectorGlueJobRole**, which was created via the CloudFormation template.\n8. Choose **Start notebook job**.\n\n\n\nThe process takes a few minutes to complete, after which you can see an AWS Glue Studio notebook view.\n\n9.Choose **Save** to save the notebook.\n\n\n\n**Set up the Iceberg configuration**\n\nTo set up the Iceberg configuration, complete the following steps:\n\n1. Run the following cells with multiple options (magics). Note that you set your connection name for the ```%connections``` magic in the cell.\n\nFor more information, refer to [Configuring AWS Glue Interactive Sessions for Jupyter and AWS Glue Studio notebooks](https://docs.aws.amazon.com/glue/latest/dg/interactive-sessions-magics.html).\n\nA message **Session <session-id> has been created** appears when your AWS Glue Studio notebook is ready.\n\n\n\nIn the last cell in this section, you load your Iceberg configuration, which you specified when launching the CloudFormation stack. The Iceberg configuration includes a warehouse path for Iceberg actual data, a DynamoDB table name for commit locking, a database name for your Iceberg tables, and more.\n\nTo load the configuration, set the S3 bucket name that was created via the CloudFormation stack.\n\n2.On the [AWS CloudFormation console](https://console.aws.amazon.com/cloudformation/home), choose **Stacks** in the navigation pane.\n3.Choose the stack you created.\n4.On the **Outputs** tab, copy the S3 bucket name.\n\n\n\n5.Set the S3 name as the ```S3_BUCKET``` parameter in your notebook.\n\n\n\n6.Run the cell and load the Iceberg configuration that you set.\n\n**Initialize the job with Iceberg configurations**\n\nWe continue to run cells to initiate a ```SparkSession``` in this section.\n\n1. Set an Iceberg warehouse path and a DynamoDB table name for Iceberg commit locking from the ```user_config parameter```.\n2. Initialize a ```SparkSession``` by setting the Iceberg configurations.\n3. With the ```SparkSession``` object, create ```SparkContext``` and ```GlueContext``` objects.\n\nThe following screenshot shows the relevant section in the notebook.\n\n\n\nWe provide the details of each parameter that you configure for the ```SparkSession``` in the appendix of this post.\n\nFor this post, we demonstrate setting the Spark configuration for Iceberg. You can also set the configuration as AWS Glue job parameters. For more information, refer to the **Usage Information** section in the [Iceberg connector product page](https://aws.amazon.com/marketplace/pp/prodview-iicxofvpqvsio).\n\n#### **Use case walkthrough**\n\nTo walk through our use case, we use two tables; ```acr_iceberg``` and ```acr_iceberg_report```. The table ```acr_iceberg``` contains the customer review data. The table ```acr_iceberg_report``` contains BI analysis results based on the customer review data. All changes to ```acr_iceberg``` also impact ```acr_iceberg_report```. The table ```acr_iceberg_report``` needs to be updated daily, right before sharing business reports with stakeholders.\n\nTo demonstrate this use case, we walk through the following typical steps:\n\n1. A data engineering team registers the ```acr_iceberg``` and ```acr_iceberg_report``` tables in the Glue Data Catalog.\n2. Customers (ecommerce users) add reviews to products in the ```Industrial_Supplies category```. These reviews are added to the Iceberg table.\n3. A customer requests to update their reviews. We simulate updating the customer review in the ```acr_iceberg``` table.\n4. We reflect the customer’s request of the updated review in ```acr_iceberg``` into ```acr_iceberg_report```.\n5. We revert the customer’s request of the updated review for the customer review table ```acr_iceberg```, and reflect the reversion in ```acr_iceberg_report```.\n\n**1. Create Iceberg tables of customer reviews and BI reports**\n\nIn this step, the data engineering team creates the ```acr_iceberg``` Iceberg table for customer reviews data (based on the Amazon Customer Reviews Dataset), and the team creates the ```acr_iceberg_report``` Iceberg table for BI reports.\n\n**Create the ```acr_iceberg``` table for customer reviews**\n\nThe following code initially extracts the Amazon customer reviews, which are stored in a public S3 bucket. Then it creates an Iceberg table of the customer reviews and loads these reviews into your specified S3 bucket (created via CloudFormation stack). Note that the script loads partial datasets to avoid taking a lot of time to load the data.\n\n```\n# Loading the dataset and creating an Iceberg table. This will take about 3-5 minutes.\nspark.read \\\n .option('basePath', INPUT_BASE_PATH) \\\n .parquet(*INPUT_CATEGORIES) \\\n .writeTo(f'{CATALOG}.{DATABASE}.{TABLE}') \\\n .tableProperty('format-version', '2') \\\n .create()\n```\n\nRegarding the ```tableProperty parameter```, we specify format version 2 to make the table version compatible with [Amazon Athena](http://aws.amazon.com/athena). For more information about Athena support for Iceberg tables, refer to [Considerations and limitations](https://docs.aws.amazon.com/athena/latest/ug/querying-iceberg.html#querying-iceberg-considerations-and-limitations). To learn more about the difference between Iceberg table versions 1 and 2, refer to [Appendix E: Format version changes](https://iceberg.apache.org/spec/#appendix-e-format-version-changes).\n\nLet’s run the following cells. Running the second cell takes around 3–5 minutes.\n\n\n\nAfter you run the cells, the ```acr_iceberg``` table is available in your specified database in the Glue Data Catalog.\n\n\n\nYou can also see the actual data and metadata of the Iceberg table in the S3 bucket that is created through the CloudFormation stack. Iceberg creates the table and writes actual data and relevant metadata that includes table schema, table version information, and so on. See the following objects in your S3 bucket:\n\n```\n$ aws s3 ls 's3://your-bucket/data/' --recursive\nYYYY-MM-dd hh:mm:ss 83616660 data/iceberg_blog_default.db/acr_iceberg/data/00000-44-c2983230-c43a-4f4a-9b89-1f7c13e59645-00001.parquet\nYYYY-MM-dd hh:mm:ss 83247771 \n...\nYYYY-MM-dd hh:mm:ss 5134 data/iceberg_blog_default.db/acr_iceberg/metadata/00000-bc5d3ea2-280f-4e28-a71f-4c2b749ed637.metadata.json\nYYYY-MM-dd hh:mm:ss 116950 data/iceberg_blog_default.db/acr_iceberg/metadata/411308cd-1f4d-4535-9444-f6b56a56697f-m0.avro\nYYYY-MM-dd hh:mm:ss 3821 data/iceberg_blog_default.db/acr_iceberg/metadata/snap-6122957686233868728-1-411308cd-1f4d-4535-9444-f6b56a56697f.avro\n```\n\nThe job tries to create a DynamoDB table, which you specified in the CloudFormation stack (in the following screenshot, its name is ```myGlueLockTable```), if it doesn’t exist already. As we discussed earlier, the DynamoDB table is used for commit locking for Iceberg tables.\n\n\n\n**Create the ```acr_iceberg_report``` Iceberg table for BI reports**\n\nThe data engineer team also creates the ```acr_iceberg_report``` table for BI reports in the Glue Data Catalog. This table initially has the following records.\n\n\n\nTo create the table, run the following cell.\n\n\n\nThe two Iceberg tables have been created. Let’s check the ```acr_iceberg table``` records by running a query.\n\n**Determine the average star rating for each product category by querying the Iceberg table**\n\nYou can see the Iceberg table records by using a ```SELECT``` statement. In this section, we query the ```acr_iceberg``` table to simulate seeing a current BI report data by running an ad hoc query.\n\nRun the following cell in the notebook to get the aggregated number of customer comments and mean star rating for each ```product_category```.\n\n\n\nThe cell output has the following results.\n\n\n\nAnother way to query Iceberg tables is using Amazon Athena (when you use the Athena with Iceberg tables, you need to set up the [Iceberg environment](https://docs.aws.amazon.com/athena/latest/ug/querying-iceberg.html)) or [Amazon EMR](http://aws.amazon.com/emr).\n\n**2. Add customer reviews in the Iceberg table**\n\nIn this section, customers add comments for some products in the **Industrial Supplies** product category, and we add these comments to the ```acr_iceberg``` table. To demonstrate this scenario, we create a Spark DataFrame based on the following new customer reviews and then add them to the table with an ```INSERT``` statement.\n\n\n\nRun the following cells in the notebook to insert the customer comments to the Iceberg table. The process takes about 1 minute.\n\n\n\nRun the next cell to see an addition to the product category ```Industrial_Supplies``` with 5 under ```comment_count```.\n\n\n\n**3. Update a customer review in the Iceberg table**\n\nIn the previous section, we added new customer reviews to the ```acr_iceberg``` Iceberg table. In this section, a customer requests an update of their review. Specifically, customer ```78901234``` requests the following update of the review ID ```IS4392CD4C3C4```.\n\n- change ```star_rating``` from 3 to 5\n- update the ```review_headline``` from ```nice one, but it broke some days later``` to ```very good```\n\nWe update the customer comment by using an ```UPDATE``` [query](https://iceberg.apache.org/docs/latest/spark-writes/#update) by running the following cell.\n\n\n\nWe can review the updated record by running the next cell as follows.\n\n\n\n\n\nAlso, when you run this cell for the reporting table, you can see the updated ```avg_star``` column value for the ```Industrial_Supplies``` product category. Specifically, the ```avg_star``` value has been updated from 3.8 to 4.2 as a result of the ```star_rating``` changing from 3 to 5:\n\n\n\n**4. Reflect changes in the customer reviews table in the BI report table with a MERGE INTO query**\n\nIn this section, we reflect the changes in the ```acr_iceberg``` table into the BI report table ```acr_iceberg_report```. To do so, we run the ```MERGE INTO``` query and combine the two tables based on the condition of the ```product_category``` column in each table. This query works as follows:\n\n- When the ```product_category``` column in each table is the same, the query returns the sum of each column record\n- When the column in each table is not the same, the query just inserts a new record\n\nThis ```MERGE INTO``` operation is also referred to as an ```UPSERT``` (update and insert).\n\nRun the following cell to reflect the update of customer reviews in the ```acr_iceberg``` table into the ```acr_iceberg_report``` BI table.\n\n\n\nAfter the ```MERGE INTO``` query is complete, you can see the updated ```acr_iceberg_report``` table by running the following cell.\n\n\n\nThe ```MERGE INTO``` query performed the following changes:\n\n- In the ```Camera```, ```Industrial_Supplies```, and ```PC``` product categories, each ```comment_count``` is the sum between the initial value of the ```acr_iceberg_report``` table and the aggregated table value. For example, in the ```Industrial_Supplies``` product category row, the comment_count ```100``` is calculated by ```95``` (in the initial version of ```acr_iceberg_report```) + ```5``` (in the aggregated report table).\n- In addition to ```comment_count```, the ```avg_star``` in the ```Camera```, ```Industrial_Supplies```, or ```PC``` product category row is also computed by averaging between each ```avg_star``` value in ```acr_iceberg_report``` and in the aggregated table.\n- In other product categories, each ```comment_count``` and ```avg_star``` is the same as each value in the aggregated table, which means that each value in the aggregated table is inserted into the ```acr_iceberg_report``` table.\n\n**5. Roll back the Iceberg tables and reflect changes in the BI report table**\n\nIn this section, the customer who requested the update of the review now requests to revert the updated review.\n\nIceberg stores versioning tables through the operations for Iceberg tables. We can see the information of each version of table by [inspecting tables](https://iceberg.apache.org/docs/latest/spark-queries/#inspecting-tables), and we can also time travel or roll back tables to an old table version.\n\nTo complete the customer request to revert the updated review, we need to revert the table version of ```acr_iceberg``` to the earlier version when we first added the reviews. Additionally, we need to update the ```acr_iceberg_report``` table to reflect the rollback of the ```acr_iceberg``` table version. Specifically, we need to perform the following three steps to complete these operations:\n\n1. Check the history of table changes of ```acr_iceberg``` and ```acr_iceberg_report``` to get each table snapshot.\n2. Roll back ```acr_iceberg``` to the version when first we inserted records, and also roll back the ```acr_iceberg_report``` table to the initial version to reflect the customer review update.\n3. Merge the ```acr_iceberg``` table with the ```acr_iceberg_report``` table again.\n\n**Get the metadata of each report table**\n\nAs a first step, we check table versions by [inspecting the table](https://iceberg.apache.org/docs/latest/spark-queries/#inspecting-tables). Run the following cells.\n\n\n\nNow you can see the following table versions in ```acr_iceberg``` and ```acr_iceberg_report```:\n\n- ```acr_iceberg``` has three versions:\n - The oldest one is the initial version of this table, which shows the ```append``` operation\n - The second oldest one is the record insertion, which shows the ```append``` operation\n - The latest one is the update, which shows the ```overwrite``` operation\n- ```acr_iceberg_report``` has two versions:\n - The oldest one is the initial version of this table, which shows the append operation\n - The other one is from the ```MERGE INTO``` query in the previous section, which shows the ```overwrite``` operation\n\nAs shown in the following screenshot, we roll back to the ```acr_iceberg``` table version, inserting records based on the customer revert request. We also roll back to the ```acr_iceberg_report``` table version in the initial version to discard the ```MERGE INTO``` operation in the previous section.\n\n\n\n**Roll back the ```acr_iceberg``` and ```acr_iceberg_report``` tables**\n\nBased on your snapshot IDs, you can roll back each table version:\n\n- For ```acr_iceberg```, use the ```second-oldest``` snapshot_id (in this example, ```5440744662350048750```) and replace ```<Type snapshot_id in ace_iceberg table>``` in the following cell with this ```snapshot_id```.\n- For ```acr_iceberg_report``` table, use the initial snapshot_id (in this example, ```7958428388396549892```) and replace ```<Type snaphost_id in ace_iceberg_report table>``` in the following cell with this ```snapshot_id```.\n\nAfter you specify the snapshot_id for each rollback query, run the following cells.\n\n\n\nWhen this step is complete, you can see the previous and current snapshot IDs of each table.\n\n\n\nEach Iceberg table has been reverted to the specific version now.\n\n**Reflect changes in ```acr_iceberg``` into ```acr_iceberg_report``` again**\nWe reflect the ```acr_iceberg``` table reversion into the current ```acr_iceberg_report``` table. To complete this, run the following cell.\n\n\n\nAfter you rerun the ```MERGE INTO``` query, run the following cell to see the new table records. When we compare the table records, we observe that the ```avg_star``` value in ```Industrial_Supplies``` is lower than the value of the previous table ```avg_star```.\n\n\n\nYou were able to reflect a customer’s request of reverting their updated review on the BI report table. Specifically, you can get the updated ```avg_star``` record in the ```Industrial_Supplies``` product category.\n\n#### **Clean up**\nTo clean up all resources that you created, delete the CloudFormation stack.\n\n#### **Conclusion**\nIn this post, we walked through using the Apache Iceberg connector with AWS Glue ETL jobs. We created an Iceberg table built on Amazon S3, and ran queries such as reading the Iceberg table data, inserting a record, merging two tables, and time travel.\n\nThe operations for the Iceberg table that we demonstrated in this post aren’t all of the operations Iceberg supports. Refer to the [Apache Iceberg documentation](https://iceberg.apache.org/docs/latest/) for information about more operations.\n\n\n\n#### **About the Author**\n\n\n\n**Tomohiro** Tanaka is a Cloud Support Engineer at Amazon Web Services. He builds Glue connectors such as Apache Iceberg connector and TPC-DS connector. He’s passionate about helping customers build data lakes using ETL workloads. In his free time, he also enjoys coffee breaks with his colleagues and making coffee at home.","render":"<p>Nowadays, many customers have built their data lakes as the core of their data analytic systems. In a typical use case of data lakes, many concurrent queries run to retrieve consistent snapshots of business insights by aggregating query results. A large volume of data constantly comes from different data sources into the data lakes. There is also a common demand to reflect the changes occurring in the data sources into the data lakes. This means that not only inserts but also updates and deletes need to be replicated into the data lakes.</p>\n<p><a href=\"https://iceberg.apache.org/\" target=\"_blank\">Apache Iceberg </a> provides the capability of ACID transactions on your data lakes, which allows concurrent queries to add or delete records isolated from any existing queries with read-consistency for queries. Iceberg is an open table format designed for large analytic workloads on huge datasets. You can perform ACID transactions against your data lakes by using simple SQL expressions. It also enables time travel, rollback, hidden partitioning, and schema evolution changes, such as adding, dropping, renaming, updating, and reordering columns.</p>\n<p><a href=\"https://aws.amazon.com/glue\" target=\"_blank\">AWS Glue</a> is one of the key elements to building data lakes. It extracts data from multiple sources and ingests your data to your data lake built on <a href=\"http://aws.amazon.com/s3\" target=\"_blank\">Amazon Simple Storage Service</a> (Amazon S3) using both batch and streaming jobs. To expand the accessibility of your AWS Glue extract, transform, and load (ETL) jobs to Iceberg, AWS Glue provides an <a href=\"https://aws.amazon.com/marketplace/pp/prodview-iicxofvpqvsio\" target=\"_blank\">Apache Iceberg connector</a>. The connector allows you to build Iceberg tables on your data lakes and run Iceberg operations such as ACID transactions, time travel, rollbacks, and so on from your AWS Glue ETL jobs.</p>\n<p>In this post, we give an overview of how to set up the Iceberg connector for AWS Glue and configure the relevant resources to use Iceberg with AWS Glue jobs. We also demonstrate how to run typical Iceberg operations on <a href=\"https://docs.aws.amazon.com/glue/latest/dg/interactive-sessions-overview.html\" target=\"_blank\">AWS Glue interactive sessions</a> with an example use case.</p>\n<h4><a id=\"Apache_Iceberg_connector_for_AWS_Glue_8\"></a><strong>Apache Iceberg connector for AWS Glue</strong></h4>\n<p>With the <a href=\"https://aws.amazon.com/marketplace/pp/prodview-iicxofvpqvsio\" target=\"_blank\">Apache Iceberg connector for AWS Glue</a>, you can take advantage of the following Iceberg capabilities:</p>\n<ul>\n<li><strong>Basic operations on Iceberg tables</strong> – This includes creating Iceberg tables in the AWS Glue Data Catalog and inserting, updating, and deleting records with ACID transactions in the Iceberg tables</li>\n<li><strong>Inserting and updating records</strong> – You can run <code>UPSERT</code> (update and insert) queries for your Iceberg table</li>\n<li><strong>Time travel on Iceberg tables</strong> – You can read a specific version of an Iceberg table from table snapshots that Iceberg manages</li>\n<li><strong>Rollback of table versions</strong> – You can revert an Iceberg table back to a specific version of the table</li>\n</ul>\n<p>Iceberg offers additional useful capabilities such as hidden partitioning; schema evolution with add, drop, update, and rename support; automatic data compaction; and more. For more details about Iceberg, refer to the <a href=\"https://iceberg.apache.org/docs/latest/\" target=\"_blank\">Apache Iceberg documentation</a>.</p>\n<p>Next, we demonstrate how the Apache Iceberg connector for AWS Glue works for each Iceberg capability based on an example use case.</p>\n<h4><a id=\"Overview_of_example_customer_scenario_21\"></a><strong>Overview of example customer scenario</strong></h4>\n<p>Let’s assume that an ecommerce company sells products on their online platform. Customers can buy products and write reviews to each product. Customers can add, update, or delete their reviews at any time. The customer reviews are an important source for analyzing customer sentiment and business trends.</p>\n<p>In this scenario, we have the following teams in our organization:</p>\n<ul>\n<li><strong>Data engineering team</strong> – Responsible for building and managing data platforms.</li>\n<li><strong>Data analyst team</strong> – Responsible for analyzing customer reviews and creating business reports. This team queries the reviews daily, creates a business intelligence (BI) report, and shares it with sales team.</li>\n<li><strong>Customer support team</strong> – Responsible for replying to customer inquiries. This team queries the reviews when they get inquiries about the reviews.</li>\n</ul>\n<p>Our solution has the following requirements:</p>\n<ul>\n<li>Query scalability is important because the website is huge.</li>\n<li>Individual customer reviews can be added, updated, and deleted.</li>\n<li>The data analyst team needs to use both notebooks and ad hoc queries for their analysis.</li>\n<li>The customer support team sometimes needs to view the history of the customer reviews.</li>\n<li>Customer reviews can always be added, updated, and deleted, even while one of the teams is querying the reviews for analysis. This means that any result in a query isn’t affected by uncommitted customer review write operations.</li>\n<li>Any changes in customer reviews that are made by the organization’s various teams need to be reflected in BI reports and query results.</li>\n</ul>\n<p>In this post, we build a data lake of customer review data on top of Amazon S3. To meet these requirements, we introduce Apache Iceberg to enable adding, updating, and deleting records; ACID transactions; and time travel queries. We also use an AWS Glue Studio notebook to integrate and query the data at scale. First, we set up the connector so we can create an AWS Glue connection for Iceberg.</p>\n<h4><a id=\"Set_up_the_Apache_Iceberg_connector_and_create_the_Iceberg_connection_42\"></a><strong>Set up the Apache Iceberg connector and create the Iceberg connection</strong></h4>\n<p>We first set up Apache Iceberg connector for AWS Glue to use Apache Iceberg with AWS Glue jobs. Particularly, in this section, we set up the Apache Iceberg connector for AWS Glue and create an AWS Glue job with the connector. Complete the following steps:</p>\n<ol>\n<li>Navigate to the <a href=\"https://aws.amazon.com/marketplace/pp/prodview-iicxofvpqvsio\" target=\"_blank\">Apache Iceberg connector for AWS Glue page</a> in <a href=\"https://aws.amazon.com/marketplace\" target=\"_blank\">AWS Marketplace</a>.</li>\n<li>Choose <strong>Continue to Subscribe</strong>.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/78dabddfb3084d32a9f7ccd950cbbd21_image.png\" alt=\"image.png\" /></p>\n<p>3.Review the information under <strong>Terms and Conditions</strong>, and choose <strong>Accept Terms</strong> to continue.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/813b678a40924481b88f415fff521bec_image.png\" alt=\"image.png\" /></p>\n<p>4.When the subscription is complete, choose <strong>Continue to Configuration</strong>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/f1df77b71d4645f79b51e4b4c5216a2a_image.png\" alt=\"image.png\" /></p>\n<p><img src=\"https://dev-media.amazoncloud.cn/4e78f9a98277423fbdf3aa01d40a0538_image.png\" alt=\"image.png\" /></p>\n<p>5.For <strong>Fulfillment option</strong>, choose <strong>Glue 3.0</strong>. (1.0 and 2.0 are also available options.)<br />\n6.For <strong>Software version</strong>, choose the latest software version.<br />\nAs of this writing, 0.12.0-2 is the latest version of the Apache Iceberg connector for AWS Glue.</p>\n<p>7.Choose <strong>Continue to Launch</strong>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/c9cee41611a14cb6837c33082bfd6374_image.png\" alt=\"image.png\" /></p>\n<p>8.Choose <strong>Usage instructions</strong>.<br />\n9.Choose <strong>Activate the Glue connector from AWS Glue Studio</strong>.<br />\nYou’re redirected to AWS Glue Studio.</p>\n<p>10.For <strong>Name</strong>, enter a name for your connection (for example, <code>iceberg-connection</code>).</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/3768fd68ce1d4239b805c6506b3614b4_image.png\" alt=\"image.png\" /></p>\n<p>11.Choose <strong>Create connection and activate connector</strong>.</p>\n<p>A message appears that the connection was successfully added, and the connection is now visible on the AWS Glue Studio console.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/7edf54a8f83049a58e1b9f31686c5f56_image.png\" alt=\"image.png\" /></p>\n<h4><a id=\"Configure_resources_and_permissions_83\"></a><strong>Configure resources and permissions</strong></h4>\n<p>We use a provided AWS CloudFormation <a href=\"https://aws-bigdata-blog.s3.amazonaws.com/artifacts/glue-iceberg-connector/IcebergConnectorBlogCFn.yml\" target=\"_blank\">template</a> to set up Iceberg configuration for AWS Glue. AWS CloudFormation creates the following resources:</p>\n<ul>\n<li>An S3 bucket to store an Iceberg configuration file and actual data</li>\n<li>An <a href=\"https://aws.amazon.com/lambda/\" target=\"_blank\">AWS Lambda</a> function to generate an Iceberg configuration file based on parameters provided by a user for the CloudFormation template, and to clean up the resources created through this post</li>\n<li><a href=\"https://aws.amazon.com/iam/\" target=\"_blank\">AWS Identity and Access Management</a> (IAM) roles and policies with necessary permissions</li>\n<li>An AWS Glue database in the Data Catalog to register Iceberg tables</li>\n</ul>\n<p>To deploy the CloudFormation template, complete the following steps:</p>\n<p>1.Choose <strong>Launch Stack</strong>:</p>\n<p><a href=\"https://console.aws.amazon.com/cloudformation/home?region=us-east-1#/stacks/new?templateURL=https://aws-bigdata-blog.s3.amazonaws.com/artifacts/glue-iceberg-connector/IcebergConnectorBlogCFn.yml\" target=\"_blank\"><img src=\"https://dev-media.amazoncloud.cn/6a3a2c59b00b4d50aefe83c58ba52b37_image.png\" alt=\"image.png\" /></a></p>\n<p>2.For <strong>DynamoDBTableName</strong>, enter a name for an Amazon DynamoDB table that is created automatically when AWS Glue creates an Iceberg table.<br />\nThis table is used for an AWS Glue job to obtain a commit lock to avoid concurrently modifying records in Iceberg tables. For more details about commit locking, refer to <a href=\"https://iceberg.apache.org/docs/latest/aws/#dynamodb-for-commit-locking\" target=\"_blank\">DynamoDB for Commit Locking</a>. Note that you shouldn’t specify the name of an existing table.</p>\n<p>3.For <strong>IcebergDatabaseName</strong>, enter a name for the AWS Glue database that is created in the Data Catalog and used for registering Iceberg tables.<br />\n4.Choose <strong>Next</strong>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/f6b957093c3f4e428d0e1cb0430d9a7b_image.png\" alt=\"image.png\" /></p>\n<p>5.Select <strong>I acknowledge that AWS CloudFormation might create IAM resources with custom names</strong>.<br />\n6.Choose <strong>Create stack</strong>.</p>\n<h4><a id=\"Start_an_AWS_Glue_Studio_notebook_to_use_Apache_Iceberg_109\"></a><strong>Start an AWS Glue Studio notebook to use Apache Iceberg</strong></h4>\n<p>After you launch the CloudFormation stack, you create an AWS Glue Studio notebook to perform Iceberg operations. Complete the following steps:</p>\n<ol>\n<li>Download the <a href=\"https://aws-bigdata-blog.s3.amazonaws.com/artifacts/glue-iceberg-connector/iceberg-connector-with-glue.ipynb\" target=\"_blank\">Jupyter notebook file</a>.</li>\n<li>On the AWS Glue console, choose <strong>Jobs</strong> in the navigation pane.</li>\n<li>Under <strong>Create job</strong>, select <strong>Jupyter Notebook</strong>.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/ba70a233775944bba3963cddd3530856_image.png\" alt=\"image.png\" /></p>\n<p>4.Select <strong>Upload and edit an existing notebook</strong> and upload <code>iceberg-with-glue.ipynb</code>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/978b19bdd8f743b8a79f32b3b1ca9de3_image.png\" alt=\"image.png\" /></p>\n<ol start=\"5\">\n<li>Choose <strong>Create</strong>.</li>\n<li>For <strong>Job name</strong>, enter a name.</li>\n<li>For <strong>IAM role</strong>, choose <strong>IcebergConnectorGlueJobRole</strong>, which was created via the CloudFormation template.</li>\n<li>Choose <strong>Start notebook job</strong>.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/56fa31ad77c145b0851b8fd58c39ee4c_image.png\" alt=\"image.png\" /></p>\n<p>The process takes a few minutes to complete, after which you can see an AWS Glue Studio notebook view.</p>\n<p>9.Choose <strong>Save</strong> to save the notebook.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/2ce1fff2b6dd4c84a105792adaf2ec30_image.png\" alt=\"image.png\" /></p>\n<p><strong>Set up the Iceberg configuration</strong></p>\n<p>To set up the Iceberg configuration, complete the following steps:</p>\n<ol>\n<li>Run the following cells with multiple options (magics). Note that you set your connection name for the <code>%connections</code> magic in the cell.</li>\n</ol>\n<p>For more information, refer to <a href=\"https://docs.aws.amazon.com/glue/latest/dg/interactive-sessions-magics.html\" target=\"_blank\">Configuring AWS Glue Interactive Sessions for Jupyter and AWS Glue Studio notebooks</a>.</p>\n<p>A message <strong>Session <session-id> has been created</strong> appears when your AWS Glue Studio notebook is ready.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/23256dba38694fda95f26c5804d0d16b_image.png\" alt=\"image.png\" /></p>\n<p>In the last cell in this section, you load your Iceberg configuration, which you specified when launching the CloudFormation stack. The Iceberg configuration includes a warehouse path for Iceberg actual data, a DynamoDB table name for commit locking, a database name for your Iceberg tables, and more.</p>\n<p>To load the configuration, set the S3 bucket name that was created via the CloudFormation stack.</p>\n<p>2.On the <a href=\"https://console.aws.amazon.com/cloudformation/home\" target=\"_blank\">AWS CloudFormation console</a>, choose <strong>Stacks</strong> in the navigation pane.<br />\n3.Choose the stack you created.<br />\n4.On the <strong>Outputs</strong> tab, copy the S3 bucket name.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/787244120ace4446b362179552f2e4fa_image.png\" alt=\"image.png\" /></p>\n<p>5.Set the S3 name as the <code>S3_BUCKET</code> parameter in your notebook.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/3730c7d5397f417eb8bb0cff9c2b310f_image.png\" alt=\"image.png\" /></p>\n<p>6.Run the cell and load the Iceberg configuration that you set.</p>\n<p><strong>Initialize the job with Iceberg configurations</strong></p>\n<p>We continue to run cells to initiate a <code>SparkSession</code> in this section.</p>\n<ol>\n<li>Set an Iceberg warehouse path and a DynamoDB table name for Iceberg commit locking from the <code>user_config parameter</code>.</li>\n<li>Initialize a <code>SparkSession</code> by setting the Iceberg configurations.</li>\n<li>With the <code>SparkSession</code> object, create <code>SparkContext</code> and <code>GlueContext</code> objects.</li>\n</ol>\n<p>The following screenshot shows the relevant section in the notebook.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/b13cc6ad3ee443fd81f15e2efb7f6ff4_image.png\" alt=\"image.png\" /></p>\n<p>We provide the details of each parameter that you configure for the <code>SparkSession</code> in the appendix of this post.</p>\n<p>For this post, we demonstrate setting the Spark configuration for Iceberg. You can also set the configuration as AWS Glue job parameters. For more information, refer to the <strong>Usage Information</strong> section in the <a href=\"https://aws.amazon.com/marketplace/pp/prodview-iicxofvpqvsio\" target=\"_blank\">Iceberg connector product page</a>.</p>\n<h4><a id=\"Use_case_walkthrough_180\"></a><strong>Use case walkthrough</strong></h4>\n<p>To walk through our use case, we use two tables; <code>acr_iceberg</code> and <code>acr_iceberg_report</code>. The table <code>acr_iceberg</code> contains the customer review data. The table <code>acr_iceberg_report</code> contains BI analysis results based on the customer review data. All changes to <code>acr_iceberg</code> also impact <code>acr_iceberg_report</code>. The table <code>acr_iceberg_report</code> needs to be updated daily, right before sharing business reports with stakeholders.</p>\n<p>To demonstrate this use case, we walk through the following typical steps:</p>\n<ol>\n<li>A data engineering team registers the <code>acr_iceberg</code> and <code>acr_iceberg_report</code> tables in the Glue Data Catalog.</li>\n<li>Customers (ecommerce users) add reviews to products in the <code>Industrial_Supplies category</code>. These reviews are added to the Iceberg table.</li>\n<li>A customer requests to update their reviews. We simulate updating the customer review in the <code>acr_iceberg</code> table.</li>\n<li>We reflect the customer’s request of the updated review in <code>acr_iceberg</code> into <code>acr_iceberg_report</code>.</li>\n<li>We revert the customer’s request of the updated review for the customer review table <code>acr_iceberg</code>, and reflect the reversion in <code>acr_iceberg_report</code>.</li>\n</ol>\n<p><strong>1. Create Iceberg tables of customer reviews and BI reports</strong></p>\n<p>In this step, the data engineering team creates the <code>acr_iceberg</code> Iceberg table for customer reviews data (based on the Amazon Customer Reviews Dataset), and the team creates the <code>acr_iceberg_report</code> Iceberg table for BI reports.</p>\n<p><strong>Create the <code>acr_iceberg</code> table for customer reviews</strong></p>\n<p>The following code initially extracts the Amazon customer reviews, which are stored in a public S3 bucket. Then it creates an Iceberg table of the customer reviews and loads these reviews into your specified S3 bucket (created via CloudFormation stack). Note that the script loads partial datasets to avoid taking a lot of time to load the data.</p>\n<pre><code class=\"lang-\"># Loading the dataset and creating an Iceberg table. This will take about 3-5 minutes.\nspark.read \\\n .option('basePath', INPUT_BASE_PATH) \\\n .parquet(*INPUT_CATEGORIES) \\\n .writeTo(f'{CATALOG}.{DATABASE}.{TABLE}') \\\n .tableProperty('format-version', '2') \\\n .create()\n</code></pre>\n<p>Regarding the <code>tableProperty parameter</code>, we specify format version 2 to make the table version compatible with <a href=\"http://aws.amazon.com/athena\" target=\"_blank\">Amazon Athena</a>. For more information about Athena support for Iceberg tables, refer to <a href=\"https://docs.aws.amazon.com/athena/latest/ug/querying-iceberg.html#querying-iceberg-considerations-and-limitations\" target=\"_blank\">Considerations and limitations</a>. To learn more about the difference between Iceberg table versions 1 and 2, refer to <a href=\"https://iceberg.apache.org/spec/#appendix-e-format-version-changes\" target=\"_blank\">Appendix E: Format version changes</a>.</p>\n<p>Let’s run the following cells. Running the second cell takes around 3–5 minutes.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/d9613a7adc634296a6dd5fd9bc458c59_image.png\" alt=\"image.png\" /></p>\n<p>After you run the cells, the <code>acr_iceberg</code> table is available in your specified database in the Glue Data Catalog.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/43924a702c7f4c6f9f0aeb6533ea5cf5_image.png\" alt=\"image.png\" /></p>\n<p>You can also see the actual data and metadata of the Iceberg table in the S3 bucket that is created through the CloudFormation stack. Iceberg creates the table and writes actual data and relevant metadata that includes table schema, table version information, and so on. See the following objects in your S3 bucket:</p>\n<pre><code class=\"lang-\">$ aws s3 ls 's3://your-bucket/data/' --recursive\nYYYY-MM-dd hh:mm:ss 83616660 data/iceberg_blog_default.db/acr_iceberg/data/00000-44-c2983230-c43a-4f4a-9b89-1f7c13e59645-00001.parquet\nYYYY-MM-dd hh:mm:ss 83247771 \n...\nYYYY-MM-dd hh:mm:ss 5134 data/iceberg_blog_default.db/acr_iceberg/metadata/00000-bc5d3ea2-280f-4e28-a71f-4c2b749ed637.metadata.json\nYYYY-MM-dd hh:mm:ss 116950 data/iceberg_blog_default.db/acr_iceberg/metadata/411308cd-1f4d-4535-9444-f6b56a56697f-m0.avro\nYYYY-MM-dd hh:mm:ss 3821 data/iceberg_blog_default.db/acr_iceberg/metadata/snap-6122957686233868728-1-411308cd-1f4d-4535-9444-f6b56a56697f.avro\n</code></pre>\n<p>The job tries to create a DynamoDB table, which you specified in the CloudFormation stack (in the following screenshot, its name is <code>myGlueLockTable</code>), if it doesn’t exist already. As we discussed earlier, the DynamoDB table is used for commit locking for Iceberg tables.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/51685e1ae8c74060bc54e9b628dce5e1_image.png\" alt=\"image.png\" /></p>\n<p><strong>Create the <code>acr_iceberg_report</code> Iceberg table for BI reports</strong></p>\n<p>The data engineer team also creates the <code>acr_iceberg_report</code> table for BI reports in the Glue Data Catalog. This table initially has the following records.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/f96bd533ec0548d999fe0a4ff013221e_f39d4e44ef0cf3f8c28a8daab454638.jpg\" alt=\"f39d4e44ef0cf3f8c28a8daab454638.jpg\" /></p>\n<p>To create the table, run the following cell.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/e4a71da0f82e40939c4ce848afc4b298_image.png\" alt=\"image.png\" /></p>\n<p>The two Iceberg tables have been created. Let’s check the <code>acr_iceberg table</code> records by running a query.</p>\n<p><strong>Determine the average star rating for each product category by querying the Iceberg table</strong></p>\n<p>You can see the Iceberg table records by using a <code>SELECT</code> statement. In this section, we query the <code>acr_iceberg</code> table to simulate seeing a current BI report data by running an ad hoc query.</p>\n<p>Run the following cell in the notebook to get the aggregated number of customer comments and mean star rating for each <code>product_category</code>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/92f70b3f6595459a95af11408e02a1d8_image.png\" alt=\"image.png\" /></p>\n<p>The cell output has the following results.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/a5136f3af627460ead862ce3fad503fd_image.png\" alt=\"image.png\" /></p>\n<p>Another way to query Iceberg tables is using Amazon Athena (when you use the Athena with Iceberg tables, you need to set up the <a href=\"https://docs.aws.amazon.com/athena/latest/ug/querying-iceberg.html\" target=\"_blank\">Iceberg environment</a>) or <a href=\"http://aws.amazon.com/emr\" target=\"_blank\">Amazon EMR</a>.</p>\n<p><strong>2. Add customer reviews in the Iceberg table</strong></p>\n<p>In this section, customers add comments for some products in the <strong>Industrial Supplies</strong> product category, and we add these comments to the <code>acr_iceberg</code> table. To demonstrate this scenario, we create a Spark DataFrame based on the following new customer reviews and then add them to the table with an <code>INSERT</code> statement.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/0b9dd28e04b74c5db731c6b99a70ea92_ee1ab79d2d8de568ef43eb0866e74ac.jpg\" alt=\"ee1ab79d2d8de568ef43eb0866e74ac.jpg\" /></p>\n<p>Run the following cells in the notebook to insert the customer comments to the Iceberg table. The process takes about 1 minute.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/1a4dc10f3aca4d62b7fece778b884d46_image.png\" alt=\"image.png\" /></p>\n<p>Run the next cell to see an addition to the product category <code>Industrial_Supplies</code> with 5 under <code>comment_count</code>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/5f6904f681014daf9498419024357350_image.png\" alt=\"image.png\" /></p>\n<p><strong>3. Update a customer review in the Iceberg table</strong></p>\n<p>In the previous section, we added new customer reviews to the <code>acr_iceberg</code> Iceberg table. In this section, a customer requests an update of their review. Specifically, customer <code>78901234</code> requests the following update of the review ID <code>IS4392CD4C3C4</code>.</p>\n<ul>\n<li>change <code>star_rating</code> from 3 to 5</li>\n<li>update the <code>review_headline</code> from <code>nice one, but it broke some days later</code> to <code>very good</code></li>\n</ul>\n<p>We update the customer comment by using an <code>UPDATE</code> <a href=\"https://iceberg.apache.org/docs/latest/spark-writes/#update\" target=\"_blank\">query</a> by running the following cell.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/be6f3215eb414d21ae1dc283757ab2a5_image.png\" alt=\"image.png\" /></p>\n<p>We can review the updated record by running the next cell as follows.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/d032ed55590143c5a96e01f657ebb38a_image.png\" alt=\"image.png\" /></p>\n<p><img src=\"https://dev-media.amazoncloud.cn/477b62dde5474702a0f802e995ddf0f4_image.png\" alt=\"image.png\" /></p>\n<p>Also, when you run this cell for the reporting table, you can see the updated <code>avg_star</code> column value for the <code>Industrial_Supplies</code> product category. Specifically, the <code>avg_star</code> value has been updated from 3.8 to 4.2 as a result of the <code>star_rating</code> changing from 3 to 5:</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/8fca024ff40e4707b46715eb1a98ff2b_image.png\" alt=\"image.png\" /></p>\n<p><strong>4. Reflect changes in the customer reviews table in the BI report table with a MERGE INTO query</strong></p>\n<p>In this section, we reflect the changes in the <code>acr_iceberg</code> table into the BI report table <code>acr_iceberg_report</code>. To do so, we run the <code>MERGE INTO</code> query and combine the two tables based on the condition of the <code>product_category</code> column in each table. This query works as follows:</p>\n<ul>\n<li>When the <code>product_category</code> column in each table is the same, the query returns the sum of each column record</li>\n<li>When the column in each table is not the same, the query just inserts a new record</li>\n</ul>\n<p>This <code>MERGE INTO</code> operation is also referred to as an <code>UPSERT</code> (update and insert).</p>\n<p>Run the following cell to reflect the update of customer reviews in the <code>acr_iceberg</code> table into the <code>acr_iceberg_report</code> BI table.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/4990c055e64d43a1b67fbe560a492530_image.png\" alt=\"image.png\" /></p>\n<p>After the <code>MERGE INTO</code> query is complete, you can see the updated <code>acr_iceberg_report</code> table by running the following cell.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/b4753e15398e4e5f9428fc651681647e_image.png\" alt=\"image.png\" /></p>\n<p>The <code>MERGE INTO</code> query performed the following changes:</p>\n<ul>\n<li>In the <code>Camera</code>, <code>Industrial_Supplies</code>, and <code>PC</code> product categories, each <code>comment_count</code> is the sum between the initial value of the <code>acr_iceberg_report</code> table and the aggregated table value. For example, in the <code>Industrial_Supplies</code> product category row, the comment_count <code>100</code> is calculated by <code>95</code> (in the initial version of <code>acr_iceberg_report</code>) + <code>5</code> (in the aggregated report table).</li>\n<li>In addition to <code>comment_count</code>, the <code>avg_star</code> in the <code>Camera</code>, <code>Industrial_Supplies</code>, or <code>PC</code> product category row is also computed by averaging between each <code>avg_star</code> value in <code>acr_iceberg_report</code> and in the aggregated table.</li>\n<li>In other product categories, each <code>comment_count</code> and <code>avg_star</code> is the same as each value in the aggregated table, which means that each value in the aggregated table is inserted into the <code>acr_iceberg_report</code> table.</li>\n</ul>\n<p><strong>5. Roll back the Iceberg tables and reflect changes in the BI report table</strong></p>\n<p>In this section, the customer who requested the update of the review now requests to revert the updated review.</p>\n<p>Iceberg stores versioning tables through the operations for Iceberg tables. We can see the information of each version of table by <a href=\"https://iceberg.apache.org/docs/latest/spark-queries/#inspecting-tables\" target=\"_blank\">inspecting tables</a>, and we can also time travel or roll back tables to an old table version.</p>\n<p>To complete the customer request to revert the updated review, we need to revert the table version of <code>acr_iceberg</code> to the earlier version when we first added the reviews. Additionally, we need to update the <code>acr_iceberg_report</code> table to reflect the rollback of the <code>acr_iceberg</code> table version. Specifically, we need to perform the following three steps to complete these operations:</p>\n<ol>\n<li>Check the history of table changes of <code>acr_iceberg</code> and <code>acr_iceberg_report</code> to get each table snapshot.</li>\n<li>Roll back <code>acr_iceberg</code> to the version when first we inserted records, and also roll back the <code>acr_iceberg_report</code> table to the initial version to reflect the customer review update.</li>\n<li>Merge the <code>acr_iceberg</code> table with the <code>acr_iceberg_report</code> table again.</li>\n</ol>\n<p><strong>Get the metadata of each report table</strong></p>\n<p>As a first step, we check table versions by <a href=\"https://iceberg.apache.org/docs/latest/spark-queries/#inspecting-tables\" target=\"_blank\">inspecting the table</a>. Run the following cells.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/fb67e3a08dc84ba184b86819c5d3e603_image.png\" alt=\"image.png\" /></p>\n<p>Now you can see the following table versions in <code>acr_iceberg</code> and <code>acr_iceberg_report</code>:</p>\n<ul>\n<li><code>acr_iceberg</code> has three versions:\n<ul>\n<li>The oldest one is the initial version of this table, which shows the <code>append</code> operation</li>\n<li>The second oldest one is the record insertion, which shows the <code>append</code> operation</li>\n<li>The latest one is the update, which shows the <code>overwrite</code> operation</li>\n</ul>\n</li>\n<li><code>acr_iceberg_report</code> has two versions:\n<ul>\n<li>The oldest one is the initial version of this table, which shows the append operation</li>\n<li>The other one is from the <code>MERGE INTO</code> query in the previous section, which shows the <code>overwrite</code> operation</li>\n</ul>\n</li>\n</ul>\n<p>As shown in the following screenshot, we roll back to the <code>acr_iceberg</code> table version, inserting records based on the customer revert request. We also roll back to the <code>acr_iceberg_report</code> table version in the initial version to discard the <code>MERGE INTO</code> operation in the previous section.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/07dad3170dc249879dabd66e66f7f988_image.png\" alt=\"image.png\" /></p>\n<p><strong>Roll back the <code>acr_iceberg</code> and <code>acr_iceberg_report</code> tables</strong></p>\n<p>Based on your snapshot IDs, you can roll back each table version:</p>\n<ul>\n<li>For <code>acr_iceberg</code>, use the <code>second-oldest</code> snapshot_id (in this example, <code>5440744662350048750</code>) and replace <code><Type snapshot_id in ace_iceberg table></code> in the following cell with this <code>snapshot_id</code>.</li>\n<li>For <code>acr_iceberg_report</code> table, use the initial snapshot_id (in this example, <code>7958428388396549892</code>) and replace <code><Type snaphost_id in ace_iceberg_report table></code> in the following cell with this <code>snapshot_id</code>.</li>\n</ul>\n<p>After you specify the snapshot_id for each rollback query, run the following cells.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/ecfaca5cfab94743b7707b748fcb3c85_image.png\" alt=\"image.png\" /></p>\n<p>When this step is complete, you can see the previous and current snapshot IDs of each table.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/47739e6b6fd243dab3ecc39fd56984e6_image.png\" alt=\"image.png\" /></p>\n<p>Each Iceberg table has been reverted to the specific version now.</p>\n<p><strong>Reflect changes in <code>acr_iceberg</code> into <code>acr_iceberg_report</code> again</strong><br />\nWe reflect the <code>acr_iceberg</code> table reversion into the current <code>acr_iceberg_report</code> table. To complete this, run the following cell.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/75181dea45914a938002015af138b82e_image.png\" alt=\"image.png\" /></p>\n<p>After you rerun the <code>MERGE INTO</code> query, run the following cell to see the new table records. When we compare the table records, we observe that the <code>avg_star</code> value in <code>Industrial_Supplies</code> is lower than the value of the previous table <code>avg_star</code>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/798b443de4e34eba94a35172b05e422b_image.png\" alt=\"image.png\" /></p>\n<p>You were able to reflect a customer’s request of reverting their updated review on the BI report table. Specifically, you can get the updated <code>avg_star</code> record in the <code>Industrial_Supplies</code> product category.</p>\n<h4><a id=\"Clean_up_380\"></a><strong>Clean up</strong></h4>\n<p>To clean up all resources that you created, delete the CloudFormation stack.</p>\n<h4><a id=\"Conclusion_383\"></a><strong>Conclusion</strong></h4>\n<p>In this post, we walked through using the Apache Iceberg connector with AWS Glue ETL jobs. We created an Iceberg table built on Amazon S3, and ran queries such as reading the Iceberg table data, inserting a record, merging two tables, and time travel.</p>\n<p>The operations for the Iceberg table that we demonstrated in this post aren’t all of the operations Iceberg supports. Refer to the <a href=\"https://iceberg.apache.org/docs/latest/\" target=\"_blank\">Apache Iceberg documentation</a> for information about more operations.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/162a1ff419d14051aa5c5752903f62ff_2cfe5ae21a62d8eca205fd808305780.png\" alt=\"2cfe5ae21a62d8eca205fd808305780.png\" /></p>\n<h4><a id=\"About_the_Author_390\"></a><strong>About the Author</strong></h4>\n<p><img src=\"https://dev-media.amazoncloud.cn/6a8016732341491ca14d3b5ada9b7d85_image.png\" alt=\"image.png\" /></p>\n<p><strong>Tomohiro</strong> Tanaka is a Cloud Support Engineer at Amazon Web Services. He builds Glue connectors such as Apache Iceberg connector and TPC-DS connector. He’s passionate about helping customers build data lakes using ETL workloads. In his free time, he also enjoys coffee breaks with his colleagues and making coffee at home.</p>\n"}

Use the Amazon Glue connector to read and write Apache Iceberg tables with ACID transactions and perform time travel

海外精选

Amazon Simple Storage Service (S3)

Amazon Glue

海外精选的内容汇集了全球优质的亚马逊云科技相关技术内容。同时,内容中提到的“AWS”

是 “Amazon Web Services” 的缩写,在此网站不作为商标展示。

0

0 0

0亚马逊云科技解决方案 基于行业客户应用场景及技术领域的解决方案

联系亚马逊云科技专家

相关产品

相关产品目录

相关产品

相关产品亚马逊云科技解决方案 基于行业客户应用场景及技术领域的解决方案

联系亚马逊云科技专家

亚马逊云科技解决方案

基于行业客户应用场景及技术领域的解决方案

联系专家

0

目录

分享

分享 点赞

点赞 收藏

收藏 目录

目录立即关注

亚马逊云开发者

公众号

User Group

公众号

亚马逊云科技

官方小程序

“AWS” 是 “Amazon Web Services” 的缩写,在此网站不作为商标展示。

立即关注

亚马逊云开发者

公众号

User Group

公众号

亚马逊云科技

官方小程序

“AWS” 是 “Amazon Web Services” 的缩写,在此网站不作为商标展示。

立即关注

亚马逊云开发者

公众号

User Group

公众号

亚马逊云科技

官方小程序

“AWS” 是 “Amazon Web Services” 的缩写,在此网站不作为商标展示。