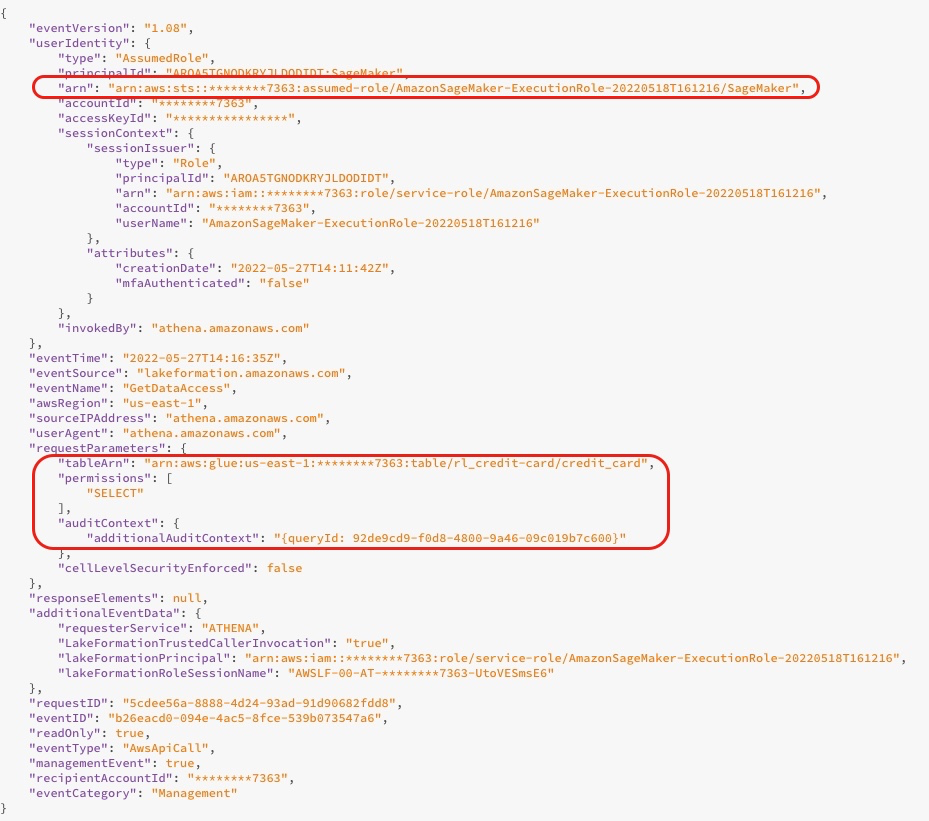

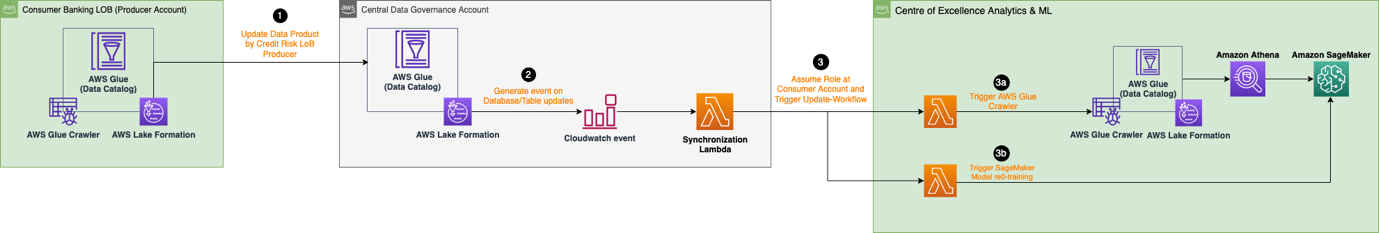

{"value":"This is the second part of a series that showcases the machine learning (ML) lifecycle with a data mesh design pattern for a large enterprise with multiple lines of business (LOBs) and a Center of Excellence (CoE) for analytics and ML.\n\nIn [part 1](https://aws.amazon.com/blogs/machine-learning/part-1-build-and-train-ml-models-using-a-data-mesh-architecture-on-aws/), we addressed the data steward persona and showcased a data mesh setup with multiple AWS data producer and consumer accounts. For an overview of the business context and the steps to set up a data mesh with [AWS Lake Formation](https://aws.amazon.com/lake-formation/) and register a data product, refer to part 1.\n\nIn this post, we address the analytics and ML platform team as a consumer in the data mesh. The platform team sets up the ML environment for the data scientists and helps them get access to the necessary data products in the data mesh. The data scientists in this team use [Amazon SageMaker](https://aws.amazon.com/sagemaker/) to build and train a credit risk prediction model using the shared credit risk data product from the consumer banking LoB.\n\n\nBuild and train ML models using a data mesh architecture on AWS\n\n- Part 1: Data mesh set up and data product registration\n- Part 2: Data product consumption by Analytics and ML CoE\n\nThe code for this example is available on [GitHub](https://github.com/aws-samples/amazon-sagemaker-lakeformation-datamesh).\n\n### **Analytics and ML consumer in a data mesh architecture**\n\nLet’s recap the high-level architecture that highlights the key components in the data mesh architecture.\n\n\n\nIn the data producer block 1 (left), there is a data processing stage to ensure that shared data is well-qualified and curated. The central data governance block 2 (center) acts as a centralized data catalog with metadata of various registered data products. 
The data consumer block 3 (right) requests access to datasets from the central catalog and queries and processes the data to build and train ML models.\n\nWith SageMaker, data scientists and developers in the ML CoE can quickly and easily build and train ML models, and then directly deploy them into a production-ready hosted environment. SageMaker provides easy access to your data sources for exploration and analysis, and also provides common ML algorithms and frameworks that are optimized to run efficiently against extremely large data in a distributed environment. It’s easy to get started with [Amazon SageMaker Studio](https://docs.aws.amazon.com/sagemaker/latest/dg/studio.html), a web-based integrated development environment (IDE), by completing the SageMaker domain [onboarding process](https://docs.aws.amazon.com/sagemaker/latest/dg/gs-studio-onboard.html). For more information, refer to the [Amazon SageMaker Developer Guide](https://docs.aws.amazon.com/sagemaker/latest/dg/whatis.html).\n\n### **Data product consumption by the analytics and ML CoE**\n\nThe following architecture diagram describes the steps required by the analytics and ML CoE consumer to get access to the registered data product in the central data catalog and process the data to build and train an ML model.\n\n\n\nThe workflow consists of the following components:\n\n1. The producer data steward provides access in the central account to the database and table to the consumer account. The database is now reflected as a shared database in the consumer account.\n2. The consumer admin creates a resource link in the consumer account to the database shared by the central account. The following screenshot shows an example in the consumer account, with ```rl_credit-card``` being the resource link of the ```credit-card``` database.\n\n\n\n\n\n3. 
The consumer admin provides the Studio [AWS Identity and Access Management](http://aws.amazon.com/iam) (IAM) execution role access to the resource-linked database and the table identified by the Lake Formation tag. In the following example, the consumer admin granted the SageMaker execution role permission to access ```rl_credit-card``` and the table satisfying the Lake Formation tag expression.\n\n\n\n4. Once assigned an execution role, data scientists in SageMaker can use [Amazon Athena](http://aws.amazon.com/athena) to query the table via the resource link database in Lake Formation.\n\na. For data exploration, they can use Studio notebooks to process the data with interactive querying via Athena.\nb. For data processing and feature engineering, they can run SageMaker processing jobs with an Athena data source and output results back to [Amazon Simple Storage Service](http://aws.amazon.com/s3) (Amazon S3).\nc. After the data is processed and available in Amazon S3 on the ML CoE account, data scientists can use SageMaker training jobs to train models and [SageMaker Pipelines](https://aws.amazon.com/sagemaker/pipelines/) to automate model-building workflows.\nd. Data scientists can also use the SageMaker model registry to register the models.\n\n### **Data exploration**\n\nThe following diagram illustrates the data exploration workflow in the data consumer account.\n\n\n\nThe consumer starts by querying a sample of the data from the ```credit_risk``` table with Athena in a Studio notebook. When querying data via Athena, the intermediate results are also saved in Amazon S3. You can use the [AWS Data Wrangler library](https://aws-data-wrangler.readthedocs.io/en/stable/) to run a query on Athena in a Studio notebook for data exploration. 
The following code example shows [how to query Athena](https://aws-data-wrangler.readthedocs.io/en/stable/tutorials/006%20-%20Amazon%20Athena.html) to fetch the results as a dataframe for data exploration:\n\n```\ndf= wr.athena.read_sql_query('SELECT * FROM credit_card LIMIT 10;', database=\"rl_credit-card\", ctas_approach=False)\n```\n\nNow that you have a subset of the data as a dataframe, you can start exploring the data and see what feature engineering updates are needed for model training. An example of data exploration is shown in the following screenshot.\n\n\n\nWhen you query the database, you can see the access logs from the Lake Formation console, as shown in the following screenshot. These logs give you information about who or which service has used Lake Formation, including the IAM role and time of access. The screenshot shows a log about SageMaker accessing the table ```credit_risk``` in AWS Glue via Athena. In the log, you can see the additional audit context that contains the query ID that matches the query ID in Athena.\n\n\n\nThe following screenshot shows the Athena query run ID that matches the query ID from the preceding log. This shows the data accessed with the SQL query. You can see what data has been queried by navigating to the Athena console, choosing the **Recent queries** tab, and then looking for the run ID that matches the query ID from the additional audit context.\n\n\n\n### **Data processing**\n\nAfter data exploration, you may want to preprocess the entire large dataset for feature engineering before training a model. The following diagram illustrates the data processing procedure.\n\n\n\nIn this example, we use a SageMaker processing job, in which we define an Athena dataset definition. The processing job queries the data via Athena and uses a script to split the data into training, testing, and validation datasets. The results of the processing job are saved to Amazon S3. 
To learn how to configure a processing job with Athena, refer to [Use Amazon Athena in a processing job with Amazon SageMaker](https://medium.com/p/d43272d69a78).\n\nIn this example, you can use the Python SDK to trigger a processing job with the Scikit-learn framework. Before triggering, you can configure the [inputs parameter](https://sagemaker.readthedocs.io/en/stable/api/training/processing.html#sagemaker.processing.ProcessingInput) to get the input data via the Athena dataset definition, as shown in the following code. The dataset contains the location to download the results from Athena to the processing container and the configuration for the SQL query. When the processing job is finished, the results are saved in Amazon S3.\n\n```\nfrom sagemaker.dataset_definition.inputs import AthenaDatasetDefinition, DatasetDefinition\nfrom sagemaker.processing import ProcessingInput, ProcessingOutput\n\n# Athena query against the resource link database; results are staged in S3 as Parquet\nAthenaDataset = AthenaDatasetDefinition(\n catalog = 'AwsDataCatalog', \n database = 'rl_credit-card', \n query_string = 'SELECT * FROM \"rl_credit-card\".\"credit_card\"', \n output_s3_uri = 's3://sagemaker-us-east-1-********7363/athenaqueries/', \n work_group = 'primary', \n output_format = 'PARQUET')\n\n# Location inside the processing container where the query results are downloaded\ndataSet = DatasetDefinition(\n athena_dataset_definition = AthenaDataset, \n local_path='/opt/ml/processing/input/dataset.parquet')\n\n\nsklearn_processor.run(\n code=\"processing/preprocessor.py\",\n inputs=[ProcessingInput(\n input_name=\"dataset\", \n destination=\"/opt/ml/processing/input\", \n dataset_definition=dataSet)],\n outputs=[\n ProcessingOutput(\n output_name=\"train_data\", source=\"/opt/ml/processing/train\", destination=train_data_path\n ),\n ProcessingOutput(\n output_name=\"val_data\", source=\"/opt/ml/processing/val\", destination=val_data_path\n ),\n ProcessingOutput(\n output_name=\"model\", source=\"/opt/ml/processing/model\", destination=model_path\n ),\n ProcessingOutput(\n output_name=\"test_data\", source=\"/opt/ml/processing/test\", destination=test_data_path\n ),\n ],\n arguments=[\"--train-test-split-ratio\", \"0.2\"],\n logs=False,\n)\n```\n\n### **Model training and model 
registration**\n\nAfter preprocessing the data, you can train the model with the preprocessed data saved in Amazon S3. The following diagram illustrates the model training and registration process.\n\n\n\nFor data exploration and SageMaker processing jobs, you can retrieve the data in the data mesh via Athena. Although the SageMaker Training API doesn’t include a parameter to configure an Athena data source, you can query data via Athena in the training script itself.\n\nIn this example, the preprocessed data is now available in Amazon S3 and can be used directly to train an XGBoost model with SageMaker Script Mode. You can provide the script, hyperparameters, instance type, and all the additional parameters needed to successfully train the model. You can trigger the SageMaker estimator with the training and validation data in Amazon S3. When the model training is complete, you can register the model in the SageMaker model registry for experiment tracking and deployment to a production account.\n\n```\nestimator = XGBoost(\n entry_point=entry_point,\n source_dir=source_dir,\n output_path=output_path,\n code_location=code_location,\n hyperparameters=hyperparameters,\n instance_type=\"ml.c5.xlarge\",\n instance_count=1,\n framework_version=\"0.90-2\",\n py_version=\"py3\",\n role=role,\n)\n\ninputs = {\"train\": train_input_data, \"validation\": val_input_data}\n\nestimator.fit(inputs, job_name=job_name)\n```\n\n### **Next steps**\n\nYou can make incremental updates to the solution to address requirements around data updates and model retraining, automatic deletion of intermediate data in Amazon S3, and integrating a feature store. We discuss each of these in more detail in the following sections.\n\n### **Data updates and model retraining triggers**\n\nThe following diagram illustrates the process to update the training data and trigger model retraining.\n\n\n\nThe process includes the following steps:\n\n1. 
The data producer updates the data product with either a new schema or additional data at a regular frequency.\n2. After the data product is re-registered in the central data catalog, Lake Formation generates an [Amazon CloudWatch](http://aws.amazon.com/cloudwatch) event.\n3. The CloudWatch event triggers an [AWS Lambda](http://aws.amazon.com/lambda) function to synchronize the updated data product with the consumer account. You can use this trigger to reflect the data changes by doing the following:\na. Rerun the AWS Glue crawler.\nb. Trigger model retraining if the data drifts beyond a given threshold.\n\nFor more details about setting up a SageMaker MLOps deployment pipeline for drift detection, refer to the [Amazon SageMaker Drift Detection](https://github.com/aws-samples/amazon-sagemaker-drift-detection) GitHub repo.\n\n### **Auto-deletion of intermediate data in Amazon S3**\n\nYou can automatically delete intermediate data that is generated by Athena queries and stored in Amazon S3 in the consumer account at regular intervals with S3 object lifecycle rules. For more information, refer to [Managing your storage lifecycle](https://docs.aws.amazon.com/AmazonS3/latest/userguide/object-lifecycle-mgmt.html).\n\n### **SageMaker Feature Store integration**\n\n[SageMaker Feature Store](https://aws.amazon.com/sagemaker/feature-store/) is purpose-built for ML and can store, discover, and share curated features used in training and prediction workflows. A feature store can work as a centralized interface between different data producer teams and LoBs, enabling feature discoverability and reusability to multiple consumers. The feature store can act as an alternative to the central data catalog in the data mesh architecture described earlier. 
For more information about cross-account architecture patterns, refer to [Enable feature reuse across accounts and teams using Amazon SageMaker Feature Store](https://aws.amazon.com/blogs/machine-learning/enable-feature-reuse-across-accounts-and-teams-using-amazon-sagemaker-feature-store/).\n\n\nRefer to the [MLOps foundation roadmap for enterprises with Amazon SageMaker](https://aws.amazon.com/blogs/machine-learning/mlops-foundation-roadmap-for-enterprises-with-amazon-sagemaker/) blog post to find out more about building an MLOps foundation based on the MLOps maturity model.\n\n### **Conclusion**\n\nIn this two-part series, we showcased how you can build and train ML models with a multi-account data mesh architecture on AWS. We described the requirements of a typical financial services organization with multiple LoBs and an ML CoE, and illustrated the solution architecture with Lake Formation and SageMaker. We used the example of a credit risk data product registered in Lake Formation by the consumer banking LoB and accessed by the ML CoE team to train a credit risk ML model with SageMaker.\n\nEach data producer account defines data products that are curated by people who understand the data and its access control, use, and limitations. The data products and the application domains that consume them are interconnected to form the data mesh. The data mesh architecture allows the ML teams to discover and access these curated data products.\n\nLake Formation allows cross-account access to Data Catalog metadata and underlying data. You can use Lake Formation to create a multi-account data mesh architecture. SageMaker provides an ML platform with key capabilities around data management, data science experimentation, model training, model hosting, workflow automation, and CI/CD pipelines for productionization. 
You can set up one or more analytics and ML CoE environments to build and train models with data products registered across multiple accounts in a data mesh.\n\nTry out the [AWS CloudFormation](http://aws.amazon.com/cloudformation) templates and code from the example [repository](https://github.com/aws-samples/amazon-sagemaker-lakeformation-datamesh) to get started.\n\n### **About the authors**\n\n\n\n**Karim Hammouda** is a Specialist Solutions Architect for Analytics at AWS with a passion for data integration, data analysis, and BI. He works with AWS customers to design and build analytics solutions that contribute to their business growth. In his free time, he likes to watch TV documentaries and play video games with his son.\n\n\n\n**Hasan Poonawala** is a Senior AI/ML Specialist Solutions Architect at AWS. He helps customers design and deploy machine learning applications in production on AWS. He has over 12 years of work experience as a data scientist, machine learning practitioner, and software developer. In his spare time, Hasan loves to explore nature and spend time with friends and family.\n\n\n\n**Benoit de Patoul** is an AI/ML Specialist Solutions Architect at AWS. He helps customers by providing guidance and technical assistance to build solutions related to AI/ML using AWS. In his free time, he likes to play piano and spend time with friends.","render":"<p>This is the second part of a series that showcases the machine learning (ML) lifecycle with a data mesh design pattern for a large enterprise with multiple lines of business (LOBs) and a Center of Excellence (CoE) for analytics and ML.</p>\n<p>In <a href=\"https://aws.amazon.com/blogs/machine-learning/part-1-build-and-train-ml-models-using-a-data-mesh-architecture-on-aws/\" target=\"_blank\">part 1</a>, we addressed the data steward persona and showcased a data mesh setup with multiple AWS data producer and consumer accounts. 
For an overview of the business context and the steps to set up a data mesh with <a href=\"https://aws.amazon.com/lake-formation/\" target=\"_blank\">AWS Lake Formation</a> and register a data product, refer to part 1.</p>\n<p>In this post, we address the analytics and ML platform team as a consumer in the data mesh. The platform team sets up the ML environment for the data scientists and helps them get access to the necessary data products in the data mesh. The data scientists in this team use <a href=\"https://aws.amazon.com/sagemaker/\" target=\"_blank\">Amazon SageMaker</a> to build and train a credit risk prediction model using the shared credit risk data product from the consumer banking LoB.</p>\n<p>Build and train ML models using a data mesh architecture on AWS</p>\n<ul>\n<li>Part 1: Data mesh set up and data product registration</li>\n<li>Part 2: Data product consumption by Analytics and ML CoE</li>\n</ul>\n<p>The code for this example is available on <a href=\"https://github.com/aws-samples/amazon-sagemaker-lakeformation-datamesh\" target=\"_blank\">GitHub</a>.</p>\n<h3><a id=\"Analytics_and_ML_consumer_in_a_data_mesh_architecture_14\"></a><strong>Analytics and ML consumer in a data mesh architecture</strong></h3>\n<p>Let’s recap the high-level architecture that highlights the key components in the data mesh architecture.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/24a1d2489c1a40d5a6873101f8c6a4fe_image.png\" alt=\"image.png\" /></p>\n<p>In the data producer block 1 (left), there is a data processing stage to ensure that shared data is well-qualified and curated. The central data governance block 2 (center) acts as a centralized data catalog with metadata of various registered data products. 
The data consumer block 3 (right) requests access to datasets from the central catalog and queries and processes the data to build and train ML models.</p>\n<p>With SageMaker, data scientists and developers in the ML CoE can quickly and easily build and train ML models, and then directly deploy them into a production-ready hosted environment. SageMaker provides easy access to your data sources for exploration and analysis, and also provides common ML algorithms and frameworks that are optimized to run efficiently against extremely large data in a distributed environment. It’s easy to get started with <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/studio.html\" target=\"_blank\">Amazon SageMaker Studio</a>, a web-based integrated development environment (IDE), by completing the SageMaker domain <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/gs-studio-onboard.html\" target=\"_blank\">onboarding process</a>. For more information, refer to the <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/whatis.html\" target=\"_blank\">Amazon SageMaker Developer Guide</a>.</p>\n<h3><a id=\"Data_product_consumption_by_the_analytics_and_ML_CoE_24\"></a><strong>Data product consumption by the analytics and ML CoE</strong></h3>\n<p>The following architecture diagram describes the steps required by the analytics and ML CoE consumer to get access to the registered data product in the central data catalog and process the data to build and train an ML model.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/22f50953d4cd4f0fbd8bb499e06ad6e3_image.png\" alt=\"image.png\" /></p>\n<p>The workflow consists of the following components:</p>\n<ol>\n<li>The producer data steward provides access in the central account to the database and table to the consumer account. The database is now reflected as a shared database in the consumer account.</li>\n<li>The consumer admin creates a resource link in the consumer account to the database shared by the central account. 
The following screenshot shows an example in the consumer account, with <code>rl_credit-card</code> being the resource link of the <code>credit-card</code> database.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/0d1c5bfdfd1f4b5d95263b0f6641345a_image.png\" alt=\"image.png\" /></p>\n<p><img src=\"https://dev-media.amazoncloud.cn/c5c7952bbf184f9aa0787824d6e68f6c_image.png\" alt=\"image.png\" /></p>\n<ol start=\"3\">\n<li>The consumer admin provides the Studio <a href=\"http://aws.amazon.com/iam\" target=\"_blank\">AWS Identity and Access Management</a> (IAM) execution role access to the resource-linked database and the table identified by the Lake Formation tag. In the following example, the consumer admin granted the SageMaker execution role permission to access <code>rl_credit-card</code> and the table satisfying the Lake Formation tag expression.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/79e0008145ef45138313051b05064bc4_image.png\" alt=\"image.png\" /></p>\n<ol start=\"4\">\n<li>Once assigned an execution role, data scientists in SageMaker can use <a href=\"http://aws.amazon.com/athena\" target=\"_blank\">Amazon Athena</a> to query the table via the resource link database in Lake Formation.</li>\n</ol>\n<p>a. For data exploration, they can use Studio notebooks to process the data with interactive querying via Athena.<br />\nb. For data processing and feature engineering, they can run SageMaker processing jobs with an Athena data source and output results back to <a href=\"http://aws.amazon.com/s3\" target=\"_blank\">Amazon Simple Storage Service</a> (Amazon S3).<br />\nc. After the data is processed and available in Amazon S3 on the ML CoE account, data scientists can use SageMaker training jobs to train models and <a href=\"https://aws.amazon.com/sagemaker/pipelines/\" target=\"_blank\">SageMaker Pipelines</a> to automate model-building workflows.<br />\nd. 
Data scientists can also use the SageMaker model registry to register the models.</p>\n<h3><a id=\"Data_exploration_50\"></a><strong>Data exploration</strong></h3>\n<p>The following diagram illustrates the data exploration workflow in the data consumer account.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/2b7773b4d5284d60bc1d67554e8c64c5_image.png\" alt=\"image.png\" /></p>\n<p>The consumer starts by querying a sample of the data from the <code>credit_risk</code> table with Athena in a Studio notebook. When querying data via Athena, the intermediate results are also saved in Amazon S3. You can use the <a href=\"https://aws-data-wrangler.readthedocs.io/en/stable/\" target=\"_blank\">AWS Data Wrangler library</a> to run a query on Athena in a Studio notebook for data exploration. The following code example shows <a href=\"https://aws-data-wrangler.readthedocs.io/en/stable/tutorials/006%20-%20Amazon%20Athena.html\" target=\"_blank\">how to query Athena</a> to fetch the results as a dataframe for data exploration:</p>\n<pre><code class=\"lang-\">df= wr.athena.read_sql_query('SELECT * FROM credit_card LIMIT 10;', database="rl_credit-card", ctas_approach=False)\n</code></pre>\n<p>Now that you have a subset of the data as a dataframe, you can start exploring the data and see what feature engineering updates are needed for model training. An example of data exploration is shown in the following screenshot.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/f114a602b32a492188d11e368597260f_image.png\" alt=\"image.png\" /></p>\n<p>When you query the database, you can see the access logs from the Lake Formation console, as shown in the following screenshot. These logs give you information about who or which service has used Lake Formation, including the IAM role and time of access. The screenshot shows a log about SageMaker accessing the table <code>credit_risk</code> in AWS Glue via Athena. 
In the log, you can see the additional audit context that contains the query ID that matches the query ID in Athena.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/41920e4d20b44b43bf3ee52b2c21810a_image.png\" alt=\"image.png\" /></p>\n<p>The following screenshot shows the Athena query run ID that matches the query ID from the preceding log. This shows the data accessed with the SQL query. You can see what data has been queried by navigating to the Athena console, choosing the <strong>Recent queries</strong> tab, and then looking for the run ID that matches the query ID from the additional audit context.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/fa79b1ac54e44f00a6a849aa69425f7c_image.png\" alt=\"image.png\" /></p>\n<h3><a id=\"Data_processing_74\"></a><strong>Data processing</strong></h3>\n<p>After data exploration, you may want to preprocess the entire large dataset for feature engineering before training a model. The following diagram illustrates the data processing procedure.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/357be2aa763f4c1fbd6f1b3918b71cfb_image.png\" alt=\"image.png\" /></p>\n<p>In this example, we use a SageMaker processing job, in which we define an Athena dataset definition. The processing job queries the data via Athena and uses a script to split the data into training, testing, and validation datasets. The results of the processing job are saved to Amazon S3. To learn how to configure a processing job with Athena, refer to <a href=\"https://medium.com/p/d43272d69a78\" target=\"_blank\">Use Amazon Athena in a processing job with Amazon SageMaker</a>.</p>\n<p>In this example, you can use the Python SDK to trigger a processing job with the Scikit-learn framework. 
Before triggering, you can configure the <a href=\"https://sagemaker.readthedocs.io/en/stable/api/training/processing.html#sagemaker.processing.ProcessingInput\" target=\"_blank\">inputs parameter</a> to get the input data via the Athena dataset definition, as shown in the following code. The dataset contains the location to download the results from Athena to the processing container and the configuration for the SQL query. When the processing job is finished, the results are saved in Amazon S3.</p>\n<pre><code class=\"lang-\">from sagemaker.dataset_definition.inputs import AthenaDatasetDefinition, DatasetDefinition\nfrom sagemaker.processing import ProcessingInput, ProcessingOutput\n\n# Athena query against the resource link database; results are staged in S3 as Parquet\nAthenaDataset = AthenaDatasetDefinition(\n catalog = 'AwsDataCatalog', \n database = 'rl_credit-card', \n query_string = 'SELECT * FROM "rl_credit-card"."credit_card"', \n output_s3_uri = 's3://sagemaker-us-east-1-********7363/athenaqueries/', \n work_group = 'primary', \n output_format = 'PARQUET')\n\n# Location inside the processing container where the query results are downloaded\ndataSet = DatasetDefinition(\n athena_dataset_definition = AthenaDataset, \n local_path='/opt/ml/processing/input/dataset.parquet')\n\n\nsklearn_processor.run(\n code="processing/preprocessor.py",\n inputs=[ProcessingInput(\n input_name="dataset", \n destination="/opt/ml/processing/input", \n dataset_definition=dataSet)],\n outputs=[\n ProcessingOutput(\n output_name="train_data", source="/opt/ml/processing/train", destination=train_data_path\n ),\n ProcessingOutput(\n output_name="val_data", source="/opt/ml/processing/val", destination=val_data_path\n ),\n ProcessingOutput(\n output_name="model", source="/opt/ml/processing/model", destination=model_path\n ),\n ProcessingOutput(\n output_name="test_data", source="/opt/ml/processing/test", destination=test_data_path\n ),\n ],\n arguments=["--train-test-split-ratio", "0.2"],\n logs=False,\n)\n</code></pre>\n<h3><a id=\"Model_training_and_model_registration_123\"></a><strong>Model training and model registration</strong></h3>\n<p>After preprocessing the data, you can train the model with the preprocessed data saved in Amazon S3. 
The following diagram illustrates the model training and registration process.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/b92ff65b663846d3944e567d9d0cd9d7_image.png\" alt=\"image.png\" /></p>\n<p>For data exploration and SageMaker processing jobs, you can retrieve the data in the data mesh via Athena. Although the SageMaker Training API doesn’t include a parameter to configure an Athena data source, you can query data via Athena in the training script itself.</p>\n<p>In this example, the preprocessed data is now available in Amazon S3 and can be used directly to train an XGBoost model with SageMaker Script Mode. You can provide the script, hyperparameters, instance type, and all the additional parameters needed to successfully train the model. You can trigger the SageMaker estimator with the training and validation data in Amazon S3. When the model training is complete, you can register the model in the SageMaker model registry for experiment tracking and deployment to a production account.</p>\n<pre><code class=\"lang-\">estimator = XGBoost(\n entry_point=entry_point,\n source_dir=source_dir,\n output_path=output_path,\n code_location=code_location,\n hyperparameters=hyperparameters,\n instance_type="ml.c5.xlarge",\n instance_count=1,\n framework_version="0.90-2",\n py_version="py3",\n role=role,\n)\n\ninputs = {"train": train_input_data, "validation": val_input_data}\n\nestimator.fit(inputs, job_name=job_name)\n</code></pre>\n<h3><a id=\"Next_steps_152\"></a><strong>Next steps</strong></h3>\n<p>You can make incremental updates to the solution to address requirements around data updates and model retraining, automatic deletion of intermediate data in Amazon S3, and integrating a feature store. 
We discuss each of these in more detail in the following sections.</p>\n<h3><a id=\"Data_updates_and_model_retraining_triggers_156\"></a><strong>Data updates and model retraining triggers</strong></h3>\n<p>The following diagram illustrates the process to update the training data and trigger model retraining.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/083239a1aaa34a709ad03ecd8984ae5f_image.png\" alt=\"image.png\" /></p>\n<p>The process includes the following steps:</p>\n<ol>\n<li>The data producer updates the data product with either a new schema or additional data at a regular frequency.</li>\n<li>After the data product is re-registered in the central data catalog, Lake Formation generates an <a href=\"http://aws.amazon.com/cloudwatch\" target=\"_blank\">Amazon CloudWatch</a> event.</li>\n<li>The CloudWatch event triggers an <a href=\"http://aws.amazon.com/lambda\" target=\"_blank\">AWS Lambda</a> function to synchronize the updated data product with the consumer account. You can use this trigger to reflect the data changes by doing the following:<br />\na. Rerun the AWS Glue crawler.<br />\nb. Trigger model retraining if the data drifts beyond a given threshold.</li>\n</ol>\n<p>For more details about setting up a SageMaker MLOps deployment pipeline for drift detection, refer to the <a href=\"https://github.com/aws-samples/amazon-sagemaker-drift-detection\" target=\"_blank\">Amazon SageMaker Drift Detection</a> GitHub repo.</p>\n<h3><a id=\"Autodeletion_of_intermediate_data_in_Amazon_S3_172\"></a><strong>Auto-deletion of intermediate data in Amazon S3</strong></h3>\n<p>You can automatically delete intermediate data that is generated by Athena queries and stored in Amazon S3 in the consumer account at regular intervals with S3 object lifecycle rules. 
For more information, refer to <a href=\"https://docs.aws.amazon.com/AmazonS3/latest/userguide/object-lifecycle-mgmt.html\" target=\"_blank\">Managing your storage lifecycle</a>.</p>\n<h3><a id=\"SageMaker_Feature_Store_integration_176\"></a><strong>SageMaker Feature Store integration</strong></h3>\n<p><a href=\"https://aws.amazon.com/sagemaker/feature-store/\" target=\"_blank\">SageMaker Feature Store</a> is purpose-built for ML and can store, discover, and share curated features used in training and prediction workflows. A feature store can work as a centralized interface between different data producer teams and LoBs, enabling feature discoverability and reusability to multiple consumers. The feature store can act as an alternative to the central data catalog in the data mesh architecture described earlier. For more information about cross-account architecture patterns, refer to <a href=\"https://aws.amazon.com/blogs/machine-learning/enable-feature-reuse-across-accounts-and-teams-using-amazon-sagemaker-feature-store/\" target=\"_blank\">Enable feature reuse across accounts and teams using Amazon SageMaker Feature Store</a>.</p>\n<p>Refer to the <a href=\"https://aws.amazon.com/blogs/machine-learning/mlops-foundation-roadmap-for-enterprises-with-amazon-sagemaker/\" target=\"_blank\">MLOps foundation roadmap for enterprises with Amazon SageMaker</a> blog post to find out more about building an MLOps foundation based on the MLOps maturity model.</p>\n<h3><a id=\"Conclusion_183\"></a><strong>Conclusion</strong></h3>\n<p>In this two-part series, we showcased how you can build and train ML models with a multi-account data mesh architecture on AWS. We described the requirements of a typical financial services organization with multiple LoBs and an ML CoE, and illustrated the solution architecture with Lake Formation and SageMaker. 
We used the example of a credit risk data product registered in Lake Formation by the consumer banking LoB and accessed by the ML CoE team to train a credit risk ML model with SageMaker.</p>\n<p>Each data producer account defines data products that are curated by people who understand the data and its access control, use, and limitations. The data products and the application domains that consume them are interconnected to form the data mesh. The data mesh architecture allows the ML teams to discover and access these curated data products.</p>\n<p>Lake Formation allows cross-account access to Data Catalog metadata and underlying data. You can use Lake Formation to create a multi-account data mesh architecture. SageMaker provides an ML platform with key capabilities around data management, data science experimentation, model training, model hosting, workflow automation, and CI/CD pipelines for productionization. You can set up one or more analytics and ML CoE environments to build and train models with data products registered across multiple accounts in a data mesh.</p>\n<p>Try out the <a href=\"http://aws.amazon.com/cloudformation\" target=\"_blank\">AWS CloudFormation</a> templates and code from the example <a href=\"https://github.com/aws-samples/amazon-sagemaker-lakeformation-datamesh\" target=\"_blank\">repository</a> to get started.</p>\n<h3><a id=\"About_the_authors_193\"></a><strong>About the authors</strong></h3>\n<p><img src=\"https://dev-media.amazoncloud.cn/d607cbea36c34196bc5d2691debdddb2_image.png\" alt=\"image.png\" /></p>\n<p><strong>Karim Hammouda</strong> is a Specialist Solutions Architect for Analytics at AWS with a passion for data integration, data analysis, and BI. He works with AWS customers to design and build analytics solutions that contribute to their business growth. 
In his free time, he likes to watch TV documentaries and play video games with his son.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/791d5eb749414d18b1a378a16e207ce3_image.png\" alt=\"image.png\" /></p>\n<p><strong>Hasan Poonawala</strong> is a Senior AI/ML Specialist Solutions Architect at AWS. Hasan helps customers design and deploy machine learning applications in production on AWS. He has over 12 years of work experience as a data scientist, machine learning practitioner, and software developer. In his spare time, Hasan loves to explore nature and spend time with friends and family.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/80f3a6ff789b4954b09d811d8eec649d_image.png\" alt=\"image.png\" /></p>\n<p><strong>Benoit de Patoul</strong> is an AI/ML Specialist Solutions Architect at AWS. He helps customers by providing guidance and technical assistance to build solutions related to AI/ML using AWS. In his free time, he likes to play piano and spend time with friends.</p>\n"}

Build and train ML models using a data mesh architecture on Amazon: Part 2
