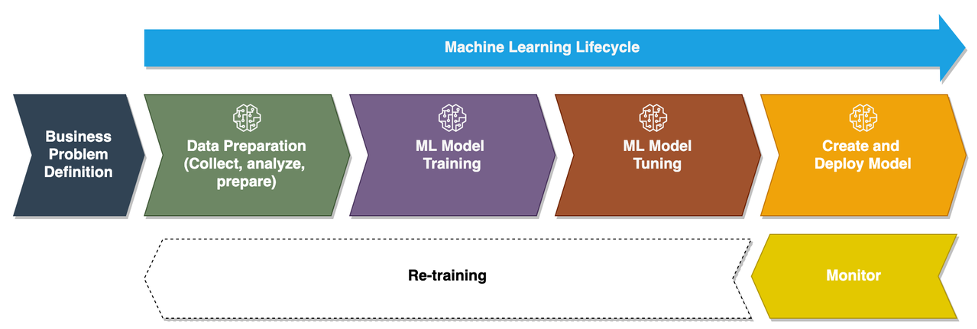

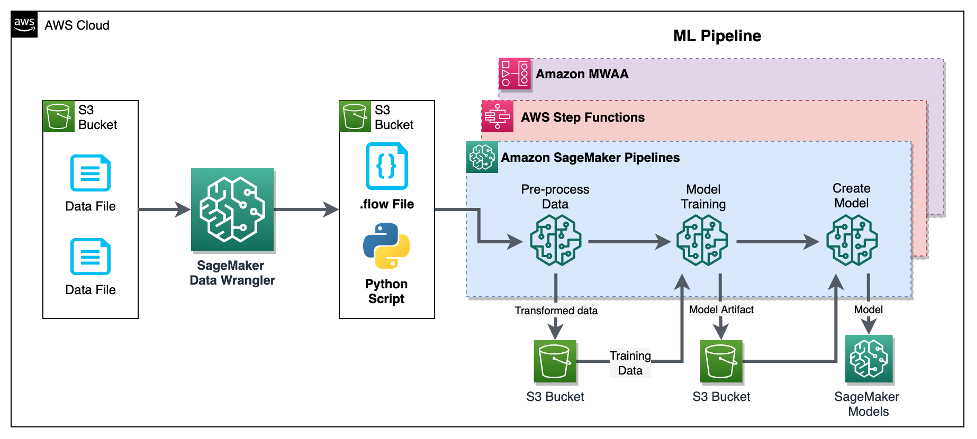

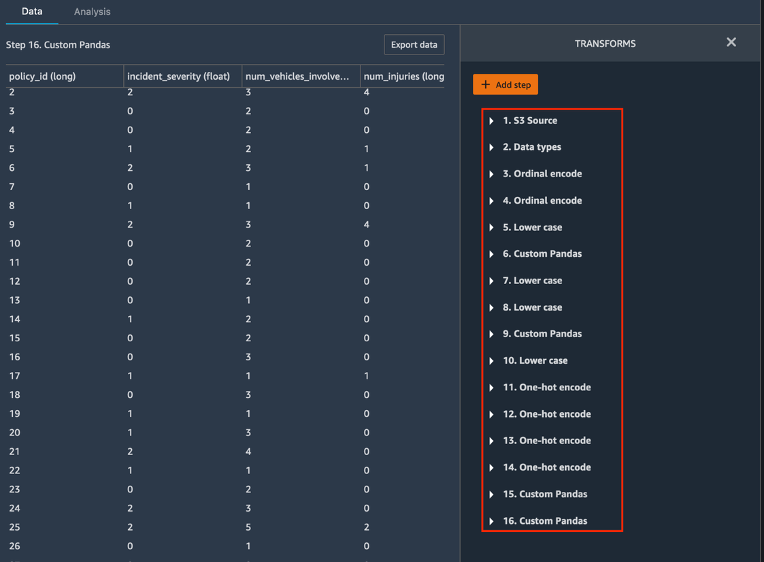

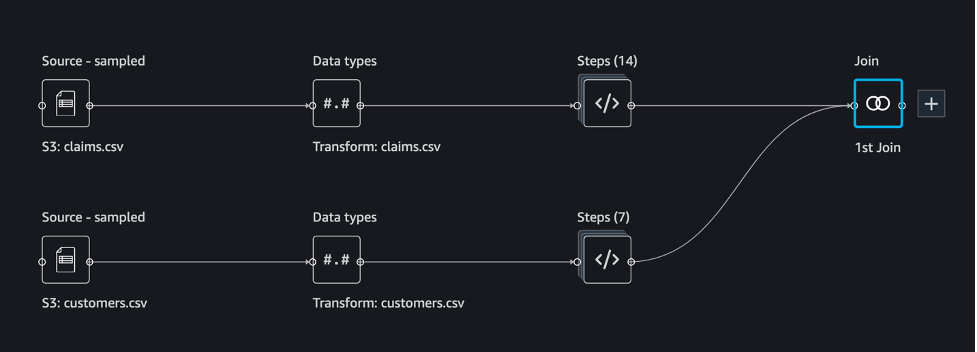

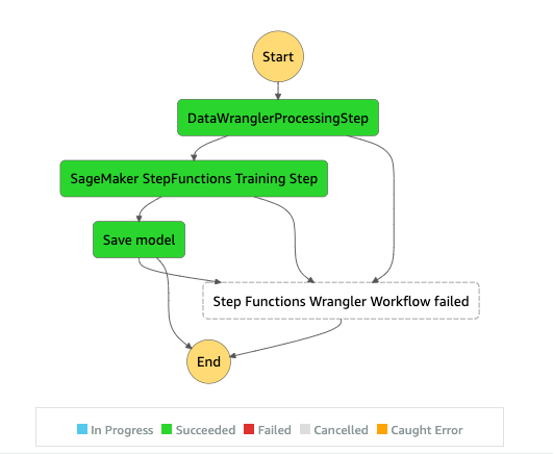

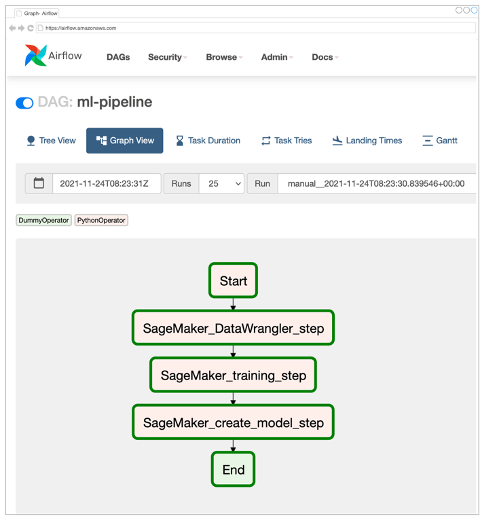

{"value":"\n\nData scientists and ML engineers spend roughly 70–80% of their time collecting, analyzing, cleaning, and transforming the data required for model training. [Amazon SageMaker Data Wrangler](https://aws.amazon.com/sagemaker/data-wrangler/) is a fully managed capability of [Amazon SageMaker](https://aws.amazon.com/sagemaker/) that helps data scientists and ML engineers analyze and prepare data for their ML projects faster, with little to no code. When it comes to operationalizing an end-to-end ML lifecycle, data preparation is almost always the first step. Because there are many ways to build an end-to-end ML pipeline, in this post we discuss how to integrate Data Wrangler with some well-known workflow automation and orchestration technologies.\n\n### **Solution overview**\n\nIn this post, we demonstrate how to integrate data preparation using Data Wrangler with [Amazon SageMaker Pipelines](https://aws.amazon.com/sagemaker/pipelines/), [AWS Step Functions](https://aws.amazon.com/step-functions/), and [Apache Airflow](https://airflow.apache.org/) with [Amazon Managed Workflows for Apache Airflow](https://aws.amazon.com/managed-workflows-for-apache-airflow/) (Amazon MWAA). Pipelines is a SageMaker feature that provides a purpose-built, easy-to-use continuous integration and continuous delivery (CI/CD) service for ML. Step Functions is a serverless, low-code visual workflow service used to orchestrate AWS services and automate business processes. Amazon MWAA is a managed orchestration service for Apache Airflow that makes it easier to operate end-to-end data and ML pipelines.\n\nFor demonstration purposes, we consider a use case in which we prepare data to train an ML model with the SageMaker built-in [XGBoost algorithm](https://docs.aws.amazon.com/sagemaker/latest/dg/xgboost.html) to identify fraudulent vehicle insurance claims. 
We use a synthetically generated sample dataset to train the model, and then create a SageMaker model from the model artifacts produced by training. Our goal is to operationalize this process end to end by setting up an ML workflow. Although ML workflows can be more elaborate, we use a minimal workflow for demonstration purposes. The first step of the workflow is data preparation with Data Wrangler, followed by a model training step, and finally a model creation step. The following diagram illustrates our solution workflow.\n\n\n\nIn the following sections, we walk you through how to set up a Data Wrangler flow and integrate Data Wrangler with Pipelines, Step Functions, and Apache Airflow.\n\n### **Set up a Data Wrangler flow**\n\nWe start by creating a Data Wrangler flow, also called a data flow, using the [data flow UI](https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler-data-flow.html#data-wrangler-data-flow-ui) in the [Amazon SageMaker Studio](https://aws.amazon.com/sagemaker/studio/) IDE. Our sample dataset consists of two data files, [claims.csv](https://github.com/aws-samples/sm-data-wrangler-mlops-workflows/blob/main/data/claims.csv) and [customers.csv](https://github.com/aws-samples/sm-data-wrangler-mlops-workflows/blob/main/data/customers.csv), which are stored in an [Amazon Simple Storage Service](https://aws.amazon.com/s3/) (Amazon S3) [bucket](https://docs.aws.amazon.com/AmazonS3/latest/userguide/creating-buckets-s3.html). We use the data flow UI to apply Data Wrangler’s [built-in transformations](https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler-transform.html), such as categorical encoding, string formatting, and imputation, to the feature columns in each of these files. 
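To illustrate what two of these built-in transforms do to a column, the following is a minimal plain-Python sketch of mean imputation and one-hot (categorical) encoding. It is not Data Wrangler's implementation, which you configure in the UI; the column names (`collision_type`, `vehicle_claim`) and the values are hypothetical.

```python
# Illustrative sketch only: plain-Python versions of two transforms that Data Wrangler
# offers as built-in steps. Column names and values are hypothetical.
from statistics import mean


def impute_mean(rows, column):
    """Replace missing (None) values in `column` with the mean of the present values."""
    present = [row[column] for row in rows if row[column] is not None]
    fill = mean(present)
    return [{**row, column: fill if row[column] is None else row[column]} for row in rows]


def one_hot_encode(rows, column):
    """Replace a categorical `column` with one 0/1 indicator column per category."""
    categories = sorted({row[column] for row in rows})
    encoded = []
    for row in rows:
        new_row = {k: v for k, v in row.items() if k != column}
        for category in categories:
            new_row[f"{column}_{category}"] = int(row[column] == category)
        encoded.append(new_row)
    return encoded


claims = [
    {"policy_id": 1, "collision_type": "front", "vehicle_claim": 1000.0},
    {"policy_id": 2, "collision_type": "rear", "vehicle_claim": None},
    {"policy_id": 3, "collision_type": "front", "vehicle_claim": 3000.0},
]

# Impute first, then encode, producing rows ready for a numeric model such as XGBoost.
cleaned = one_hot_encode(impute_mean(claims, "vehicle_claim"), "collision_type")
for row in cleaned:
    print(row)
```

The missing claim amount is filled with the mean of the other two rows, and `collision_type` becomes two indicator columns.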
We also apply [custom transformations](https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler-transform.html#data-wrangler-transform-custom) to a few feature columns, using a few lines of Python code that operate on a [pandas DataFrame](https://pandas.pydata.org/docs/reference/api/pandas.DataFrame.html). The following screenshot shows the transforms applied to the claims.csv file in the data flow UI.\n\n\n\nFinally, we join the transformed outputs of the two data files into a single dataset for model training. We use Data Wrangler’s built-in [join datasets](https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler-transform.html#data-wrangler-transform-join) capability, which lets us perform SQL-like join operations on tabular data. The following screenshot shows the complete data flow in the Studio data flow UI. For step-by-step instructions to create the data flow using Data Wrangler, refer to the [GitHub repository](https://github.com/aws-samples/sm-data-wrangler-mlops-workflows).\n\n\n\nWe can now use the data flow (.flow) file to apply these transformations to our raw data files. The data flow UI can automatically generate Python notebooks that integrate directly with Pipelines through the SageMaker Python SDK. For Step Functions, we use the [AWS Step Functions Data Science Python SDK](https://aws-step-functions-data-science-sdk.readthedocs.io/en/stable/) to integrate our Data Wrangler processing into a Step Functions workflow. For Amazon MWAA, we use a [custom Airflow operator](https://airflow.apache.org/docs/apache-airflow/stable/howto/custom-operator.html) and the [Airflow SageMaker operators](https://sagemaker.readthedocs.io/en/stable/workflows/airflow/using_workflow.html). 
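The join datasets step combines the transformed claims and customer data into one training table. As a rough illustration of what an SQL-like inner join does, the following sketch implements one as a hash join in plain Python. This is not how Data Wrangler performs the join internally; the join key `policy_id` and the other columns are hypothetical stand-ins for whatever key links claims.csv and customers.csv.

```python
# Illustrative sketch only: an SQL-like inner join of two tables on a shared key,
# implemented as a hash join. The key and column names are hypothetical.


def inner_join(left, right, key):
    """Return one merged row for every pair of left/right rows that share `key`."""
    # Build phase: index the right-hand table by join key.
    index = {}
    for row in right:
        index.setdefault(row[key], []).append(row)
    # Probe phase: look up each left-hand row; rows with no match are dropped.
    joined = []
    for left_row in left:
        for right_row in index.get(left_row[key], []):
            joined.append({**left_row, **right_row})
    return joined


claims = [
    {"policy_id": 1, "vehicle_claim": 1000.0, "fraud": 0},
    {"policy_id": 2, "vehicle_claim": 2500.0, "fraud": 1},
    {"policy_id": 4, "vehicle_claim": 700.0, "fraud": 0},  # no matching customer
]
customers = [
    {"policy_id": 1, "customer_age": 34},
    {"policy_id": 2, "customer_age": 51},
    {"policy_id": 3, "customer_age": 29},  # no matching claim
]

training_rows = inner_join(claims, customers, "policy_id")
print(training_rows)
```

Rows without a match on both sides (policy IDs 3 and 4 here) are dropped, which is the defining behavior of an inner join; a left or outer join would keep them with missing values instead.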
We discuss each of these approaches in detail in the following sections.\n\n### **Integrate Data Wrangler with Pipelines**\n\nSageMaker Pipelines is a native workflow orchestration tool for building ML pipelines that take advantage of direct SageMaker integration. Along with the [SageMaker model registry](https://docs.aws.amazon.com/sagemaker/latest/dg/model-registry.html), Pipelines improves the operational resilience and reproducibility of your ML workflows. These workflow automation components help you scale to building, training, testing, and deploying hundreds of models in production; iterate faster; reduce errors caused by manual orchestration; and build repeatable mechanisms. Pipelines can track the lineage of each step, and intermediate steps can be cached so that reruns complete faster. You can [create pipelines using the SageMaker Python SDK](https://sagemaker.readthedocs.io/en/stable/workflows/pipelines/sagemaker.workflow.pipelines.html).\n\nA workflow built with SageMaker Pipelines consists of a sequence of steps forming a directed acyclic graph (DAG). In this example, we begin with a [processing step](https://docs.aws.amazon.com/sagemaker/latest/dg/build-and-manage-steps.html#step-type-processing), which runs a [SageMaker Processing job](https://docs.aws.amazon.com/sagemaker/latest/dg/processing-job.html) based on the Data Wrangler flow file to create a training dataset. We then continue with a [training step](https://docs.aws.amazon.com/sagemaker/latest/dg/build-and-manage-steps.html#step-type-training), where we train an XGBoost model using SageMaker’s built-in XGBoost algorithm and the training dataset created in the previous step. 
After the model is trained, we end the workflow with a [RegisterModel step](https://docs.aws.amazon.com/sagemaker/latest/dg/build-and-manage-steps.html#step-type-register-model) that registers the trained model in the SageMaker model registry.\n\n\n\n#### **Installation and walkthrough**\n\nTo run this sample, we use a Jupyter notebook running Python 3 on the Data Science kernel image in Studio. You can also run it in a local Jupyter environment on your machine by setting up credentials that assume the SageMaker execution role. The notebook is lightweight and can run on an ml.t3.medium instance. Detailed step-by-step instructions are available in the [GitHub repository](https://github.com/aws-samples/sm-data-wrangler-mlops-workflows).\n\nYou can either use the export feature in Data Wrangler to generate the Pipelines code, or build your own script from scratch. In our sample repository, we use a combination of both approaches for simplicity. At a high level, the following are the steps to build and run the Pipelines workflow:\n\n1. Generate a flow file from Data Wrangler, or use the setup script to generate a flow file from a preconfigured template.\n2. Create an S3 bucket and upload your flow file and input files to the bucket. In our sample notebook, we use the SageMaker default S3 bucket.\n3. Follow the instructions in the notebook to create a Processor object based on the Data Wrangler flow file, and an Estimator object with the parameters of the training job.\na. In our example, because we only use SageMaker features and the default S3 bucket, we can use Studio’s default execution role. The same [AWS Identity and Access Management](http://aws.amazon.com/iam) (IAM) role is assumed by the pipeline run, the processing job, and the training job. You can further scope down the execution role by following the principle of least privilege.\n4. 
Continue following the instructions to create a pipeline whose steps reference the Processor and Estimator objects, and then run the pipeline. The processing and training jobs run in SageMaker-managed environments and take a few minutes to complete.\n5. In Studio, you can view the pipeline details and monitor the pipeline run. You can also monitor the underlying processing and training jobs from the SageMaker console or from [Amazon CloudWatch](http://aws.amazon.com/cloudwatch).\n\n### **Integrate Data Wrangler with Step Functions**\n\nWith Step Functions, you can express complex business logic as low-code, event-driven workflows that connect different AWS services. The [Step Functions Data Science SDK](https://aws-step-functions-data-science-sdk.readthedocs.io/en/stable/) is an open-source library that allows data scientists to create workflows that preprocess datasets and build, deploy, and monitor ML models using SageMaker and Step Functions. Step Functions is based on state machines and tasks: it builds workflows out of steps called [states](https://docs.aws.amazon.com/step-functions/latest/dg/concepts-states.html), and expresses each workflow in the [Amazon States Language](https://docs.aws.amazon.com/step-functions/latest/dg/concepts-amazon-states-language.html). When you create a workflow with the Step Functions Data Science SDK, it creates a Step Functions state machine that represents your workflow and its steps.\n\nFor this use case, we built a Step Functions workflow that follows the common pattern used in this post: a processing step, a training step, and a model creation step. We import these steps from the Step Functions Data Science Python SDK and chain them in that order to create the workflow. The workflow uses the flow file generated from Data Wrangler, but you can also use your own Data Wrangler flow file. 
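Under the hood, chained steps become a state machine expressed in the Amazon States Language. The following sketch, assuming simplified and hypothetical state names (`DataWranglerProcessing`, `TrainXGBoost`, `CreateModel`, `WorkflowFailed`), builds the general shape of such a definition: three sequential `Task` states that call the Step Functions SageMaker service integrations, each with a `Catch` that routes any error to a `Fail` state. It deliberately omits the `Parameters` block each task needs (job configuration, S3 paths, roles), which the Step Functions Data Science SDK generates for you, so it is not a deployable definition.

```python
# Simplified sketch of a sequential Amazon States Language (ASL) definition.
# State names are hypothetical; a real definition also needs a "Parameters"
# block for each Task (job names, container images, S3 paths, IAM roles).
import json

SAGEMAKER_TASKS = [
    ("DataWranglerProcessing", "arn:aws:states:::sagemaker:createProcessingJob.sync"),
    ("TrainXGBoost", "arn:aws:states:::sagemaker:createTrainingJob.sync"),
    ("CreateModel", "arn:aws:states:::sagemaker:createModel"),
]


def build_chain_definition(tasks, fail_state="WorkflowFailed"):
    """Chain Task states in order; an error in any task jumps to a Fail state."""
    states = {}
    for i, (name, resource) in enumerate(tasks):
        state = {
            "Type": "Task",
            "Resource": resource,
            "Catch": [{"ErrorEquals": ["States.ALL"], "Next": fail_state}],
        }
        if i + 1 < len(tasks):
            state["Next"] = tasks[i + 1][0]  # continue with the following step
        else:
            state["End"] = True  # the last step ends the execution
        states[name] = state
    states[fail_state] = {
        "Type": "Fail",
        "Error": "WorkflowFailed",
        "Cause": "A SageMaker step failed",
    }
    return {"StartAt": tasks[0][0], "States": states}


definition = build_chain_definition(SAGEMAKER_TASKS)
print(json.dumps(definition, indent=2))
```

The `.sync` suffix makes Step Functions wait for the processing and training jobs to finish before moving to the next state, which is what keeps the steps strictly sequential.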
We reuse some code from the Data Wrangler [export feature](https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler-data-export.html) for simplicity. We run the data preprocessing logic generated from the Data Wrangler flow file to create a training dataset, train a model using the XGBoost algorithm, and save the trained model artifact as a SageMaker model. The GitHub repo also shows how Step Functions lets us catch errors and handle failures and retries, using ```FailStateStep``` and ```CatchStateStep```.\n\nThe resulting workflow diagram, shown in the following screenshot, is available on the Step Functions console after the workflow starts. It helps data scientists and engineers visualize the entire workflow and each step within it, and access the linked CloudWatch logs for every step.\n\n\n\n#### **Installation and walkthrough**\n\nTo run this sample, we use a Jupyter notebook running the Data Science kernel in Studio. You can also run it in a local Jupyter environment on your machine by setting up credentials that assume the SageMaker execution role. The notebook is lightweight and can run on a small instance such as ml.t3.medium. Detailed step-by-step instructions are available in the [GitHub repository](https://github.com/aws-samples/sm-data-wrangler-mlops-workflows).\n\nYou can either use the export feature in Data Wrangler to generate the Pipelines code and modify it for Step Functions, or build your own script from scratch. In our sample repository, we use a combination of both approaches for simplicity. At a high level, the following are the steps to build and run the Step Functions workflow:\n\n1. Generate a flow file from Data Wrangler, or use the setup script to generate a flow file from a preconfigured template.\n2. Create an S3 bucket and upload your flow file and input files to the bucket.\n3. Configure your SageMaker execution role with the required permissions, as mentioned earlier. 
Refer to the GitHub repository for detailed instructions.\n4. Run the notebook in the repository, following its instructions, to start a workflow. The processing job runs in a SageMaker-managed Spark environment and can take a few minutes to complete.\n5. Go to the Step Functions console and track the workflow visually. You can also open the linked CloudWatch logs to debug errors.\n\nLet’s review some important sections of the code. To define the workflow, we first define its steps for the Step Functions state machine. The first step, ```data_wrangler_step```, performs data processing and uses the Data Wrangler flow file as input to transform the raw data files. We also define a model training step and a model creation step, named ```training_step``` and ```model_step```, respectively. Finally, we create a workflow by chaining these steps, as shown in the following code:\n\n```\nimport uuid\n\nfrom stepfunctions.steps import Chain\nfrom stepfunctions.workflow import Workflow\n\n# data_wrangler_step, training_step, model_step, and iam_role are defined earlier in the notebook.\n# Chain links the steps so they run in order: processing, then training, then model creation.\nworkflow_graph = Chain([data_wrangler_step, training_step, model_step])\n\nbranching_workflow = Workflow(\n    name=\"Wrangler-SF-Run-{}\".format(uuid.uuid1().hex),  # unique state machine name\n    definition=workflow_graph,\n    role=iam_role,  # IAM role that Step Functions assumes to run the workflow\n)\nbranching_workflow.create()  # creates the state machine in Step Functions\n```\n\nWe built the workflow to take job names as input parameters because SageMaker job and model names must be unique, so new names have to be generated for every run. We pass these names when we start the workflow. 
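Because the same workflow runs repeatedly, each run needs fresh names. The following sketch generates per-run names and builds an `inputs` dictionary with the same keys this post passes to `execute()`. The helper `unique_name` and the name prefixes are hypothetical, and the 63-character limit and letters, digits, and hyphens pattern are assumptions based on SageMaker's naming rules for processing jobs, training jobs, and models.

```python
# Sketch: build unique, per-run names for the SageMaker resources the workflow creates.
# The helper and prefixes are hypothetical; the 63-character limit and the
# letters/digits/hyphens pattern are assumed from SageMaker's naming rules.
import re
import uuid

NAME_PATTERN = re.compile(r"^[a-zA-Z0-9](-*[a-zA-Z0-9]){0,62}$")


def unique_name(prefix, max_len=63):
    """Append a random hex suffix so every run gets a fresh, valid name."""
    suffix = uuid.uuid4().hex[:12]
    # Truncate the prefix so prefix + "-" + suffix never exceeds max_len.
    name = f"{prefix[: max_len - len(suffix) - 1]}-{suffix}"
    if not NAME_PATTERN.match(name):
        raise ValueError(f"invalid SageMaker resource name: {name}")
    return name


inputs = {
    "ProcessingJobName": unique_name("dw-processing"),
    "TrainingJobName": unique_name("xgb-training"),
    "ModelName": unique_name("fraud-model"),
}
print(inputs)
```

Under these assumptions, the resulting dictionary could be passed as `branching_workflow.execute(inputs=inputs)`.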
You can also schedule the Step Functions workflow to run using CloudWatch (see [Schedule a Serverless Workflow with AWS Step Functions and Amazon CloudWatch](https://aws.amazon.com/getting-started/hands-on/scheduling-a-serverless-workflow-step-functions-cloudwatch-events/)), start it in response to [Amazon S3 events](https://docs.aws.amazon.com/step-functions/latest/dg/tutorial-cloudwatch-events-s3.html), or start it from [Amazon EventBridge](https://aws.amazon.com/eventbridge/) (see [Create an EventBridge rule that triggers a Step Functions workflow](https://serverlessland.com/patterns/eventbridge-sfn)). For demonstration purposes, we start the Step Functions workflow from the Step Functions console or with the following code from the notebook.\n\n```\n# Start the workflow, passing unique names for the resources it creates\nexecution = branching_workflow.execute(\n    inputs={\n        \"ProcessingJobName\": processing_job_name,  # Unique processing job name\n        \"TrainingJobName\": training_job_name,  # Unique training job name\n        \"ModelName\": model_name,  # Unique model name\n    }\n)\n# Wait for the execution to finish and retrieve its output\nexecution_output = execution.get_output(wait=True)\n```\n\n### **Integrate Data Wrangler with Apache Airflow**\n\n[Apache Airflow](https://airflow.apache.org/) is an open-source platform that allows you to programmatically author, schedule, and monitor workflows. Amazon MWAA makes it easy to set up and operate end-to-end ML pipelines with Apache Airflow in the cloud at scale. An Airflow pipeline, also referred to as a workflow, consists of a sequence of tasks. A workflow is defined as a DAG that encapsulates the tasks and the dependencies between them, which determine how the tasks run within the workflow.\n\nWe created an Airflow DAG within an Amazon MWAA environment to implement our MLOps workflow. Each task in the workflow is an executable unit, written in Python, that performs some action. A task can be either an operator or a sensor. 
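An Airflow DAG encodes which tasks must finish before others can start. As an Airflow-free illustration, the following sketch uses Python's standard `graphlib.TopologicalSorter` (Python 3.9 or later) to derive a valid run order for the four named tasks in our DAG: `Start`, `SageMaker_DataWrangler_Step`, `SageMaker_training_step`, and `SageMaker_create_model_step`. In an actual DAG file, you would express the same dependencies with Airflow's bitshift syntax, for example `start >> preprocess_task >> train_task >> create_model_task` (all but `preprocess_task` are hypothetical variable names).

```python
# Sketch: model the DAG's task dependencies and derive a valid run order.
# This does not use Airflow; it only illustrates how a DAG orders its tasks.
from graphlib import TopologicalSorter

# Each task maps to the set of upstream tasks that must finish first.
dependencies = {
    "Start": set(),
    "SageMaker_DataWrangler_Step": {"Start"},
    "SageMaker_training_step": {"SageMaker_DataWrangler_Step"},
    "SageMaker_create_model_step": {"SageMaker_training_step"},
}

run_order = list(TopologicalSorter(dependencies).static_order())
print(" >> ".join(run_order))
```

Adding an edge from `SageMaker_create_model_step` back to `Start` would make `static_order()` raise `graphlib.CycleError`, which is why workflows are required to be acyclic.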
In our case, we use an [Airflow Python operator](https://airflow.apache.org/docs/apache-airflow/stable/howto/operator/python.html) along with the [SageMaker Python SDK](https://sagemaker.readthedocs.io/en/stable/) to run the Data Wrangler Python script, and we use Airflow’s natively supported [SageMaker operators](https://sagemaker.readthedocs.io/en/stable/workflows/airflow/using_workflow.html) to train a model with the SageMaker built-in XGBoost algorithm and create a SageMaker model from the resulting artifacts. We also created a custom Data Wrangler operator (```SageMakerDataWranglerOperator```) for Apache Airflow, which you can use to process Data Wrangler flow files without writing any additional code.\n\nThe following screenshot shows the five-step Airflow DAG that implements our MLOps workflow.\n\n\n\nThe Start step uses a Python operator to initialize configurations for the rest of the steps in the workflow. ```SageMaker_DataWrangler_Step``` uses ```SageMakerDataWranglerOperator``` and the data flow file we created earlier. ```SageMaker_training_step``` and ```SageMaker_create_model_step``` use the built-in SageMaker operators for model training and model creation, respectively. Our Amazon MWAA environment uses the smallest environment class (mw1.small), because the bulk of the processing is done by Processing jobs, which run on their own instance types that you can define as configuration parameters within the workflow.\n\n#### **Installation and walkthrough**\n\nDetailed step-by-step instructions to deploy this solution are available in our [GitHub repository](https://github.com/aws-samples/sm-data-wrangler-mlops-workflows/blob/main/3-apache-airflow-pipelines/README.md#getting-started). We used a Jupyter notebook with Python code cells to set up the Airflow DAG. Assuming you have already generated the data flow file, the following is a high-level overview of the installation steps:\n\n1. 
[Create an S3 bucket](https://docs.aws.amazon.com/mwaa/latest/userguide/mwaa-s3-bucket.html) and the folders required by Amazon MWAA.\n2. [Create an Amazon MWAA environment](https://docs.aws.amazon.com/mwaa/latest/userguide/create-environment.html). Note that we used Airflow version 2.0.2 for this solution.\n3. Create a ```requirements.txt``` file that lists all the Python dependencies required by the Airflow tasks, and upload it to the ```/requirements``` directory in the Amazon MWAA primary S3 bucket. The managed Airflow environment uses this file to install the Python dependencies.\n4. Upload the ```SMDataWranglerOperator.py``` file to the ```/dags``` directory. This Python script contains the code for the custom Data Wrangler Airflow operator, which tasks can use to process any .flow file.\n5. Create the ```config.py``` script and upload it to the ```/dags``` directory. The first step of our DAG uses this script to create the configuration objects required by the remaining steps of the workflow.\n6. Finally, create the ```ml_pipelines.py``` file and upload it to the ```/dags``` directory. This script contains the DAG definition for the Airflow workflow; this is where we define each task and set up the dependencies between them. Amazon MWAA periodically picks up the contents of the ```/dags``` directory and uses this script to create the DAG, or to update the existing DAG with the latest changes.\n\nThe following is the code for ```SageMaker_DataWrangler_Step```, which uses the custom ```SageMakerDataWranglerOperator```. With just a few lines of code in your DAG definition script, you can point ```SageMakerDataWranglerOperator``` to the S3 location of the Data Wrangler flow file. 
Behind the scenes, this operator uses [SageMaker Processing jobs](https://sagemaker-examples.readthedocs.io/en/latest/sagemaker_processing/scikit_learn_data_processing_and_model_evaluation/scikit_learn_data_processing_and_model_evaluation.html) to process the .flow file and apply the defined transforms to your raw data files. You can also specify the [instance type](https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler-data-flow.html) and the number of instances for the Data Wrangler processing job.\n\n```\n# Custom Airflow operator for Data Wrangler, from SMDataWranglerOperator.py in the /dags directory\nfrom SMDataWranglerOperator import SageMakerDataWranglerOperator\n\n# dag, flow_uri, and config are defined elsewhere in the DAG definition script\npreprocess_task = SageMakerDataWranglerOperator(\n    task_id='DataWrangler_Processing_Step',\n    dag=dag,\n    flow_file_s3uri=flow_uri,  # S3 URI of the .flow file\n    processing_instance_count=2,  # number of processing instances\n    instance_type='ml.m5.4xlarge',  # processing instance type\n    aws_conn_id=\"aws_default\",  # Airflow connection used for AWS credentials\n    config=config,  # additional processing job settings\n)\n```\n\nThe ```config``` parameter accepts a dictionary (key-value pairs) of additional settings required by the processing job, such as the output prefix of the final output file, the output file type (CSV or Parquet), and the URI of the built-in Data Wrangler container image. The following code shows the structure of the ```config``` dictionary for ```SageMakerDataWranglerOperator```. These settings are required to configure a SageMaker Processing [processor](https://sagemaker.readthedocs.io/en/stable/api/training/processing.html#module-sagemaker.processing). 
For details on each of these config parameters, refer to [sagemaker.processing.Processor()](https://sagemaker.readthedocs.io/en/stable/api/training/processing.html#sagemaker.processing.Processor).\n\n```\n{\n    \"sagemaker_role\": \"<SageMaker IAM role name or ARN>\",  # required\n    \"s3_data_type\": \"S3Prefix\",  # optional; defaults to \"S3Prefix\"\n    \"s3_input_mode\": \"File\",  # optional; defaults to \"File\"\n    \"s3_data_distribution_type\": \"FullyReplicated\",  # optional; defaults to \"FullyReplicated\"\n    \"kms_key\": None,  # optional; defaults to None\n    \"volume_size_in_gb\": 30,  # optional; defaults to 30\n    \"enable_network_isolation\": False,  # optional; defaults to False\n    \"wait_for_processing\": True,  # optional; defaults to True\n    # \"container_uri\": ...,  # optional; defaults to the built-in container URI\n    # \"container_uri_pinned\": ...,  # optional; defaults to the built-in container URI\n    \"outputConfig\": {\n        \"s3_output_upload_mode\": \"EndOfJob\",  # optional; defaults to \"EndOfJob\"\n        \"output_content_type\": \"CSV\",  # optional; defaults to \"CSV\"\n        # \"output_bucket\": ...,  # optional; defaults to the SageMaker default bucket\n        \"output_prefix\": None,  # optional; defaults to None; prefix within the bucket where output is written\n    },\n}\n```\n\n### **Clean up**\n\nTo avoid incurring future charges, delete the resources created for the solutions you implemented.\n\n1. Follow these [instructions](https://github.com/aws-samples/sm-data-wrangler-mlops-workflows/blob/main/1-sagemaker-pipelines/README.md) provided in the GitHub repository to clean up resources created by the SageMaker Pipelines solution.\n2. Follow these [instructions](https://github.com/aws-samples/sm-data-wrangler-mlops-workflows/blob/main/2-step-functions-pipelines/README.md) provided in the GitHub repository to clean up resources created by the Step Functions solution.\n3. 
Follow these [instructions](https://github.com/aws-samples/sm-data-wrangler-mlops-workflows/blob/main/3-apache-airflow-pipelines/README.md) provided in the GitHub repository to clean up resources created by the Amazon MWAA solution.\n\n### **Conclusion**\n\nThis post demonstrated how to integrate Data Wrangler with some well-known workflow automation and orchestration technologies on AWS. We first reviewed a sample use case and the architecture of a solution that uses Data Wrangler for data preprocessing. We then demonstrated how to integrate Data Wrangler with Pipelines, Step Functions, and Amazon MWAA.\n\nAs a next step, you can try out the code samples and notebooks in our [GitHub repository](https://github.com/aws-samples/sm-data-wrangler-mlops-workflows), using the detailed instructions for each of the solutions discussed in this post. To learn more about how Data Wrangler can help your ML workloads, visit the Data Wrangler [product page](https://aws.amazon.com/sagemaker/data-wrangler/) and [Prepare ML Data with Amazon SageMaker Data Wrangler](https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler.html).\n\n### **About the authors**\n\n\n\n**Rodrigo Alarcon** is a Senior ML Strategy Solutions Architect with AWS, based in Santiago, Chile. In his role, Rodrigo helps companies of different sizes generate business outcomes through cloud-based AI and ML solutions. His interests include machine learning and cybersecurity.\n\n\n\n**Ganapathi Krishnamoorthi** is a Senior ML Solutions Architect at AWS. Ganapathi provides prescriptive guidance to startup and enterprise customers, helping them design and deploy cloud applications at scale. He specializes in machine learning and focuses on helping customers use AI/ML to achieve their business outcomes. 
When not at work, he enjoys exploring the outdoors and listening to music.\n\n\n\n**Anjan Biswas** is a Senior AI Services Solutions Architect with a focus on AI/ML and data analytics. Anjan is part of the worldwide AI services team and works with customers to help them understand and develop solutions to business problems with AI and ML. Anjan has over 14 years of experience working with global supply chain, manufacturing, and retail organizations, and is actively helping customers get started and scale on AWS AI services.","render":"<p><img src=\"https://dev-media.amazoncloud.cn/07115d07ccbc4ed88f722cd9204fb323_image.png\" alt=\"image.png\" /></p>\n<p>Data scientists and ML engineers spend somewhere between 70–80% of their time collecting, analyzing, cleaning, and transforming data required for model training. <a href=\"https://aws.amazon.com/sagemaker/data-wrangler/\" target=\"_blank\">Amazon SageMaker Data Wrangler</a> is a fully managed capability of <a href=\"https://aws.amazon.com/sagemaker/\" target=\"_blank\">Amazon SageMaker</a> that makes it faster for data scientists and ML engineers to analyze and prepare data for their ML projects with little to no code. When it comes to operationalizing an end-to-end ML lifecycle, data preparation is almost always the first step in the process. 
Given that there are many ways to build an end-to-end ML pipeline, in this post we discuss how you can easily integrate Data Wrangler with some of the well-known workflow automation and orchestration technologies.</p>\n<h3><a id=\"Solution_overview_4\"></a><strong>Solution overview</strong></h3>\n<p>In this post, we demonstrate how users can integrate data preparation using Data Wrangler with <a href=\"https://aws.amazon.com/sagemaker/pipelines/\" target=\"_blank\">Amazon SageMaker Pipelines</a>, <a href=\"https://aws.amazon.com/step-functions/\" target=\"_blank\">AWS Step Functions</a>, and <a href=\"https://airflow.apache.org/\" target=\"_blank\">Apache Airflow</a> with <a href=\"https://aws.amazon.com/managed-workflows-for-apache-airflow/\" target=\"_blank\">Amazon Managed Workflows for Apache Airflow</a> (Amazon MWAA). Pipelines is a SageMaker feature that is a purpose-built and easy-to-use continuous integration and continuous delivery (CI/CD) service for ML. Step Functions is a serverless, low-code visual workflow service used to orchestrate AWS services and automate business processes. Amazon MWAA is a managed orchestration service for Apache Airflow that makes it easier to operate end-to-end data and ML pipelines.</p>\n<p>For demonstration purposes, we consider a use case to prepare data to train an ML model with the SageMaker built-in <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/xgboost.html\" target=\"_blank\">XGBoost algorithm</a> that will help us identify fraudulent vehicle insurance claims. We used a synthetically generated set of sample data to train the model and create a SageMaker model using the model artifacts from the training process. Our goal is to operationalize this process end to end by setting up an ML workflow. Although ML workflows can be more elaborate, we use a minimal workflow for demonstration purposes. The first step of the workflow is data preparation with Data Wrangler, followed by a model training step, and finally a model creation step. 
The following diagram illustrates our solution workflow.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/13c2cc17037447ecb9c9b5b03cc3852e_image.png\" alt=\"image.png\" /></p>\n<p>In the following sections, we walk you through how to set up a Data Wrangler flow and integrate Data Wrangler with Pipelines, Step Functions, and Apache Airflow.</p>\n<h3><a id=\"Set_up_a_Data_Wrangler_flow_14\"></a><strong>Set up a Data Wrangler flow</strong></h3>\n<p>We start by creating a Data Wrangler flow, also called a data flow, using the <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler-data-flow.html#data-wrangler-data-flow-ui\" target=\"_blank\">data flow UI</a> via the <a href=\"https://aws.amazon.com/sagemaker/studio/\" target=\"_blank\">Amazon SageMaker Studio</a> IDE. Our sample dataset consists of two data files: <a href=\"https://github.com/aws-samples/sm-data-wrangler-mlops-workflows/blob/main/data/claims.csv\" target=\"_blank\">claims.csv</a> and <a href=\"https://github.com/aws-samples/sm-data-wrangler-mlops-workflows/blob/main/data/customers.csv\" target=\"_blank\">customers.csv</a>, which are stored in an <a href=\"https://aws.amazon.com/s3/\" target=\"_blank\">Amazon Simple Storage Service</a> (Amazon S3) <a href=\"https://docs.aws.amazon.com/AmazonS3/latest/userguide/creating-buckets-s3.html\" target=\"_blank\">bucket</a>. We use the data flow UI to apply Data Wrangler’s <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler-transform.html\" target=\"_blank\">built-in transformations</a>, such as categorical encoding, string formatting, and imputation, to the feature columns in each of these files. 
We also apply <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler-transform.html#data-wrangler-transform-custom\" target=\"_blank\">custom transformations</a> to a few feature columns using a few lines of custom Python code with a <a href=\"https://pandas.pydata.org/docs/reference/api/pandas.DataFrame.html\" target=\"_blank\">pandas DataFrame</a>. The following screenshot shows the transforms applied to the claims.csv file in the data flow UI.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/ff6e577e64434927bb7fa6b48551be20_image.png\" alt=\"image.png\" /></p>\n<p>Finally, we join the results of the applied transforms of the two data files to generate a single training dataset for our model training. We use Data Wrangler’s built-in <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler-transform.html#data-wrangler-transform-join\" target=\"_blank\">join datasets</a> capability, which lets us perform SQL-like join operations on tabular data. The following screenshot shows the data flow in the data flow UI in Studio. For step-by-step instructions to create the data flow using Data Wrangler, refer to the <a href=\"https://github.com/aws-samples/sm-data-wrangler-mlops-workflows\" target=\"_blank\">GitHub repository</a>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/f6fc5c59244042c6b40cbb5313da29ab_image.png\" alt=\"image.png\" /></p>\n<p>We can now use the data flow (.flow) file to perform data transformations on our raw data files. The data flow UI can automatically generate Python notebooks for us to use and integrate directly with Pipelines using the SageMaker SDK. For Step Functions, we use the <a href=\"https://aws-step-functions-data-science-sdk.readthedocs.io/en/stable/\" target=\"_blank\">AWS Step Functions Data Science Python SDK</a> to integrate our Data Wrangler processing with a Step Functions pipeline. 
For Amazon MWAA, we use a <a href=\"https://airflow.apache.org/docs/apache-airflow/stable/howto/custom-operator.html\" target=\"_blank\">custom Airflow operator</a> and the <a href=\"https://sagemaker.readthedocs.io/en/stable/workflows/airflow/using_workflow.html\" target=\"_blank\">Airflow SageMaker operator</a>. We discuss each of these approaches in detail in the following sections.</p>\n<h3><a id=\"Integrate_Data_Wrangler_with_Pipelines_26\"></a><strong>Integrate Data Wrangler with Pipelines</strong></h3>\n<p>SageMaker Pipelines is a native workflow orchestration tool for building ML pipelines that take advantage of direct SageMaker integration. Along with the <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/model-registry.html\" target=\"_blank\">SageMaker model registry</a>, Pipelines improves the operational resilience and reproducibility of your ML workflows. These workflow automation components enable you to easily scale your ability to build, train, test, and deploy hundreds of models in production; iterate faster; reduce errors due to manual orchestration; and build repeatable mechanisms. Each step in the pipeline can keep track of the lineage, and intermediate steps can be cached for quickly rerunning the pipeline. You can <a href=\"https://sagemaker.readthedocs.io/en/stable/workflows/pipelines/sagemaker.workflow.pipelines.html\" target=\"_blank\">create pipelines using the SageMaker Python SDK</a>.</p>\n<p>A workflow built with SageMaker Pipelines consists of a sequence of steps forming a Directed Acyclic Graph (DAG). In this example, we begin with a <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/build-and-manage-steps.html#step-type-processing\" target=\"_blank\">processing step</a>, which runs a <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/processing-job.html\" target=\"_blank\">SageMaker Processing job</a> based on the Data Wrangler flow file to create a training dataset. 
We then continue with a <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/build-and-manage-steps.html#step-type-training\" target=\"_blank\">training step</a>, where we train an XGBoost model using SageMaker’s built-in XGBoost algorithm and the training dataset created in the previous step. After the model has been trained, we end this workflow with a <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/build-and-manage-steps.html#step-type-register-model\" target=\"_blank\"><code>RegisterModel</code> step</a> to register the trained model with the SageMaker model registry.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/bec10178f66d4052a791599ebb91877b_image.png\" alt=\"image.png\" /></p>\n<h4><a id=\"Installation_and_walkthrough_34\"></a><strong>Installation and walkthrough</strong></h4>\n<p>To run this sample, we use a Jupyter notebook running Python 3 on a Data Science kernel image in a Studio environment. You can also run it in a local Jupyter notebook on your machine by setting up credentials that assume the SageMaker execution role. The notebook is lightweight and can be run on an ml.t3.medium instance. Detailed step-by-step instructions can be found in the <a href=\"https://github.com/aws-samples/sm-data-wrangler-mlops-workflows\" target=\"_blank\">GitHub repository</a>.</p>\n<p>You can either use the export feature in Data Wrangler to generate the Pipelines code or build your own script from scratch. In our sample repository, we use a combination of both approaches for simplicity. At a high level, the following are the steps to build and run the Pipelines workflow:</p>\n<ol>\n<li>Generate a flow file from Data Wrangler or use the setup script to generate a flow file from a preconfigured template.</li>\n<li>Create an <a href=\"http://aws.amazon.com/s3\" target=\"_blank\">Amazon Simple Storage Service</a> (Amazon S3) bucket and upload your flow file and input files to the bucket. 
In our sample notebook, we use the SageMaker default S3 bucket.</li>\n<li>Follow the instructions in the notebook to create a Processor object based on the Data Wrangler flow file, and an Estimator object with the parameters of the training job.<br />\na. In our example, because we only use SageMaker features and the default S3 bucket, we can use Studio’s default execution role. The same <a href=\"http://aws.amazon.com/iam\" target=\"_blank\">AWS Identity and Access Management</a> (IAM) role is assumed by the pipeline run, the processing job, and the training job. You can further customize the execution role following the principle of least privilege.</li>\n<li>Continue with the instructions to create a pipeline with steps referencing the Processor and Estimator objects, and then run the pipeline. The processing and training jobs run in SageMaker-managed environments and take a few minutes to complete.</li>\n<li>In Studio, you can view the pipeline details and monitor the pipeline run. You can also monitor the underlying processing and training jobs from the SageMaker console or from <a href=\"http://aws.amazon.com/cloudwatch\" target=\"_blank\">Amazon CloudWatch</a>.</li>\n</ol>\n<h3><a id=\"Integrate_Data_Wrangler_with_Step_Functions_47\"></a><strong>Integrate Data Wrangler with Step Functions</strong></h3>\n<p>With Step Functions, you can express complex business logic as low-code, event-driven workflows that connect different AWS services. The <a href=\"https://aws-step-functions-data-science-sdk.readthedocs.io/en/stable/\" target=\"_blank\">Step Functions Data Science SDK</a> is an open-source library that allows data scientists to create workflows that can preprocess datasets and build, deploy, and monitor ML models using SageMaker and Step Functions. Step Functions is based on state machines and tasks. 
Step Functions creates workflows out of steps called <a href=\"https://docs.aws.amazon.com/step-functions/latest/dg/concepts-states.html\" target=\"_blank\">states</a> and expresses that workflow in the <a href=\"https://docs.aws.amazon.com/step-functions/latest/dg/concepts-amazon-states-language.html\" target=\"_blank\">Amazon States Language</a>. When you create a workflow using the Step Functions Data Science SDK, it creates a state machine in Step Functions that represents your workflow and its steps.</p>\n<p>For this use case, we built a Step Functions workflow based on the common pattern used in this post: a processing step, a training step, and a model creation step. We import these steps from the Step Functions Data Science Python SDK and chain them in the same order to create a Step Functions workflow. The workflow uses the flow file that was generated from Data Wrangler, but you can also use your own Data Wrangler flow file. We reuse some code from the Data Wrangler <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler-data-export.html\" target=\"_blank\">export feature</a> for simplicity. We run the data preprocessing logic generated by the Data Wrangler flow file to create a training dataset, train a model using the XGBoost algorithm, and save the trained model artifact as a SageMaker model. Additionally, the GitHub repository shows how Step Functions lets you catch errors and handle failures and retries with <code>FailStateStep</code> and <code>CatchStateStep</code>.</p>\n<p>The resulting flow diagram, as shown in the following screenshot, is available on the Step Functions console after the workflow has started. 
This helps data scientists and engineers visualize the entire workflow and every step within it, and access the linked CloudWatch logs for every step.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/3645f9c9d07d4a0f931316630cfca44c_image.png\" alt=\"image.png\" /></p>\n<h4><a id=\"Installation_and_walkthrough_57\"></a><strong>Installation and walkthrough</strong></h4>\n<p>To run this sample, we use a Python notebook running with a Data Science kernel in a Studio environment. You can also run it in a local Python notebook on your machine by setting up credentials that assume the SageMaker execution role. The notebook is lightweight and can be run on an ml.t3.medium instance, for example. Detailed step-by-step instructions can be found in the <a href=\"https://github.com/aws-samples/sm-data-wrangler-mlops-workflows\" target=\"_blank\">GitHub repository</a>.</p>\n<p>You can either use the export feature in Data Wrangler to generate the Pipelines code and modify it for Step Functions, or build your own script from scratch. In our sample repository, we use a combination of both approaches for simplicity. At a high level, the following are the steps to build and run the Step Functions workflow:</p>\n<ol>\n<li>Generate a flow file from Data Wrangler or use the setup script to generate a flow file from a preconfigured template.</li>\n<li>Create an S3 bucket and upload your flow file and input files to the bucket.</li>\n<li>Configure your SageMaker execution role with the required permissions as mentioned earlier. Refer to the GitHub repository for detailed instructions.</li>\n<li>Follow the instructions to run the notebook in the repository to start a workflow. The processing job runs on a SageMaker-managed Spark environment and can take a few minutes to complete.</li>\n<li>Go to the Step Functions console and track the workflow visually. 
You can also navigate to the linked CloudWatch logs to debug errors.</li>\n</ol>\n<p>Let’s review some important sections of the code. To define the workflow, we first define its steps for the Step Functions state machine. The first step, <code>data_wrangler_step</code>, handles data processing and uses the Data Wrangler flow file as an input to transform the raw data files. We also define a model training step and a model creation step named <code>training_step</code> and <code>model_step</code>, respectively. Finally, we create a workflow by chaining all the steps we created, as shown in the following code:</p>\n<pre><code class=\"lang-\">import uuid\n\nfrom stepfunctions.steps import Chain\nfrom stepfunctions.workflow import Workflow\n\n# Run the steps in order: processing, training, then model creation\nworkflow_graph = Chain([data_wrangler_step, training_step, model_step])\n\n# A random suffix keeps the workflow (state machine) name unique across runs\nbranching_workflow = Workflow(\n    name="Wrangler-SF-Run-{}".format(uuid.uuid1().hex),\n    definition=workflow_graph,\n    role=iam_role,  # ARN of the IAM role that Step Functions assumes\n)\nbranching_workflow.create()\n</code></pre>\n<p>In our example, we built the workflow to take job names as parameters because job names must be unique, so new names are randomly generated for every pipeline run. We pass these names when the workflow runs. You can also schedule the Step Functions workflow to run using CloudWatch (see <a href=\"https://aws.amazon.com/getting-started/hands-on/scheduling-a-serverless-workflow-step-functions-cloudwatch-events/\" target=\"_blank\">Schedule a Serverless Workflow with AWS Step Functions and Amazon CloudWatch</a>), invoke it using <a href=\"https://docs.aws.amazon.com/step-functions/latest/dg/tutorial-cloudwatch-events-s3.html\" target=\"_blank\">Amazon S3 events</a>, or invoke it from <a href=\"https://aws.amazon.com/eventbridge/\" target=\"_blank\">Amazon EventBridge</a> (see <a href=\"https://serverlessland.com/patterns/eventbridge-sfn\" target=\"_blank\">Create an EventBridge rule that triggers a Step Functions workflow</a>). 
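</p>
<p>Because the processing job, training job, and model names are passed in as inputs on every run, they have to be generated fresh each time. The following is a minimal sketch of one way to do that in plain Python; the helper <code>unique_job_name</code> and the name prefixes are hypothetical and not taken from the sample repository. It enforces the naming pattern that SageMaker documents for processing and training job names (1 to 63 characters; letters, digits, and hyphens; starting with a letter or digit), and we assume the same constraint is acceptable for the model name.</p>

```python
import re
import uuid

# Naming pattern SageMaker documents for processing and training job names.
# Assumption: the same pattern is also acceptable for the model name.
SAGEMAKER_NAME_PATTERN = re.compile(r"^[a-zA-Z0-9](-*[a-zA-Z0-9]){0,62}$")
MAX_NAME_LENGTH = 63


def unique_job_name(prefix: str) -> str:
    """Return '<prefix>-<32 hex chars>', trimmed to fit SageMaker limits.

    Hypothetical helper for illustration; the sample repository may
    generate its names differently.
    """
    suffix = uuid.uuid4().hex  # 32 lowercase hex characters
    # Replace disallowed characters (for example '_') with '-' and
    # drop leading/trailing hyphens so the name starts with an alphanumeric.
    clean_prefix = re.sub(r"[^a-zA-Z0-9-]", "-", prefix).strip("-")
    # Leave room for the '-' separator plus the suffix.
    clean_prefix = clean_prefix[: MAX_NAME_LENGTH - len(suffix) - 1].rstrip("-")
    name = f"{clean_prefix}-{suffix}" if clean_prefix else suffix
    if not SAGEMAKER_NAME_PATTERN.match(name):
        raise ValueError(f"invalid SageMaker name: {name!r}")
    return name


# Names matching the variables passed to the workflow execution
processing_job_name = unique_job_name("wrangler-processing")
training_job_name = unique_job_name("wrangler-training")
model_name = unique_job_name("wrangler-model")

workflow_inputs = {
    "ProcessingJobName": processing_job_name,
    "TrainingJobName": training_job_name,
    "ModelName": model_name,
}
```

<p>A random UUID suffix makes name collisions between runs very unlikely, while truncating the prefix keeps the full name within the 63-character limit. The dictionary uses the same input keys as the <code>execute()</code> call in this section.</p>
<p>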
For demonstration purposes, we invoke the Step Functions workflow from the Step Functions console or from the notebook with the following code.</p>\n<pre><code class=\"lang-\"># Start a workflow execution, passing the unique names as inputs\nexecution = branching_workflow.execute(\n    inputs={\n        "ProcessingJobName": processing_job_name,  # unique processing job name\n        "TrainingJobName": training_job_name,  # unique training job name\n        "ModelName": model_name,  # unique model name\n    }\n)\n\n# Wait for the execution to finish and retrieve its output\nexecution_output = execution.get_output(wait=True)\n</code></pre>\n<h3><a id=\"Integrate_Data_Wrangler_with_Apache_Airflow_94\"></a><strong>Integrate Data Wrangler with Apache Airflow</strong></h3>\n<p><a href=\"https://airflow.apache.org/\" target=\"_blank\">Apache Airflow</a> is an open-source platform that allows you to programmatically author, schedule, and monitor workflows. Amazon MWAA makes it easy to set up and operate end-to-end ML pipelines with Apache Airflow in the cloud at scale. An Airflow pipeline consists of a sequence of tasks, also referred to as a workflow. A workflow is defined as a DAG that encapsulates the tasks and the dependencies between them, defining how they should run within the workflow.</p>\n<p>We created an Airflow DAG within an Amazon MWAA environment to implement our MLOps workflow. Each task in the workflow is an executable unit, written in Python, that performs some action. A task can either be an operator or a sensor. In our case, we use an <a href=\"https://airflow.apache.org/docs/apache-airflow/stable/howto/operator/python.html\" target=\"_blank\">Airflow Python operator</a> along with the <a href=\"https://sagemaker.readthedocs.io/en/stable/\" target=\"_blank\">SageMaker Python SDK</a> to run the Data Wrangler Python script, and use Airflow’s natively supported <a href=\"https://sagemaker.readthedocs.io/en/stable/workflows/airflow/using_workflow.html\" target=\"_blank\">SageMaker operators</a> to train a model with the SageMaker built-in XGBoost algorithm and create the model from the resulting artifacts. 
We also created a custom Data Wrangler operator (<code>SageMakerDataWranglerOperator</code>) for Apache Airflow, which you can use to process Data Wrangler flow files without writing any additional code.</p>\n<p>The following screenshot shows the Airflow DAG with five steps to implement our MLOps workflow.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/0bc23b4a11624e81a607434f198bc08d_image.png\" alt=\"image.png\" /></p>\n<p>The Start step uses a Python operator to initialize configurations for the rest of the steps in the workflow. <code>SageMaker_DataWrangler_Step</code> uses <code>SageMakerDataWranglerOperator</code> and the data flow file we created earlier. <code>SageMaker_training_step</code> and <code>SageMaker_create_model_step</code> use the built-in SageMaker operators for model training and model creation, respectively. Our Amazon MWAA environment uses the smallest instance type (mw1.small), because the bulk of the processing is done by SageMaker Processing jobs, which run on their own instance type that can be defined as a configuration parameter within the workflow.</p>\n<h4><a id=\"Installation_and_walkthrough_106\"></a><strong>Installation and walkthrough</strong></h4>\n<p>Detailed step-by-step installation instructions to deploy this solution can be found in our <a href=\"https://github.com/aws-samples/sm-data-wrangler-mlops-workflows/blob/main/3-apache-airflow-pipelines/README.md#getting-started\" target=\"_blank\">GitHub repository</a>. We used a Jupyter notebook with Python code cells to set up the Airflow DAG. 
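</p>
<p>Because the DAG is set up from Python code, it can help to check the task ordering before uploading the DAG file. The following minimal sketch models the four tasks named in the DAG description as a dependency graph using only the Python standard library (<code>graphlib</code>, Python 3.9 or later) rather than Airflow, and prints the order in which they run. The task IDs follow the description above and may not match the IDs in the sample repository exactly; the screenshot shows a fifth step that the text does not describe, so it is left out.</p>

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on (its upstream tasks).
# Task IDs follow the DAG description; the real IDs may differ (assumption).
dag_dependencies = {
    "Start": set(),  # initializes configuration for later steps
    "SageMaker_DataWrangler_Step": {"Start"},  # runs the .flow file
    "SageMaker_training_step": {"SageMaker_DataWrangler_Step"},
    "SageMaker_create_model_step": {"SageMaker_training_step"},
}

# static_order() yields every task only after all of its upstream tasks,
# and raises graphlib.CycleError if the graph contains a cycle.
run_order = list(TopologicalSorter(dag_dependencies).static_order())
print(" >> ".join(run_order))
```

<p>In an Airflow DAG file, the same chain is typically declared with Airflow's bitshift dependency syntax, for example <code>start_task >> preprocess_task >> train_task >> create_model_task</code> (hypothetical variable names). <code>static_order()</code> raises <code>graphlib.CycleError</code> if a dependency loop is introduced, which mirrors the requirement that an Airflow workflow be acyclic.</p>
<p>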
Assuming you have already generated the data flow file, the following is a high-level overview of the installation steps:</p>\n<ol>\n<li><a href=\"https://docs.aws.amazon.com/mwaa/latest/userguide/mwaa-s3-bucket.html\" target=\"_blank\">Create an S3 bucket</a> and the folders required by Amazon MWAA.</li>\n<li><a href=\"https://docs.aws.amazon.com/mwaa/latest/userguide/create-environment.html\" target=\"_blank\">Create an Amazon MWAA environment</a>. Note that we used Airflow version 2.0.2 for this solution.</li>\n<li>Create a <code>requirements.txt</code> file with all the Python dependencies required by the Airflow tasks, and upload it to the <code>/requirements</code> directory within the Amazon MWAA primary S3 bucket. The managed Airflow environment uses this file to install the Python dependencies.</li>\n<li>Upload the <code>SMDataWranglerOperator.py</code> file to the <code>/dags</code> directory. This Python script contains the code for the custom Airflow operator for Data Wrangler. You can use this operator in tasks that process any .flow file.</li>\n<li>Create and upload the <code>config.py</code> script to the <code>/dags</code> directory. This Python script is used in the first step of our DAG to create the configuration objects required by the remaining steps of the workflow.</li>\n<li>Finally, create and upload the <code>ml_pipelines.py</code> file to the <code>/dags</code> directory. This script contains the DAG definition for the Airflow workflow. This is where we define each of the tasks and set up the dependencies between them. Amazon MWAA periodically polls the <code>/dags</code> directory and runs this script to create the DAG, or to update the existing one with the latest changes.</li>\n</ol>\n<p>The following is the code for <code>SageMaker_DataWrangler_step</code>, which uses the custom <code>SageMakerDataWranglerOperator</code>. 
With just a few lines of code in your DAG definition Python script, you can point <code>SageMakerDataWranglerOperator</code> to the S3 location of the Data Wrangler flow file. Behind the scenes, this operator uses <a href=\"https://sagemaker-examples.readthedocs.io/en/latest/sagemaker_processing/scikit_learn_data_processing_and_model_evaluation/scikit_learn_data_processing_and_model_evaluation.html\" target=\"_blank\">SageMaker Processing jobs</a> to apply the transforms defined in the .flow file to your raw data files. You can also specify the <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler-data-flow.html\" target=\"_blank\">type of instance</a> and number of instances needed by the Data Wrangler processing job.</p>\n<pre><code class=\"lang-\"># Custom Airflow operator for Data Wrangler (SMDataWranglerOperator.py in /dags)\nfrom SMDataWranglerOperator import SageMakerDataWranglerOperator\n\npreprocess_task = SageMakerDataWranglerOperator(\n    task_id="DataWrangler_Processing_Step",\n    dag=dag,\n    flow_file_s3uri=flow_uri,  # S3 URI of the Data Wrangler .flow file\n    processing_instance_count=2,  # number of Processing job instances\n    instance_type="ml.m5.4xlarge",  # Processing job instance type\n    aws_conn_id="aws_default",  # Airflow connection used for AWS credentials\n    config=config,  # additional Processing job settings\n)\n</code></pre>\n<p>The <code>config</code> parameter accepts a dictionary (key-value pairs) of additional configurations required by the processing job, such as the output prefix of the final output file, the type of output file (CSV or Parquet), and the URI of the built-in Data Wrangler container image. The following code shows the structure of the <code>config</code> dictionary for <code>SageMakerDataWranglerOperator</code>. These settings configure a SageMaker Processing <a href=\"https://sagemaker.readthedocs.io/en/stable/api/training/processing.html#module-sagemaker.processing\" target=\"_blank\">processor</a>. 
For details on each of these config parameters, refer to <a href=\"https://sagemaker.readthedocs.io/en/stable/api/training/processing.html#sagemaker.processing.Processor\" target=\"_blank\">sagemaker.processing.Processor()</a>.</p>\n<pre><code class=\"lang-\">{\n\t"sagemaker_role": # required; SageMaker IAM role name or ARN\n\t"s3_data_type": # optional; defaults to "S3Prefix"\n\t"s3_input_mode": # optional; defaults to "File"\n\t"s3_data_distribution_type": # optional; defaults to "FullyReplicated"\n\t"kms_key": # optional; defaults to None\n\t"volume_size_in_gb": # optional; defaults to 30\n\t"enable_network_isolation": # optional; defaults to False\n\t"wait_for_processing": # optional; defaults to True\n\t"container_uri": # optional; defaults to the built-in container URI\n\t"container_uri_pinned": # optional; defaults to the built-in container URI\n\t"outputConfig": {\n\t\t"s3_output_upload_mode": # optional; defaults to "EndOfJob"\n\t\t"output_content_type": # optional; defaults to "CSV"\n\t\t"output_bucket": # optional; defaults to the SageMaker default bucket\n\t\t"output_prefix": # optional; defaults to None. Prefix within the bucket where output is written\n\t}\n}\n</code></pre>\n<h3><a id=\"Clean_up_154\"></a><strong>Clean up</strong></h3>\n<p>To avoid incurring future charges, delete the resources created for the solutions you implemented.</p>\n<ol>\n<li>Follow these <a href=\"https://github.com/aws-samples/sm-data-wrangler-mlops-workflows/blob/main/1-sagemaker-pipelines/README.md\" target=\"_blank\">instructions</a> provided in the GitHub repository to clean up resources created by the SageMaker Pipelines solution.</li>\n<li>Follow these <a href=\"https://github.com/aws-samples/sm-data-wrangler-mlops-workflows/blob/main/2-step-functions-pipelines/README.md\" target=\"_blank\">instructions</a> provided in the GitHub repository to clean up resources created by the Step Functions solution.</li>\n<li>Follow these <a 
href=\"https://github.com/aws-samples/sm-data-wrangler-mlops-workflows/blob/main/3-apache-airflow-pipelines/README.md\" target=\"_blank\">instructions</a> provided in the GitHub repository to clean up resources created by the Amazon MWAA solution.</li>\n</ol>\n<h3><a id=\"Conclusion_162\"></a><strong>Conclusion</strong></h3>\n<p>This post demonstrated how you can easily integrate Data Wrangler with some of the well-known workflow automation and orchestration technologies on AWS. We first reviewed a sample use case and architecture for the solution that uses Data Wrangler for data preprocessing. We then demonstrated how you can integrate Data Wrangler with Pipelines, Step Functions, and Amazon MWAA.</p>\n<p>As a next step, you can find and try out the code samples and notebooks in our <a href=\"https://github.com/aws-samples/sm-data-wrangler-mlops-workflows\" target=\"_blank\">GitHub repository</a> using the detailed instructions for each of the solutions discussed in this post. To learn more about how Data Wrangler can help your ML workloads, visit the Data Wrangler <a href=\"https://aws.amazon.com/sagemaker/data-wrangler/\" target=\"_blank\">product page</a> and read <a href=\"https://docs.aws.amazon.com/sagemaker/latest/dg/data-wrangler.html\" target=\"_blank\">Prepare ML Data with Amazon SageMaker Data Wrangler</a>.</p>\n<h3><a id=\"About_the_authors_168\"></a><strong>About the authors</strong></h3>\n<p><img src=\"https://dev-media.amazoncloud.cn/2a82b4fb1d66440496c0700d93b52e0e_image.png\" alt=\"image.png\" /></p>\n<p><strong>Rodrigo Alarcon</strong> is a Senior ML Strategy Solutions Architect with AWS based out of Santiago, Chile. In his role, Rodrigo helps companies of different sizes generate business outcomes through cloud-based AI and ML solutions. 
His interests include machine learning and cybersecurity.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/6640c38016694baa9361bd7c5dffb116_image.png\" alt=\"image.png\" /></p>\n<p><strong>Ganapathi Krishnamoorthi</strong> is a Senior ML Solutions Architect at AWS. Ganapathi provides prescriptive guidance to startup and enterprise customers, helping them design and deploy cloud applications at scale. He specializes in machine learning and focuses on helping customers use AI/ML to achieve their business outcomes. When not at work, he enjoys exploring the outdoors and listening to music.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/47f5539c159c4c12aa55596fc9f971c2_image.png\" alt=\"image.png\" /></p>\n<p><strong>Anjan Biswas</strong> is a Senior AI Services Solutions Architect with a focus on AI/ML and data analytics. Anjan is part of the worldwide AI services team and works with customers to help them understand and develop solutions to business problems with AI and ML. Anjan has over 14 years of experience working with global supply chain, manufacturing, and retail organizations, and is actively helping customers get started and scale on AWS AI services.</p>\n"}

Integrate Amazon SageMaker Data Wrangler with MLOps workflows

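Overseas Selection

Overseas Selection brings together high-quality technical content related to Amazon Web Services from around the world. "AWS" as used in the content is an abbreviation of "Amazon Web Services" and is not displayed as a trademark on this site.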
