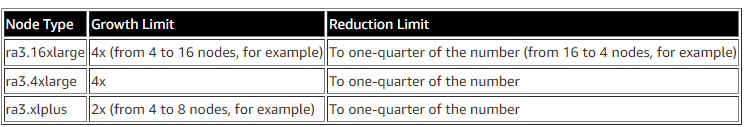

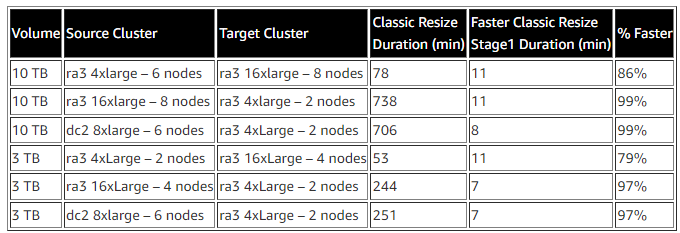

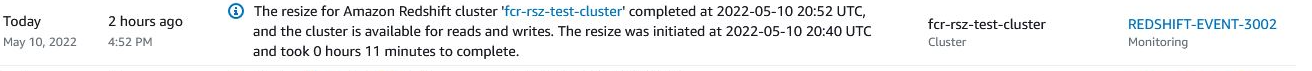

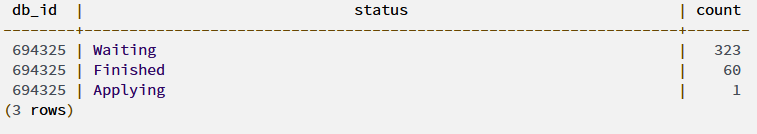

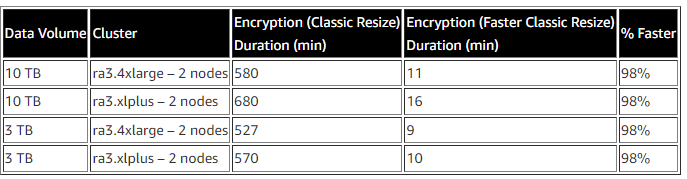

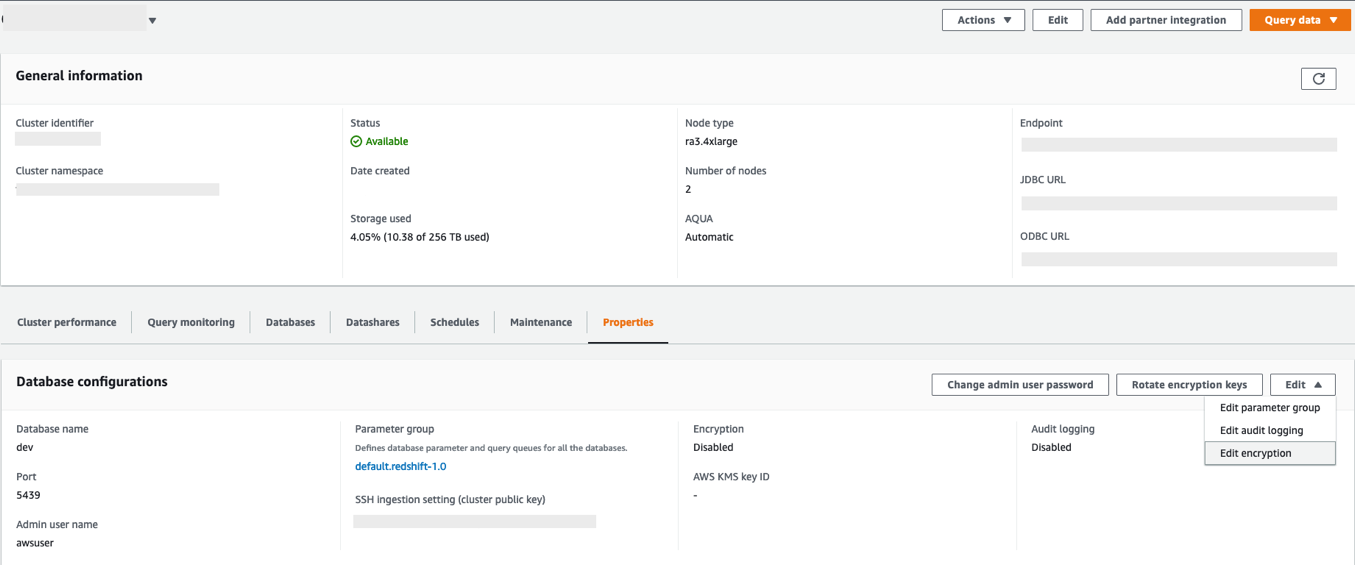

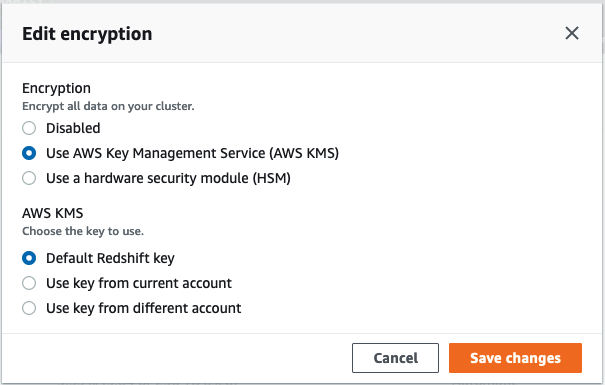

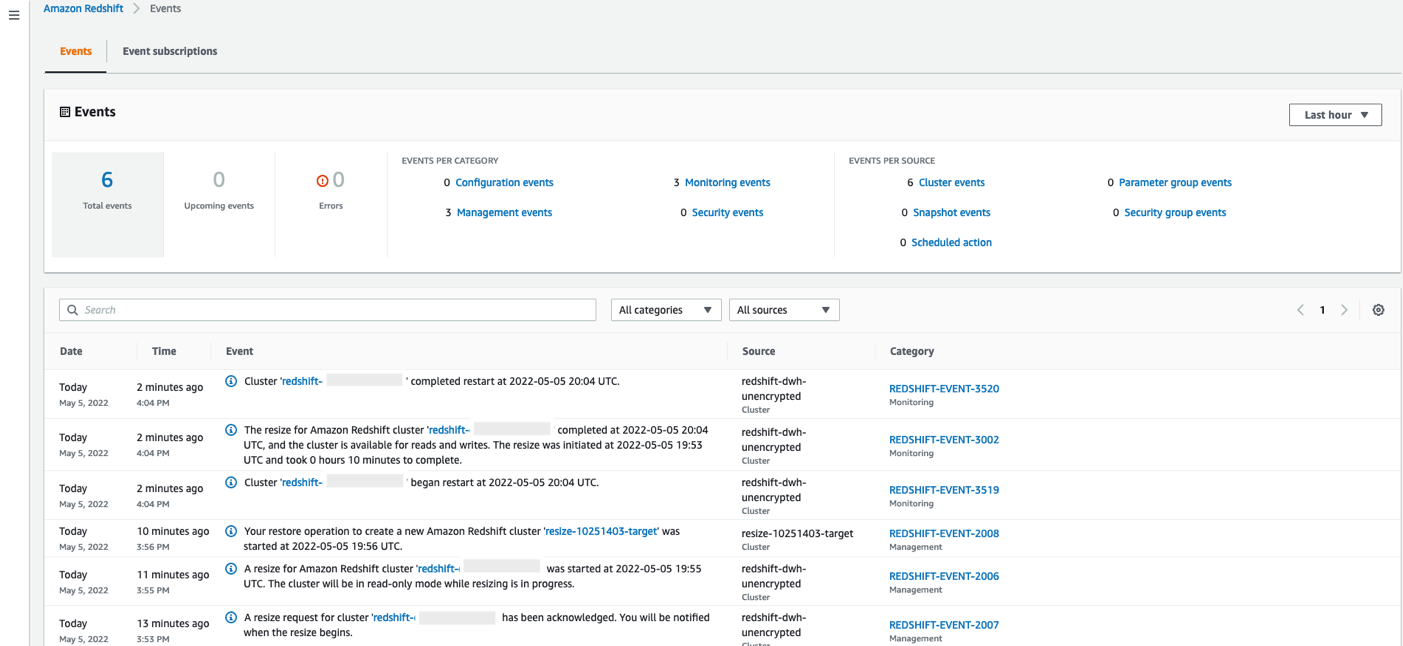

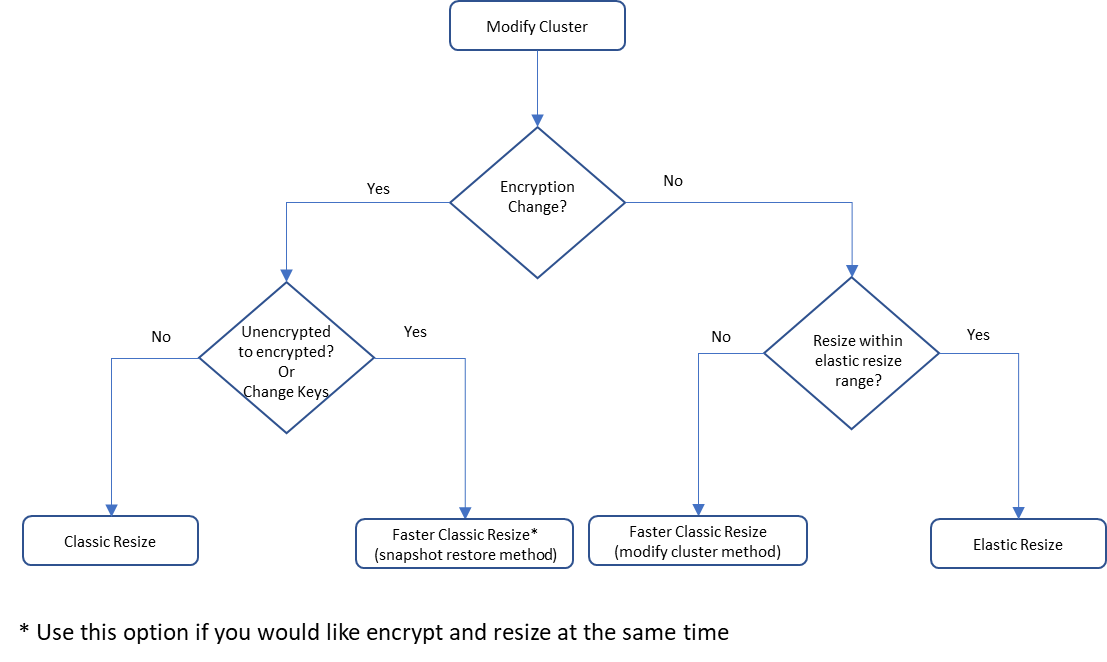

{"value":"[Amazon Redshift](https://aws.amazon.com/redshift/) has improved the performance of the classic resize feature and increased the flexibility of the cluster snapshot restore operation. You can use the classic resize operation to resize a cluster when you need to change the instance type or transition to a configuration that can’t be supported by elastic resize. This could take the cluster offline for many hours during the resize, but now the cluster can typically be available to process queries in minutes. Clusters can also be resized when restoring from a snapshot and in those cases there could be restrictions.\n\nYou can now also restore an encrypted cluster from an unencrypted snapshot or change the encryption key. Amazon Redshift uses [Amazon Web Services Key Management Service](http://aws.amazon.com/kms) (Amazon Web Services KMS) as an encryption option to provide an additional layer of data protection by securing your data from unauthorized access to the underlying storage. Now you can encrypt an unencrypted cluster with a KMS key faster by simply specifying a KMS key ID when modifying the cluster. You can also restore an Amazon Web Services KMS-encrypted cluster from an unencrypted snapshot. You can access the feature via the [Amazon Web Services Management Console](http://aws.amazon.com/console), SDK, or [Amazon Web Services Command Line Interface](http://aws.amazon.com/cli) (Amazon Web Services CLI). Please note that these features only apply to the clusters or target clusters with the RA3 node type.\n\nIn this post, we show you how the updated classic resize option works and also how it significantly improves the amount of time it takes to resize or encrypt your cluster with this enhancement. 
We also walk through the steps to resize your Amazon Redshift cluster using Faster Classic Resize.\n\n\n#### **Existing resize options**\n\n\nWe’ve worked closely with our customers to learn how their needs evolve as their data scales or as their security and compliance requirements change. To address and meet your ever-growing demands, you often have to [resize your Amazon Redshift cluster](https://docs.aws.amazon.com/redshift/latest/mgmt/managing-cluster-operations.html#rs-resize-tutorial) and choose an optimal instance type that delivers the best price/performance. As of this writing, there are three ways you can resize your clusters: [elastic resize](https://docs.aws.amazon.com/redshift/latest/mgmt/rs-resize-tutorial.html#elastic-resize), [classic resize](https://docs.aws.amazon.com/redshift/latest/mgmt/rs-resize-tutorial.html#classic-resize), and the [snapshot, restore, and resize](https://docs.aws.amazon.com/redshift/latest/mgmt/rs-resize-tutorial.html#rs-tutorial-snapshot-restore-resize-overview) method.\n\nAmong the three options, elastic resize is the fastest available resize mechanism because it remaps slices instead of copying the full dataset. Classic resize is used primarily when the target configuration falls outside the slice ranges that elastic resize allows, or when the encryption status needs to change. Let’s briefly discuss these scenarios before describing how the new migration process helps.\n\n\n#### **Current limitations**\n\n\nThe current resize options have a few limitations of note.\n\n- **Configuration changes** – Elastic resize supports the following RA3 configuration changes by design. So, when you need to choose a target cluster outside the ranges mentioned in the following table, you should choose classic resize.\n\n\n\nAlso, elastic resize can’t be performed if the current cluster is a single-node cluster or isn’t running on an EC2-VPC platform. 
These scenarios also drive customers to choose classic resize.\n\n- **Encryption changes** – You may need to encrypt your Amazon Redshift cluster based on security, compliance, and data consumption requirements. Currently, in order to modify encryption on an Amazon Redshift cluster, we use classic resize technology, which internally performs a deep copy operation of the entire dataset and rewrites the dataset with the desired encryption state. To avoid any changes during the deep copy operation, the source cluster is placed in read-only mode during the entire operation, which can take a few hours to days depending on the dataset size. Or, you might be locked out altogether if the data warehouse is down for a resize. As a result, the administrators or application owners can’t support Service Level Agreements (SLAs) that they have set with their business stakeholders.\n\nSwitching to the Faster Classic Resize approach can help speed up the migration process when turning on encryption. This has been one of the requirements for cross-account, cross-Region data sharing enabled on unencrypted clusters and integrations with [Amazon Web Services Data Exchange for Amazon Redshift](https://docs.aws.amazon.com/redshift/latest/dg/adx-datashare.html). Additionally, [Amazon Redshift Serverless](https://aws.amazon.com/redshift/redshift-serverless/) is encrypted by default. So, to create a data share from a provisioned cluster to Redshift Serverless, the provisioned cluster should be encrypted as well. This is one more compelling requirement for Faster Classic Resize.\n\n\n#### **Faster Classic Resize**\n\n\nFaster Classic Resize works like elastic resize but performs functions similar to classic resize, thereby offering the best of both approaches. Unlike classic resize, which involves extracting tuples from the source cluster and inserting those tuples on the target cluster, the Faster Classic Resize operation doesn’t involve extraction of tuples. 
Instead, it starts from the snapshots and copies the data blocks over to the target cluster.\n\nThe new Faster Classic Resize operation involves two stages:\n\n- **Stage 1 (Critical path)** – This first stage consists of migrating the data from the source cluster to the target cluster, during which the source cluster is in read-only mode. Typically, this is a very short duration. Then the cluster is made available for reads and writes.\n- **Stage 2 (Off critical path)** – The second stage involves redistributing the data as per the previous data distribution style. This process runs in the background off the critical path of migration from the source to target cluster. The duration of this stage is dependent on the volume to distribute, cluster workload, and so on.\n\nLet’s see how Faster Classic Resize works with configuration changes, encryption changes, and restoring an unencrypted snapshot into an encrypted cluster.\n\n\n\n#### **Prerequisites**\n\n\nComplete the following prerequisite steps:\n\n1. Take a snapshot from the current cluster or use an existing snapshot.\n2. Provide the [Amazon Web Services Identity and Access Management](http://aws.amazon.com/iam) (IAM) role credentials that are required to run the Amazon Web Services CLI. For more information, refer to [Using identity-based policies (IAM policies) for Amazon Redshift](https://docs.aws.amazon.com/redshift/latest/mgmt/redshift-iam-access-control-identity-based.html).\n3. For encryption changes, create a KMS key if none exists. For instructions, refer to [Creating keys](https://docs.aws.amazon.com/kms/latest/developerguide/create-keys.html).\n\n\n#### **Configuration change**\n\n\nAs of this writing, you can use Faster Classic Resize to change your cluster configuration from DC2, DS2, and RA3 node types to any RA3 node type. However, changing from RA3 to DC2 or DS2 is not supported yet.\n\nWe benchmarked Faster Classic Resize with different cluster combinations and volumes. 
The following table summarizes the results comparing critical paths in classic resize and Faster Classic Resize.\n\n\n\n\nThe Faster Classic Resize option consistently completed in significantly less time and made the cluster available for read and write operations in a short time. Classic resize took a longer time in all cases and kept the cluster in read-only mode, making it unavailable for writes. Also, the classic resize duration is comparatively longer when the target cluster configuration is smaller than the original cluster configuration.\n\n\n\n#### **Perform Faster Classic Resize**\n\n\nYou can use either of the following two methods to resize your cluster using Faster Classic Resize via the Amazon Web Services CLI for RA3 target node types.\n\n**Note**: If you initiate classic resize from the console, Faster Classic Resize is performed for RA3 target node types and the existing classic resize is performed for DC2/DS2 target node types.\n\n- Modify cluster method – Resize an existing cluster without changing the endpoint\nThe following are the steps involved:\n\n 1. Take a snapshot of the current cluster prior to performing the resize operation.\n 2. Determine the target cluster configuration and run the following command from the Amazon Web Services CLI:\n\n```\naws redshift modify-cluster --region <CLUSTER REGION> \\\n--endpoint-url https://redshift.<CLUSTER REGION>.amazonaws.com/ \\\n--cluster-identifier <CLUSTER NAME> \\\n--cluster-type multi-node \\\n--node-type <TARGET INSTANCE TYPE> \\\n--number-of-nodes <TARGET NUMBER OF NODES>\n```\n\nFor example:\n\n```\naws redshift modify-cluster --region us-east-1 \\\n--endpoint-url https://redshift.us-east-1.amazonaws.com/ \\\n--cluster-identifier my-cluster-identifier \\\n--cluster-type multi-node \\\n--node-type ra3.16xlarge \\\n--number-of-nodes 12\n```\n\n- Snapshot restore method – Restore an existing snapshot to the new cluster with the new cluster endpoint\nThe following are the steps involved:\n\n1. 
Identify the snapshot for restore and a unique name for the new cluster.\n2. Determine the target cluster configuration and run the following command from the Amazon Web Services CLI:\n\n```\naws redshift restore-from-cluster-snapshot --region <CLUSTER REGION> \\\n--endpoint-url https://redshift.<CLUSTER REGION>.amazonaws.com/ \\\n--snapshot-identifier <SNAPSHOT ID> \\\n--cluster-identifier <CLUSTER NAME> \\\n--node-type <TARGET INSTANCE TYPE> \\\n--number-of-nodes <NUMBER>\n```\n\nFor example:\n\n```\naws redshift restore-from-cluster-snapshot --region us-east-1 \\\n--endpoint-url https://redshift.us-east-1.amazonaws.com/ \\\n--snapshot-identifier rs:sales-cluster-2022-05-26-16-19-36 \\\n--cluster-identifier my-new-cluster-identifier \\\n--node-type ra3.16xlarge \\\n--number-of-nodes 12\n```\n\n**Note**: The snapshot restore method performs elastic resize if the new configuration is within the allowed ranges; otherwise, it uses the Faster Classic Resize approach.\n\n\n#### **Monitor the resize process**\n\n\nMonitor the progress through the cluster management console. You can also check the events generated by the resize process. The resize completion status is logged in events along with the duration it took for the resize. The following screenshot shows an example.\n\n\n\nIt’s important to note that you may observe longer query times in the second stage of Faster Classic Resize. During the first stage, the data for tables with ```dist-key``` distribution style is transferred as dist-even. In stage 2, background processes redistribute that data to the original distribution style (the distribution style before the cluster resize), converting those tables back to ```dist-key```. You can monitor the progress of the background processes by querying the ```stv_xrestore_alter_queue_state``` table. Tables with ALL, AUTO, or EVEN distribution styles don’t require redistribution post-resize. 
Therefore, they’re not logged in the ```stv_xrestore_alter_queue_state``` table. The counts you observe in this table are for the tables that had the KEY distribution style before the resize operation.\n\nSee the following example query:\n\n```\nselect db_id, status, count(*) from stv_xrestore_alter_queue_state group by 1,2 order by 3 desc;\n```\n\nThe following table shows that for 60 tables data redistribution is finished, for 323 tables data redistribution is pending, and for 1 table data redistribution is in progress.\n\n\n\n\nWe ran tests to assess the time to complete the redistribution. For 10 TB of data, it took approximately 5 hours and 30 minutes on an idle cluster. For 3 TB, it took approximately 2 hours and 30 minutes on an idle cluster. The following is a summary of tests performed on larger volumes:\n\n- A snapshot with 100 TB where 70% of blocks need redistribution would take 10–40 hours\n- A snapshot with 450 TB where 70% of blocks need redistribution would take 2–8 days\n- A snapshot with 1600 TB where 70% of blocks need redistribution would take 7–27 days\n\nThe actual time to complete redistribution is largely dependent on data volume, cluster idle cycles, target cluster size, data skewness, and more. Therefore, we recommend performing Faster Classic Resize when there is enough of an idle window (such as weekends) for the cluster to perform redistribution.\n\n\n#### **Encryption changes**\n\n\nYou can encrypt your Amazon Redshift cluster from the console (the modify cluster method) or from the Amazon Web Services CLI with the snapshot restore method. Amazon Redshift performs the encryption change using Faster Classic Resize. The operation only takes a few minutes to complete and your cluster is available for both read and write. 
With Faster Classic Resize, you can change an unencrypted cluster to an encrypted cluster or change the encryption key using the snapshot restore method.\n\nFor this post, we show how you can change the encryption using the Amazon Redshift console. To test the timings, we created multiple Amazon Redshift clusters using [TPC-DS](https://github.com/awslabs/amazon-redshift-utils/tree/master/src/CloudDataWarehouseBenchmark/Cloud-DWB-Derived-from-TPCDS/10TB) data. The Faster Classic Resize option consistently completed in significantly less time and made clusters available for read and write operations faster. Classic resize took a longer time in all cases and kept the cluster in read-only mode. The following table contains the summary of the results.\n\n\n\nNow, let’s perform the encryption change from an unencrypted cluster to an encrypted cluster using the console. Complete the following steps:\n\n1. On the Amazon Redshift console, navigate to your cluster.\n2. On the **Properties** tab, on the **Edit** drop-down menu, choose **Edit encryption**.\n\n\n\n3. For Encryption, select Use Amazon Web Services Key Management Service (Amazon Web Services KMS).\n4. For Amazon Web Services KMS, select Default Redshift key.\n5. Choose Save changes.\n\n\n\nYou can monitor the progress of your encryption change on the Events tab. As shown in the following screenshot, the entire process to change the encryption took approximately 11 minutes.\n\n\n\n\n#### **Restore an unencrypted snapshot to an encrypted cluster**\n\n\nAs of today, the Faster Classic Resize option to restore an unencrypted snapshot into an encrypted cluster or to change the encryption key is available only through the Amazon Web Services CLI. When triggered, the restored cluster operates in read/write mode immediately. 
The encryption state change for restored blocks that are unencrypted runs in the background, and newly ingested blocks continue to be encrypted.\n\n[Restore](https://docs.aws.amazon.com/cli/latest/reference/redshift/restore-from-cluster-snapshot.html) the snapshot into a new cluster using the following command. (Replace the indicated parameter values; ```--encrypted``` and ```--kms-key-id``` are required.)\n\n```\naws redshift restore-from-cluster-snapshot \\\n--cluster-identifier <CLUSTER NAME> \\\n--snapshot-identifier <SNAPSHOT ID> \\\n--region <AWS REGION> \\\n--encrypted \\\n--kms-key-id <KMS KEY ID> \\\n--cluster-subnet-group-name <SUBNET GROUP>\n```\n\n#### **When to use which resize option**\n\nThe following flow chart provides guidance on which resize option is recommended when changing your cluster encryption status or resizing to a new cluster configuration.\n\n\n\n\n#### **Summary**\n\n\nIn this post, we talked about the improved performance of Amazon Redshift’s classic resize feature and how Faster Classic Resize significantly improves your ability to scale your Amazon Redshift clusters using the classic resize method. We also talked about when to use different resize operations based on your requirements. We demonstrated how it works from the console (for encryption changes) and using the Amazon Web Services CLI. We also showed the results of our benchmark test and how Faster Classic Resize significantly improves the migration time for configuration changes and encryption changes for your Amazon Redshift cluster.\n\nTo learn more about resizing your clusters, refer to [Resizing clusters in Amazon Redshift](https://docs.aws.amazon.com/redshift/latest/mgmt/rs-resize-tutorial.html). If you have any feedback or questions, please leave them in the comments.\n\n\n##### **About the authors**\n\n\n\n\n**Sumeet Joshi** is an Analytics Specialist Solutions Architect based out of New York. He specializes in building large-scale data warehousing solutions. 
He has over 17 years of experience in the data warehousing and analytical space.\n\n\n\n**Satesh Sonti** is a Sr. Analytics Specialist Solutions Architect based out of Atlanta, specialized in building enterprise data platforms, data warehousing, and analytics solutions. He has over 16 years of experience in building data assets and leading complex data platform programs for banking and insurance clients across the globe.\n\n\n\n**Krishna Chaitanya Gudipati** is a Senior Software Development Engineer at Amazon Redshift. He has been working on distributed systems for over 14 years and is passionate about building scalable and performant systems. In his spare time, he enjoys reading and exploring new places.","render":"<p><a href=\"https://aws.amazon.com/redshift/\" target=\"_blank\">Amazon Redshift</a> has improved the performance of the classic resize feature and increased the flexibility of the cluster snapshot restore operation. You can use the classic resize operation to resize a cluster when you need to change the instance type or transition to a configuration that can’t be supported by elastic resize. This could take the cluster offline for many hours during the resize, but now the cluster can typically be available to process queries in minutes. Clusters can also be resized when restoring from a snapshot and in those cases there could be restrictions.</p>\n<p>You can now also restore an encrypted cluster from an unencrypted snapshot or change the encryption key. Amazon Redshift uses <a href=\"http://aws.amazon.com/kms\" target=\"_blank\">Amazon Web Services Key Management Service</a> (Amazon Web Services KMS) as an encryption option to provide an additional layer of data protection by securing your data from unauthorized access to the underlying storage. Now you can encrypt an unencrypted cluster with a KMS key faster by simply specifying a KMS key ID when modifying the cluster. 
You can also restore an Amazon Web Services KMS-encrypted cluster from an unencrypted snapshot. You can access the feature via the <a href=\"http://aws.amazon.com/console\" target=\"_blank\">Amazon Web Services Management Console</a>, SDK, or <a href=\"http://aws.amazon.com/cli\" target=\"_blank\">Amazon Web Services Command Line Interface</a> (Amazon Web Services CLI). Please note that these features only apply to the clusters or target clusters with the RA3 node type.</p>\n<p>In this post, we show you how the updated classic resize option works and also how it significantly improves the amount of time it takes to resize or encrypt your cluster with this enhancement. We also walk through the steps to resize your Amazon Redshift cluster using Faster Classic Resize.</p>\n<h4><a id=\"Existing_resize_options_7\"></a><strong>Existing resize options</strong></h4>\n<p>We’ve worked closely with our customers to learn how their needs evolve as their data scales or as their security and compliance requirements change. To address and meet your ever-growing demands, you often have to <a href=\"https://docs.aws.amazon.com/redshift/latest/mgmt/managing-cluster-operations.html#rs-resize-tutorial\" target=\"_blank\">resize your Amazon Redshift cluster</a> and choose an optimal instance type that delivers the best price/performance. 
As of this writing, there are three ways you can resize your clusters: <a href=\"https://docs.aws.amazon.com/redshift/latest/mgmt/rs-resize-tutorial.html#elastic-resize\" target=\"_blank\">elastic resize</a>, <a href=\"https://docs.aws.amazon.com/redshift/latest/mgmt/rs-resize-tutorial.html#classic-resize\" target=\"_blank\">classic resize</a>, and the <a href=\"https://docs.aws.amazon.com/redshift/latest/mgmt/rs-resize-tutorial.html#rs-tutorial-snapshot-restore-resize-overview\" target=\"_blank\">snapshot, restore, and resize</a> method.</p>\n<p>Among the three options, elastic resize is the fastest available resize mechanism because it remaps slices instead of copying the full dataset. Classic resize is used primarily when the target configuration falls outside the slice ranges that elastic resize allows, or when the encryption status needs to change. Let’s briefly discuss these scenarios before describing how the new migration process helps.</p>\n<h4><a id=\"Current_limitations_15\"></a><strong>Current limitations</strong></h4>\n<p>The current resize options have a few limitations of note.</p>\n<ul>\n<li><strong>Configuration changes</strong> – Elastic resize supports the following RA3 configuration changes by design. So, when you need to choose a target cluster outside the ranges mentioned in the following table, you should choose classic resize.</li>\n</ul>\n<p><img src=\"https://dev-media.amazoncloud.cn/e2b16af4216949e1a35437b40e6c7285_image.png\" alt=\"image.png\" /></p>\n<p>Also, elastic resize can’t be performed if the current cluster is a single-node cluster or isn’t running on an EC2-VPC platform. These scenarios also drive customers to choose classic resize.</p>\n<ul>\n<li><strong>Encryption changes</strong> – You may need to encrypt your Amazon Redshift cluster based on security, compliance, and data consumption requirements. 
Currently, in order to modify encryption on an Amazon Redshift cluster, we use classic resize technology, which internally performs a deep copy operation of the entire dataset and rewrites the dataset with the desired encryption state. To avoid any changes during the deep copy operation, the source cluster is placed in read-only mode during the entire operation, which can take a few hours to days depending on the dataset size. Or, you might be locked out altogether if the data warehouse is down for a resize. As a result, the administrators or application owners can’t support Service Level Agreements (SLAs) that they have set with their business stakeholders.</li>\n</ul>\n<p>Switching to the Faster Classic Resize approach can help speed up the migration process when turning on encryption. This has been one of the requirements for cross-account, cross-Region data sharing enabled on unencrypted clusters and integrations with <a href=\"https://docs.aws.amazon.com/redshift/latest/dg/adx-datashare.html\" target=\"_blank\">Amazon Web Services Data Exchange for Amazon Redshift</a>. Additionally, <a href=\"https://aws.amazon.com/redshift/redshift-serverless/\" target=\"_blank\">Amazon Redshift Serverless</a> is encrypted by default. So, to create a data share from a provisioned cluster to Redshift Serverless, the provisioned cluster should be encrypted as well. This is one more compelling requirement for Faster Classic Resize.</p>\n<h4><a id=\"Faster_Classic_Resize_31\"></a><strong>Faster Classic Resize</strong></h4>\n<p>Faster Classic Resize works like elastic resize but performs functions similar to classic resize, thereby offering the best of both approaches. Unlike classic resize, which involves extracting tuples from the source cluster and inserting those tuples on the target cluster, the Faster Classic Resize operation doesn’t involve extraction of tuples. 
Instead, it starts from the snapshots and the data blocks are copied over to the target cluster.</p>\n<p>The new Faster Classic Resize operation involves two stages:</p>\n<ul>\n<li><strong>Stage 1 (Critical path)</strong> – This first stage consists of migrating the data from the source cluster to the target cluster, during which the source cluster is in read-only mode. Typically, this is a very short duration. Then the cluster is made available for read and writes.</li>\n<li><strong>Stage 2 (Off critical path)</strong> – The second stage involves redistributing the data as per the previous data distribution style. This process runs in the background off the critical path of migration from the source to target cluster. The duration of this stage is dependent on the volume to distribute, cluster workload, and so on.</li>\n</ul>\n<p>Let’s see how Faster Classic Resize works with configuration changes, encryption changes, and restoring an unencrypted snapshot into an encrypted cluster.</p>\n<h4><a id=\"Prerequisites_45\"></a><strong>Prerequisites</strong></h4>\n<p>Complete the following prerequisite steps:</p>\n<ol>\n<li>Take a snapshot from the current cluster or use an existing snapshot.</li>\n<li>Provide the <a href=\"http://aws.amazon.com/iam\" target=\"_blank\">Amazon Web Services Identity and Access Management</a> (IAM) role credentials that are required to run the Amazon Web Services CLI. For more information, refer to <a href=\"https://docs.aws.amazon.com/redshift/latest/mgmt/redshift-iam-access-control-identity-based.html\" target=\"_blank\">Using identity-based policies (IAM policies) for Amazon Redshift</a>.</li>\n<li>For encryption changes, create a KMS key if none exists. 
For instructions, refer to <a href=\"https://docs.aws.amazon.com/kms/latest/developerguide/create-keys.html\" target=\"_blank\">Creating keys</a>.</li>\n</ol>\n<h4><a id=\"Configuration_change_55\"></a><strong>Configuration change</strong></h4>\n<p>As of this writing, you can use Faster Classic Resize to change your cluster configuration from DC2, DS2, and RA3 node types to any RA3 node type. However, changing from RA3 to DC2 or DS2 is not supported yet.</p>\n<p>We benchmarked Faster Classic Resize with different cluster combinations and volumes. The following table summarizes the results comparing critical paths in classic resize and Faster Classic Resize.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/edb4de4282e7462291ea643f431b251e_image.png\" alt=\"image.png\" /></p>\n<p>The Faster Classic Resize option consistently completed in significantly less time and made the cluster available for read and write operations in a short time. Classic resize took a longer time in all cases and kept the cluster in read-only mode, making it unavailable for writes. Also, the classic resize duration is comparatively longer when the target cluster configuration is smaller than the original cluster configuration.</p>\n<h4><a id=\"Perform_Faster_Classic_Resize_69\"></a><strong>Perform Faster Classic Resize</strong></h4>\n<p>You can use either of the following two methods to resize your cluster using Faster Classic Resize via the Amazon Web Services CLI for RA3 target node types.</p>\n<p><strong>Note</strong>: If you initiate classic resize from the console, Faster Classic Resize is performed for RA3 target node types and the existing classic resize is performed for DC2/DS2 target node types.</p>\n<ul>\n<li>\n<p>Modify cluster method – Resize an existing cluster without changing the endpoint<br />\nThe following are the steps involved:</p>\n<p>1. Take a snapshot of the current cluster prior to performing the resize operation.<br />\n2. 
Determine the target cluster configuration and run the following command from the Amazon Web Services CLI:</p>\n</li>\n</ul>\n<pre><code class=\"lang-\">aws redshift modify-cluster --region &lt;CLUSTER REGION&gt; \\\n--endpoint-url https://redshift.&lt;CLUSTER REGION&gt;.amazonaws.com/ \\\n--cluster-identifier &lt;CLUSTER NAME&gt; \\\n--cluster-type multi-node \\\n--node-type &lt;TARGET INSTANCE TYPE&gt; \\\n--number-of-nodes &lt;TARGET NUMBER OF NODES&gt;\n</code></pre>\n<p>For example:</p>\n<pre><code class=\"lang-\">aws redshift modify-cluster --region us-east-1 \\\n--endpoint-url https://redshift.us-east-1.amazonaws.com/ \\\n--cluster-identifier my-cluster-identifier \\\n--cluster-type multi-node \\\n--node-type ra3.16xlarge \\\n--number-of-nodes 12\n</code></pre>\n<ul>\n<li>Snapshot restore method – Restore an existing snapshot to the new cluster with the new cluster endpoint<br />\nThe following are the steps involved:</li>\n</ul>\n<ol>\n<li>Identify the snapshot for restore and a unique name for the new cluster.</li>\n<li>Determine the target cluster configuration and run the following command from the Amazon Web Services CLI:</li>\n</ol>\n<pre><code class=\"lang-\">aws redshift restore-from-cluster-snapshot --region &lt;CLUSTER REGION&gt; \\\n--endpoint-url https://redshift.&lt;CLUSTER REGION&gt;.amazonaws.com/ \\\n--snapshot-identifier &lt;SNAPSHOT ID&gt; \\\n--cluster-identifier &lt;CLUSTER NAME&gt; \\\n--node-type &lt;TARGET INSTANCE TYPE&gt; \\\n--number-of-nodes &lt;NUMBER&gt;\n</code></pre>\n<p>For example:</p>\n<pre><code class=\"lang-\">aws redshift restore-from-cluster-snapshot --region us-east-1 \\\n--endpoint-url https://redshift.us-east-1.amazonaws.com/ \\\n--snapshot-identifier rs:sales-cluster-2022-05-26-16-19-36 \\\n--cluster-identifier my-new-cluster-identifier \\\n--node-type ra3.16xlarge \\\n--number-of-nodes 12\n</code></pre>\n<p><strong>Note</strong>: The snapshot restore method performs elastic resize if the new configuration is within the allowed ranges; otherwise, it uses the Faster Classic Resize approach.</p>\n<h4><a 
id=\"Monitor_the_resize_process_132\"></a><strong>Monitor the resize process</strong></h4>\n<p>Monitor the progress through the cluster management console. You can also check the events generated by the resize process. The resize completion status is logged in events along with the duration it took for the resize. The following screenshot shows an example.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/eee53a61b6134c5b875a1f7ba27535b6_image.png\" alt=\"image.png\" /></p>\n<p>It’s important to note that you may observe longer query times in the second stage of Faster Classic Resize. During the first stage, the data for tables with <code>dist-key</code> distribution style is transferred as dist-even. Later, a background process converts them back to <code>dist-key</code> (in stage 2). However, background processes are running behind the scenes to get the data redistributed to the original distribution style (the distribution style before the cluster resize). You can monitor the progress of the background processes by querying the <code>stv_xrestore_alter_queue_state</code> table. It’s important to note that tables with ALL, AUTO, or EVEN distribution styles don’t require redistribution post-resize. Therefore, they’re not logged in the <code>stv_xrestore_alter_queue_state</code> table. The counts you observe in these tables are for the tables with distribution style as Key before the resize operation.</p>\n<p>See the following example query:</p>\n<pre><code class=\"lang-\">select db_id, status, count(*) from stv_xrestore_alter_queue_state group by 1,2 order by 3 desc\n</code></pre>\n<p>The following table shows that for 60 tables data redistribution is finished, for 323 tables data redistribution is pending, and for 1 table data redistribution is in progress.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/fb3f88229cb643ee83447739304dcef5_image.png\" alt=\"image.png\" /></p>\n<p>We ran tests to assess time to complete the redistribution. 
For 10 TB of data, it took approximately 5 hours and 30 minutes on an idle cluster. For 3 TB, it took approximately 2 hours and 30 minutes on an idle cluster. The following is a summary of tests performed on larger volumes:</p>\n<ul>\n<li>A snapshot with 100 TB where 70% of blocks needs redistribution would take 10–40 hours</li>\n<li>A snapshot with 450 TB where 70% of blocks needs redistribution would take 2–8 days</li>\n<li>A snapshot with 1600 TB where 70% of blocks needs redistribution would take 7–27 days</li>\n</ul>\n<p>The actual time to complete redistribution is largely dependent on data volume, cluster idle cycles, target cluster size, data skewness, and more. Therefore, we recommend performing Faster Classic Resize when there is enough of an idle window (such as weekends) for the cluster to perform redistribution.</p>\n<h4><a id=\"Encryption_changes_161\"></a><strong>Encryption changes</strong></h4>\n<p>You can encrypt your Amazon Redshift cluster from the console (the modify cluster method) or using the Amazon Web Services CLI using the snapshot restore method. Amazon Redshift performs the encryption change using Faster Classic Resize. The operation only takes a few minutes to complete and your cluster is available for both read and write. With Faster Classic Resize, you can change an unencrypted cluster to an encrypted cluster or change the encryption key using the snapshot restore method.</p>\n<p>For this post, we show how you can change the encryption using the Amazon Redshift console. To test the timings, we created multiple Amazon Redshift clusters using <a href=\"https://github.com/awslabs/amazon-redshift-utils/tree/master/src/CloudDataWarehouseBenchmark/Cloud-DWB-Derived-from-TPCDS/10TB\" target=\"_blank\">TPC-DS</a> data. The Faster Classic Resize option consistently completed in significantly less time and made clusters available for read and write operations faster. 
Classic resize took longer in all cases and kept the cluster in read-only mode. The following table summarizes the results.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/dd86f6e5f74e43368612878b59cbdf27_image.png\" alt=\"image.png\" /></p>\n<p>Now, let’s change an unencrypted cluster to an encrypted cluster using the console. Complete the following steps:</p>\n<ol>\n<li>On the Amazon Redshift console, navigate to your cluster.</li>\n<li>On the <strong>Properties</strong> tab, on the <strong>Edit</strong> drop-down menu, choose <strong>Edit encryption</strong>.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/2d79899cd5d44feca4b759577caba8e5_image.png\" alt=\"image.png\" /></p>\n<ol start=\"3\">\n<li>For <strong>Encryption</strong>, select <strong>Use Amazon Web Services Key Management Service (Amazon Web Services KMS)</strong>.</li>\n<li>For <strong>Amazon Web Services KMS</strong>, select <strong>Default Redshift key</strong>.</li>\n<li>Choose <strong>Save changes</strong>.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/748f0f850de64339a855397aacfe4619_image.png\" alt=\"image.png\" /></p>\n<p>You can monitor the progress of your encryption change on the <strong>Events</strong> tab. As shown in the following screenshot, the entire process to change the encryption took approximately 11 minutes.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/9d542574dc7843f3a65aed7c38c3b846_image.png\" alt=\"image.png\" /></p>\n<h4><a id=\"Restore_an_unencrypted_snapshot_to_an_encrypted_cluster_188\"></a><strong>Restore an unencrypted snapshot to an encrypted cluster</strong></h4>\n<p>As of this writing, the Faster Classic Resize option to restore an unencrypted snapshot into an encrypted cluster or to change the encryption key is available only through the Amazon Web Services CLI. When triggered, the restored cluster operates in read/write mode immediately. 
The encryption of restored blocks that are still unencrypted runs in the background, and newly ingested blocks continue to be encrypted.</p>\n<p><a href=\"https://docs.aws.amazon.com/cli/latest/reference/redshift/restore-from-cluster-snapshot.html\" target=\"_blank\">Restore</a> the snapshot into a new cluster using the following command. Replace the indicated parameter values; <code>--encrypted</code> and <code>--kms-key-id</code> are required.</p>\n<pre><code class=\"lang-\">aws redshift restore-from-cluster-snapshot \\\n--cluster-identifier &lt;CLUSTER NAME&gt; \\\n--snapshot-identifier &lt;SNAPSHOT ID&gt; \\\n--region &lt;AWS REGION&gt; \\\n--encrypted \\\n--kms-key-id &lt;KMS KEY ID&gt; \\\n--cluster-subnet-group-name &lt;SUBNET GROUP&gt;\n</code></pre>\n<h4><a id=\"When_to_use_which_resize_option_205\"></a><strong>When to use which resize option</strong></h4>\n<p>The following flow chart shows which resize option is recommended when changing your cluster encryption status or resizing to a new cluster configuration.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/b653dee43d78484da60d5aaff1953e2c_image.png\" alt=\"image.png\" /></p>\n<h4><a id=\"Summary_212\"></a><strong>Summary</strong></h4>\n<p>In this post, we discussed the improved performance of the Amazon Redshift classic resize feature and how Faster Classic Resize significantly improves your ability to scale your Amazon Redshift clusters using the classic resize method. We also discussed when to use each resize operation based on your requirements. We demonstrated how it works from the console (for encryption changes) and using the Amazon Web Services CLI. 
We also showed benchmark results demonstrating how Faster Classic Resize significantly reduces migration time for configuration changes and encryption changes on your Amazon Redshift cluster.</p>\n<p>To learn more about resizing your clusters, refer to <a href=\"https://docs.aws.amazon.com/redshift/latest/mgmt/rs-resize-tutorial.html\" target=\"_blank\">Resizing clusters in Amazon Redshift</a>. If you have any feedback or questions, please leave them in the comments.</p>\n<h5><a id=\"About_the_authors_220\"></a><strong>About the authors</strong></h5>\n<p><img src=\"https://dev-media.amazoncloud.cn/e588e730885146079f09c5ec40175e35_image.png\" alt=\"image.png\" /></p>\n<p><strong>Sumeet Joshi</strong> is an Analytics Specialist Solutions Architect based out of New York. He specializes in building large-scale data warehousing solutions. He has over 17 years of experience in the data warehousing and analytical space.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/1dacb2191f8e456a895df6c17c6cf9d0_image.png\" alt=\"image.png\" /></p>\n<p><strong>Satesh Sonti</strong> is a Sr. Analytics Specialist Solutions Architect based out of Atlanta. He specializes in building enterprise data platforms, data warehousing, and analytics solutions. He has over 16 years of experience in building data assets and leading complex data platform programs for banking and insurance clients across the globe.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/2fa006a985764080beca27c624420867_image.png\" alt=\"image.png\" /></p>\n<p><strong>Krishna Chaitanya Gudipati</strong> is a Senior Software Development Engineer at Amazon Redshift. He has been working on distributed systems for over 14 years and is passionate about building scalable and performant systems. In his spare time, he enjoys reading and exploring new places.</p>\n"}

Accelerate resize and encryption of Amazon Redshift clusters with Faster Classic Resize
