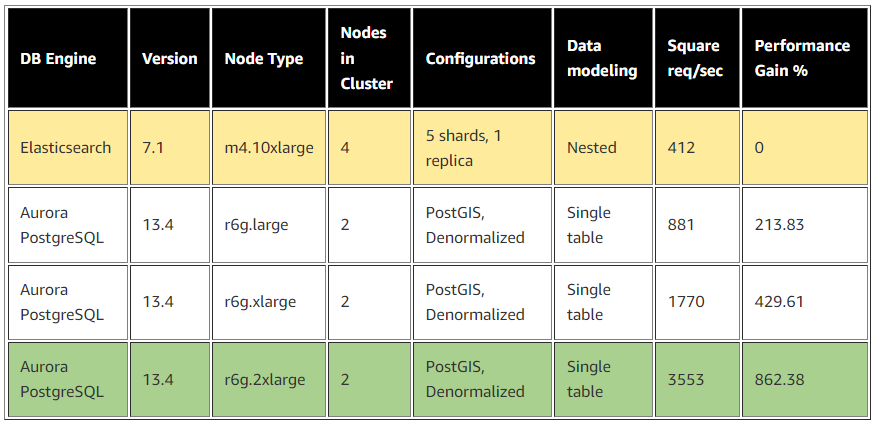

{"value":"[Plugsurfing](https://plugsurfing.com/) aligns the entire car charging ecosystem (drivers, charging point operators, and carmakers) within a single platform. The more than 1 million drivers connected to the Plugsurfing Power Platform benefit from a network of over 300,000 charging points across Europe. Plugsurfing serves charging point operators with backend cloud software for managing everything from country-specific regulations to providing diverse payment options for customers. Carmakers benefit from white label solutions as well as deeper integrations with their in-house technology. The platform-based ecosystem has already processed more than 18 million charging sessions. Plugsurfing was fully acquired by Fortum Oyj in 2018.\n\nPlugsurfing uses [Amazon OpenSearch Service](https://aws.amazon.com/opensearch-service/) as a central data store for information on 300,000 charging stations and to power search and filter requests coming from mobile, web, and connected car dashboard clients. As usage increased, Plugsurfing created multiple read replicas of an OpenSearch Service cluster to meet demand and scale. Over time, as demand kept growing, this solution became cost-prohibitive and delivered limited cost-performance benefit.\n\nAWS EMEA Prototyping Labs collaborated with the Plugsurfing team for 4 weeks on a hands-on prototyping engagement to solve this problem, which resulted in 70% cost savings and doubled performance compared with the legacy solution. This post shows the overall approach and ideas we tested with Plugsurfing to achieve the results.\n\n\n#### **The challenge: Scaling higher transactions per second while keeping costs under control**\n\n\nOne of the key issues of the legacy solution was keeping up with higher transactions per second (TPS) from APIs while keeping costs low. 
The majority of the cost came from the OpenSearch Service cluster, because the mobile, web, and EV car dashboards use different APIs for different use cases, but all query the same cluster. With the legacy solution, the only way to achieve higher TPS was to scale the OpenSearch Service cluster.\n\nThe following figure illustrates the legacy architecture.\n\n\n\nPlugsurfing APIs are responsible for serving data for four different use cases:\n\n- **Radius search** – Find all the EV charging stations (latitude/longitude) within an x km radius of the point of interest (or current location on GPS).\n- **Square search** – Find all the EV charging stations within a box of length x width, where the point of interest (or current location on GPS) is at the center.\n- **Geo clustering search** – Find all the EV charging stations clustered (grouped) by their concentration within a given area. For example, searching all EV chargers in all of Germany results in something like 50 in Munich and 100 in Berlin.\n- **Radius search with filtering** – Filter the radius search results to EV chargers that are available or in use, by plug type, power rating, or other attributes.\n\nThe OpenSearch Service domain configuration was as follows:\n\n- m4.10xlarge.search x 4 nodes\n- Elasticsearch version 7.10\n- A single index to store 300,000 EV charger locations with five shards and one replica\n- A nested document structure\n\nThe following code shows an example document:\n\n```\\n{\\n \\"locationId\\":\\"location:1\\",\\n \\"location\\":{\\n \\"latitude\\":32.1123,\\n \\"longitude\\":-9.2523\\n },\\n \\"adress\\":\\"parking lot 1\\",\\n \\"chargers\\":[\\n {\\n \\"chargerId\\":\\"location:1:charger:1\\",\\n \\"connectors\\":[\\n {\\n \\"connectorId\\":\\"location:1:charger:1:connector:1\\",\\n \\"status\\":\\"AVAILABLE\\",\\n \\"plug_type\\":\\"Type A\\"\\n }\\n ]\\n }\\n ]\\n}\\n```\n\n#### **Solution overview**\n\n\nAWS EMEA Prototyping Labs proposed an experimentation approach to try three high-level 
ideas for performance optimization and to lower overall solution costs.\n\nWe launched an [Amazon Elastic Compute Cloud](http://aws.amazon.com/ec2) (EC2) instance in a prototyping AWS account to host a benchmarking tool based on [k6](https://k6.io/) (an open-source tool that makes load testing simple for developers and QA engineers). Later, we used scripts to dump and restore production data to various databases, transforming it to fit different data models. Then we ran k6 scripts to measure and record performance metrics for each use case, database, and data model combination. We also used the [AWS Pricing Calculator](https://calculator.aws/#/) to estimate the cost of each experiment.\n\n\n#### **Experiment 1: Use AWS Graviton and optimize OpenSearch Service domain configuration**\n\n\nWe benchmarked a replica of the legacy OpenSearch Service domain setup in a prototyping environment to baseline performance and costs. Next, we analyzed the current cluster setup and recommended testing the following changes:\n\n- Use two [AWS Graviton](https://aws.amazon.com/pm/ec2-graviton) based memory optimized instances (r6g) as the nodes in the cluster\n- Reduce the number of shards from five to one, given the volume of data (all documents) is less than 1 GB\n- Increase the refresh interval configuration from the default 1 second to 5 seconds\n- Denormalize the full document; if not possible, then denormalize all the fields that are part of the search query\n- Upgrade from Elasticsearch 7.10 to OpenSearch 1.0 on [Amazon OpenSearch Service](https://aws.amazon.com/cn/opensearch-service/?trk=cndc-detail)\n\nPlugsurfing created multiple new OpenSearch Service domains with the same data and benchmarked them against the legacy baseline to obtain the following results. 
The row in yellow represents the baseline from the legacy setup; the rows in green represent the best outcome out of all experiments performed for the given use cases.\n\n\n\nPlugsurfing was able to roughly double performance (a 95% improvement) across the radius and filtering use cases with this experiment.\n\n\n#### **Experiment 2: Use purpose-built databases on AWS for different use cases**\n\n\nWe tested [Amazon OpenSearch Service](https://aws.amazon.com/cn/opensearch-service/?trk=cndc-detail), [Amazon Aurora PostgreSQL-Compatible Edition](https://aws.amazon.com/rds/aurora/postgresql-features/), and [Amazon DynamoDB](https://aws.amazon.com/dynamodb/) extensively with many data models for different use cases.\n\nWe tested the square search use case with an Aurora PostgreSQL cluster with a db.r6g.2xlarge single node as the reader and a db.r6g.large single node as the writer. \nThe square search used a single PostgreSQL table configured via the following steps:\n\n1. Create the geo search table with geography as the data type to store latitude/longitude:\n\n```\\nCREATE TYPE status AS ENUM ('available', 'inuse', 'out-of-order');\\n\\nCREATE TABLE IF NOT EXISTS square_search\\n(\\nid serial PRIMARY KEY,\\ngeog geography(POINT),\\nstatus status,\\ndata text -- Can be used as json data type, or add extra fields as flat json\\n);\\n```\n\n2. Create an index on the geog field:\n\n```\\nCREATE INDEX global_points_gix ON square_search USING GIST (geog);\\n```\n\n3. Query the data for the square search use case:\n\n```\\nSELECT id, ST_AsText(geog), status, data FROM square_search\\nWHERE geog && ST_MakeEnvelope(32.5,9,32.8,11,4326) LIMIT 100;\\n```\nWe achieved an eightfold improvement in TPS for the square search use case, as shown in the following table.\n\n\n\nWe tested the geo clustering search use case with a DynamoDB model. The partition key (PK) is made up of three components: <zoom-level>:<geo-hash>:<api-key>, and the sort key is the EV charger's current status. 
The three components are as follows:\\n\\n- The zoom level of the map set by the user\\n- The [geohash](https://en.wikipedia.org/wiki/Geohash) computed based on the map tile in the user’s viewport area (at every zoom level, the map of Earth is divided into multiple tiles, where each tile can be represented as a geohash)\\n- The API key to identify the API user\\n\\n\\n\\nWhenever an EV charger's status changes, the writer updates the counters (increment or decrement) for each filter condition and charger status at all zoom levels. With this model, the reader can query pre-clustered data with a single direct partition hit for all the map tiles viewable by the user at the given zoom level.\\n\\nThe DynamoDB model helped us gain 45 times greater read performance for our geo clustering use case. However, it also added extra work on the writer side to pre-compute numbers and update multiple rows when the status of a single EV charger is updated. The following table summarizes our results.\\n\\n\\n\\n\\n\\n#### **Experiment 3: Use AWS Lambda@Edge and AWS Wavelength for better network performance**\\n\\nWe recommended that Plugsurfing use [Lambda@Edge](https://aws.amazon.com/lambda/edge/) and [AWS Wavelength](https://aws.amazon.com/wavelength/) to optimize network performance by shifting some of the APIs to the edge, closer to the user. The EV car dashboard can use the same 5G network connectivity to invoke Plugsurfing APIs with AWS Wavelength.\\n\\n\\n#### **Post-prototype architecture**\\n\\n\\nThe post-prototype architecture used [purpose-built databases on AWS](https://aws.amazon.com/products/databases/) to achieve better performance across all four use cases. We looked at the results and split the workload based on which database performs best for each use case. This approach optimized performance and cost, but added complexity for readers and writers. 
The final experiment summary represents the database fits for the given use cases that provide the best performance (highlighted in orange).\\n\\nPlugsurfing has already implemented a short-term plan (light green) as an immediate action post-prototype and plans to implement mid-term and long-term actions (dark green) in the future.\\n\\n\\n\\nThe following diagram illustrates the updated architecture.\\n\\n\\n\\n\\n#### **Conclusion**\\n\\n\\nPlugsurfing was able to achieve a 70% cost reduction over their legacy setup with two-times better performance by using purpose-built databases like DynamoDB, Aurora PostgreSQL, and AWS Graviton based instances for Amazon OpenSearch Service. They achieved the following results:\\n\\n- The radius search and radius search with filtering use cases achieved better performance using Amazon OpenSearch Service on AWS Graviton with a denormalized document structure\\n- The square search use case performed better using Aurora PostgreSQL, where we used the [PostGIS](http://postgis.net/) extension for geo square queries\\n- The geo clustering search use case performed better using DynamoDB\\n\\nLearn more about [AWS Graviton](https://aws.amazon.com/ec2/graviton/) instances and [purpose-built databases on AWS](https://aws.amazon.com/products/databases/), and let us know how we can help optimize your workload on AWS.\\n\\n\\n##### **About the Author**\\n\\n\\n\\nAnand Shah is a Big Data Prototyping Solution Architect at AWS. He works with AWS customers and their engineering teams to build prototypes using AWS Analytics services and purpose-built databases. Anand helps customers solve the most challenging problems using art-of-the-possible technology. He enjoys beaches in his leisure time.","render":"<p><a href=\\"https://plugsurfing.com/\\" target=\\"_blank\\">Plugsurfing</a> aligns the entire car charging ecosystem—drivers, charging point operators, and carmakers—within a single platform. 
The over 1 million drivers connected to the Plugsurfing Power Platform benefit from a network of over 300,000 charging points across Europe. Plugsurfing serves charging point operators with a backend cloud software for managing everything from country-specific regulations to providing diverse payment options for customers. Carmakers benefit from white label solutions as well as deeper integrations with their in-house technology. The platform-based ecosystem has already processed more than 18 million charging sessions. Plugsurfing was acquired fully by Fortum Oyj in 2018.</p>\\n<p>Plugsurfing uses <a href=\\"https://aws.amazon.com/opensearch-service/\\" target=\\"_blank\\">Amazon OpenSearch Service</a> as a central data store to store 300,000 charging stations’ information and to power search and filter requests coming from mobile, web, and connected car dashboard clients. With the increasing usage, Plugsurfing created multiple read replicas of an OpenSearch Service cluster to meet demand and scale. Over time and with the increase in demand, this solution started to become cost exhaustive and limited in terms of cost performance benefit.</p>\\n<p>AWS EMEA Prototyping Labs collaborated with the Plugsurfing team for 4 weeks on a hands-on prototyping engagement to solve this problem, which resulted in 70% cost savings and doubled the performance benefit over the current solution. This post shows the overall approach and ideas we tested with Plugsurfing to achieve the results.</p>\n<h4><a id=\\"The_challenge_Scaling_higher_transactions_per_second_while_keeping_costs_under_control_7\\"></a><strong>The challenge: Scaling higher transactions per second while keeping costs under control</strong></h4>\\n<p>One of the key issues of the legacy solution was keeping up with higher transactions per second (TPS) from APIs while keeping costs low. 
The majority of the cost was coming from the OpenSearch Service cluster, because the mobile, web, and EV car dashboards use different APIs for different use cases, but all query the same cluster. The solution to achieve higher TPS with the legacy solution was to scale the OpenSearch Service cluster.</p>\n<p>The following figure illustrates the legacy architecture.</p>\n<p><img src=\\"https://dev-media.amazoncloud.cn/b7dfb1df21084b3e89b8d2c53e08daf2_image.png\\" alt=\\"image.png\\" /></p>\n<p>Plugsurfing APIs are responsible for serving data for four different use cases:</p>\n<ul>\\n<li><strong>Radius search</strong> – Find all the EV charging stations (latitude/longitude) with in x km radius from the point of interest (or current location on GPS).</li>\\n<li><strong>Square search</strong> – Find all the EV charging stations within a box of length x width, where the point of interest (or current location on GPS) is at the center.</li>\\n<li><strong>Geo clustering search</strong> – Find all the EV charging stations clustered (grouped) by their concentration within a given area. 
For example, searching all EV chargers in all of Germany results in something like 50 in Munich and 100 in Berlin.</li>\\n<li><strong>Radius search with filtering</strong> – Filter the results by EV charger that are available or in use by plug type, power rating, or other filters.</li>\\n</ul>\n<p>The OpenSearch Service domain configuration was as follows:</p>\n<ul>\\n<li>m4.10xlarge.search x 4 nodes</li>\n<li>Elasticsearch 7.10 version</li>\n<li>A single index to store 300,000 EV charger locations with five shards and one replica</li>\n<li>A nested document structure</li>\n</ul>\\n<p>The following code shows the example document</p>\n<pre><code class=\\"lang-\\">{\\n "locationId":"location:1",\\n "location":{\\n "latitude":32.1123,\\n "longitude":-9.2523\\n },\\n "adress":"parking lot 1",\\n "chargers":[\\n {\\n "chargerId":"location:1:charger:1",\\n "connectors":[\\n {\\n "connectorId":"location:1:charger:1:connector:1",\\n "status":"AVAILABLE",\\n "plug_type":"Type A"\\n }\\n ]\\n }\\n ]\\n}\\n</code></pre>\\n<h4><a id=\\"Solution_overview_55\\"></a><strong>Solution overview</strong></h4>\\n<p>AWS EMEA Prototyping Labs proposed an experimentation approach to try three high-level ideas for performance optimization and to lower overall solution costs.</p>\n<p>We launched an <a href=\\"http://aws.amazon.com/ec2\\" target=\\"_blank\\">Amazon Elastic Compute Cloud</a> (EC2) instance in a prototyping AWS account to host a benchmarking tool based on <a href=\\"https://k6.io/\\" target=\\"_blank\\">k6</a> (an open-source tool that makes load testing simple for developers and QA engineers) Later, we used scripts to dump and restore production data to various databases, transforming it to fit with different data models. Then we ran k6 scripts to run and record performance metrics for each use case, database, and data model combination. 
We also used the <a href=\\"https://calculator.aws/#/\\" target=\\"_blank\\">AWS Pricing Calculator</a> to estimate the cost of each experiment.</p>\\n<h4><a id=\\"Experiment_1_Use_AWS_Graviton_and_optimize_OpenSearch_Service_domain_configuration_63\\"></a><strong>Experiment 1: Use AWS Graviton and optimize OpenSearch Service domain configuration</strong></h4>\\n<p>We benchmarked a replica of the legacy OpenSearch Service domain setup in a prototyping environment to baseline performance and costs. Next, we analyzed the current cluster setup and recommended testing the following changes:</p>\n<ul>\\n<li>Use <a href=\\"https://aws.amazon.com/pm/ec2-graviton\\" target=\\"_blank\\">AWS Graviton</a> based memory optimized EC2 instances (r6g) x 2 nodes in the cluster</li>\\n<li>Reduce the number of shards from five to one, given the volume of data (all documents) is less than 1 GB</li>\n<li>Increase the refresh interval configuration from the default 1 second to 5 seconds</li>\n<li>Denormalize the full document; if not possible, then denormalize all the fields that are part of the search query</li>\n<li>Upgrade to Amazon OpenSearch Service 1.0 from Elasticsearch 7.10</li>\n</ul>\\n<p>Plugsurfing created multiple new OpenSearch Service domains with the same data and benchmarked them against the legacy baseline to obtain the following results. 
The row in yellow represents the baseline from the legacy setup; the rows in green represent the best outcome out of all experiments performed for the given use cases.</p>\n<p><img src=\\"https://dev-media.amazoncloud.cn/27656410431147498cf0f107819772ac_image.png\\" alt=\\"image.png\\" /></p>\n<p>Plugsurfing was able to roughly double performance (a 95% improvement) across the radius and filtering use cases with this experiment.</p>\n<h4><a id=\\"Experiment_2_Use_purposebuilt_databases_on_AWS_for_different_use_cases_81\\"></a><strong>Experiment 2: Use purpose-built databases on AWS for different use cases</strong></h4>\\n<p>We tested Amazon OpenSearch Service, <a href=\\"https://aws.amazon.com/rds/aurora/postgresql-features/\\" target=\\"_blank\\">Amazon Aurora PostgreSQL-Compatible Edition</a>, and <a href=\\"https://aws.amazon.com/dynamodb/\\" target=\\"_blank\\">Amazon DynamoDB</a> extensively with many data models for different use cases.</p>\\n<p>We tested the square search use case with an Aurora PostgreSQL cluster with a db.r6g.2xlarge single node as the reader and a db.r6g.large single node as the writer.<br />\\nThe square search used a single PostgreSQL table configured via the following steps:</p>\n<ol>\\n<li>Create the geo search table with geography as the data type to store latitude/longitude:</li>\n</ol>\\n<pre><code class=\\"lang-\\">CREATE TYPE status AS ENUM ('available', 'inuse', 'out-of-order');\\n\\nCREATE TABLE IF NOT EXISTS square_search\\n(\\nid serial PRIMARY KEY,\\ngeog geography(POINT),\\nstatus status,\\ndata text -- Can be used as json data type, or add extra fields as flat json\\n);\\n</code></pre>\\n<ol start=\\"2\\">\\n<li>Create an index on the geog field:</li>\n</ol>\\n<pre><code class=\\"lang-\\">CREATE INDEX global_points_gix ON square_search USING GIST (geog);\\n</code></pre>\\n<ol start=\\"3\\">\\n<li>Query the data for the square search use case:</li>\n</ol>\\n<pre><code class=\\"lang-\\">SELECT id, ST_AsText(geog), status, data from 
square_search\\nwhere geog && ST_MakeEnvelope(32.5,9,32.8,11,4326) limit 100;\\n</code></pre>\\n<p>We achieved an eight-times greater improvement in TPS for the square search use case, as shown in the following table.</p>\n<p><img src=\\"https://dev-media.amazoncloud.cn/9c1465f9048145e3a3c4f7f37df35644_image.png\\" alt=\\"image.png\\" /></p>\n<p>We tested the geo clustering search use case with a DynamoDB model. The partition key (PK) is made up of three components: <zoom-level>:<geo-hash>:<api-key>, and the sort key is the EV charger current status. We examined the following:</p>\n<ul>\\n<li>The zoom level of the map set by the user</li>\n<li>The <a href=\\"https://en.wikipedia.org/wiki/Geohash\\" target=\\"_blank\\">geo hash</a> computed based on the map tile in the user’s view port area (at every zoom level, the map of Earth is divided into multiple tiles, where each tile can be represented as a geohash)</li>\\n<li>The API key to identify the API user</li>\n</ul>\\n<p><img src=\\"https://dev-media.amazoncloud.cn/2eeb52b05e244b73b3dcc0d7a16c87b7_image.png\\" alt=\\"image.png\\" /></p>\n<p>The writer updates the counters (increment or decrement) against each filter condition and charger status whenever the EV charger status is updated at all zoom levels. With this model, the reader can query pre-clustered data with a single direct partition hit for all the map tiles viewable by the user at the given zoom level.</p>\n<p>The DynamoDB model helped us gain a 45-times greater read performance for our geo clustering use case. However, it also added extra work on the writer side to pre-compute numbers and update multiple rows when the status of a single EV charger is updated. 
The following table summarizes our results.</p>\n<p><img src=\\"https://dev-media.amazoncloud.cn/112939a014384047b1c82aadd00abf35_image.png\\" alt=\\"image.png\\" /></p>\n<h4><a id=\\"Experiment_3_Use_AWS_LambdaEdge_and_AWS_Wavelength_for_better_network_performance_135\\"></a><strong>Experiment 3: Use AWS Lambda@Edge and AWS Wavelength for better network performance</strong></h4>\\n<p>We recommended that Plugsurfing use <a href=\\"https://aws.amazon.com/lambda/edge/\\" target=\\"_blank\\">Lambda@Edge</a> and <a href=\\"https://aws.amazon.com/wavelength/\\" target=\\"_blank\\">AWS Wavelength</a> to optimize network performance by shifting some of the APIs to the edge, closer to the user. The EV car dashboard can use the same 5G network connectivity to invoke Plugsurfing APIs with AWS Wavelength.</p>\\n<h4><a id=\\"Postprototype_architecture_140\\"></a><strong>Post-prototype architecture</strong></h4>\\n<p>The post-prototype architecture used <a href=\\"https://aws.amazon.com/products/databases/\\" target=\\"_blank\\">purpose-built databases on AWS</a> to achieve better performance across all four use cases. We looked at the results and split the workload based on which database performs best for each use case. This approach optimized performance and cost, but added complexity for readers and writers. 
The final experiment summary represents the database fits for the given use cases that provide the best performance (highlighted in orange).</p>\\n<p>Plugsurfing has already implemented a short-term plan (light green) as an immediate action post-prototype and plans to implement mid-term and long-term actions (dark green) in the future.</p>\n<p><img src=\\"https://dev-media.amazoncloud.cn/a5938092055549e3a567cd5066a2eacb_image.png\\" alt=\\"image.png\\" /></p>\n<p>The following diagram illustrates the updated architecture.</p>\n<p><img src=\\"https://dev-media.amazoncloud.cn/5ae936b08e9d4c2b9c0cb9f3c163aff2_image.png\\" alt=\\"image.png\\" /></p>\n<h4><a id=\\"Conclusion_154\\"></a><strong>Conclusion</strong></h4>\\n<p>Plugsurfing was able to achieve a 70% cost reduction over their legacy setup with two-times better performance by using purpose-built databases like DynamoDB, Aurora PostgreSQL, and AWS Graviton based instances for Amazon OpenSearch Service. They achieved the following results:</p>\n<ul>\\n<li>The radius search and radius search with filtering use cases achieved better performance using Amazon OpenSearch Service on AWS Graviton with a denormalized document structure</li>\n<li>The square search use case performed better using Aurora PostgreSQL, where we used the <a href=\\"http://postgis.net/\\" target=\\"_blank\\">PostGIS</a> extension for geo square queries</li>\\n<li>The geo clustering search use case performed better using DynamoDB</li>\n</ul>\\n<p>Learn more about <a href=\\"https://aws.amazon.com/ec2/graviton/\\" target=\\"_blank\\">AWS Graviton</a> instances and <a href=\\"https://aws.amazon.com/products/databases/\\" target=\\"_blank\\">purpose-built databases on AWS</a>, and let us know how we can help optimize your workload on AWS.</p>\\n<h5><a id=\\"About_the_Author_166\\"></a><strong>About the Author</strong></h5>\\n<p><img src=\\"https://dev-media.amazoncloud.cn/0ae553dd9aa846729b000158c4ccac13_image.png\\" alt=\\"image.png\\" 
/></p>\n<p>Anand Shah is a Big Data Prototyping Solution Architect at AWS. He works with AWS customers and their engineering teams to build prototypes using AWS Analytics services and purpose-built databases. Anand helps customers solve the most challenging problems using art-of-the-possible technology. He enjoys beaches in his leisure time.</p>\n"}
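
The geo clustering key design from Experiment 2 (`<zoom-level>:<geo-hash>:<api-key>` as the partition key, charger status as the sort key, counters maintained by the writer) can be illustrated with a minimal Python sketch. This is not Plugsurfing's implementation: the function names, the in-memory `counters` dictionary standing in for the DynamoDB table, the `zoom_precisions` mapping from zoom level to geohash length, and the choice to derive the geohash from the charger's coordinates are all assumptions made for illustration. Only the key layout and the increment/decrement idea come from the post.

```python
from collections import defaultdict

BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"  # standard geohash alphabet


def geohash_encode(lat: float, lon: float, precision: int) -> str:
    """Encode a point as a geohash of `precision` characters."""
    lat_lo, lat_hi = -90.0, 90.0
    lon_lo, lon_hi = -180.0, 180.0
    chars = []
    bits, bit_count = 0, 0
    use_lon = True  # bits alternate, starting with longitude
    while len(chars) < precision:
        if use_lon:
            mid = (lon_lo + lon_hi) / 2
            if lon >= mid:
                bits = (bits << 1) | 1
                lon_lo = mid
            else:
                bits <<= 1
                lon_hi = mid
        else:
            mid = (lat_lo + lat_hi) / 2
            if lat >= mid:
                bits = (bits << 1) | 1
                lat_lo = mid
            else:
                bits <<= 1
                lat_hi = mid
        use_lon = not use_lon
        bit_count += 1
        if bit_count == 5:  # every 5 bits become one base32 character
            chars.append(BASE32[bits])
            bits, bit_count = 0, 0
    return "".join(chars)


def partition_key(zoom_level: int, geohash: str, api_key: str) -> str:
    """Build the <zoom-level>:<geo-hash>:<api-key> partition key."""
    return f"{zoom_level}:{geohash}:{api_key}"


# Hypothetical in-memory stand-in for the table:
# {partition key: {status (sort key): charger count}}
counters = defaultdict(lambda: defaultdict(int))


def on_status_change(lat, lon, api_key, old_status, new_status, zoom_precisions):
    """Writer side: adjust the pre-computed counters at every zoom level.

    `zoom_precisions` maps zoom level -> geohash length (an assumed mapping).
    """
    for zoom, precision in zoom_precisions.items():
        pk = partition_key(zoom, geohash_encode(lat, lon, precision), api_key)
        if old_status is not None:
            counters[pk][old_status] -= 1
        counters[pk][new_status] += 1
```

With this layout a reader only needs the partition keys of the tiles visible at the current zoom level, at the cost of the writer touching one item per zoom level on every status change, which is the trade-off the post describes.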

How Plugsurfing doubled performance and reduced cost by 70% with purpose-built databases and AWS Graviton

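Overseas Selection

The Overseas Selection collection brings together high-quality technical content about Amazon Web Services from around the world. "AWS", as mentioned in the content, is an abbreviation of "Amazon Web Services" and is not displayed as a trademark on this website.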
