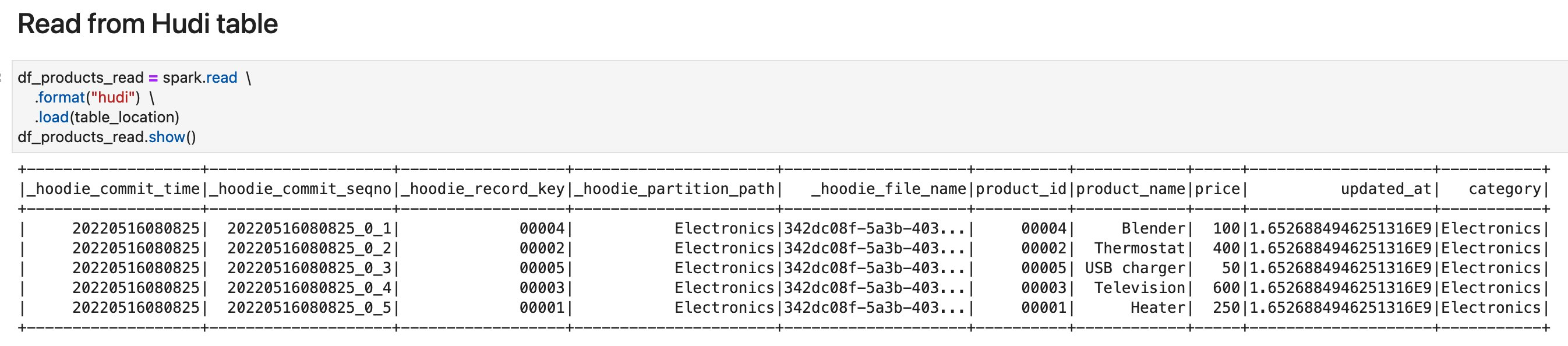

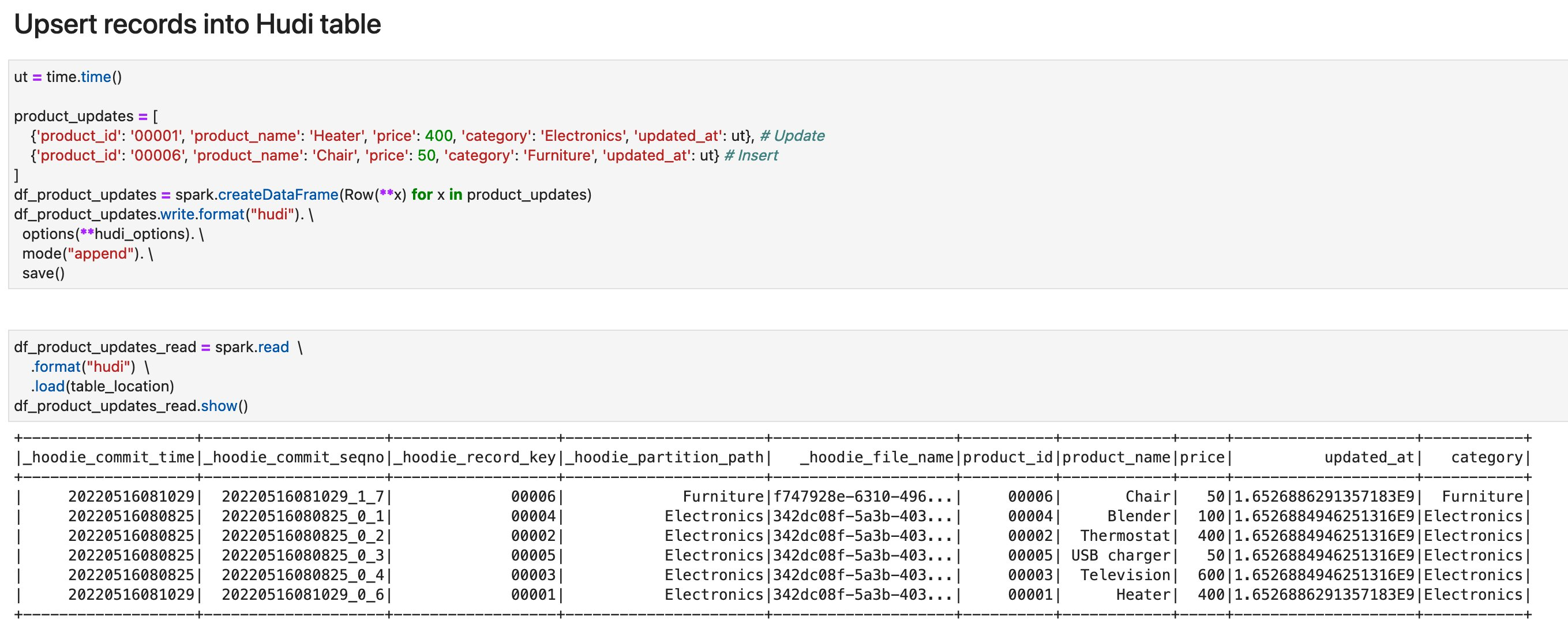

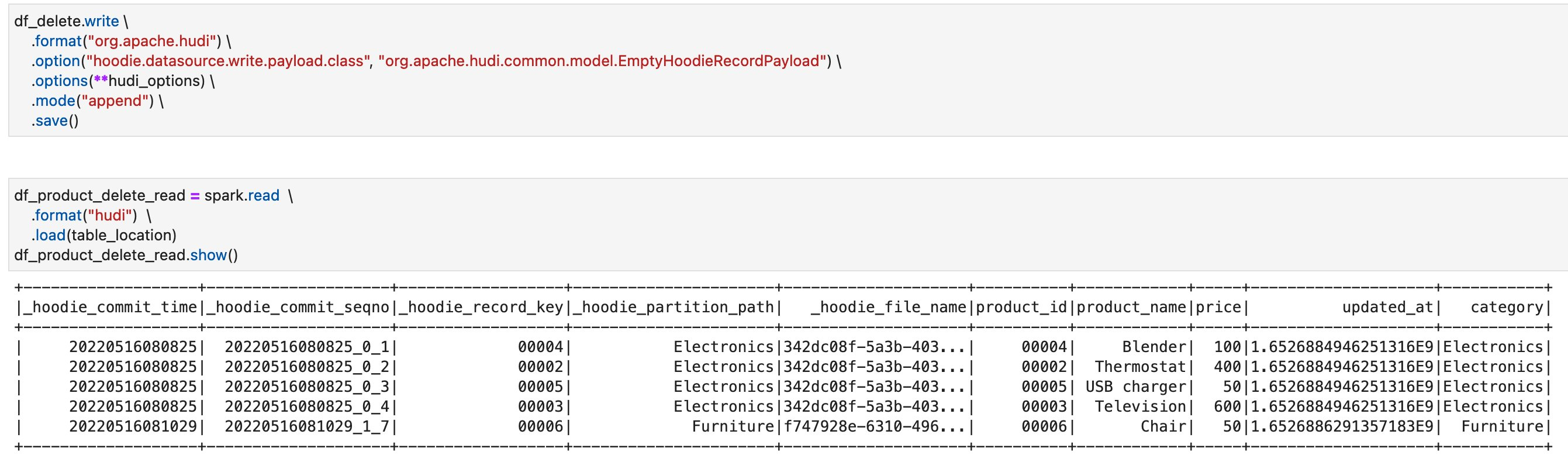

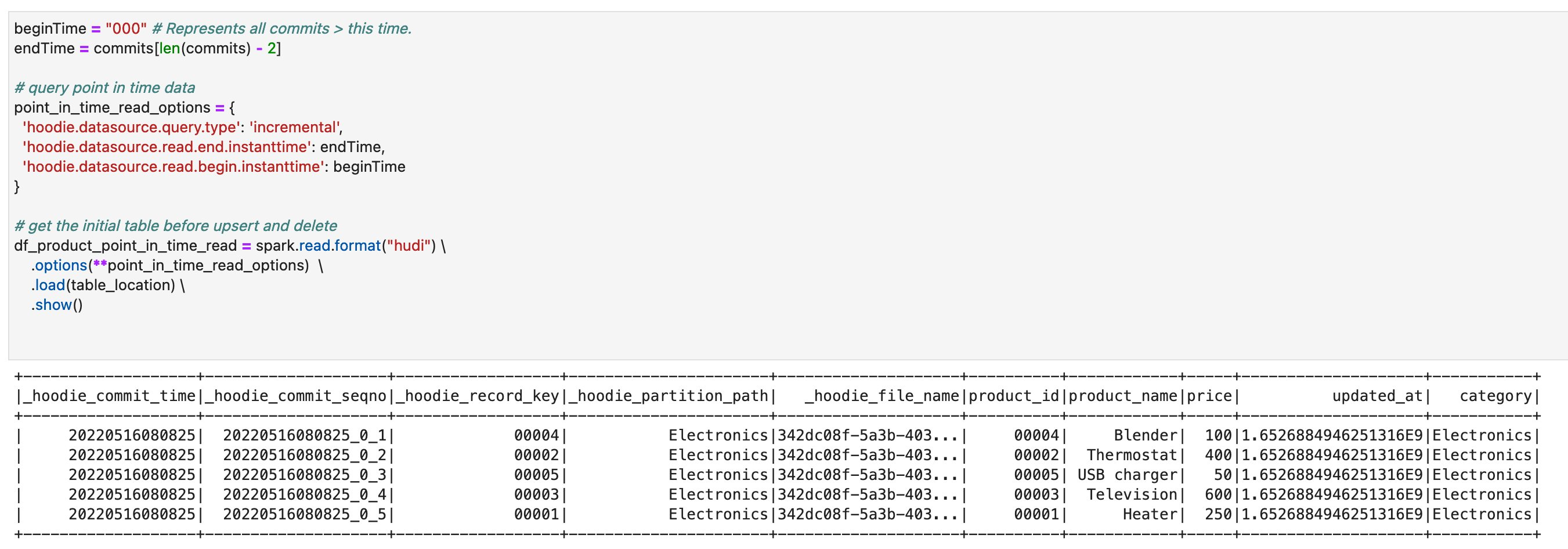

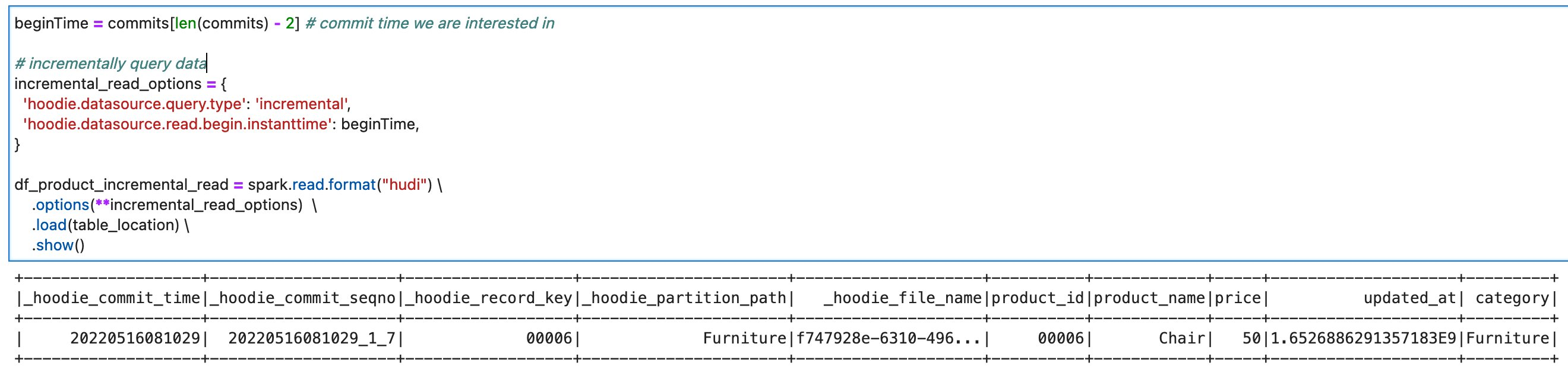

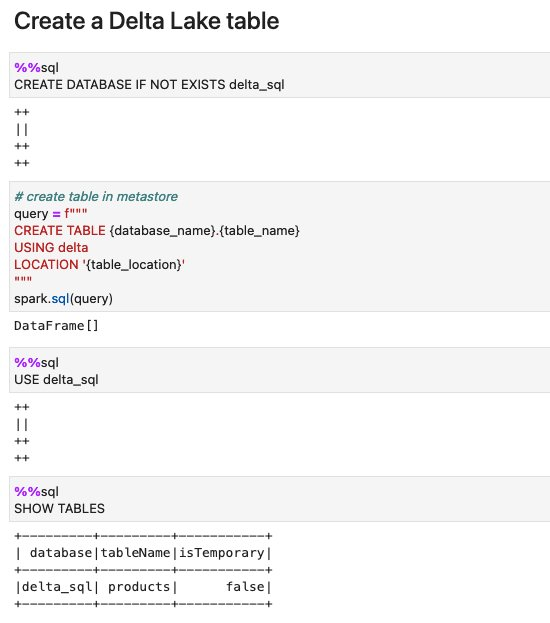

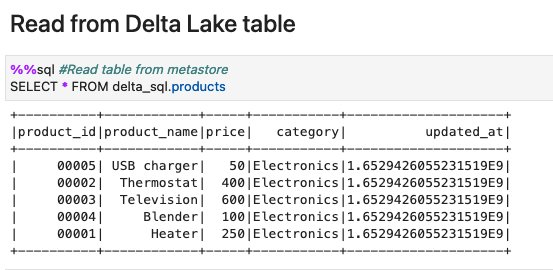

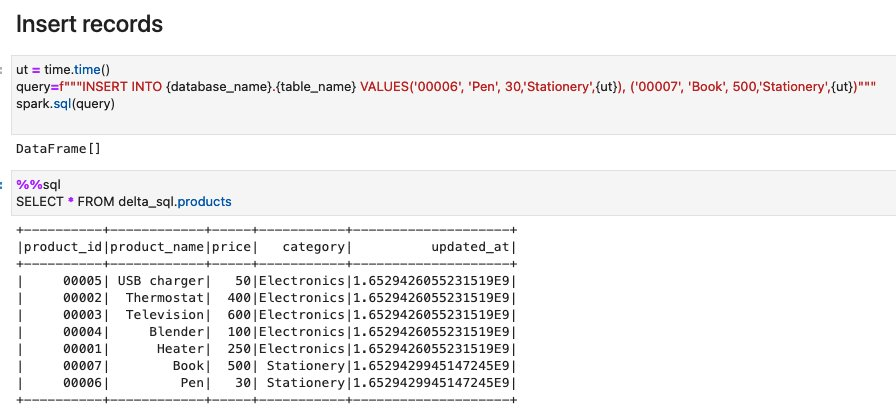

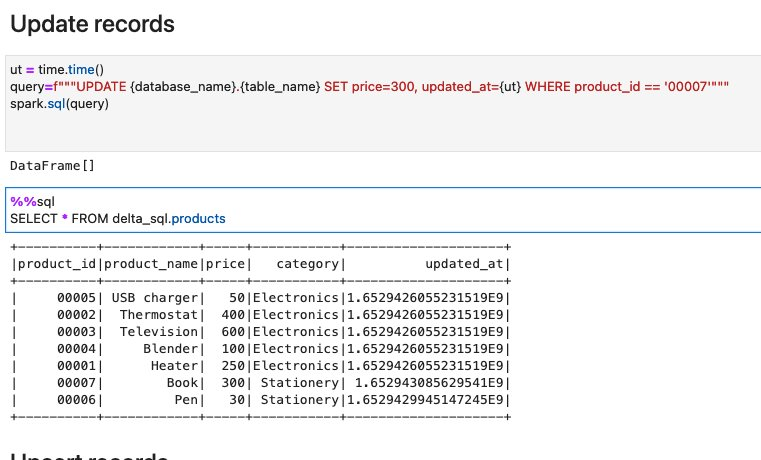

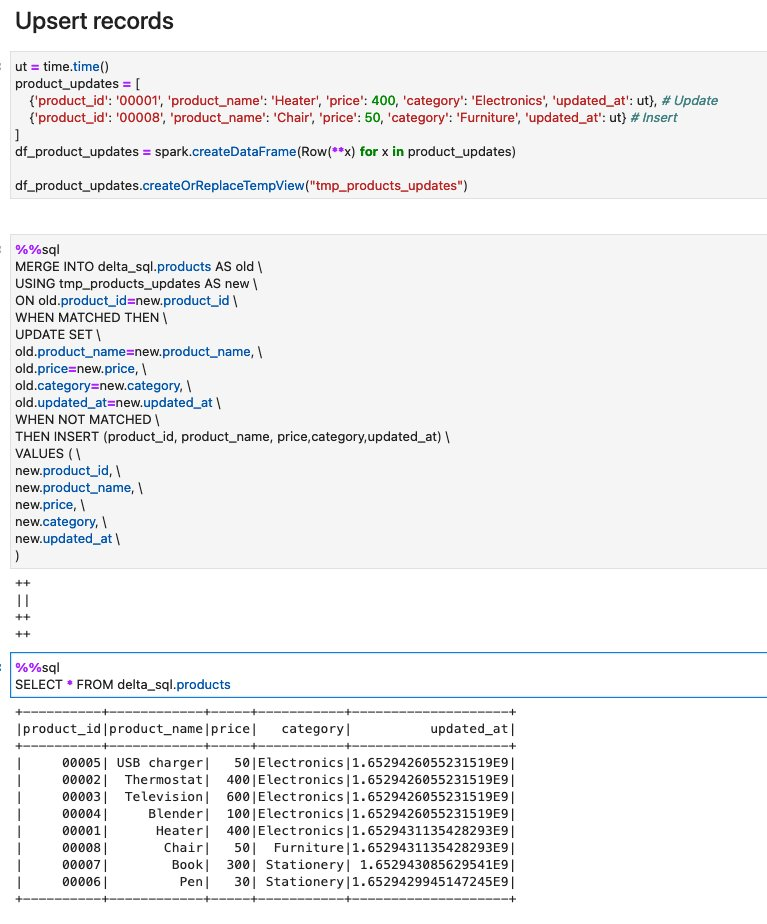

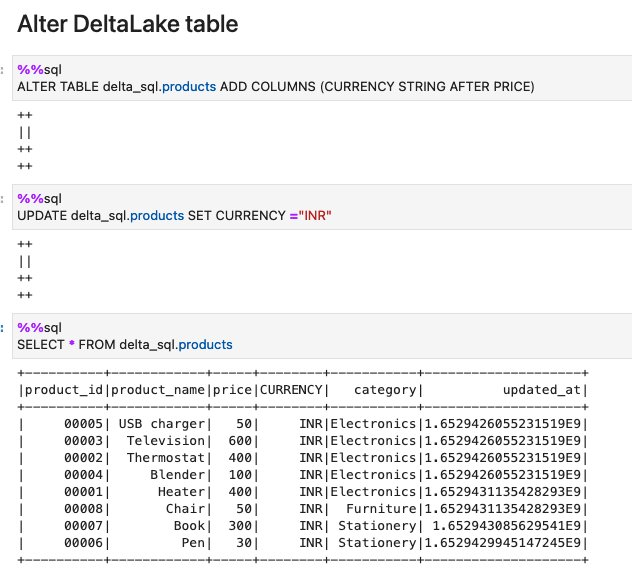

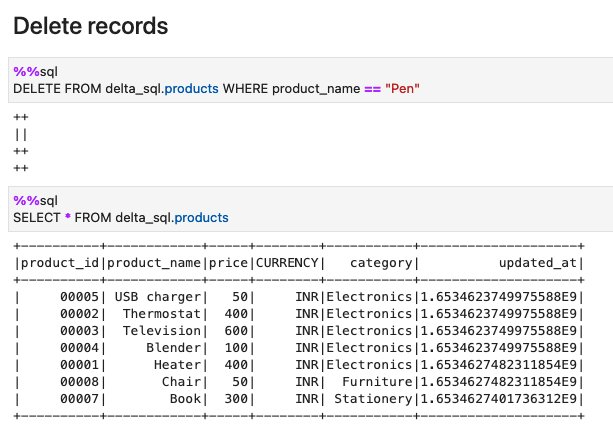

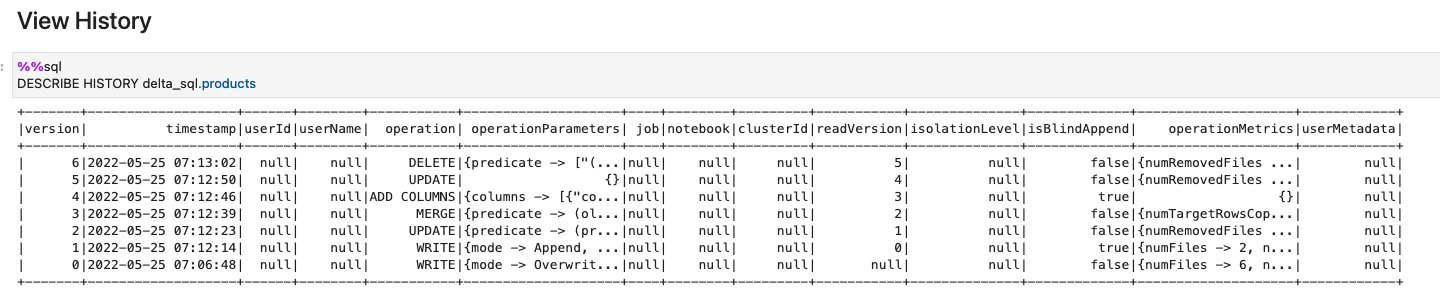

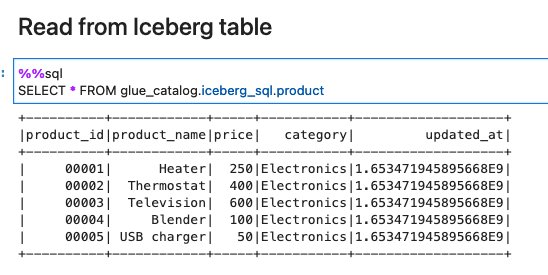

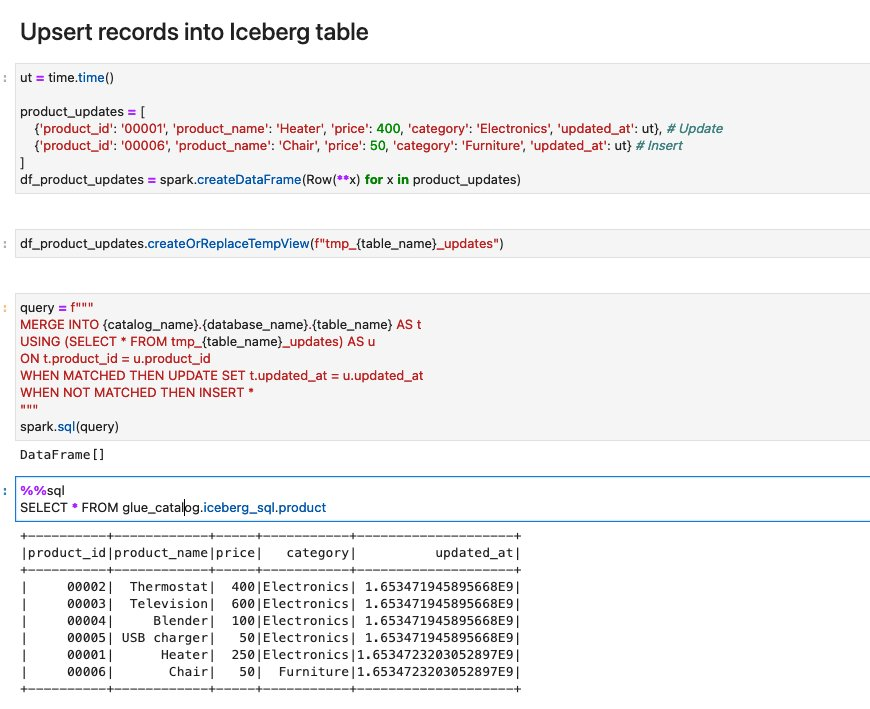

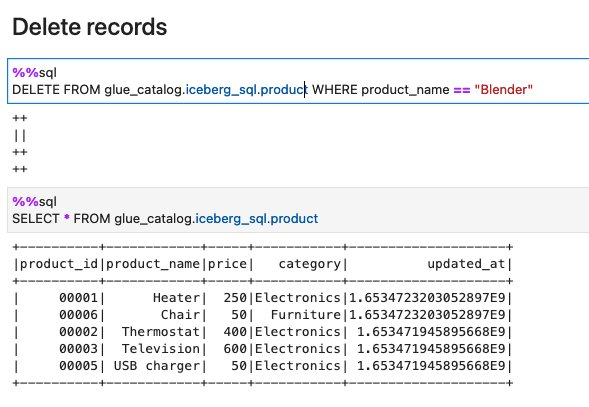

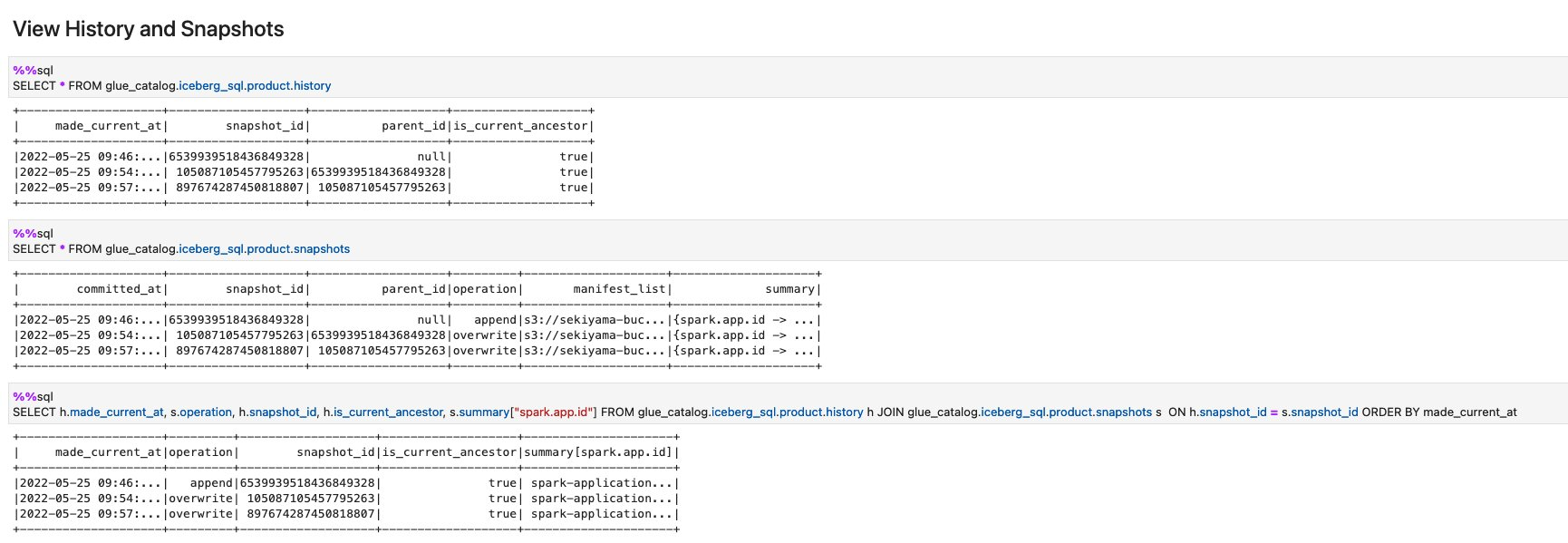

{"value":"Cloud data lakes provides a scalable and low-cost data repository that enables customers to easily store data from a variety of data sources. Data scientists, business analysts, and line of business users leverage data lake to explore, refine, and analyze petabytes of data. [Amazon Web Services Glue](https://aws.amazon.com/glue/) is a serverless data integration service that makes it easy to discover, prepare, and combine data for analytics, machine learning, and application development. Customers use Amazon Web Services Glue to discover and extract data from a variety of data sources, enrich and cleanse the data before storing it in data lakes and data warehouses.\n\nOver years, many table formats have emerged to support ACID transaction, governance, and catalog usecases. For example, formats such as [Apache Hudi](https://hudi.apache.org/), [Delta Lake](https://delta.io/), [Apache Iceberg](https://iceberg.apache.org/), and [Amazon Web Services Lake Formation governed tables](https://docs.aws.amazon.com/lake-formation/latest/dg/governed-tables-main.html), enabled customers to run ACID transactions on [Amazon Simple Storage Service](https://docs.aws.amazon.com/lake-formation/latest/dg/governed-tables-main.html) ([Amazon S3](https://aws.amazon.com/cn/s3/?trk=cndc-detail)). Amazon Web Services Glue supports these table formats for batch and streaming workloads. This post focuses on Apache Hudi, Delta Lake, and Apache Iceberg, and summarizes how to use them in Amazon Web Services Glue 3.0 jobs. If you’re interested in [Amazon Web Services Lake Formation](https://aws.amazon.com/lake-formation/) governed tables, then visit [Effective data lakes using Amazon Web Services Lake Formation series](https://aws.amazon.com/blogs/big-data/part-1-effective-data-lakes-using-aws-lake-formation-part-1-getting-started-with-governed-tables/).\n\n**Process Apache Hudi, Delta Lake, Apache Iceberg dataset at scale**\n\n- **Part 1: Amazon Web Services Glue Studio Notebook**\n- Part 2: [Using Amazon Web Services Glue Studio Visual Editor](https://aws.amazon.com/blogs/big-data/part-2-integrate-apache-hudi-delta-lake-apache-iceberg-dataset-at-scale-using-aws-glue-studio-visual-editor/)\t\n\n\n#### **Bring libraries for the data lake formats**\n\n\nToday, there are three available options for bringing libraries for the data lake formats on the Amazon Web Services Glue job platform: Marketplace connectors, custom connectors (BYOL), and extra library dependencies.\n\n##### **Marketplace connectors**\n\n[Amazon Web Services Glue Connector Marketplace](https://us-east-1.console.aws.amazon.com/gluestudio/home?region=us-east-1#/marketplace) is the centralized repository for cataloging the available Glue connectors provided by multiple vendors. You can subscribe to more than 60 connectors offered in Amazon Web Services Glue Connector Marketplace as of today. There are marketplace connectors available for [Apache Hudi](https://aws.amazon.com/marketplace/pp/prodview-6rofemcq6erku), [Delta Lake](https://aws.amazon.com/marketplace/pp/prodview-seypofzqhdueq), and [Apache Iceberg](https://aws.amazon.com/marketplace/pp/prodview-iicxofvpqvsio). Furthermore, the marketplace connectors are hosted on [Amazon Elastic Container Registry (Amazon ECR)](https://aws.amazon.com/ecr/) repository, and downloaded to the Glue job system in runtime. When you prefer simple user experience by subscribing to the connectors and using them on your Glue ETL jobs, the marketplace connector is a good option.\n\n\n##### **Custom connectors as bring-your-own-connector (BYOC)**\n\n\nAmazon Web Services Glue custom connector enables you to upload and register your own libraries located in [Amazon S3](https://aws.amazon.com/cn/s3/?trk=cndc-detail) as Glue connectors. You have more control over the library versions, patches, and dependencies. Since it uses your S3 bucket, you can configure the S3 bucket policy to share the libraries only with specific users, you can configure private network access to download the libraries using VPC Endpoints, etc. When you prefer having more control over those configurations, the custom connector as BYOC is a good option.\n\n\n##### **Extra library dependencies**\n\n\nThere is another option – to download the data lake format libraries, upload them to your S3 bucket, and add extra library dependencies to them. With this option, you can add libraries directly to the job without a connector and use them. In Glue job, you can configure in Dependent JARs path. In API, it’s the ```--extra-jars``` parameter. In Glue Studio notebook, you can configure in the ```%extra_jars``` magic. To download the relevant JAR files, see the library locations in the section **Create a Custom connection (BYOC)**.\n\n\n#### **Create a Marketplace connection**\n\nTo create a new marketplace connection for Apache Hudi, Delta Lake, or Apache Iceberg, complete the following steps.\n\n##### **Apache Hudi 0.10.1**\n\nComplete the following steps to create a marketplace connection for Apache Hudi 0.10.1:\n\n1. Open Amazon Web Services Glue Studio.\n2. Choose **Connectors**.\n3. Choose **Go to Amazon Web Services Marketplace**.\n4. Search for **Apache Hudi Connector for Amazon Web Services Glue**, and choose **Apache Hudi Connector for Amazon Web Services Glue**.\n5. Choose **Continue to Subscribe**.\n6. Review the **Terms and conditions**, pricing, and other details, and choose the **Accept Terms** button to continue.\n7. Make sure that the subscription is complete and you see the **Effective date** populated next to the product, and then choose **Continue to Configuration**.\n8. For **Delivery Method**, choose ```Glue 3.0```.\n9. For **Software version**, choose ```0.10.1```.\n10. Choose **Continue to Launch**.\n11. Under **Usage instructions**, choose **Activate the Glue connector in Amazon Web Services Glue Studio**. You’re redirected to Amazon Web Services Glue Studio.\n12. For **Name**, enter a name for your connection.\n13. Optionally, choose a VPC, subnet, and security group.\n14. Choose **Create connection**.\n\n##### **Delta Lake 1.0.0**\n\nComplete the following steps to create a marketplace connection for Delta Lake 1.0.0:\n\n1. Open Amazon Web Services Glue Studio.\n2. Choose **Connectors**.\n3. Choose **Go to Amazon Web Services Marketplace**.\n4. Search for **Delta Lake Connector for Amazon Web Services Glue**, and choose **Delta Lake Connector for Amazon Web Services Glue**.\n5. Choose **Continue to Subscribe**.\n6. Review the **Terms and conditions**, pricing, and other details, and choose the **Accept Terms** button to continue.\n7. Make sure that the subscription is complete and you see the **Effective date** populated next to the product, and then choose **Continue to Configuration**.\n8. For **Delivery Method**, choose ```Glue 3.0```.\n9. For **Software version**, choose ```1.0.0-2```.\n10. Choose **Continue to Launch**.\n11. Under **Usage instructions**, choose **Activate the Glue connector in Amazon Web Services Glue Studio**. You’re redirected to Amazon Web Services Glue Studio.\n12. For **Name**, enter a name for your connection.\n13. Optionally, choose a VPC, subnet, and security group.\n14. Choose **Create connection**.\n\n\n##### **Apache Iceberg 0.12.0**\n\n\nComplete the following steps to create a marketplace connection for Apache Iceberg 0.12.0:\n\n1. Open Amazon Web Services Glue Studio.\n2. Choose **Connectors**.\n3. Choose **Go to Amazon Web Services Marketplace**.\n4. Search for **Apache Iceberg Connector for Amazon Web Services Glue**, and choose **Apache Iceberg Connector for Amazon Web Services Glue**.\n5. Choose **Continue to Subscribe**.\n6. Review the **Terms and conditions**, pricing, and other details, and choose the **Accept Terms** button to continue.\n7. Make sure that the subscription is complete and you see the **Effective date** populated next to the product, and then choose **Continue to Configuration**.\n8. For **Delivery Method**, choose ```Glue 3.0```.\n9. For **Software version**, choose ```0.12.0-2```.\n10. Choose **Continue to Launch**.\n11. Under **Usage instructions**, choose **Activate the Glue connector in Amazon Web Services Glue Studio**. You’re redirected to Amazon Web Services Glue Studio.\n12. For **Name**, enter ```iceberg-0120-mp-connection```.\n13. Optionally, choose a VPC, subnet, and security group.\n14. Choose **Create connection**.\n\n\n#### **Create a Custom connection (BYOC)**\n\nYou can create your own custom connectors from JAR files. In this section, you can see the exact JAR files that are used in the marketplace connectors. You can just use the files for your custom connectors for Apache Hudi, Delta Lake, and Apache Iceberg.\n\nTo create a new custom connection for Apache Hudi, Delta Lake, or Apache Iceberg, complete the following steps.\n\n##### **Apache Hudi 0.9.0**\n\nComplete following steps to create a custom connection for Apache Hudi 0.9.0:\n\n1. Download the following JAR files, and upload them to your S3 bucket.\n a.[https://repo1.maven.org/maven2/org/apache/hudi/hudi-spark3-bundle_2.12/0.9.0/hudi-spark3-bundle_2.12-0.9.0.jar](https://repo1.maven.org/maven2/org/apache/hudi/hudi-spark3-bundle_2.12/0.9.0/hudi-spark3-bundle_2.12-0.9.0.jar)\nb.[https://repo1.maven.org/maven2/org/apache/hudi/hudi-utilities-bundle_2.12/0.9.0/hudi-utilities-bundle_2.12-0.9.0.jar](https://repo1.maven.org/maven2/org/apache/hudi/hudi-utilities-bundle_2.12/0.9.0/hudi-utilities-bundle_2.12-0.9.0.jar)\nc.[https://repo1.maven.org/maven2/org/apache/parquet/parquet-avro/1.10.1/parquet-avro-1.10.1.jar](https://repo1.maven.org/maven2/org/apache/parquet/parquet-avro/1.10.1/parquet-avro-1.10.1.jar)\nd.[https://repo1.maven.org/maven2/org/apache/spark/spark-avro_2.12/3.1.1/spark-avro_2.12-3.1.1.jar](https://repo1.maven.org/maven2/org/apache/spark/spark-avro_2.12/3.1.1/spark-avro_2.12-3.1.1.jar)\ne.[https://repo1.maven.org/maven2/org/apache/calcite/calcite-core/1.10.0/calcite-core-1.10.0.jar](https://repo1.maven.org/maven2/org/apache/calcite/calcite-core/1.10.0/calcite-core-1.10.0.jar)\nf.[https://repo1.maven.org/maven2/org/datanucleus/datanucleus-core/4.1.17/datanucleus-core-4.1.17.jar](https://repo1.maven.org/maven2/org/datanucleus/datanucleus-core/4.1.17/datanucleus-core-4.1.17.jar)\ng.[https://repo1.maven.org/maven2/org/apache/thrift/libfb303/0.9.3/libfb303-0.9.3.jar](https://repo1.maven.org/maven2/org/apache/thrift/libfb303/0.9.3/libfb303-0.9.3.jar)\n2. Open Amazon Web Services Glue Studio.\n3. Choose **Connectors**.\n4. Choose **Create custom connector**.\n5. For **Connector S3 URL**, enter comma separated [Amazon S3](https://aws.amazon.com/cn/s3/?trk=cndc-detail) paths for the above JAR files.\n6. For **Name**, enter ```hudi-090-byoc-connector```.\n7. For **Connector Type**, choose ```Spark```.\n8. For **Class name**, enter ```org.apache.hudi```.\n9. Choose **Create connector**.\n10. Choose ```hudi-090-byoc-connector```.\n11. Choose **Create connection**.\n12. For **Name**, enter ```hudi-090-byoc-connection```.\n13. Optionally, choose a VPC, subnet, and security group.\n14. Choose **Create connection**. \n\n##### **Apache Hudi 0.10.1**\n\nComplete the following steps to create a custom connection for Apache Hudi 0.10.1:\n\n1. Download following JAR files, and upload them to your S3 bucket.\n a. [hudi-utilities-bundle_2.12-0.10.1.jar](https://aws-bigdata-blog.s3.amazonaws.com/artifacts/hudi-delta-iceberg/hudi0.10.1/hudi-utilities-bundle_2.12-0.10.1.jar)\n b. [hudi-spark3.1.1-bundle_2.12-0.10.1.jar](https://aws-bigdata-blog.s3.amazonaws.com/artifacts/hudi-delta-iceberg/hudi0.10.1/hudi-spark3.1.1-bundle_2.12-0.10.1.jar)\n c. [spark-avro_2.12-3.1.1.jar](https://repo1.maven.org/maven2/org/apache/spark/spark-avro_2.12/3.1.1/spark-avro_2.12-3.1.1.jar)\n2. Open Amazon Web Services Glue Studio.\n3. Choose **Connectors**.\n4. Choose **Create custom connector**.\n5. For **Connector S3 URL**, enter comma separated [Amazon S3](https://aws.amazon.com/cn/s3/?trk=cndc-detail) paths for the above JAR files.\n6. For **Name**, enter ```hudi-0101-byoc-connector```.\n7. For **Connector Type**, choose Spark.\n8. For **Class name**, enter ```org.apache.hudi```.\n9. Choose **Create connector**.\n10. Choose ```hudi-0101-byoc-connector```.\n11. Choose **Create connection**.\n12. For **Name**, enter ```hudi-0101-byoc-connection```.\n13. Optionally, choose a VPC, subnet, and security group.\n14. Choose **Create connection**.\n\nNote that the above Hudi 0.10.1 installation on Glue 3.0 does not fully support [Merge On Read (MoR) tables](https://hudi.apache.org/docs/concepts/#merge-on-read-table).\n\n\n##### **Delta Lake 1.0.0**\n\n\nComplete the following steps to create a custom connector for Delta Lake 1.0.0:\n\n1. Download the following JAR file, and upload it to your S3 bucket.\n a. [https://repo1.maven.org/maven2/io/delta/delta-core_2.12/1.0.0/delta-core_2.12-1.0.0.jar](https://repo1.maven.org/maven2/io/delta/delta-core_2.12/1.0.0/delta-core_2.12-1.0.0.jar)\n2. Open Amazon Web Services Glue Studio.\n3. Choose **Connectors**.\n4. Choose **Create custom connector**.\n5. For **Connector S3 URL**, enter a comma separated [Amazon S3](https://aws.amazon.com/cn/s3/?trk=cndc-detail) path for the above JAR file.\n6. For **Name**, enter ```delta-100-byoc-connector```.\n7. For **Connector Type**, choose ```Spark```.\n8. For **Class name**, enter ```org.apache.spark.sql.delta.sources.DeltaDataSource```.\n9. Choose **Create connector**.\n10. Choose ```delta-100-byoc-connector```.\n11. Choose **Create connection**.\n12. For **Name**, enter ```delta-100-byoc-connection```.\n13. Optionally, choose a VPC, subnet, and security group.\n14. Choose **Create connection**.\n\n\n##### **Apache Iceberg 0.12.0**\n\nComplete the following steps to create a custom connection for Apache Iceberg 0.12.0:\n\n1. Download the following JAR files, and upload them to your S3 bucket.\n a. [https://search.maven.org/remotecontent?filepath=org/apache/iceberg/iceberg-spark3-runtime/0.12.0/iceberg-spark3-runtime-0.12.0.jar](https://search.maven.org/remotecontent?filepath=org/apache/iceberg/iceberg-spark3-runtime/0.12.0/iceberg-spark3-runtime-0.12.0.jar)\nb. [https://repo1.maven.org/maven2/software/amazon/awssdk/bundle/2.15.40/bundle-2.15.40.jar](https://repo1.maven.org/maven2/software/amazon/awssdk/bundle/2.15.40/bundle-2.15.40.jar)\nc. [https://repo1.maven.org/maven2/software/amazon/awssdk/url-connection-client/2.15.40/url-connection-client-2.15.40.jar](https://repo1.maven.org/maven2/software/amazon/awssdk/url-connection-client/2.15.40/url-connection-client-2.15.40.jar)\n2. Open Amazon Web Services Glue Studio.\n3. Choose **Connectors**.\n4. Choose **Create custom connector**.\n5. For **Connector S3 URL**, enter comma separated [Amazon S3](https://aws.amazon.com/cn/s3/?trk=cndc-detail) paths for the above JAR files.\n6. For **Name**, enter ```iceberg-0120-byoc-connector```.\n7. For **Connector Type**, choose ```Spark```.\n8. For **Class name**, enter ```iceberg```.\n9. Choose **Create connector**.\n10. Choose ```iceberg-0120-byoc-connector```.\n11. Choose **Create connection**.\n12. For **Name**, enter ```iceberg-0120-byoc-connection```.\n13. Optionally, choose a VPC, subnet, and security group.\n14. Choose **Create connection**.\n\n\n##### **Apache Iceberg 0.13.1**\n\n1. Download the following JAR files, and upload them to your S3 bucket.\na. [iceberg-spark-runtime-3.1_2.12-0.13.1.jar](https://aws-bigdata-blog.s3.amazonaws.com/artifacts/hudi-delta-iceberg/iceberg0.13.1/iceberg-spark-runtime-3.1_2.12-0.13.1.jar)\nb. [https://repo1.maven.org/maven2/software/amazon/awssdk/bundle/2.17.161/bundle-2.17.161.jar](https://repo1.maven.org/maven2/software/amazon/awssdk/bundle/2.17.161/bundle-2.17.161.jar)\nc. [https://repo1.maven.org/maven2/software/amazon/awssdk/url-connection-client/2.17.161/url-connection-client-2.17.161.jar](https://repo1.maven.org/maven2/software/amazon/awssdk/url-connection-client/2.17.161/url-connection-client-2.17.161.jar)\n2. Open Amazon Web Services Glue Studio.\n3. Choose **Connectors**.\n4. Choose **Create custom connector**.\n5. For **Connector S3 URL**, enter comma separated [Amazon S3](https://aws.amazon.com/cn/s3/?trk=cndc-detail) paths for the above JAR files.\n6. For **Name**, enter ```iceberg-0131-byoc-connector```.\n7. For **Connector Type**, choose ``Spark``.\\n8. For **Class name**, enter ```iceberg```.\\n9. Choose **Create connector**.\\n10. Choose ```iceberg-0131-byoc-connector```.\\n11. Choose Create connection.\\n12. For Name, enter ```iceberg-0131-byoc-connection```.\\n13. Optionally, choose a VPC, subnet, and security group.\\n14. Choose Create connection.\\n\\n\\n#### **Prerequisites**\\n\\n\\nTo continue this tutorial, you must create the following Amazon Web Services resources in advance:\\n\\n- [Amazon Web Services Identity and Access Management (IAM)](https://aws.amazon.com/iam/) role for your ETL job or notebook as instructed in [Set up IAM permissions for Amazon Web Services Glue Studio](https://docs.aws.amazon.com/glue/latest/ug/setting-up.html#getting-started-iam-permissions). Note that ```AmazonEC2ContainerRegistryReadOnly``` or equivalent permissions are needed when you use the marketplace connectors.\\n- Amazon S3 bucket for storing data.\\n- Glue connection (one of the marketplace connector or the custom connector corresponding to the data lake format).\\n\\n\\n#### **Reads/writes using the connector on Amazon Web Services Glue Studio Notebook**\\n\\n\\nThe following are the instructions to read/write tables using each data lake format on Amazon Web Services Glue Studio Notebook. As a prerequisite, make sure that you have created a connector and a connection for the connector using the information above.\\nThe example notebooks are hosted on [Amazon Web Services Glue Samples GitHub repository](https://github.com/aws-samples/aws-glue-samples/tree/master/examples/notebooks). You can find 7 notebooks available. In the following instructions, we will use one notebook per data lake format.\\n\\n\\n##### **Apache Hudi**\\n\\n1. Download [hudi_dataframe.ipynb](https://raw.githubusercontent.com/aws-samples/aws-glue-samples/master/examples/notebooks/hudi_dataframe.ipynb).\\n2. Open Amazon Web Services Glue Studio.\\n3. Choose **Jobs**.\\n4. Choose **Jupyter notebook** and then choose **Upload and edit an existing notebook**. From **Choose file**, select your ipynb file and choose **Open**, then choose **Create**.\\n5. On the **Notebook setup** page, for **Job name**, enter your job name.\\n6. For **IAM role**, select your IAM role. Choose **Create job**. After a short time period, the Jupyter notebook editor appears.\\n7. In the first cell, replace the placeholder with your Hudi connection name, and run the cell:\\n```%connections hudi-0101-byoc-connection``` (Alternatively you can use your connection name created from the marketplace connector).\\n8. In the second cell, replace the S3 bucket name placeholder with your S3 bucket name, and run the cell.\\n9. Run the cells in the section **Initialize SparkSession**.\\n10. Run the cells in the section **Clean up existing resources**.\\n11. Run the cells in the section **Create Hudi table with sample data using catalog sync** to create a new Hudi table with sample data.\\n12. Run the cells in the section **Read from Hudi table** to verify the new Hudi table. There are five records in this table.\\n\\n\\n\\n13. Run the cells in the section **Upsert records into Hudi table** to see how upsert works on Hudi. This code inserts one new record, and updates the one existing record. You can verify that there is a new record ```product_id=00006```, and the existing record ```product_id=00001```’s price has been updated from ```250``` to ```400```.\\n\\n\\n\\n14. Run the cells in the section **Delete a Record**. You can verify that the existing record ```product_id=00001``` has been deleted.\\n\\n\\n\\n15. Run the cells in the section **Point in time query**. You can verify that you’re seeing the previous version of the table where the upsert and delete operations haven’t been applied yet.\\n\\n\\n\\n16. Run the cells in the section **Incremental Query**. You can verify that you’re seeing only the recent commit about ```product_id=00006```.\\n\\n\\n\\nOn this notebook, you could complete the basic Spark DataFrame operations on Hudi tables.\\n\\n\\n##### **Delta Lake**\\n\\nTo read/write Delta Lake tables in the Amazon Web Services Glue Studio notebook, complete following:\\n\\n1. Download [delta_sql.ipynb](https://raw.githubusercontent.com/aws-samples/aws-glue-samples/master/examples/notebooks/delta_sql.ipynb).\\n2. Open Amazon Web Services Glue Studio.\\n3. Choose **Jobs**.\\n4. Choose **Jupyter notebook**, and then choose **Upload and edit an existing notebook**. From **Choose file**, select your ipynb file and choose **Open**, then choose **Create**.\\n5. On the **Notebook setup** page, for **Job name**, enter your job name.\\n6. For **IAM** role, select your IAM role. Choose **Create job**. After a short time period, the Jupyter notebook editor appears.\\n7. In the first cell, replace the placeholder with your Delta connection name, and run the cell:\\n```%connections delta-100-byoc-connection```\\n8. In the second cell, replace the S3 bucket name placeholder with your S3 bucket name, and run the cell.\\n9. Run the cells in the section **Initialize SparkSession**.\\n10. Run the cells in the section **Clean up existing resources**.\\n11. Run the cells in the section **Create Delta table with sample data** to create a new Delta table with sample data.\\n12. Run the cells in the section **Create a Delta Lake table**.\\n\\n\\n\\n13. Run the cells in the section **Read from Delta Lake table** to verify the new Delta table. There are five records in this table.\\n\\n\\n\\n14. Run the cells in the section Insert records. The query inserts two new records: ```record_id=00006```, and ```record_id=00007```.\\n\\n\\n\\n15. Run the cells in the section Update records. The query updates the price of the existing records ```record_id=00007```, and ```record_id=00007``` from ```500``` to ```300```.\\n\\n\\n\\n16. Run the cells in the section Upsert records. to see how upsert works on Delta. This code inserts one new record, and updates the one existing record. You can verify that there is a new record ```product_id=00008```, and the existing record ```product_id=00001```’s price has been updated from ```250``` to ```400```.\\n\\n\\n\\n17. Run the cells in the section **Alter DeltaLake table**. The queries add one new column, and update the values in the column.\\n\\n\\n\\n18. Run the cells in the section Delete records. You can verify that the record ```product_id=00006``` because it’s ```product_name``` is ```Pen```.\\n\\n\\n\\n19. Run the cells in the section **View History** to describe the history of operations that was triggered against the target Delta table.\\n\\n\\n\\nOn this notebook, you could complete the basic Spark SQL operations on Delta tables.\\n\\n\\n##### **Apache Iceberg**\\n\\nTo read/write Apache Iceberg tables in the Amazon Web Services Glue Studio notebook, complete the following:\\n\\n1. Download [iceberg_sql.ipynb](https://raw.githubusercontent.com/aws-samples/aws-glue-samples/master/examples/notebooks/iceberg_sql.ipynb).\\n2. Open Amazon Web Services Glue Studio.\\n3. Choose **Jobs**.\\n4. Choose **Jupyter notebook** and then choose **Upload and edit an existing notebook**. From **Choose file**, select your ipynb file and choose **Open**, then choose **Create**.\\n5. On the **Notebook setup** page, for **Job name**, enter your job name.\\n6. For **IAM** role, select your IAM role. Choose **Create job**. After a short time period, the Jupyter notebook editor appears.\\n7. In the first cell, replace the placeholder with your Delta connection name, and run the cell:\\n```%connections iceberg-0131-byoc-connection``` (Alternatively you can use your connection name created from the marketplace connector).\n8. In the second cell, replace the S3 bucket name placeholder with your S3 bucket name, and run the cell.\n9. Run the cells in the section **Initialize SparkSession**.\n10. Run the cells in the section **Clean up existing resources**.\n11. Run the cells in the section **Create Iceberg table with sample data** to create a new Iceberg table with sample data.\n12. Run the cells in the section **Read from Iceberg table**.\n\n\n\n13. Run the cells in the section **Upsert records into Iceberg table**.\n\n\n\n14. Run the cells in the section **Delete records**.\n\n\n\n15. Run the cells in the section **View History and Snapshots**.\n\n\n\nOn this notebook, you could complete the basic Spark SQL operations on Iceberg tables.\n\n\n#### **Conclusion**\n\n\nThis post summarized how to utilize Apache Hudi, Delta Lake, and Apache Iceberg on Amazon Web Services Glue platform, as well as demonstrate how each format works with a Glue Studio notebook. You can start using those data lake formats easily in Spark DataFrames and Spark SQL on the Glue jobs or the Glue Studio notebooks.\n\nThis post focused on interactive coding and querying on notebooks. The upcoming part 2 will focus on the experience using Amazon Web Services Glue Studio Visual Editor and Glue DynamicFrames for customers who prefer visual authoring without the need to write code.\n\n\n##### **About the Authors**\n\n\n\n**Noritaka Sekiyama** is a Principal Big Data Architect on the Amazon Web Services Glue team. He enjoys learning different use cases from customers and sharing knowledge about big data technologies with the wider community.\n\n\n\n**Dylan Qu** is a Specialist Solutions Architect focused on Big Data & Analytics with Amazon Web Services. He helps customers architect and build highly scalable, performant, and secure cloud-based solutions on Amazon Web Services.\n\n\n\n**Monjumi Sarma** is a Data Lab Solutions Architect at Amazon Web Services. She helps customers architect data analytics solutions, which gives them an accelerated path towards modernization initiatives.\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n","render":"<p>Cloud data lakes provides a scalable and low-cost data repository that enables customers to easily store data from a variety of data sources. Data scientists, business analysts, and line of business users leverage data lake to explore, refine, and analyze petabytes of data. <a href=\\"https://aws.amazon.com/glue/\\" target=\\"_blank\\">Amazon Web Services Glue</a> is a serverless data integration service that makes it easy to discover, prepare, and combine data for analytics, machine learning, and application development. Customers use Amazon Web Services Glue to discover and extract data from a variety of data sources, enrich and cleanse the data before storing it in data lakes and data warehouses.</p>\\n<p>Over years, many table formats have emerged to support ACID transaction, governance, and catalog usecases. For example, formats such as <a href=\\"https://hudi.apache.org/\\" target=\\"_blank\\">Apache Hudi</a>, <a href=\\"https://delta.io/\\" target=\\"_blank\\">Delta Lake</a>, <a href=\\"https://iceberg.apache.org/\\" target=\\"_blank\\">Apache Iceberg</a>, and <a href=\\"https://docs.aws.amazon.com/lake-formation/latest/dg/governed-tables-main.html\\" target=\\"_blank\\">Amazon Web Services Lake Formation governed tables</a>, enabled customers to run ACID transactions on <a href=\\"https://docs.aws.amazon.com/lake-formation/latest/dg/governed-tables-main.html\\" target=\\"_blank\\">Amazon Simple Storage Service</a> ([Amazon S3](https://aws.amazon.com/cn/s3/?trk=cndc-detail)). Amazon Web Services Glue supports these table formats for batch and streaming workloads. This post focuses on Apache Hudi, Delta Lake, and Apache Iceberg, and summarizes how to use them in Amazon Web Services Glue 3.0 jobs. If you’re interested in <a href=\\"https://aws.amazon.com/lake-formation/\\" target=\\"_blank\\">Amazon Web Services Lake Formation</a> governed tables, then visit <a href=\\"https://aws.amazon.com/blogs/big-data/part-1-effective-data-lakes-using-aws-lake-formation-part-1-getting-started-with-governed-tables/\\" target=\\"_blank\\">Effective data lakes using Amazon Web Services Lake Formation series</a>.</p>\\n<p><strong>Process Apache Hudi, Delta Lake, Apache Iceberg dataset at scale</strong></p>\\n<ul>\\n<li><strong>Part 1: Amazon Web Services Glue Studio Notebook</strong></li>\\n<li>Part 2: <a href=\\"https://aws.amazon.com/blogs/big-data/part-2-integrate-apache-hudi-delta-lake-apache-iceberg-dataset-at-scale-using-aws-glue-studio-visual-editor/\\" target=\\"_blank\\">Using Amazon Web Services Glue Studio Visual Editor</a></li>\\n</ul>\n<h4><a id=\\"Bring_libraries_for_the_data_lake_formats_10\\"></a><strong>Bring libraries for the data lake formats</strong></h4>\\n<p>Today, there are three available options for bringing libraries for the data lake formats on the Amazon Web Services Glue job platform: Marketplace connectors, custom connectors (BYOL), and extra library dependencies.</p>\n<h5><a id=\\"Marketplace_connectors_15\\"></a><strong>Marketplace connectors</strong></h5>\\n<p><a href=\\"https://us-east-1.console.aws.amazon.com/gluestudio/home?region=us-east-1#/marketplace\\" target=\\"_blank\\">Amazon Web Services Glue Connector Marketplace</a> is the centralized repository for cataloging the available Glue connectors provided by multiple vendors. You can subscribe to more than 60 connectors offered in Amazon Web Services Glue Connector Marketplace as of today. There are marketplace connectors available for <a href=\\"https://aws.amazon.com/marketplace/pp/prodview-6rofemcq6erku\\" target=\\"_blank\\">Apache Hudi</a>, <a href=\\"https://aws.amazon.com/marketplace/pp/prodview-seypofzqhdueq\\" target=\\"_blank\\">Delta Lake</a>, and <a href=\\"https://aws.amazon.com/marketplace/pp/prodview-iicxofvpqvsio\\" target=\\"_blank\\">Apache Iceberg</a>. Furthermore, the marketplace connectors are hosted on <a href=\\"https://aws.amazon.com/ecr/\\" target=\\"_blank\\">Amazon Elastic Container Registry (Amazon ECR)</a> repository, and downloaded to the Glue job system in runtime. When you prefer simple user experience by subscribing to the connectors and using them on your Glue ETL jobs, the marketplace connector is a good option.</p>\\n<h5><a id=\\"Custom_connectors_as_bringyourownconnector_BYOC_20\\"></a><strong>Custom connectors as bring-your-own-connector (BYOC)</strong></h5>\\n<p>Amazon Web Services Glue custom connector enables you to upload and register your own libraries located in Amazon S3 as Glue connectors. You have more control over the library versions, patches, and dependencies. Since it uses your S3 bucket, you can configure the S3 bucket policy to share the libraries only with specific users, you can configure private network access to download the libraries using VPC Endpoints, etc. When you prefer having more control over those configurations, the custom connector as BYOC is a good option.</p>\n<h5><a id=\\"Extra_library_dependencies_26\\"></a><strong>Extra library dependencies</strong></h5>\\n<p>There is another option – to download the data lake format libraries, upload them to your S3 bucket, and add extra library dependencies to them. With this option, you can add libraries directly to the job without a connector and use them. In Glue job, you can configure in Dependent JARs path. In API, it’s the <code>--extra-jars</code> parameter. In Glue Studio notebook, you can configure in the <code>%extra_jars</code> magic. To download the relevant JAR files, see the library locations in the section <strong>Create a Custom connection (BYOC)</strong>.</p>\\n<h4><a id=\\"Create_a_Marketplace_connection_32\\"></a><strong>Create a Marketplace connection</strong></h4>\\n<p>To create a new marketplace connection for Apache Hudi, Delta Lake, or Apache Iceberg, complete the following steps.</p>\n<h5><a id=\\"Apache_Hudi_0101_36\\"></a><strong>Apache Hudi 0.10.1</strong></h5>\\n<p>Complete the following steps to create a marketplace connection for Apache Hudi 0.10.1:</p>\n<ol>\\n<li>Open Amazon Web Services Glue Studio.</li>\n<li>Choose <strong>Connectors</strong>.</li>\\n<li>Choose <strong>Go to Amazon Web Services Marketplace</strong>.</li>\\n<li>Search for <strong>Apache Hudi Connector for Amazon Web Services Glue</strong>, and choose <strong>Apache Hudi Connector for Amazon Web Services Glue</strong>.</li>\\n<li>Choose <strong>Continue to Subscribe</strong>.</li>\\n<li>Review the <strong>Terms and conditions</strong>, pricing, and other details, and choose the <strong>Accept Terms</strong> button to continue.</li>\\n<li>Make sure that the subscription is complete and you see the <strong>Effective date</strong> populated next to the product, and then choose <strong>Continue to Configuration</strong>.</li>\\n<li>For <strong>Delivery Method</strong>, choose <code>Glue 3.0</code>.</li>\\n<li>For <strong>Software version</strong>, choose <code>0.10.1</code>.</li>\\n<li>Choose <strong>Continue to Launch</strong>.</li>\\n<li>Under <strong>Usage instructions</strong>, choose <strong>Activate the Glue connector in Amazon Web Services Glue Studio</strong>. You’re redirected to Amazon Web Services Glue Studio.</li>\\n<li>For <strong>Name</strong>, enter a name for your connection.</li>\\n<li>Optionally, choose a VPC, subnet, and security group.</li>\n<li>Choose <strong>Create connection</strong>.</li>\\n</ol>\n<h5><a id=\\"Delta_Lake_100_55\\"></a><strong>Delta Lake 1.0.0</strong></h5>\\n<p>Complete the following steps to create a marketplace connection for Delta Lake 1.0.0:</p>\n<ol>\\n<li>Open Amazon Web Services Glue Studio.</li>\n<li>Choose <strong>Connectors</strong>.</li>\\n<li>Choose <strong>Go to Amazon Web Services Marketplace</strong>.</li>\\n<li>Search for <strong>Delta Lake Connector for Amazon Web Services Glue</strong>, and choose <strong>Delta Lake Connector for Amazon Web Services Glue</strong>.</li>\\n<li>Choose <strong>Continue to Subscribe</strong>.</li>\\n<li>Review the <strong>Terms and conditions</strong>, pricing, and other details, and choose the <strong>Accept Terms</strong> button to continue.</li>\\n<li>Make sure that the subscription is complete and you see the <strong>Effective date</strong> populated next to the product, and then choose <strong>Continue to Configuration</strong>.</li>\\n<li>For <strong>Delivery Method</strong>, choose <code>Glue 3.0</code>.</li>\\n<li>For <strong>Software version</strong>, choose <code>1.0.0-2</code>.</li>\\n<li>Choose <strong>Continue to Launch</strong>.</li>\\n<li>Under <strong>Usage instructions</strong>, choose <strong>Activate the Glue connector in Amazon Web Services Glue Studio</strong>. You’re redirected to Amazon Web Services Glue Studio.</li>\\n<li>For <strong>Name</strong>, enter a name for your connection.</li>\\n<li>Optionally, choose a VPC, subnet, and security group.</li>\n<li>Choose <strong>Create connection</strong>.</li>\\n</ol>\n<h5><a id=\\"Apache_Iceberg_0120_75\\"></a><strong>Apache Iceberg 0.12.0</strong></h5>\\n<p>Complete the following steps to create a marketplace connection for Apache Iceberg 0.12.0:</p>\n<ol>\\n<li>Open Amazon Web Services Glue Studio.</li>\n<li>Choose <strong>Connectors</strong>.</li>\\n<li>Choose <strong>Go to Amazon Web Services Marketplace</strong>.</li>\\n<li>Search for <strong>Apache Iceberg Connector for Amazon Web Services Glue</strong>, and choose <strong>Apache Iceberg Connector for Amazon Web Services Glue</strong>.</li>\\n<li>Choose <strong>Continue to Subscribe</strong>.</li>\\n<li>Review the <strong>Terms and conditions</strong>, pricing, and other details, and choose the <strong>Accept Terms</strong> button to continue.</li>\\n<li>Make sure that the subscription is complete and you see the <strong>Effective date</strong> populated next to the product, and then choose <strong>Continue to Configuration</strong>.</li>\\n<li>For <strong>Delivery Method</strong>, choose <code>Glue 3.0</code>.</li>\\n<li>For <strong>Software version</strong>, choose <code>0.12.0-2</code>.</li>\\n<li>Choose <strong>Continue to Launch</strong>.</li>\\n<li>Under <strong>Usage instructions</strong>, choose <strong>Activate the Glue connector in Amazon Web Services Glue Studio</strong>. You’re redirected to Amazon Web Services Glue Studio.</li>\\n<li>For <strong>Name</strong>, enter <code>iceberg-0120-mp-connection</code>.</li>\\n<li>Optionally, choose a VPC, subnet, and security group.</li>\n<li>Choose <strong>Create connection</strong>.</li>\\n</ol>\n<h4><a id=\\"Create_a_Custom_connection_BYOC_96\\"></a><strong>Create a Custom connection (BYOC)</strong></h4>\\n<p>You can create your own custom connectors from JAR files. In this section, you can see the exact JAR files that are used in the marketplace connectors. You can just use the files for your custom connectors for Apache Hudi, Delta Lake, and Apache Iceberg.</p>\n<p>To create a new custom connection for Apache Hudi, Delta Lake, or Apache Iceberg, complete the following steps.</p>\n<h5><a id=\\"Apache_Hudi_090_102\\"></a><strong>Apache Hudi 0.9.0</strong></h5>\\n<p>Complete following steps to create a custom connection for Apache Hudi 0.9.0:</p>\n<ol>\\n<li>Download the following JAR files, and upload them to your S3 bucket.<br />\\na.<a href=\\"https://repo1.maven.org/maven2/org/apache/hudi/hudi-spark3-bundle_2.12/0.9.0/hudi-spark3-bundle_2.12-0.9.0.jar\\" target=\\"_blank\\">https://repo1.maven.org/maven2/org/apache/hudi/hudi-spark3-bundle_2.12/0.9.0/hudi-spark3-bundle_2.12-0.9.0.jar</a><br />\\nb.<a href=\\"https://repo1.maven.org/maven2/org/apache/hudi/hudi-utilities-bundle_2.12/0.9.0/hudi-utilities-bundle_2.12-0.9.0.jar\\" target=\\"_blank\\">https://repo1.maven.org/maven2/org/apache/hudi/hudi-utilities-bundle_2.12/0.9.0/hudi-utilities-bundle_2.12-0.9.0.jar</a><br />\\nc.<a href=\\"https://repo1.maven.org/maven2/org/apache/parquet/parquet-avro/1.10.1/parquet-avro-1.10.1.jar\\" target=\\"_blank\\">https://repo1.maven.org/maven2/org/apache/parquet/parquet-avro/1.10.1/parquet-avro-1.10.1.jar</a><br />\\nd.<a href=\\"https://repo1.maven.org/maven2/org/apache/spark/spark-avro_2.12/3.1.1/spark-avro_2.12-3.1.1.jar\\" target=\\"_blank\\">https://repo1.maven.org/maven2/org/apache/spark/spark-avro_2.12/3.1.1/spark-avro_2.12-3.1.1.jar</a><br />\\ne.<a href=\\"https://repo1.maven.org/maven2/org/apache/calcite/calcite-core/1.10.0/calcite-core-1.10.0.jar\\" target=\\"_blank\\">https://repo1.maven.org/maven2/org/apache/calcite/calcite-core/1.10.0/calcite-core-1.10.0.jar</a><br />\\nf.<a href=\\"https://repo1.maven.org/maven2/org/datanucleus/datanucleus-core/4.1.17/datanucleus-core-4.1.17.jar\\" target=\\"_blank\\">https://repo1.maven.org/maven2/org/datanucleus/datanucleus-core/4.1.17/datanucleus-core-4.1.17.jar</a><br />\\ng.<a href=\\"https://repo1.maven.org/maven2/org/apache/thrift/libfb303/0.9.3/libfb303-0.9.3.jar\\" target=\\"_blank\\">https://repo1.maven.org/maven2/org/apache/thrift/libfb303/0.9.3/libfb303-0.9.3.jar</a></li>\\n<li>Open Amazon Web Services Glue Studio.</li>\n<li>Choose <strong>Connectors</strong>.</li>\\n<li>Choose <strong>Create custom connector</strong>.</li>\\n<li>For <strong>Connector S3 URL</strong>, enter comma separated [Amazon S3](https://aws.amazon.com/cn/s3/?trk=cndc-detail) paths for the above JAR files.</li>\\n<li>For <strong>Name</strong>, enter <code>hudi-090-byoc-connector</code>.</li>\\n<li>For <strong>Connector Type</strong>, choose <code>Spark</code>.</li>\\n<li>For <strong>Class name</strong>, enter <code>org.apache.hudi</code>.</li>\\n<li>Choose <strong>Create connector</strong>.</li>\\n<li>Choose <code>hudi-090-byoc-connector</code>.</li>\\n<li>Choose <strong>Create connection</strong>.</li>\\n<li>For <strong>Name</strong>, enter <code>hudi-090-byoc-connection</code>.</li>\\n<li>Optionally, choose a VPC, subnet, and security group.</li>\n<li>Choose <strong>Create connection</strong>.</li>\\n</ol>\n<h5><a id=\\"Apache_Hudi_0101_128\\"></a><strong>Apache Hudi 0.10.1</strong></h5>\\n<p>Complete the following steps to create a custom connection for Apache Hudi 0.10.1:</p>\n<ol>\\n<li>Download following JAR files, and upload them to your S3 bucket.<br />\\na. <a href=\\"https://aws-bigdata-blog.s3.amazonaws.com/artifacts/hudi-delta-iceberg/hudi0.10.1/hudi-utilities-bundle_2.12-0.10.1.jar\\" target=\\"_blank\\">hudi-utilities-bundle_2.12-0.10.1.jar</a><br />\\nb. <a href=\\"https://aws-bigdata-blog.s3.amazonaws.com/artifacts/hudi-delta-iceberg/hudi0.10.1/hudi-spark3.1.1-bundle_2.12-0.10.1.jar\\" target=\\"_blank\\">hudi-spark3.1.1-bundle_2.12-0.10.1.jar</a><br />\\nc. <a href=\\"https://repo1.maven.org/maven2/org/apache/spark/spark-avro_2.12/3.1.1/spark-avro_2.12-3.1.1.jar\\" target=\\"_blank\\">spark-avro_2.12-3.1.1.jar</a></li>\\n<li>Open Amazon Web Services Glue Studio.</li>\n<li>Choose <strong>Connectors</strong>.</li>\\n<li>Choose <strong>Create custom connector</strong>.</li>\\n<li>For <strong>Connector S3 URL</strong>, enter comma separated [Amazon S3](https://aws.amazon.com/cn/s3/?trk=cndc-detail) paths for the above JAR files.</li>\\n<li>For <strong>Name</strong>, enter <code>hudi-0101-byoc-connector</code>.</li>\\n<li>For <strong>Connector Type</strong>, choose Spark.</li>\\n<li>For <strong>Class name</strong>, enter <code>org.apache.hudi</code>.</li>\\n<li>Choose <strong>Create connector</strong>.</li>\\n<li>Choose <code>hudi-0101-byoc-connector</code>.</li>\\n<li>Choose <strong>Create connection</strong>.</li>\\n<li>For <strong>Name</strong>, enter <code>hudi-0101-byoc-connection</code>.</li>\\n<li>Optionally, choose a VPC, subnet, and security group.</li>\n<li>Choose <strong>Create connection</strong>.</li>\\n</ol>\n<p>Note that the above Hudi 0.10.1 installation on Glue 3.0 does not fully support <a href=\\"https://hudi.apache.org/docs/concepts/#merge-on-read-table\\" target=\\"_blank\\">Merge On Read (MoR) tables</a>.</p>\\n<h5><a id=\\"Delta_Lake_100_153\\"></a><strong>Delta Lake 1.0.0</strong></h5>\\n<p>Complete the following steps to create a custom connector for Delta Lake 1.0.0:</p>\n<ol>\\n<li>Download the following JAR file, and upload it to your S3 bucket.<br />\\na. <a href=\\"https://repo1.maven.org/maven2/io/delta/delta-core_2.12/1.0.0/delta-core_2.12-1.0.0.jar\\" target=\\"_blank\\">https://repo1.maven.org/maven2/io/delta/delta-core_2.12/1.0.0/delta-core_2.12-1.0.0.jar</a></li>\\n<li>Open Amazon Web Services Glue Studio.</li>\n<li>Choose <strong>Connectors</strong>.</li>\\n<li>Choose <strong>Create custom connector</strong>.</li>\\n<li>For <strong>Connector S3 URL</strong>, enter a comma separated [Amazon S3](https://aws.amazon.com/cn/s3/?trk=cndc-detail) path for the above JAR file.</li>\\n<li>For <strong>Name</strong>, enter <code>delta-100-byoc-connector</code>.</li>\\n<li>For <strong>Connector Type</strong>, choose <code>Spark</code>.</li>\\n<li>For <strong>Class name</strong>, enter <code>org.apache.spark.sql.delta.sources.DeltaDataSource</code>.</li>\\n<li>Choose <strong>Create connector</strong>.</li>\\n<li>Choose <code>delta-100-byoc-connector</code>.</li>\\n<li>Choose <strong>Create connection</strong>.</li>\\n<li>For <strong>Name</strong>, enter <code>delta-100-byoc-connection</code>.</li>\\n<li>Optionally, choose a VPC, subnet, and security group.</li>\n<li>Choose <strong>Create connection</strong>.</li>\\n</ol>\n<h5><a id=\\"Apache_Iceberg_0120_175\\"></a><strong>Apache Iceberg 0.12.0</strong></h5>\\n<p>Complete the following steps to create a custom connection for Apache Iceberg 0.12.0:</p>\n<ol>\\n<li>Download the following JAR files, and upload them to your S3 bucket.<br />\\na. <a href=\\"https://search.maven.org/remotecontent?filepath=org/apache/iceberg/iceberg-spark3-runtime/0.12.0/iceberg-spark3-runtime-0.12.0.jar\\" target=\\"_blank\\">https://search.maven.org/remotecontent?filepath=org/apache/iceberg/iceberg-spark3-runtime/0.12.0/iceberg-spark3-runtime-0.12.0.jar</a><br />\\nb. <a href=\\"https://repo1.maven.org/maven2/software/amazon/awssdk/bundle/2.15.40/bundle-2.15.40.jar\\" target=\\"_blank\\">https://repo1.maven.org/maven2/software/amazon/awssdk/bundle/2.15.40/bundle-2.15.40.jar</a><br />\\nc. <a href=\\"https://repo1.maven.org/maven2/software/amazon/awssdk/url-connection-client/2.15.40/url-connection-client-2.15.40.jar\\" target=\\"_blank\\">https://repo1.maven.org/maven2/software/amazon/awssdk/url-connection-client/2.15.40/url-connection-client-2.15.40.jar</a></li>\\n<li>Open Amazon Web Services Glue Studio.</li>\n<li>Choose <strong>Connectors</strong>.</li>\\n<li>Choose <strong>Create custom connector</strong>.</li>\\n<li>For <strong>Connector S3 URL</strong>, enter comma separated [Amazon S3](https://aws.amazon.com/cn/s3/?trk=cndc-detail) paths for the above JAR files.</li>\\n<li>For <strong>Name</strong>, enter <code>iceberg-0120-byoc-connector</code>.</li>\\n<li>For <strong>Connector Type</strong>, choose <code>Spark</code>.</li>\\n<li>For <strong>Class name</strong>, enter <code>iceberg</code>.</li>\\n<li>Choose <strong>Create connector</strong>.</li>\\n<li>Choose <code>iceberg-0120-byoc-connector</code>.</li>\\n<li>Choose <strong>Create connection</strong>.</li>\\n<li>For <strong>Name</strong>, enter <code>iceberg-0120-byoc-connection</code>.</li>\\n<li>Optionally, choose a VPC, subnet, and security group.</li>\n<li>Choose <strong>Create connection</strong>.</li>\\n</ol>\n<h5><a id=\\"Apache_Iceberg_0131_198\\"></a><strong>Apache Iceberg 0.13.1</strong></h5>\\n<ol>\\n<li>Download the following JAR files, and upload them to your S3 bucket.<br />\\na. <a href=\\"https://aws-bigdata-blog.s3.amazonaws.com/artifacts/hudi-delta-iceberg/iceberg0.13.1/iceberg-spark-runtime-3.1_2.12-0.13.1.jar\\" target=\\"_blank\\">iceberg-spark-runtime-3.1_2.12-0.13.1.jar</a><br />\\nb. <a href=\\"https://repo1.maven.org/maven2/software/amazon/awssdk/bundle/2.17.161/bundle-2.17.161.jar\\" target=\\"_blank\\">https://repo1.maven.org/maven2/software/amazon/awssdk/bundle/2.17.161/bundle-2.17.161.jar</a><br />\\nc. <a href=\\"https://repo1.maven.org/maven2/software/amazon/awssdk/url-connection-client/2.17.161/url-connection-client-2.17.161.jar\\" target=\\"_blank\\">https://repo1.maven.org/maven2/software/amazon/awssdk/url-connection-client/2.17.161/url-connection-client-2.17.161.jar</a></li>\\n<li>Open Amazon Web Services Glue Studio.</li>\n<li>Choose <strong>Connectors</strong>.</li>\\n<li>Choose <strong>Create custom connector</strong>.</li>\\n<li>For <strong>Connector S3 URL</strong>, enter comma separated [Amazon S3](https://aws.amazon.com/cn/s3/?trk=cndc-detail) paths for the above JAR files.</li>\\n<li>For <strong>Name</strong>, enter <code>iceberg-0131-byoc-connector</code>.</li>\\n<li>For <strong>Connector Type</strong>, choose <code>Spark</code>.</li>\\n<li>For <strong>Class name</strong>, enter <code>iceberg</code>.</li>\\n<li>Choose <strong>Create connector</strong>.</li>\\n<li>Choose <code>iceberg-0131-byoc-connector</code>.</li>\\n<li>Choose Create connection.</li>\n<li>For Name, enter <code>iceberg-0131-byoc-connection</code>.</li>\\n<li>Optionally, choose a VPC, subnet, and security group.</li>\n<li>Choose Create connection.</li>\n</ol>\\n<h4><a id=\\"Prerequisites_219\\"></a><strong>Prerequisites</strong></h4>\\n<p>To continue this tutorial, you must create the following Amazon Web Services resources in advance:</p>\n<ul>\\n<li><a href=\\"https://aws.amazon.com/iam/\\" target=\\"_blank\\">Amazon Web Services Identity and Access Management (IAM)</a> role for your ETL job or notebook as instructed in <a href=\\"https://docs.aws.amazon.com/glue/latest/ug/setting-up.html#getting-started-iam-permissions\\" target=\\"_blank\\">Set up IAM permissions for Amazon Web Services Glue Studio</a>. Note that <code>AmazonEC2ContainerRegistryReadOnly</code> or equivalent permissions are needed when you use the marketplace connectors.</li>\\n<li>Amazon S3 bucket for storing data.</li>\n<li>Glue connection (one of the marketplace connector or the custom connector corresponding to the data lake format).</li>\n</ul>\\n<h4><a id=\\"Readswrites_using_the_connector_on_Amazon_Web_Services_Glue_Studio_Notebook_229\\"></a><strong>Reads/writes using the connector on Amazon Web Services Glue Studio Notebook</strong></h4>\\n<p>The following are the instructions to read/write tables using each data lake format on Amazon Web Services Glue Studio Notebook. As a prerequisite, make sure that you have created a connector and a connection for the connector using the information above.<br />\\nThe example notebooks are hosted on <a href=\\"https://github.com/aws-samples/aws-glue-samples/tree/master/examples/notebooks\\" target=\\"_blank\\">Amazon Web Services Glue Samples GitHub repository</a>. You can find 7 notebooks available. In the following instructions, we will use one notebook per data lake format.</p>\\n<h5><a id=\\"Apache_Hudi_236\\"></a><strong>Apache Hudi</strong></h5>\\n<ol>\\n<li>Download <a href=\\"https://raw.githubusercontent.com/aws-samples/aws-glue-samples/master/examples/notebooks/hudi_dataframe.ipynb\\" target=\\"_blank\\">hudi_dataframe.ipynb</a>.</li>\\n<li>Open Amazon Web Services Glue Studio.</li>\n<li>Choose <strong>Jobs</strong>.</li>\\n<li>Choose <strong>Jupyter notebook</strong> and then choose <strong>Upload and edit an existing notebook</strong>. From <strong>Choose file</strong>, select your ipynb file and choose <strong>Open</strong>, then choose <strong>Create</strong>.</li>\\n<li>On the <strong>Notebook setup</strong> page, for <strong>Job name</strong>, enter your job name.</li>\\n<li>For <strong>IAM role</strong>, select your IAM role. Choose <strong>Create job</strong>. After a short time period, the Jupyter notebook editor appears.</li>\\n<li>In the first cell, replace the placeholder with your Hudi connection name, and run the cell:<br />\\n<code>%connections hudi-0101-byoc-connection</code> (Alternatively you can use your connection name created from the marketplace connector).</li>\\n<li>In the second cell, replace the S3 bucket name placeholder with your S3 bucket name, and run the cell.</li>\n<li>Run the cells in the section <strong>Initialize SparkSession</strong>.</li>\\n<li>Run the cells in the section <strong>Clean up existing resources</strong>.</li>\\n<li>Run the cells in the section <strong>Create Hudi table with sample data using catalog sync</strong> to create a new Hudi table with sample data.</li>\\n<li>Run the cells in the section <strong>Read from Hudi table</strong> to verify the new Hudi table. There are five records in this table.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/47d1f7ca17df48a5879e588dff483be5_image.png\\" alt=\\"image.png\\" /></p>\n<ol start=\\"13\\">\\n<li>Run the cells in the section <strong>Upsert records into Hudi table</strong> to see how upsert works on Hudi. This code inserts one new record, and updates the one existing record. You can verify that there is a new record <code>product_id=00006</code>, and the existing record <code>product_id=00001</code>’s price has been updated from <code>250</code> to <code>400</code>.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/96d76aead2554fd0b62c9d010eefcc87_image.png\\" alt=\\"image.png\\" /></p>\n<ol start=\\"14\\">\\n<li>Run the cells in the section <strong>Delete a Record</strong>. You can verify that the existing record <code>product_id=00001</code> has been deleted.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/79cc71177d594aeeb8a421b8da1c61b4_image.png\\" alt=\\"image.png\\" /></p>\n<ol start=\\"15\\">\\n<li>Run the cells in the section <strong>Point in time query</strong>. You can verify that you’re seeing the previous version of the table where the upsert and delete operations haven’t been applied yet.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/281d9c35dde240fdaa146288149685a5_image.png\\" alt=\\"image.png\\" /></p>\n<ol start=\\"16\\">\\n<li>Run the cells in the section <strong>Incremental Query</strong>. You can verify that you’re seeing only the recent commit about <code>product_id=00006</code>.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/6a07855c3ef04ad1a71d2f7017d0db82_image.png\\" alt=\\"image.png\\" /></p>\n<p>On this notebook, you could complete the basic Spark DataFrame operations on Hudi tables.</p>\n<h5><a id=\\"Delta_Lake_273\\"></a><strong>Delta Lake</strong></h5>\\n<p>To read/write Delta Lake tables in the Amazon Web Services Glue Studio notebook, complete following:</p>\n<ol>\\n<li>Download <a href=\\"https://raw.githubusercontent.com/aws-samples/aws-glue-samples/master/examples/notebooks/delta_sql.ipynb\\" target=\\"_blank\\">delta_sql.ipynb</a>.</li>\\n<li>Open Amazon Web Services Glue Studio.</li>\n<li>Choose <strong>Jobs</strong>.</li>\\n<li>Choose <strong>Jupyter notebook</strong>, and then choose <strong>Upload and edit an existing notebook</strong>. From <strong>Choose file</strong>, select your ipynb file and choose <strong>Open</strong>, then choose <strong>Create</strong>.</li>\\n<li>On the <strong>Notebook setup</strong> page, for <strong>Job name</strong>, enter your job name.</li>\\n<li>For <strong>IAM</strong> role, select your IAM role. Choose <strong>Create job</strong>. After a short time period, the Jupyter notebook editor appears.</li>\\n<li>In the first cell, replace the placeholder with your Delta connection name, and run the cell:<br />\\n<code>%connections delta-100-byoc-connection</code></li>\\n<li>In the second cell, replace the S3 bucket name placeholder with your S3 bucket name, and run the cell.</li>\n<li>Run the cells in the section <strong>Initialize SparkSession</strong>.</li>\\n<li>Run the cells in the section <strong>Clean up existing resources</strong>.</li>\\n<li>Run the cells in the section <strong>Create Delta table with sample data</strong> to create a new Delta table with sample data.</li>\\n<li>Run the cells in the section <strong>Create a Delta Lake table</strong>.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/8a299823e065460ba7b4fc1321406f37_image.png\\" alt=\\"image.png\\" /></p>\n<ol start=\\"13\\">\\n<li>Run the cells in the section <strong>Read from Delta Lake table</strong> to verify the new Delta table. There are five records in this table.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/03065fa79bce4d089f0e5a080ff7e6de_image.png\\" alt=\\"image.png\\" /></p>\n<ol start=\\"14\\">\\n<li>Run the cells in the section Insert records. The query inserts two new records: <code>record_id=00006</code>, and <code>record_id=00007</code>.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/9295b179d77c4cba95e7cc7338b01a01_image.png\\" alt=\\"image.png\\" /></p>\n<ol start=\\"15\\">\\n<li>Run the cells in the section Update records. The query updates the price of the existing records <code>record_id=00007</code>, and <code>record_id=00007</code> from <code>500</code> to <code>300</code>.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/c47c61ea7ebe4914a6f0872bd064cd04_image.png\\" alt=\\"image.png\\" /></p>\n<ol start=\\"16\\">\\n<li>Run the cells in the section Upsert records. to see how upsert works on Delta. This code inserts one new record, and updates the one existing record. You can verify that there is a new record <code>product_id=00008</code>, and the existing record <code>product_id=00001</code>’s price has been updated from <code>250</code> to <code>400</code>.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/b091922fcc154b3dbe81a35a4b5a851d_image.png\\" alt=\\"image.png\\" /></p>\n<ol start=\\"17\\">\\n<li>Run the cells in the section <strong>Alter DeltaLake table</strong>. The queries add one new column, and update the values in the column.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/977c4d40100845b4a60639d50f7f901a_image.png\\" alt=\\"image.png\\" /></p>\n<ol start=\\"18\\">\\n<li>Run the cells in the section Delete records. You can verify that the record <code>product_id=00006</code> because it’s <code>product_name</code> is <code>Pen</code>.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/943af64d1f2a4b839ab772ae247451ba_image.png\\" alt=\\"image.png\\" /></p>\n<ol start=\\"19\\">\\n<li>Run the cells in the section <strong>View History</strong> to describe the history of operations that was triggered against the target Delta table.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/1cd8e8275b6f4b11aa1164b691db6400_image.png\\" alt=\\"image.png\\" /></p>\n<p>On this notebook, you could complete the basic Spark SQL operations on Delta tables.</p>\n<h5><a id=\\"Apache_Iceberg_324\\"></a><strong>Apache Iceberg</strong></h5>\\n<p>To read/write Apache Iceberg tables in the Amazon Web Services Glue Studio notebook, complete the following:</p>\n<ol>\\n<li>Download <a href=\\"https://raw.githubusercontent.com/aws-samples/aws-glue-samples/master/examples/notebooks/iceberg_sql.ipynb\\" target=\\"_blank\\">iceberg_sql.ipynb</a>.</li>\\n<li>Open Amazon Web Services Glue Studio.</li>\n<li>Choose <strong>Jobs</strong>.</li>\\n<li>Choose <strong>Jupyter notebook</strong> and then choose <strong>Upload and edit an existing notebook</strong>. From <strong>Choose file</strong>, select your ipynb file and choose <strong>Open</strong>, then choose <strong>Create</strong>.</li>\\n<li>On the <strong>Notebook setup</strong> page, for <strong>Job name</strong>, enter your job name.</li>\\n<li>For <strong>IAM</strong> role, select your IAM role. Choose <strong>Create job</strong>. After a short time period, the Jupyter notebook editor appears.</li>\\n<li>In the first cell, replace the placeholder with your Delta connection name, and run the cell:<br />\\n<code>%connections iceberg-0131-byoc-connection</code> (Alternatively you can use your connection name created from the marketplace connector).</li>\\n<li>In the second cell, replace the S3 bucket name placeholder with your S3 bucket name, and run the cell.</li>\n<li>Run the cells in the section <strong>Initialize SparkSession</strong>.</li>\\n<li>Run the cells in the section <strong>Clean up existing resources</strong>.</li>\\n<li>Run the cells in the section <strong>Create Iceberg table with sample data</strong> to create a new Iceberg table with sample data.</li>\\n<li>Run the cells in the section <strong>Read from Iceberg table</strong>.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/6a74181f5bc54b5eaaa2dd3897d7320c_image.png\\" alt=\\"image.png\\" /></p>\n<ol start=\\"13\\">\\n<li>Run the cells in the section <strong>Upsert records into Iceberg table</strong>.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/c15f700f334e40bc92314ea783c998e0_image.png\\" alt=\\"image.png\\" /></p>\n<ol start=\\"14\\">\\n<li>Run the cells in the section <strong>Delete records</strong>.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/6c9191004f934fa6947c92530c927670_image.png\\" alt=\\"image.png\\" /></p>\n<ol start=\\"15\\">\\n<li>Run the cells in the section <strong>View History and Snapshots</strong>.</li>\\n</ol>\n<p><img src=\\"https://dev-media.amazoncloud.cn/d73ca36e325848268569d3a69d2b0857_image.png\\" alt=\\"image.png\\" /></p>\n<p>On this notebook, you could complete the basic Spark SQL operations on Iceberg tables.</p>\n<h4><a id=\\"Conclusion_359\\"></a><strong>Conclusion</strong></h4>\\n<p>This post summarized how to utilize Apache Hudi, Delta Lake, and Apache Iceberg on Amazon Web Services Glue platform, as well as demonstrate how each format works with a Glue Studio notebook. You can start using those data lake formats easily in Spark DataFrames and Spark SQL on the Glue jobs or the Glue Studio notebooks.</p>\n<p>This post focused on interactive coding and querying on notebooks. The upcoming part 2 will focus on the experience using Amazon Web Services Glue Studio Visual Editor and Glue DynamicFrames for customers who prefer visual authoring without the need to write code.</p>\n<h5><a id=\\"About_the_Authors_367\\"></a><strong>About the Authors</strong></h5>\\n<p><img src=\\"https://dev-media.amazoncloud.cn/84162c8caf7d4b34a387f14378acd25d_image.png\\" alt=\\"image.png\\" /></p>\n<p><strong>Noritaka Sekiyama</strong> is a Principal Big Data Architect on the Amazon Web Services Glue team. He enjoys learning different use cases from customers and sharing knowledge about big data technologies with the wider community.</p>\\n<p><img src=\\"https://dev-media.amazoncloud.cn/62be0d9b63494ff699b0245dcd2c4a09_image.png\\" alt=\\"image.png\\" /></p>\n<p><strong>Dylan Qu</strong> is a Specialist Solutions Architect focused on Big Data & Analytics with Amazon Web Services. He helps customers architect and build highly scalable, performant, and secure cloud-based solutions on Amazon Web Services.</p>\\n<p><img src=\\"https://dev-media.amazoncloud.cn/2e47ac5d5a0e45689f50462a7bec846e_image.png\\" alt=\\"image.png\\" /></p>\n<p><strong>Monjumi Sarma</strong> is a Data Lab Solutions Architect at Amazon Web Services. She helps customers architect data analytics solutions, which gives them an accelerated path towards modernization initiatives.</p>\n"}

Process Apache Hudi, Delta Lake, Apache Iceberg datasets at scale, part 1: Amazon Glue Studio Notebook

海外精选

海外精选的内容汇集了全球优质的亚马逊云科技相关技术内容。同时,内容中提到的“AWS”

是 “Amazon Web Services” 的缩写,在此网站不作为商标展示。

0

0 0

0亚马逊云科技解决方案 基于行业客户应用场景及技术领域的解决方案

联系亚马逊云科技专家

目录

亚马逊云科技解决方案 基于行业客户应用场景及技术领域的解决方案

联系亚马逊云科技专家

亚马逊云科技解决方案

基于行业客户应用场景及技术领域的解决方案

联系专家

0

目录

分享

分享 点赞

点赞 收藏

收藏 目录

目录立即关注

亚马逊云开发者

公众号

User Group

公众号

亚马逊云科技

官方小程序

“AWS” 是 “Amazon Web Services” 的缩写,在此网站不作为商标展示。

立即关注

亚马逊云开发者

公众号

User Group

公众号

亚马逊云科技

官方小程序

“AWS” 是 “Amazon Web Services” 的缩写,在此网站不作为商标展示。

立即关注

亚马逊云开发者

公众号

User Group

公众号

亚马逊云科技

官方小程序

“AWS” 是 “Amazon Web Services” 的缩写,在此网站不作为商标展示。