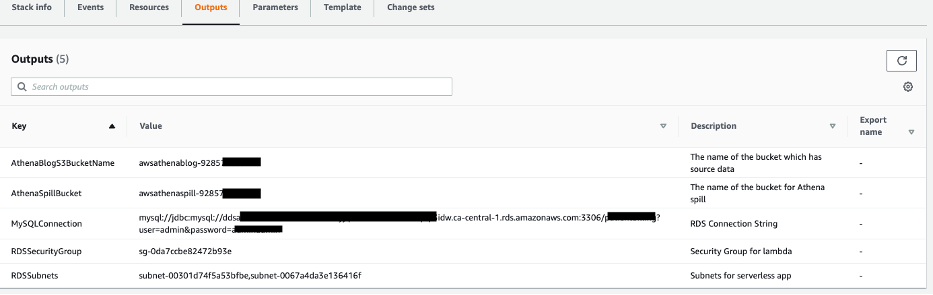

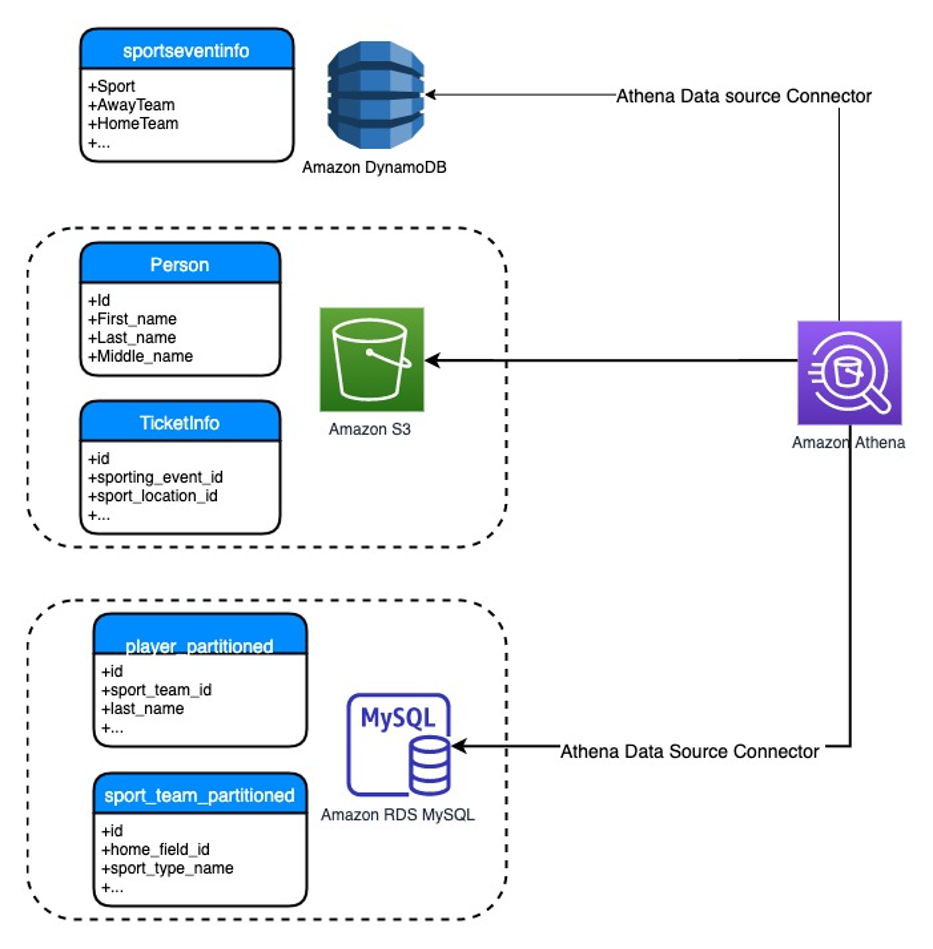

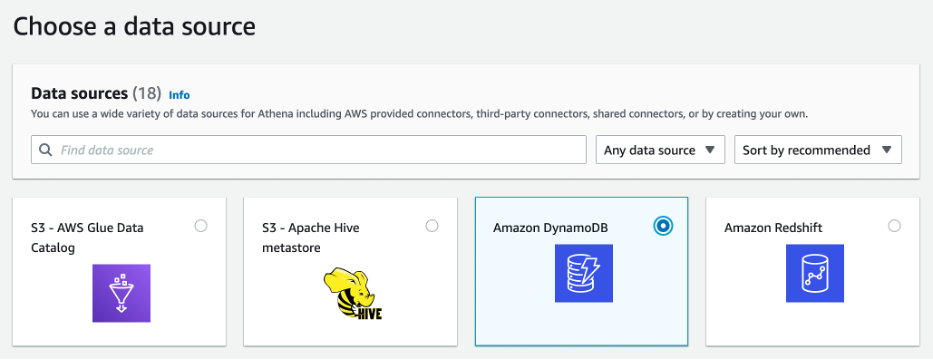

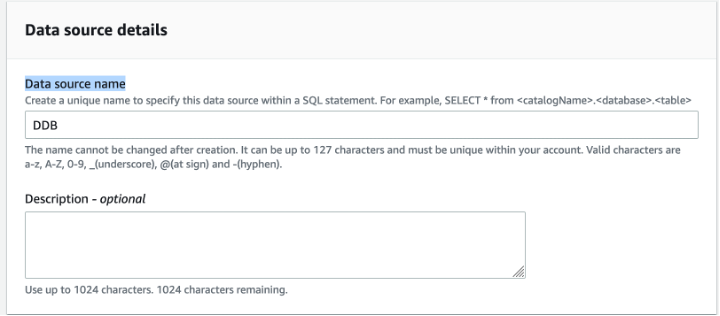

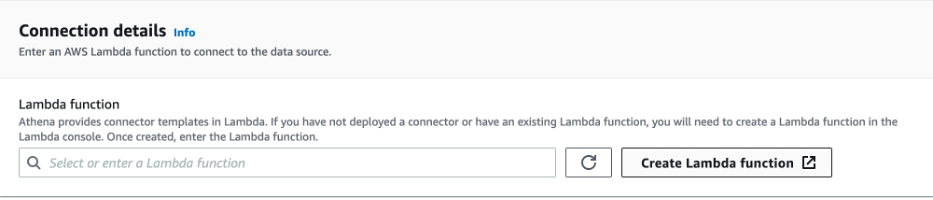

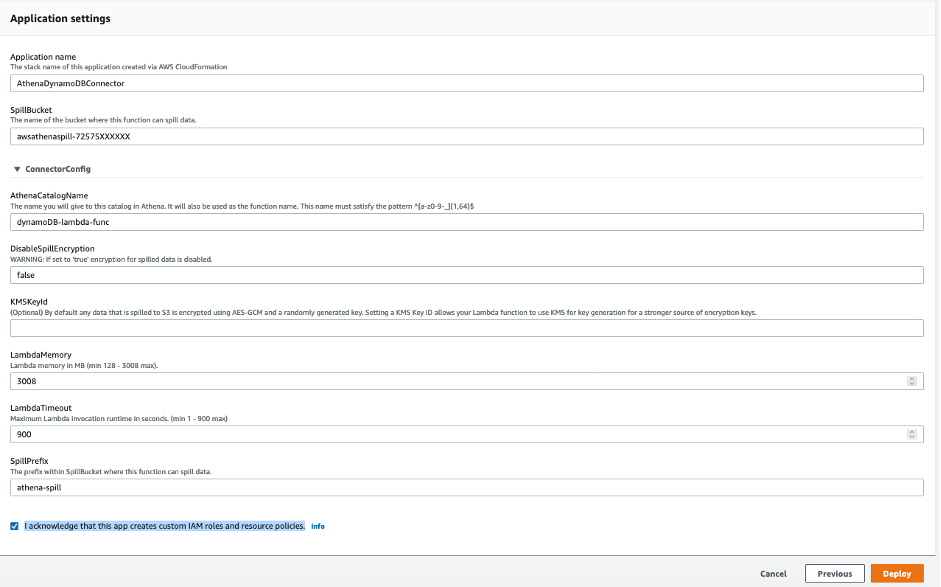

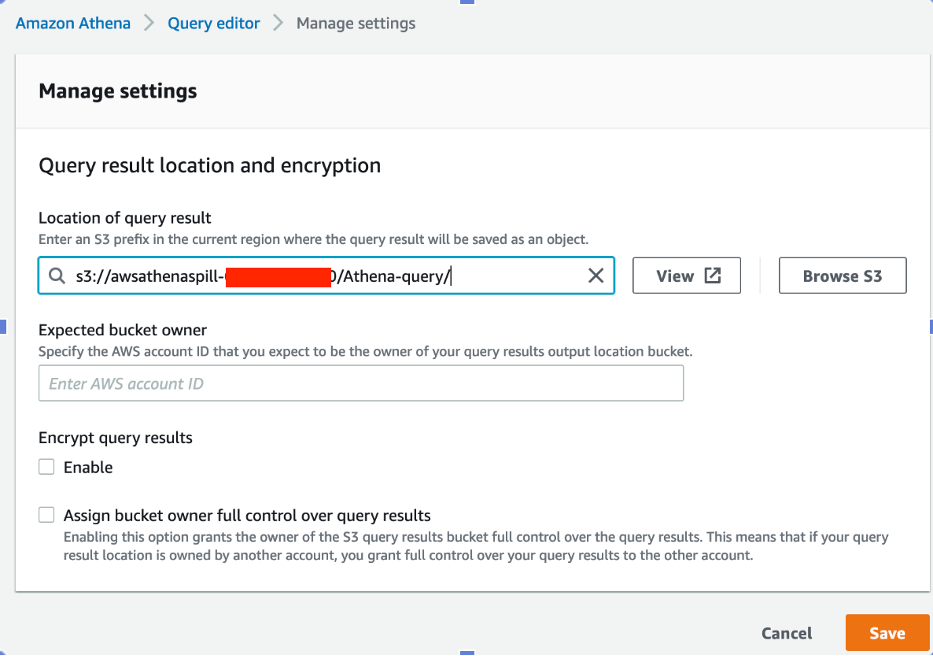

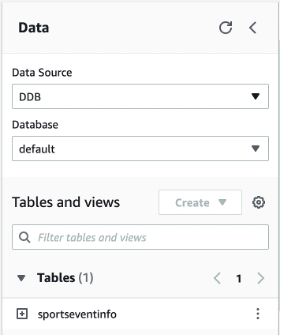

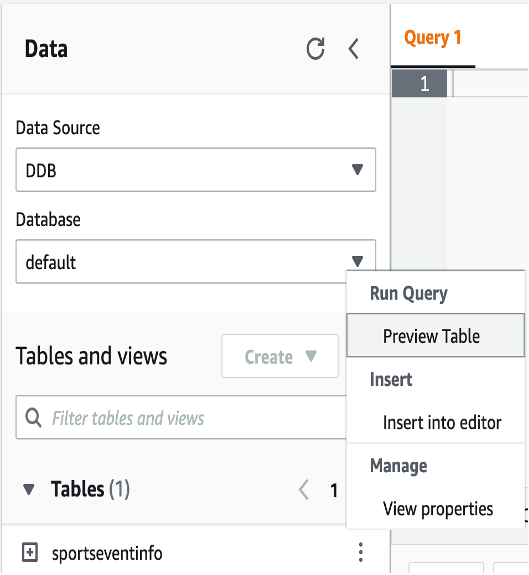

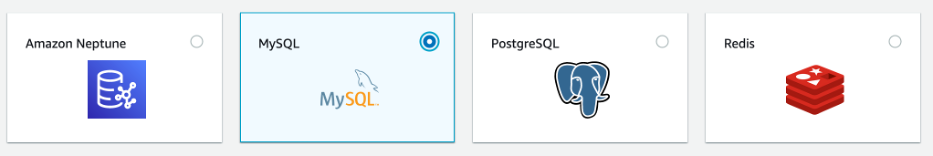

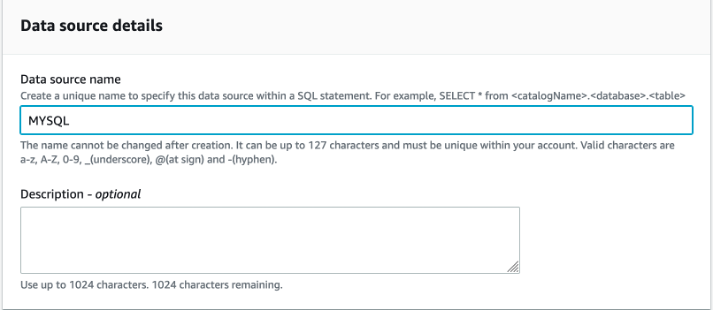

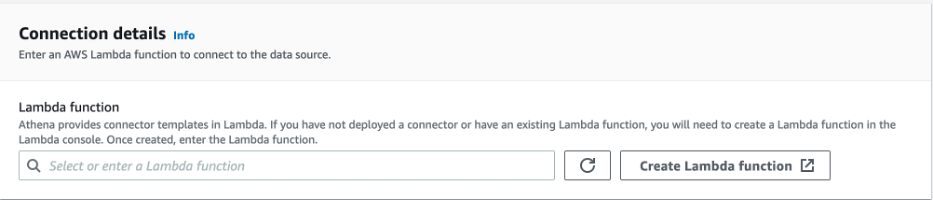

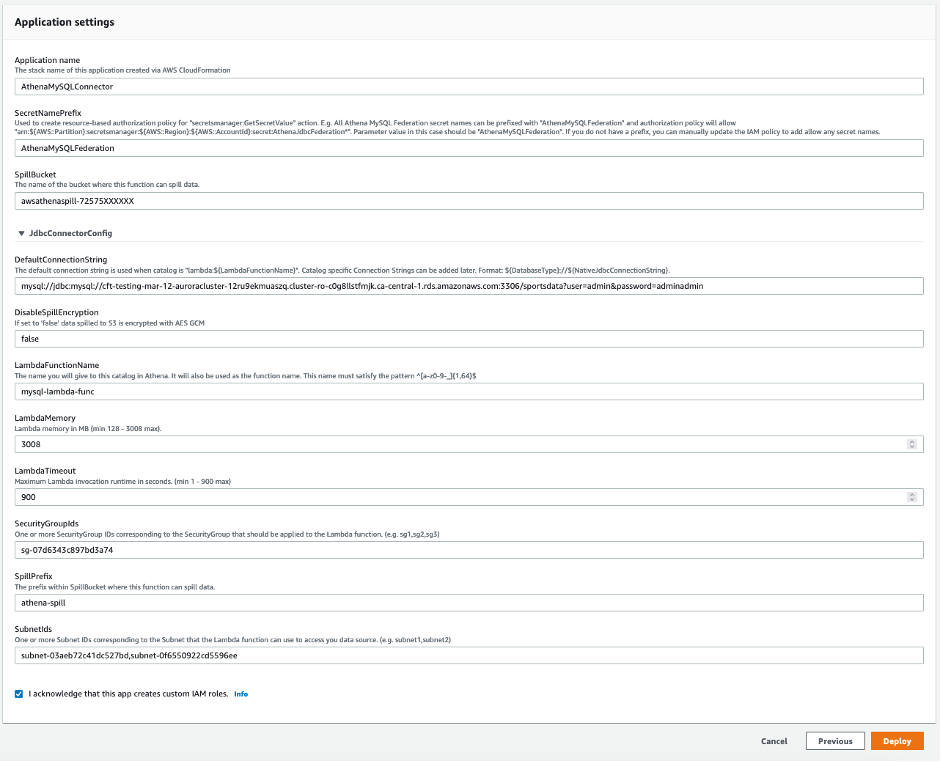

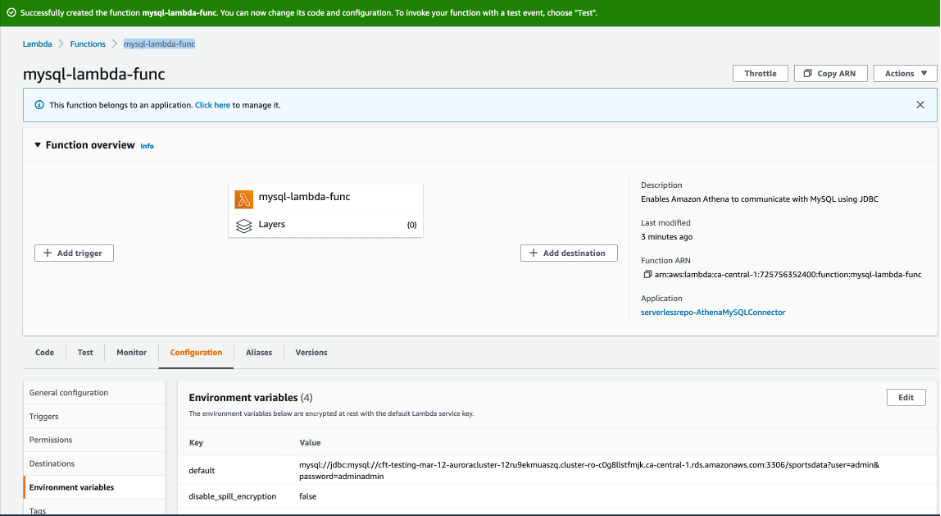

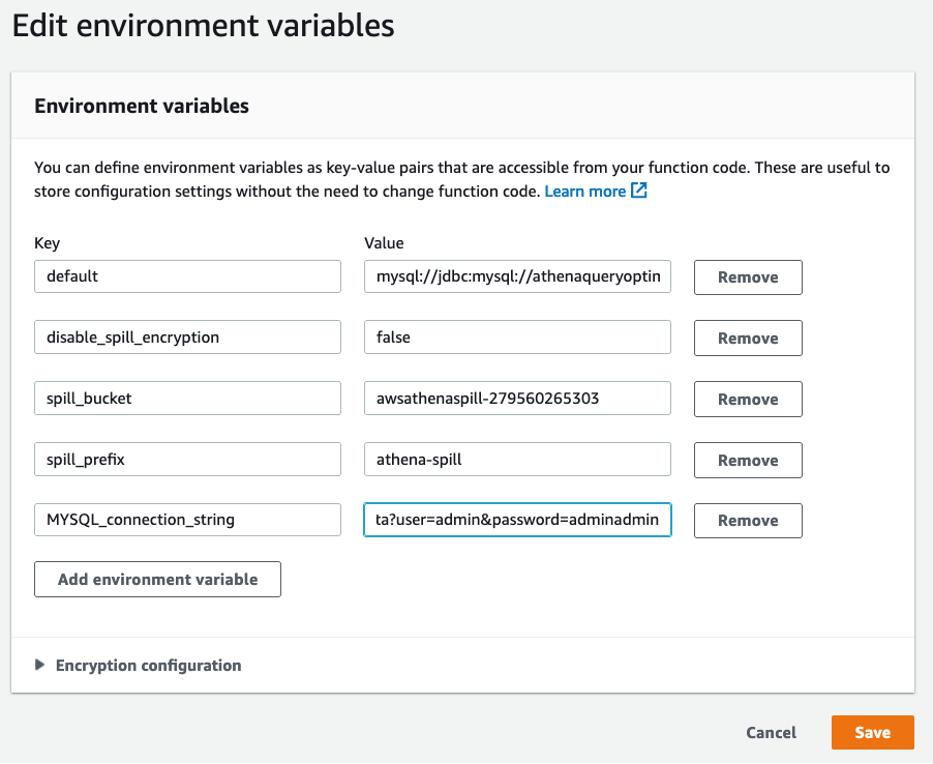

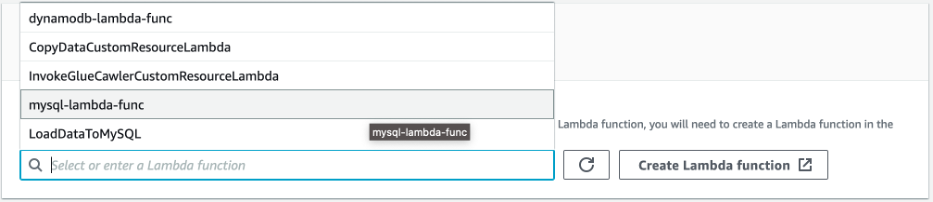

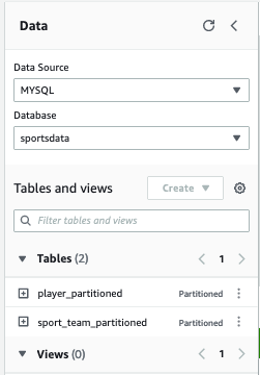

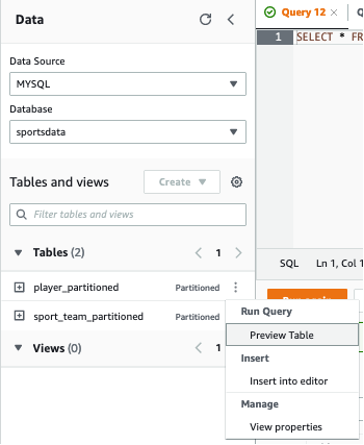

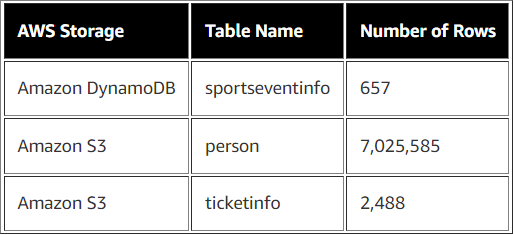

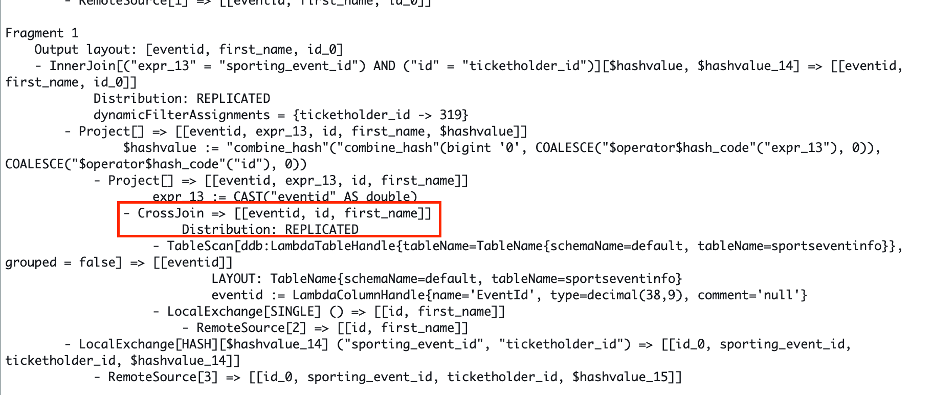

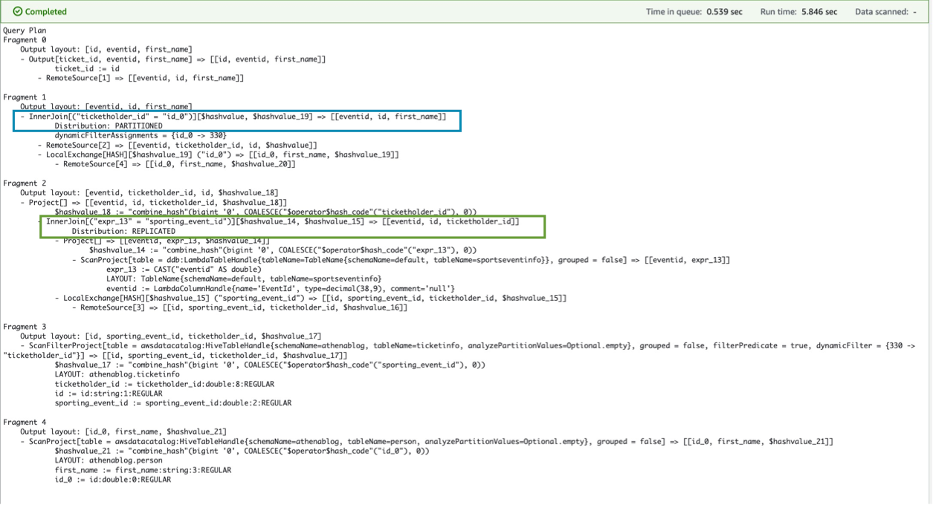

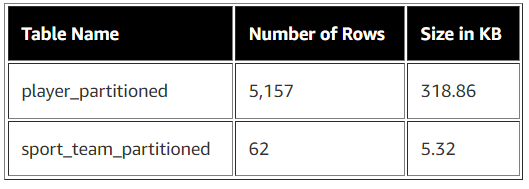

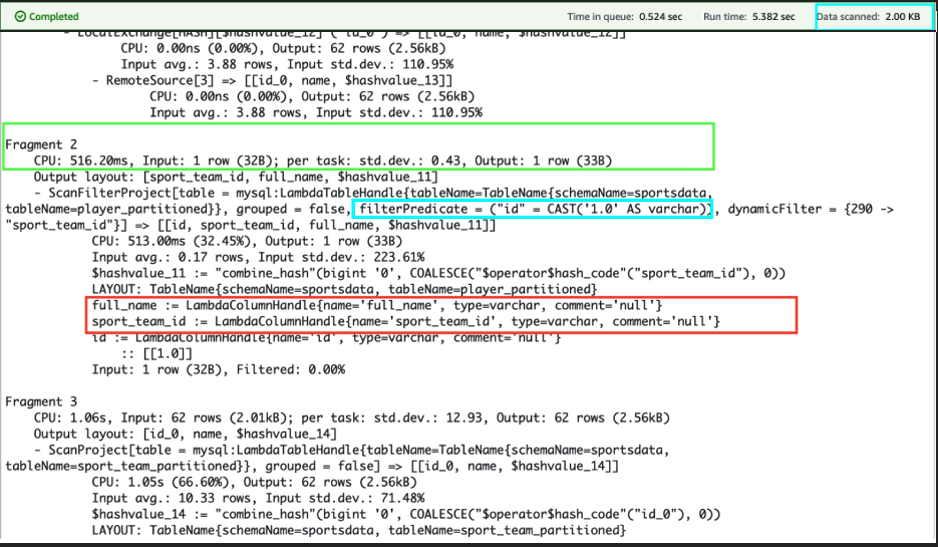

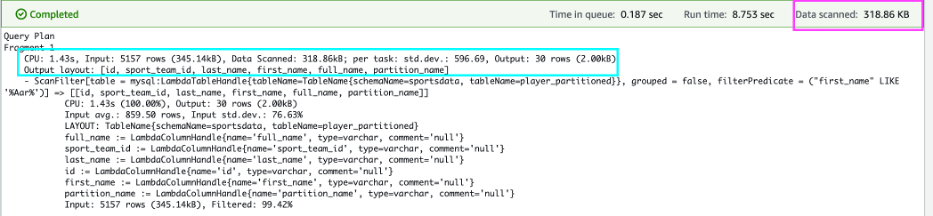

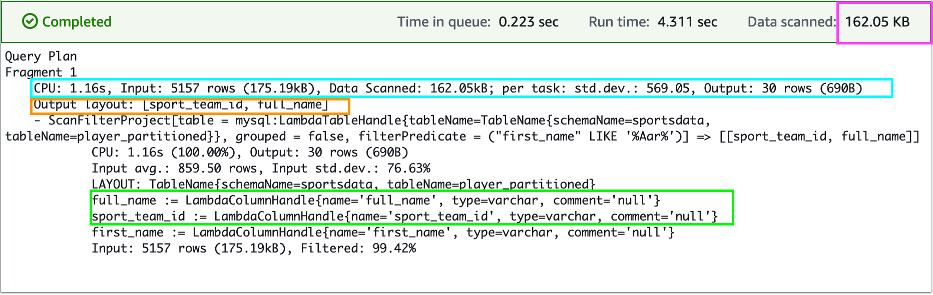

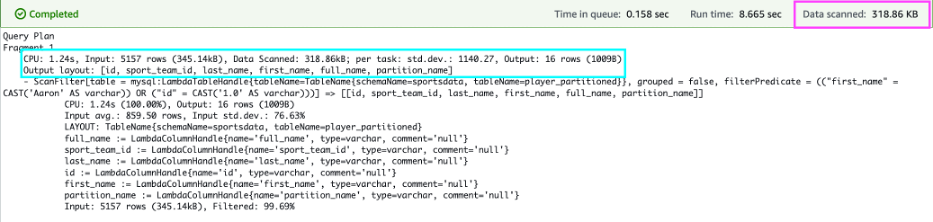

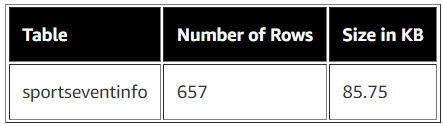

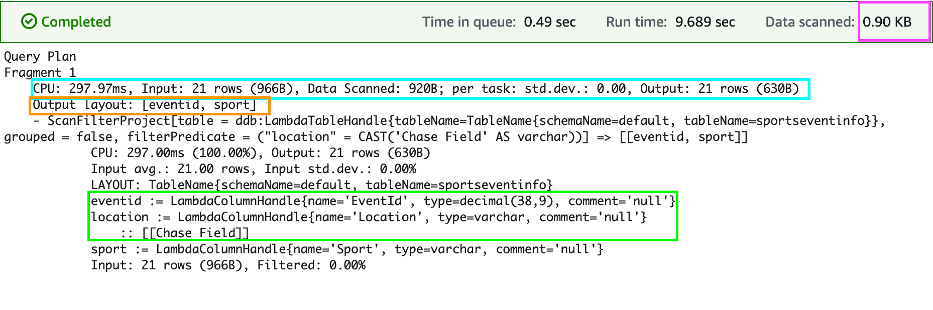

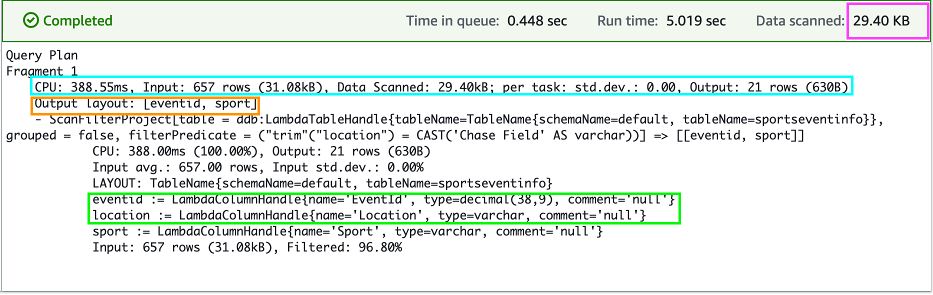

{"value":"[Amazon Athena](https://aws.amazon.com/athena/?whats-new-cards.sort-by=item.additionalFields.postDateTime&whats-new-cards.sort-order=desc) is an interactive query service that makes it easy to analyze data in [Amazon Simple Storage Service](https://aws.amazon.com/s3/) (Amazon S3) using standard SQL. Athena is serverless, so there is no infrastructure to manage, and you pay only for the queries that you run. In 2019, Athena added support for [federated queries](https://aws.amazon.com/blogs/big-data/query-any-data-source-with-amazon-athenas-new-federated-query/) to run SQL queries across data stored in relational, non-relational, object, and custom data sources.\n\nIn 2021, Athena added support for the [EXPLAIN](https://aws.amazon.com/about-aws/whats-new/2021/04/amazon-athena-now-presents-query-execution-plans-to-aid-tuning/) statement, which can help you understand and improve the efficiency of your queries. The EXPLAIN statement provides a detailed breakdown of a query’s run plan. You can analyze the plan to identify and reduce query complexity and improve its runtime. You can also use EXPLAIN to validate SQL syntax prior to running the query. Doing so helps prevent errors that would have occurred while running the query.\n\nAthena also added [EXPLAIN ANALYZE](https://aws.amazon.com/about-aws/whats-new/2021/11/amazon-athena-cost-details-query-execution-plans/), which displays the computational cost of your queries alongside their run plans. Administrators can benefit from using EXPLAIN ANALYZE because it provides a scanned data count, which helps you reduce financial impact due to user queries and apply optimizations for better cost control.\n\nIn this post, we demonstrate how to use and interpret EXPLAIN and EXPLAIN ANALYZE statements to improve Athena query performance when querying multiple data sources.\n\n### **Solution overview**\n\nTo demonstrate using EXPLAIN and EXPLAIN ANALYZE statements, we use the following services and resources:\n\n- Five database tables from the [Major League Baseball](https://github.com/aws-samples/aws-database-migration-samples/tree/master/PostgreSQL/sampledb/v1/data/csv) dataset\n- [AWS CloudFormation](https://aws.amazon.com/cloudformation/) to provision AWS resources\n- Amazon S3, [Amazon DynamoDB](https://aws.amazon.com/dynamodb/), and [Amazon Aurora MySQL-Compatible Edition](https://aws.amazon.com/rds/aurora/features/) as data storage\n\nAthena uses the AWS Glue Data Catalog to store and retrieve table metadata for the Amazon S3 data in your AWS account. The table metadata lets the Athena query engine know how to find, read, and process the data that you want to query. We use Athena data source connectors to connect to data sources external to Amazon S3.\n\n### **Prerequisites**\n\nTo deploy the CloudFormation template, you must have the following:\n\n- An AWS account\n- An [AWS Identity and Access Management (IAM)](https://aws.amazon.com/iam/) user with access to the following services:\n- Amazon Athena\n- AWS CloudFormation\n- Amazon DynamoDB\n- [AWS Glue](https://aws.amazon.com/glue/)\n- [AWS Lambda](https://aws.amazon.com/lambda/)\n- [Amazon Relational Database Service](https://aws.amazon.com/rds/) (Amazon RDS)\n- Amazon S3\n- [Amazon Virtual Private Cloud](https://aws.amazon.com/vpc/) (Amazon VPC)\n\n### **Provision resources with AWS CloudFormation**\n\nTo deploy the CloudFormation template, complete the following steps:\n\n1.Choose **Launch Stack**:\n[](https://signin.aws.amazon.com/signin?redirect_uri=https%3A%2F%2Fus-east-1.console.aws.amazon.com%2Fcloudformation%2Fhome%3Fregion%3Dus-east-1%26state%3DhashArgs%2523%252Fstacks%252Fquickcreate%253Fregion%253Dus-east-1%2526stackName%253Dathenaqueryoptimize%2526templateURL%253Dhttps%253A%252F%252Faws-blogs-artifacts-public.s3.amazonaws.com%252Fartifacts%252FBDB-1920%252FAthenaQueryPerformanceTuning.json%26isauthcode%3Dtrue&client_id=arn%3Aaws%3Aiam%3A%3A015428540659%3Auser%2Fcloudformation&forceMobileApp=0&code_challenge=PiEu3WMN6tJ-c0oHxiN00LWrj5tqJhPwwV4FC6veMvE&code_challenge_method=SHA-256)\n2.Follow the prompts on the AWS CloudFormation console to create the stack.\n3.Note the key-value pairs on the stack’s **Outputs** tab.\n\nYou use these values when configuring the Athena data source connectors.\n\n\n\nThe CloudFormation template creates the following resources:\n- S3 buckets to store data and act as temporary spill buckets for Lambda\n- AWS Glue Data Catalog tables for the data in the S3 buckets\n- A DynamoDB table and [Amazon RDS for MySQL](https://aws.amazon.com/rds/mysql/) tables, which are used to join multiple tables from different sources\n- A VPC, subnets, and endpoints, which are needed for Amazon RDS for MySQL and DynamoDB\n\nThe following figure shows the high-level data model for the data load.\n\n\n\n### **Create the DynamoDB data source connector**\n\nTo create the DynamoDB connector for Athena, complete the following steps:\n\n1.On the Athena console, choose **Data sources** in the navigation pane.\n2.Choose **Create data source**.\n3.For **Data sources**, select **Amazon DynamoDB**.\n4.Choose **Next**.\n\n\n\n5.For **Data source name**, enter DDB.\n\n\n\n6.For **Lambda function**, choose Create **Lambda function**.\n\n\n\n7.For **Application name**, enter ```AthenaDynamoDBConnector```.\n8.For **SpillBucket**, enter the value from the CloudFormation stack for ```AthenaSpillBucket```.\n9.For **AthenaCatalogName**, enter ```dynamodb-lambda-func```.\n10.Leave the remaining values at their defaults.\n11.Select **I acknowledge that this app creates custom IAM roles and resource policies**.\n12.Choose **Deploy**.\n\n\n\nYou’re returned to the Connect data sources section on the Athena console.\n\n13.Choose the refresh icon next to **Lambda function**.\n14.Choose the Lambda function you just created (```dynamodb-lambda-func```).\n15.Choose **Next**.\n16.Review the settings and choose Create **data source**.\n17.If you haven’t already set up the Athena query results location, choose View settings on the Athena query editor page.\n\n\n\n18.Choose **Manage**.\n19.For **Location of query result**, browse to the S3 bucket specified for the Athena spill bucket in the CloudFormation template.\n20.Add Athena-query to the S3 path.\n21.Choose **Save**.\n\n\n\n22.In the Athena query editor, for **Data source**, choose **DDB**.\n23.For **Database**, choose **default**.\n\nYou can now explore the schema for the ```sportseventinfo```table; the data is the same in DynamoDB.\n\n\n\n24.Choose the options icon for the ```sportseventinfo``` table and choose **Preview Table**.\n\n\n\n### **Create the Amazon RDS for MySQL data source connector**\n\nNow let’s create the connector for Amazon RDS for MySQL.\n\n1.On the Athena console, choose **Data sources** in the navigation pane.\n2.Choose **Create data source**.\n3.For **Data sources**, select **MySQL**.\n4.Choose **Next**.\n\n\n\n5.For **Data source name**, enter MySQL.\n\n\n\n6.For **Lambda function**, choose Create **Lambda function**.\n\n\n\n7.For **Application name**, enter ```AthenaMySQLConnector```.\n8.For **SecretNamePrefix**, enter ```AthenaMySQLFederation```.\n9.For **SpillBucket**, enter the value from the CloudFormation stack for ```AthenaSpillBucket```.\n10.For **DefaultConnectionString**, enter the value from the CloudFormation stack for ```MySQLConnection```.\n11.For **LambdaFunctionName**, enter ```mysql-lambda-func```.\n12.For **SecurityGroupIds**, enter the value from the CloudFormation stack for ```RDSSecurityGroup```.\n13.For **SubnetIds**, enter the value from the CloudFormation stack for ```RDSSubnets```.\n14.Select **I acknowledge that this app creates custom IAM roles and resource policies**.\n15.Choose **Deploy**.\n\n\n\n16.On the Lambda console, open the function you created (```mysql-lambda-func```).\n17.On the **Configuration** tab, under **Environment variables**, choose **Edit**.\n\n\n\n18.Choose **Add environment variable**.\n19.Enter a new key-value pair:\n- For **Key**, enter ```MYSQL_connection_string```.\n- For **Value**, enter the value from the CloudFormation stack for ```MySQLConnection```.\n20.Choose **Save**.\n\n\n\n21.Return to the **Connect data sources** section on the Athena console.\n22.Choose the refresh icon next to **Lambda function**.\n23。Choose the Lambda function you created (```mysql-lamdba-function```).\n\n\n\n24.Choose **Next**.\n25.Review the settings and choose **Create data source**.\n26.In the Athena query editor, for **Data Source**, choose **MYSQL**.\n27.For **Database**, choose **sportsdata**.\n\n\n\n28.Choose the options icon by the tables and choose **Preview Table** to examine the data and schema.\n\n\n\nIn the following sections, we demonstrate different ways to optimize our queries.\n\n### **Optimal join order using EXPLAIN plan**\n\nA join is a basic SQL operation to query data on multiple tables using relations on matching columns. Join operations affect how much data is read from a table, how much data is transferred to the intermediate stages through networks, and how much memory is needed to build up a hash table to facilitate a join.\n\nIf you have multiple join operations and these join tables aren’t in the correct order, you may experience performance issues. To demonstrate this, we use the following tables from difference sources and join them in a certain order. Then we observe the query runtime and improve performance by using the EXPLAIN feature from Athena, which provides some suggestions for optimizing the query.\n\nThe CloudFormation template you ran earlier loaded data into the following services:\n\n\n\nLet’s construct a query to find all those who participated in the event by type of tickets. The query runtime with the following join took approximately 7 mins to complete:\n\nSQL\n```\nSELECT t.id AS ticket_id, \ne.eventid, \np.first_name \nFROM \n\"DDB\".\"default\".\"sportseventinfo\" e, \n\"AwsDataCatalog\".\"athenablog\".\"person\" p, \n\"AwsDataCatalog\".\"athenablog\".\"ticketinfo\" t \nWHERE \nt.sporting_event_id = cast(e.eventid as double) \nAND t.ticketholder_id = p.id\n```\n\nNow let’s use EXPLAIN on the query to see its run plan. We use the same query as before, but add explain (TYPE DISTRIBUTED):\n\nSQL\n```\nEXPLAIN (TYPE DISTRIBUTED)\nSELECT t.id AS ticket_id, \ne.eventid, \np.first_name \nFROM \n\"DDB\".\"default\".\"sportseventinfo\" e, \n\"AwsDataCatalog\".\"athenablog\".\"person\" p, \n\"AwsDataCatalog\".\"athenablog\".\"ticketinfo\" t \nWHERE \nt.sporting_event_id = cast(e.eventid as double) \nAND t.ticketholder_id = p.id\n```\nThe following screenshot shows our output\n\n\n\nNotice the cross-join in Fragment 1. The joins are converted to a Cartesian product for each table, where every record in a table is compared to every record in another table. Therefore, this query takes a significant amount of time to complete.\n\nTo optimize our query, we can rewrite it by reordering the joining tables as ```sportseventinfo```first, ```ticketinfo``` second, and person last. The reason for this is because the WHERE clause, which is being converted to a JOIN ON clause during the query plan stage, doesn’t have the join relationship between the ```person``` table and ```sportseventinfo``` table. Therefore, the query plan generator converted the join type to cross-joins (a Cartesian product), which less efficient. Reordering the tables aligns the WHERE clause to the INNER JOIN type, which satisfies the JOIN ON clause and runtime is reduced from 7 minutes to 10 seconds.\n\nThe code for our optimized query is as follows:\n\nSQL\n```\nSELECT t.id AS ticket_id, \ne.eventid, \np.first_name \nFROM \n\"DDB\".\"default\".\"sportseventinfo\" e, \n\"AwsDataCatalog\".\"athenablog\".\"ticketinfo\" t, \n\"AwsDataCatalog\".\"athenablog\".\"person\" p \nWHERE \nt.sporting_event_id = cast(e.eventid as double) \nAND t.ticketholder_id = p.id\n```\n\nThe following is the EXPLAIN output of our query after reordering the join clause:\n\nSQL\n```\nEXPLAIN (TYPE DISTRIBUTED) \nSELECT t.id AS ticket_id, \ne.eventid, \np.first_name \nFROM \n\"DDB\".\"default\".\"sportseventinfo\" e, \n\"AwsDataCatalog\".\"athenablog\".\"ticketinfo\" t, \n\"AwsDataCatalog\".\"athenablog\".\"person\" p \nWHERE t.sporting_event_id = cast(e.eventid as double) \nAND t.ticketholder_id = p.id\n```\n\nThe following screenshot shows our output.\n\n\n\nThe cross-join changed to INNER JOIN with join on columns (```eventid```, ```id```, ```ticketholder_id```), which results in the query running faster. Joins between the ```ticketinfo``` and ```person``` tables converted to the PARTITION distribution type, where both left and right tables are hash-partitioned across all worker nodes due to the size of the ```person``` table. The join between the ```sportseventinfo``` table and ```ticketinfo``` are converted to the REPLICATED distribution type, where one table is hash-partitioned across all worker nodes and the other table is replicated to all worker nodes to perform the join operation.\n\nFor more information about how to analyze these results, refer to [Understanding Athena EXPLAIN statement results](https://docs.aws.amazon.com/athena/latest/ug/athena-explain-statement-understanding.html).\n\nAs a best practice, we recommend having a JOIN statement along with an ON clause, as shown in the following code:\n\nSQL\n```\nSELECT t.id AS ticket_id, \ne.eventid, \np.first_name \nFROM \n\"AwsDataCatalog\".\"athenablog\".\"person\" p \nJOIN \"AwsDataCatalog\".\"athenablog\".\"ticketinfo\" t ON t.ticketholder_id = p.id \nJOIN \"ddb\".\"default\".\"sportseventinfo\" e ON t.sporting_event_id = cast(e.eventid as double)\n```\nAlso as a best practice when you join two tables, specify the larger table on the left side of join and the smaller table on the right side of the join. Athena distributes the table on the right to worker nodes, and then streams the table on the left to do the join. If the table on the right is smaller, then less memory is used and the query runs faster.\n\nIn the following sections, we present examples of how to optimize pushdowns for filter predicates and projection filter operations for the Athena data source using **EXPLAIN ANALYZE**.\n\n### **Pushdown optimization for the Athena connector for Amazon RDS for MySQL**\n\nA pushdown is an optimization to improve the performance of a SQL query by moving its processing as close to the data as possible. Pushdowns can drastically reduce SQL statement processing time by filtering data before transferring it over the network and filtering data before loading it into memory. The Athena connector for Amazon RDS for MySQL supports pushdowns for filter predicates and projection pushdowns.\n\nThe following table summarizes the services and tables we use to demonstrate a pushdown using Aurora MySQL.\n\n\n\nWe use the following query as an example of a filtering predicate and projection filter:\n\nSQL\n```\nSELECT full_name,\nname \nFROM \"sportsdata\".\"player_partitioned\" a \nJOIN \"sportsdata\".\"sport_team_partitioned\" b ON a.sport_team_id=b.id \nWHERE a.id='1.0'\n```\n\nThis query selects the players and their team based on their ID. It serves as an example of both filter operations in the WHERE clause and projection because it selects only two columns.\n\nWe use EXPLAIN ANALYZE to get the cost for the running this query:\n\nSQL\n```\nEXPLAIN ANALYZE \nSELECT full_name,\nname \nFROM \"MYSQL\".\"sportsdata\".\"player_partitioned\" a \nJOIN \"MYSQL\".\"sportsdata\".\"sport_team_partitioned\" b ON a.sport_team_id=b.id \nWHERE a.id='1.0'\n```\n\nThe following screenshot shows the output in Fragment 2 for the table ```player_partitioned```, in which we observe that the connector has a successful pushdown filter on the source side, so it tries to scan only one record out of the 5,157 records in the table. The output also shows that the query scan has only two columns (```full_name``` as the projection column and ```sport_team_id``` and the join column), and uses SELECT and JOIN, which indicates the projection pushdown is successful. This helps reduce the data scan when using Athena data source connectors.\n\n\n\nNow let’s look at the conditions in which a filter predicate pushdown doesn’t work with Athena connectors.\n\n#### **LIKE statement in filter predicates**\n\nWe start with the following example query to demonstrate using the LIKE statement in filter predicates:\n\nSQL\n```\nSELECT * \nFROM \"MYSQL\".\"sportsdata\".\"player_partitioned\" \nWHERE first_name LIKE '%Aar%'\n```\n\nWe then add EXPLAIN **ANALYZE**:\n\nSQL\n```\nEXPLAIN ANALYZE \nSELECT * \nFROM \"MYSQL\".\"sportsdata\".\"player_partitioned\" \nWHERE first_name LIKE '%Aar%'\n```\n\nThe **EXPLAIN ANALYZE** output shows that the query performs the table scan (scanning the table ```player_partitioned```, which contains 5,157 records) for all the records even though the WHERE clause only has 30 records matching the condition %Aar%. Therefore, the data scan shows the complete table size even with the WHERE clause.\n\n\n\nWe can optimize the same query by selecting only the required columns:\n\nSQL\n```\nEXPLAIN ANALYZE \nSELECT sport_team_id,\nfull_name \nFROM \"MYSQL\".\"sportsdata\".\"player_partitioned\" \nWHERE first_name LIKE '%Aar%'\n```\n\nFrom the EXPLAIN ANALYZE output, we can observe that the connector supports the projection filter pushdown, because we select only two columns. This brought the data scan size down to half of the table size.\n\n\n\n#### **OR statement in filter predicates**\n\nWe start with the following query to demonstrate using the OR statement in filter predicates:\n\nSQL\n```\nSELECT id,\nfirst_name \nFROM \"MYSQL\".\"sportsdata\".\"player_partitioned\" \nWHERE first_name = 'Aaron' OR id ='1.0'\n```\n\nWe use EXPLAIN ANALYZE with the preceding query as follows:\n\nSQL\n```\nEXPLAIN ANALYZE \nSELECT * \nFROM \n\"MYSQL\".\"sportsdata\".\"player_partitioned\" \nWHERE first_name = 'Aaron' OR id ='1.0'\n```\n\nSimilar to the LIKE statement, the following output shows that query scanned the table instead of pushing down to only the records that matched the WHERE clause. This query outputs only 16 records, but the data scan indicates a complete scan.\n\n\n\n### **Pushdown optimization for the Athena connector for DynamoDB**\n\nFor our example using the DynamoDB connector, we use the following data:\n\n\n\nLet’s test the filter predicate and project filter operation for our DynamoDB table using the following query. This query tries to get all the events and sports for a given location. We use EXPLAIN ANALYZE for the query as follows:\n\nSQL\n```\nEXPLAIN ANALYZE \nSELECT EventId,\nSport \nFROM \"DDB\".\"default\".\"sportseventinfo\" \nWHERE Location = 'Chase Field'\n```\n\nThe output of EXPLAIN ANALYZE shows that the filter predicate retrieved only 21 records, and the project filter selected only two columns to push down to the source. Therefore, the data scan for this query is less than the table size.\n\n\n\nNow let’s see where filter predicate pushdown doesn’t work. In the WHERE clause, if you apply the TRIM() function to the Location column and then filter, predicate pushdown optimization doesn’t apply, but we still see the projection filter optimization, which does apply. See the following code:\n\nSQL\n```\nEXPLAIN ANALYZE \nSELECT EventId,\nSport \nFROM \"DDB\".\"default\".\"sportseventinfo\" \nWHERE trim(Location) = 'Chase Field'\n```\n\nThe output of EXPLAIN ANALYZE for this query shows that the query scans all the rows but is still limited to only two columns, which shows that the filter predicate doesn’t work when the TRIM function is applied.\n\n\n\n\nWe’ve seen from the preceding examples that the Athena data source connector for Amazon RDS for MySQL and DynamoDB do support filter predicates and projection predicates for pushdown optimization, but we also saw that operations such as LIKE,OR, and TRIM when used in the filter predicate don’t support pushdowns to the source. Therefore, if you encounter unexplained charges in your federated Athena query, we recommend using EXPLAIN ANALYZE with the query and determine whether your Athena connector supports the pushdown operation or not.\n\nPlease note that running EXPLAIN ANALYZE incurs cost because it scans the data.\n\n### **Conclusion**\n\nIn this post, we showcased how to use EXPLAIN and EXPLAIN ANALYZE to analyze Athena SQL queries for data sources on AWS S3 and Athena federated SQL query for data source like DynamoDB and Amazon RDS for MySQL. You can use this as an example to optimize queries which would also result in cost savings.\n\n#### **About the Authors**\n\n\n\n**Nishchai JM** is an Analytics Specialist Solutions Architect at Amazon Web services. He specializes in building Big-data applications and help customer to modernize their applications on Cloud. He thinks Data is new oil and spends most of his time in deriving insights out of the Data.\n\n\n\n**Varad Ram** is Senior Solutions Architect in Amazon Web Services. He likes to help customers adopt to cloud technologies and is particularly interested in artificial intelligence. He believes deep learning will power future technology growth. In his spare time, he like to be outdoor with his daughter and son.","render":"<p><a href=\"https://aws.amazon.com/athena/?whats-new-cards.sort-by=item.additionalFields.postDateTime&whats-new-cards.sort-order=desc\" target=\"_blank\">Amazon Athena</a> is an interactive query service that makes it easy to analyze data in <a href=\"https://aws.amazon.com/s3/\" target=\"_blank\">Amazon Simple Storage Service</a> (Amazon S3) using standard SQL. Athena is serverless, so there is no infrastructure to manage, and you pay only for the queries that you run. In 2019, Athena added support for <a href=\"https://aws.amazon.com/blogs/big-data/query-any-data-source-with-amazon-athenas-new-federated-query/\" target=\"_blank\">federated queries</a> to run SQL queries across data stored in relational, non-relational, object, and custom data sources.</p>\n<p>In 2021, Athena added support for the <a href=\"https://aws.amazon.com/about-aws/whats-new/2021/04/amazon-athena-now-presents-query-execution-plans-to-aid-tuning/\" target=\"_blank\">EXPLAIN</a> statement, which can help you understand and improve the efficiency of your queries. The EXPLAIN statement provides a detailed breakdown of a query’s run plan. You can analyze the plan to identify and reduce query complexity and improve its runtime. You can also use EXPLAIN to validate SQL syntax prior to running the query. Doing so helps prevent errors that would have occurred while running the query.</p>\n<p>Athena also added <a href=\"https://aws.amazon.com/about-aws/whats-new/2021/11/amazon-athena-cost-details-query-execution-plans/\" target=\"_blank\">EXPLAIN ANALYZE</a>, which displays the computational cost of your queries alongside their run plans. Administrators can benefit from using EXPLAIN ANALYZE because it provides a scanned data count, which helps you reduce financial impact due to user queries and apply optimizations for better cost control.</p>\n<p>In this post, we demonstrate how to use and interpret EXPLAIN and EXPLAIN ANALYZE statements to improve Athena query performance when querying multiple data sources.</p>\n<h3><a id=\"Solution_overview_8\"></a><strong>Solution overview</strong></h3>\n<p>To demonstrate using EXPLAIN and EXPLAIN ANALYZE statements, we use the following services and resources:</p>\n<ul>\n<li>Five database tables from the <a href=\"https://github.com/aws-samples/aws-database-migration-samples/tree/master/PostgreSQL/sampledb/v1/data/csv\" target=\"_blank\">Major League Baseball</a> dataset</li>\n<li><a href=\"https://aws.amazon.com/cloudformation/\" target=\"_blank\">AWS CloudFormation</a> to provision AWS resources</li>\n<li>Amazon S3, <a href=\"https://aws.amazon.com/dynamodb/\" target=\"_blank\">Amazon DynamoDB</a>, and <a href=\"https://aws.amazon.com/rds/aurora/features/\" target=\"_blank\">Amazon Aurora MySQL-Compatible Edition</a> as data storage</li>\n</ul>\n<p>Athena uses the AWS Glue Data Catalog to store and retrieve table metadata for the Amazon S3 data in your AWS account. The table metadata lets the Athena query engine know how to find, read, and process the data that you want to query. We use Athena data source connectors to connect to data sources external to Amazon S3.</p>\n<h3><a id=\"Prerequisites_18\"></a><strong>Prerequisites</strong></h3>\n<p>To deploy the CloudFormation template, you must have the following:</p>\n<ul>\n<li>An AWS account</li>\n<li>An <a href=\"https://aws.amazon.com/iam/\" target=\"_blank\">AWS Identity and Access Management (IAM)</a> user with access to the following services:</li>\n<li>Amazon Athena</li>\n<li>AWS CloudFormation</li>\n<li>Amazon DynamoDB</li>\n<li><a href=\"https://aws.amazon.com/glue/\" target=\"_blank\">AWS Glue</a></li>\n<li><a href=\"https://aws.amazon.com/lambda/\" target=\"_blank\">AWS Lambda</a></li>\n<li><a href=\"https://aws.amazon.com/rds/\" target=\"_blank\">Amazon Relational Database Service</a> (Amazon RDS)</li>\n<li>Amazon S3</li>\n<li><a href=\"https://aws.amazon.com/vpc/\" target=\"_blank\">Amazon Virtual Private Cloud</a> (Amazon VPC)</li>\n</ul>\n<h3><a id=\"Provision_resources_with_AWS_CloudFormation_33\"></a><strong>Provision resources with AWS CloudFormation</strong></h3>\n<p>To deploy the CloudFormation template, complete the following steps:</p>\n<p>1.Choose <strong>Launch Stack</strong>:<br />\n<a href=\"https://signin.aws.amazon.com/signin?redirect_uri=https%3A%2F%2Fus-east-1.console.aws.amazon.com%2Fcloudformation%2Fhome%3Fregion%3Dus-east-1%26state%3DhashArgs%2523%252Fstacks%252Fquickcreate%253Fregion%253Dus-east-1%2526stackName%253Dathenaqueryoptimize%2526templateURL%253Dhttps%253A%252F%252Faws-blogs-artifacts-public.s3.amazonaws.com%252Fartifacts%252FBDB-1920%252FAthenaQueryPerformanceTuning.json%26isauthcode%3Dtrue&client_id=arn%3Aaws%3Aiam%3A%3A015428540659%3Auser%2Fcloudformation&forceMobileApp=0&code_challenge=PiEu3WMN6tJ-c0oHxiN00LWrj5tqJhPwwV4FC6veMvE&code_challenge_method=SHA-256\" target=\"_blank\"><img src=\"https://dev-media.amazoncloud.cn/7579c03373394927abe31b1b862f473c_image.png\" alt=\"image.png\" /></a><br />\n2.Follow the prompts on the AWS CloudFormation console to create the stack.<br />\n3.Note the key-value pairs on the stack’s <strong>Outputs</strong> tab.</p>\n<p>You use these values when configuring the Athena data source connectors.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/50f780f5c6324f84a73ac967425ae85f_image.png\" alt=\"image.png\" /></p>\n<p>The CloudFormation template creates the following resources:</p>\n<ul>\n<li>S3 buckets to store data and act as temporary spill buckets for Lambda</li>\n<li>AWS Glue Data Catalog tables for the data in the S3 buckets</li>\n<li>A DynamoDB table and <a href=\"https://aws.amazon.com/rds/mysql/\" target=\"_blank\">Amazon RDS for MySQL</a> tables, which are used to join multiple tables from different sources</li>\n<li>A VPC, subnets, and endpoints, which are needed for Amazon RDS for MySQL and DynamoDB</li>\n</ul>\n<p>The following figure shows the high-level data model for the data load.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/f0edc994e1f540ffb7079814d9da76f8_image.png\" alt=\"image.png\" /></p>\n<h3><a id=\"Create_the_DynamoDB_data_source_connector_56\"></a><strong>Create the DynamoDB data source connector</strong></h3>\n<p>To create the DynamoDB connector for Athena, complete the following steps:</p>\n<p>1.On the Athena console, choose <strong>Data sources</strong> in the navigation pane.<br />\n2.Choose <strong>Create data source</strong>.<br />\n3.For <strong>Data sources</strong>, select <strong>Amazon DynamoDB</strong>.<br />\n4.Choose <strong>Next</strong>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/a6764a5f602142359e2e40f87846e412_image.png\" alt=\"image.png\" /></p>\n<p>5.For <strong>Data source name</strong>, enter DDB.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/90677ef889664042affacf6516b6fedd_image.png\" alt=\"image.png\" /></p>\n<p>6.For <strong>Lambda function</strong>, choose Create <strong>Lambda function</strong>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/fe7df7a911b248d18843737cee83fa04_image.png\" alt=\"image.png\" /></p>\n<p>7.For <strong>Application name</strong>, enter <code>AthenaDynamoDBConnector</code>.<br />\n8.For <strong>SpillBucket</strong>, enter the value from the CloudFormation stack for <code>AthenaSpillBucket</code>.<br />\n9.For <strong>AthenaCatalogName</strong>, enter <code>dynamodb-lambda-func</code>.<br />\n10.Leave the remaining values at their defaults.<br />\n11.Select <strong>I acknowledge that this app creates custom IAM roles and resource policies</strong>.<br />\n12.Choose <strong>Deploy</strong>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/a59db2c0561a45de8fc41f64cd586848_image.png\" alt=\"image.png\" /></p>\n<p>You’re returned to the Connect data sources section on the Athena console.</p>\n<p>13.Choose the refresh icon next to <strong>Lambda function</strong>.<br />\n14.Choose the Lambda function you just created (<code>dynamodb-lambda-func</code>).<br />\n15.Choose <strong>Next</strong>.<br />\n16.Review the settings and choose Create <strong>data source</strong>.<br />\n17.If you haven’t already set up the Athena query results location, choose View settings on the Athena query editor page.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/090184b7712a448a9919bdb19831af6c_image.png\" alt=\"image.png\" /></p>\n<p>18.Choose <strong>Manage</strong>.<br />\n19.For <strong>Location of query result</strong>, browse to the S3 bucket specified for the Athena spill bucket in the CloudFormation template.<br />\n20.Add Athena-query to the S3 path.<br />\n21.Choose <strong>Save</strong>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/cfcbc21b82354f18bf9a261d1f02585a_image.png\" alt=\"image.png\" /></p>\n<p>22.In the Athena query editor, for <strong>Data source</strong>, choose <strong>DDB</strong>.<br />\n23.For <strong>Database</strong>, choose <strong>default</strong>.</p>\n<p>You can now explore the schema for the <code>sportseventinfo</code>table; the data is the same in DynamoDB.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/758784b4485d436c81eb27da102e3650_image.png\" alt=\"image.png\" /></p>\n<p>24.Choose the options icon for the <code>sportseventinfo</code> table and choose <strong>Preview Table</strong>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/735ff9d9df5e41d582fb1b68ae7fd057_image.png\" alt=\"image.png\" /></p>\n<h3><a id=\"Create_the_Amazon_RDS_for_MySQL_data_source_connector_112\"></a><strong>Create the Amazon RDS for MySQL data source connector</strong></h3>\n<p>Now let’s create the connector for Amazon RDS for MySQL.</p>\n<p>1.On the Athena console, choose <strong>Data sources</strong> in the navigation pane.<br />\n2.Choose <strong>Create data source</strong>.<br />\n3.For <strong>Data sources</strong>, select <strong>MySQL</strong>.<br />\n4.Choose <strong>Next</strong>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/c8f5c79528ed405792026a9631e50456_image.png\" alt=\"image.png\" /></p>\n<p>5.For <strong>Data source name</strong>, enter MySQL.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/7141c04b0ca747aa8528e5a62d36ea25_image.png\" alt=\"image.png\" /></p>\n<p>6.For <strong>Lambda function</strong>, choose Create <strong>Lambda function</strong>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/34ef662fd3f64c8693a8bc2729433a98_image.png\" alt=\"image.png\" /></p>\n<p>7.For <strong>Application name</strong>, enter <code>AthenaMySQLConnector</code>.<br />\n8.For <strong>SecretNamePrefix</strong>, enter <code>AthenaMySQLFederation</code>.<br />\n9.For <strong>SpillBucket</strong>, enter the value from the CloudFormation stack for <code>AthenaSpillBucket</code>.<br />\n10.For <strong>DefaultConnectionString</strong>, enter the value from the CloudFormation stack for <code>MySQLConnection</code>.<br />\n11.For <strong>LambdaFunctionName</strong>, enter <code>mysql-lambda-func</code>.<br />\n12.For <strong>SecurityGroupIds</strong>, enter the value from the CloudFormation stack for <code>RDSSecurityGroup</code>.<br />\n13.For <strong>SubnetIds</strong>, enter the value from the CloudFormation stack for <code>RDSSubnets</code>.<br />\n14.Select <strong>I acknowledge that this app creates custom IAM roles and resource policies</strong>.<br />\n15.Choose <strong>Deploy</strong>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/8e05c58c77874ee3a41c6d956779cd3e_image.png\" alt=\"image.png\" /></p>\n<p>16.On the Lambda console, open the function you created (<code>mysql-lambda-func</code>).<br />\n17.On the <strong>Configuration</strong> tab, under <strong>Environment variables</strong>, choose <strong>Edit</strong>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/2787a698f090449abb26e9329a098dd1_image.png\" alt=\"image.png\" /></p>\n<p>18.Choose <strong>Add environment variable</strong>.<br />\n19.Enter a new key-value pair:</p>\n<ul>\n<li>For <strong>Key</strong>, enter <code>MYSQL_connection_string</code>.</li>\n<li>For <strong>Value</strong>, enter the value from the CloudFormation stack for <code>MySQLConnection</code>.<br />\n20.Choose <strong>Save</strong>.</li>\n</ul>\n<p><img src=\"https://dev-media.amazoncloud.cn/2ce463b75432484b9ec6b7e3a46f2b4c_image.png\" alt=\"image.png\" /></p>\n<p>21.Return to the <strong>Connect data sources</strong> section on the Athena console.<br />\n22.Choose the refresh icon next to <strong>Lambda function</strong>.<br />\n23。Choose the Lambda function you created (<code>mysql-lamdba-function</code>).</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/7f30612cfb0e4127ad2dfc226c582c84_image.png\" alt=\"image.png\" /></p>\n<p>24.Choose <strong>Next</strong>.<br />\n25.Review the settings and choose <strong>Create data source</strong>.<br />\n26.In the Athena query editor, for <strong>Data Source</strong>, choose <strong>MYSQL</strong>.<br />\n27.For <strong>Database</strong>, choose <strong>sportsdata</strong>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/0002afec6e144584b34ded35916ccfc8_image.png\" alt=\"image.png\" /></p>\n<p>28.Choose the options icon by the tables and choose <strong>Preview Table</strong> to examine the data and schema.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/638988f33c9c4935bc9508ec933a7c92_image.png\" alt=\"image.png\" /></p>\n<p>In the following sections, we demonstrate different ways to optimize our queries.</p>\n<h3><a id=\"Optimal_join_order_using_EXPLAIN_plan_175\"></a><strong>Optimal join order using EXPLAIN plan</strong></h3>\n<p>A join is a basic SQL operation to query data on multiple tables using relations on matching columns. Join operations affect how much data is read from a table, how much data is transferred to the intermediate stages through networks, and how much memory is needed to build up a hash table to facilitate a join.</p>\n<p>If you have multiple join operations and these join tables aren’t in the correct order, you may experience performance issues. To demonstrate this, we use the following tables from difference sources and join them in a certain order. Then we observe the query runtime and improve performance by using the EXPLAIN feature from Athena, which provides some suggestions for optimizing the query.</p>\n<p>The CloudFormation template you ran earlier loaded data into the following services:</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/c8ce57878bd44dc5a6b699bc62f79c46_image.png\" alt=\"image.png\" /></p>\n<p>Let’s construct a query to find all those who participated in the event by type of tickets. The query runtime with the following join took approximately 7 mins to complete:</p>\n<p>SQL</p>\n<pre><code class=\"lang-\">SELECT t.id AS ticket_id, \ne.eventid, \np.first_name \nFROM \n"DDB"."default"."sportseventinfo" e, \n"AwsDataCatalog"."athenablog"."person" p, \n"AwsDataCatalog"."athenablog"."ticketinfo" t \nWHERE \nt.sporting_event_id = cast(e.eventid as double) \nAND t.ticketholder_id = p.id\n</code></pre>\n<p>Now let’s use EXPLAIN on the query to see its run plan. We use the same query as before, but add explain (TYPE DISTRIBUTED):</p>\n<p>SQL</p>\n<pre><code class=\"lang-\">EXPLAIN (TYPE DISTRIBUTED)\nSELECT t.id AS ticket_id, \ne.eventid, \np.first_name \nFROM \n"DDB"."default"."sportseventinfo" e, \n"AwsDataCatalog"."athenablog"."person" p, \n"AwsDataCatalog"."athenablog"."ticketinfo" t \nWHERE \nt.sporting_event_id = cast(e.eventid as double) \nAND t.ticketholder_id = p.id\n</code></pre>\n<p>The following screenshot shows our output</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/dec40ea90d7f4c7b86828527f3ee16bf_image.png\" alt=\"image.png\" /></p>\n<p>Notice the cross-join in Fragment 1. The joins are converted to a Cartesian product for each table, where every record in a table is compared to every record in another table. Therefore, this query takes a significant amount of time to complete.</p>\n<p>To optimize our query, we can rewrite it by reordering the joining tables as <code>sportseventinfo</code>first, <code>ticketinfo</code> second, and person last. The reason for this is because the WHERE clause, which is being converted to a JOIN ON clause during the query plan stage, doesn’t have the join relationship between the <code>person</code> table and <code>sportseventinfo</code> table. Therefore, the query plan generator converted the join type to cross-joins (a Cartesian product), which less efficient. Reordering the tables aligns the WHERE clause to the INNER JOIN type, which satisfies the JOIN ON clause and runtime is reduced from 7 minutes to 10 seconds.</p>\n<p>The code for our optimized query is as follows:</p>\n<p>SQL</p>\n<pre><code class=\"lang-\">SELECT t.id AS ticket_id, \ne.eventid, \np.first_name \nFROM \n"DDB"."default"."sportseventinfo" e, \n"AwsDataCatalog"."athenablog"."ticketinfo" t, \n"AwsDataCatalog"."athenablog"."person" p \nWHERE \nt.sporting_event_id = cast(e.eventid as double) \nAND t.ticketholder_id = p.id\n</code></pre>\n<p>The following is the EXPLAIN output of our query after reordering the join clause:</p>\n<p>SQL</p>\n<pre><code class=\"lang-\">EXPLAIN (TYPE DISTRIBUTED) \nSELECT t.id AS ticket_id, \ne.eventid, \np.first_name \nFROM \n"DDB"."default"."sportseventinfo" e, \n"AwsDataCatalog"."athenablog"."ticketinfo" t, \n"AwsDataCatalog"."athenablog"."person" p \nWHERE t.sporting_event_id = cast(e.eventid as double) \nAND t.ticketholder_id = p.id\n</code></pre>\n<p>The following screenshot shows our output.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/17322ed63dfa43fa9b977838dcd28cd8_image.png\" alt=\"image.png\" /></p>\n<p>The cross-join changed to INNER JOIN with join on columns (<code>eventid</code>, <code>id</code>, <code>ticketholder_id</code>), which results in the query running faster. Joins between the <code>ticketinfo</code> and <code>person</code> tables converted to the PARTITION distribution type, where both left and right tables are hash-partitioned across all worker nodes due to the size of the <code>person</code> table. The join between the <code>sportseventinfo</code> table and <code>ticketinfo</code> are converted to the REPLICATED distribution type, where one table is hash-partitioned across all worker nodes and the other table is replicated to all worker nodes to perform the join operation.</p>\n<p>For more information about how to analyze these results, refer to <a href=\"https://docs.aws.amazon.com/athena/latest/ug/athena-explain-statement-understanding.html\" target=\"_blank\">Understanding Athena EXPLAIN statement results</a>.</p>\n<p>As a best practice, we recommend having a JOIN statement along with an ON clause, as shown in the following code:</p>\n<p>SQL</p>\n<pre><code class=\"lang-\">SELECT t.id AS ticket_id, \ne.eventid, \np.first_name \nFROM \n"AwsDataCatalog"."athenablog"."person" p \nJOIN "AwsDataCatalog"."athenablog"."ticketinfo" t ON t.ticketholder_id = p.id \nJOIN "ddb"."default"."sportseventinfo" e ON t.sporting_event_id = cast(e.eventid as double)\n</code></pre>\n<p>Also as a best practice when you join two tables, specify the larger table on the left side of join and the smaller table on the right side of the join. Athena distributes the table on the right to worker nodes, and then streams the table on the left to do the join. If the table on the right is smaller, then less memory is used and the query runs faster.</p>\n<p>In the following sections, we present examples of how to optimize pushdowns for filter predicates and projection filter operations for the Athena data source using <strong>EXPLAIN ANALYZE</strong>.</p>\n<h3><a id=\"Pushdown_optimization_for_the_Athena_connector_for_Amazon_RDS_for_MySQL_281\"></a><strong>Pushdown optimization for the Athena connector for Amazon RDS for MySQL</strong></h3>\n<p>A pushdown is an optimization to improve the performance of a SQL query by moving its processing as close to the data as possible. Pushdowns can drastically reduce SQL statement processing time by filtering data before transferring it over the network and filtering data before loading it into memory. The Athena connector for Amazon RDS for MySQL supports pushdowns for filter predicates and projection pushdowns.</p>\n<p>The following table summarizes the services and tables we use to demonstrate a pushdown using Aurora MySQL.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/feb9813b2df04e9a9f8047d6f3424839_image.png\" alt=\"image.png\" /></p>\n<p>We use the following query as an example of a filtering predicate and projection filter:</p>\n<p>SQL</p>\n<pre><code class=\"lang-\">SELECT full_name,\nname \nFROM "sportsdata"."player_partitioned" a \nJOIN "sportsdata"."sport_team_partitioned" b ON a.sport_team_id=b.id \nWHERE a.id='1.0'\n</code></pre>\n<p>This query selects the players and their team based on their ID. It serves as an example of both filter operations in the WHERE clause and projection because it selects only two columns.</p>\n<p>We use EXPLAIN ANALYZE to get the cost for the running this query:</p>\n<p>SQL</p>\n<pre><code class=\"lang-\">EXPLAIN ANALYZE \nSELECT full_name,\nname \nFROM "MYSQL"."sportsdata"."player_partitioned" a \nJOIN "MYSQL"."sportsdata"."sport_team_partitioned" b ON a.sport_team_id=b.id \nWHERE a.id='1.0'\n</code></pre>\n<p>The following screenshot shows the output in Fragment 2 for the table <code>player_partitioned</code>, in which we observe that the connector has a successful pushdown filter on the source side, so it tries to scan only one record out of the 5,157 records in the table. The output also shows that the query scan has only two columns (<code>full_name</code> as the projection column and <code>sport_team_id</code> and the join column), and uses SELECT and JOIN, which indicates the projection pushdown is successful. This helps reduce the data scan when using Athena data source connectors.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/6a9a8c0c95244f46af240f439fb3566f_image.png\" alt=\"image.png\" /></p>\n<p>Now let’s look at the conditions in which a filter predicate pushdown doesn’t work with Athena connectors.</p>\n<h4><a id=\"LIKE_statement_in_filter_predicates_320\"></a><strong>LIKE statement in filter predicates</strong></h4>\n<p>We start with the following example query to demonstrate using the LIKE statement in filter predicates:</p>\n<p>SQL</p>\n<pre><code class=\"lang-\">SELECT * \nFROM "MYSQL"."sportsdata"."player_partitioned" \nWHERE first_name LIKE '%Aar%'\n</code></pre>\n<p>We then add EXPLAIN <strong>ANALYZE</strong>:</p>\n<p>SQL</p>\n<pre><code class=\"lang-\">EXPLAIN ANALYZE \nSELECT * \nFROM "MYSQL"."sportsdata"."player_partitioned" \nWHERE first_name LIKE '%Aar%'\n</code></pre>\n<p>The <strong>EXPLAIN ANALYZE</strong> output shows that the query performs the table scan (scanning the table <code>player_partitioned</code>, which contains 5,157 records) for all the records even though the WHERE clause only has 30 records matching the condition %Aar%. Therefore, the data scan shows the complete table size even with the WHERE clause.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/4c666019028a46ffa2c3fd08173b486c_image.png\" alt=\"image.png\" /></p>\n<p>We can optimize the same query by selecting only the required columns:</p>\n<p>SQL</p>\n<pre><code class=\"lang-\">EXPLAIN ANALYZE \nSELECT sport_team_id,\nfull_name \nFROM "MYSQL"."sportsdata"."player_partitioned" \nWHERE first_name LIKE '%Aar%'\n</code></pre>\n<p>From the EXPLAIN ANALYZE output, we can observe that the connector supports the projection filter pushdown, because we select only two columns. This brought the data scan size down to half of the table size.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/3ea6826d3f45463b835924fbc2bc2c00_image.png\" alt=\"image.png\" /></p>\n<h4><a id=\"OR_statement_in_filter_predicates_360\"></a><strong>OR statement in filter predicates</strong></h4>\n<p>We start with the following query to demonstrate using the OR statement in filter predicates:</p>\n<p>SQL</p>\n<pre><code class=\"lang-\">SELECT id,\nfirst_name \nFROM "MYSQL"."sportsdata"."player_partitioned" \nWHERE first_name = 'Aaron' OR id ='1.0'\n</code></pre>\n<p>We use EXPLAIN ANALYZE with the preceding query as follows:</p>\n<p>SQL</p>\n<pre><code class=\"lang-\">EXPLAIN ANALYZE \nSELECT * \nFROM \n"MYSQL"."sportsdata"."player_partitioned" \nWHERE first_name = 'Aaron' OR id ='1.0'\n</code></pre>\n<p>Similar to the LIKE statement, the following output shows that query scanned the table instead of pushing down to only the records that matched the WHERE clause. This query outputs only 16 records, but the data scan indicates a complete scan.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/78f7b8f4f4d94ab0823273e44e5291cb_image.png\" alt=\"image.png\" /></p>\n<h3><a id=\"Pushdown_optimization_for_the_Athena_connector_for_DynamoDB_387\"></a><strong>Pushdown optimization for the Athena connector for DynamoDB</strong></h3>\n<p>For our example using the DynamoDB connector, we use the following data:</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/040d6e902d04498c8323ed96b6020879_image.png\" alt=\"image.png\" /></p>\n<p>Let’s test the filter predicate and project filter operation for our DynamoDB table using the following query. This query tries to get all the events and sports for a given location. We use EXPLAIN ANALYZE for the query as follows:</p>\n<p>SQL</p>\n<pre><code class=\"lang-\">EXPLAIN ANALYZE \nSELECT EventId,\nSport \nFROM "DDB"."default"."sportseventinfo" \nWHERE Location = 'Chase Field'\n</code></pre>\n<p>The output of EXPLAIN ANALYZE shows that the filter predicate retrieved only 21 records, and the project filter selected only two columns to push down to the source. Therefore, the data scan for this query is less than the table size.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/e02c994e8d614f5994ba3967ee080e17_image.png\" alt=\"image.png\" /></p>\n<p>Now let’s see where filter predicate pushdown doesn’t work. In the WHERE clause, if you apply the TRIM() function to the Location column and then filter, predicate pushdown optimization doesn’t apply, but we still see the projection filter optimization, which does apply. See the following code:</p>\n<p>SQL</p>\n<pre><code class=\"lang-\">EXPLAIN ANALYZE \nSELECT EventId,\nSport \nFROM "DDB"."default"."sportseventinfo" \nWHERE trim(Location) = 'Chase Field'\n</code></pre>\n<p>The output of EXPLAIN ANALYZE for this query shows that the query scans all the rows but is still limited to only two columns, which shows that the filter predicate doesn’t work when the TRIM function is applied.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/a2c36627eeaa4b3581f62d8d5e44c725_image.png\" alt=\"image.png\" /></p>\n<p>We’ve seen from the preceding examples that the Athena data source connector for Amazon RDS for MySQL and DynamoDB do support filter predicates and projection predicates for pushdown optimization, but we also saw that operations such as LIKE,OR, and TRIM when used in the filter predicate don’t support pushdowns to the source. Therefore, if you encounter unexplained charges in your federated Athena query, we recommend using EXPLAIN ANALYZE with the query and determine whether your Athena connector supports the pushdown operation or not.</p>\n<p>Please note that running EXPLAIN ANALYZE incurs cost because it scans the data.</p>\n<h3><a id=\"Conclusion_428\"></a><strong>Conclusion</strong></h3>\n<p>In this post, we showcased how to use EXPLAIN and EXPLAIN ANALYZE to analyze Athena SQL queries for data sources on AWS S3 and Athena federated SQL query for data source like DynamoDB and Amazon RDS for MySQL. You can use this as an example to optimize queries which would also result in cost savings.</p>\n<h4><a id=\"About_the_Authors_432\"></a><strong>About the Authors</strong></h4>\n<p><img src=\"https://dev-media.amazoncloud.cn/cffa5dbc6e854cd2820cead81b4b7c58_image.png\" alt=\"image.png\" /></p>\n<p><strong>Nishchai JM</strong> is an Analytics Specialist Solutions Architect at Amazon Web services. He specializes in building Big-data applications and help customer to modernize their applications on Cloud. He thinks Data is new oil and spends most of his time in deriving insights out of the Data.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/4551c1e0de4142d0aaf14f0361812d53_image.png\" alt=\"image.png\" /></p>\n<p><strong>Varad Ram</strong> is Senior Solutions Architect in Amazon Web Services. He likes to help customers adopt to cloud technologies and is particularly interested in artificial intelligence. He believes deep learning will power future technology growth. In his spare time, he like to be outdoor with his daughter and son.</p>\n"}

Optimize Federated Query Performance using EXPLAIN and EXPLAIN ANALYZE in Amazon Athena

海外精选

海外精选的内容汇集了全球优质的亚马逊云科技相关技术内容。同时,内容中提到的“AWS”

是 “Amazon Web Services” 的缩写,在此网站不作为商标展示。

0

0 0

0亚马逊云科技解决方案 基于行业客户应用场景及技术领域的解决方案

联系亚马逊云科技专家

目录

亚马逊云科技解决方案 基于行业客户应用场景及技术领域的解决方案

联系亚马逊云科技专家

亚马逊云科技解决方案

基于行业客户应用场景及技术领域的解决方案

联系专家

0

目录

分享

分享 点赞

点赞 收藏

收藏 目录

目录立即关注

亚马逊云开发者

公众号

User Group

公众号

亚马逊云科技

官方小程序

“AWS” 是 “Amazon Web Services” 的缩写,在此网站不作为商标展示。

立即关注

亚马逊云开发者

公众号

User Group

公众号

亚马逊云科技

官方小程序

“AWS” 是 “Amazon Web Services” 的缩写,在此网站不作为商标展示。

立即关注

亚马逊云开发者

公众号

User Group

公众号

亚马逊云科技

官方小程序

“AWS” 是 “Amazon Web Services” 的缩写,在此网站不作为商标展示。