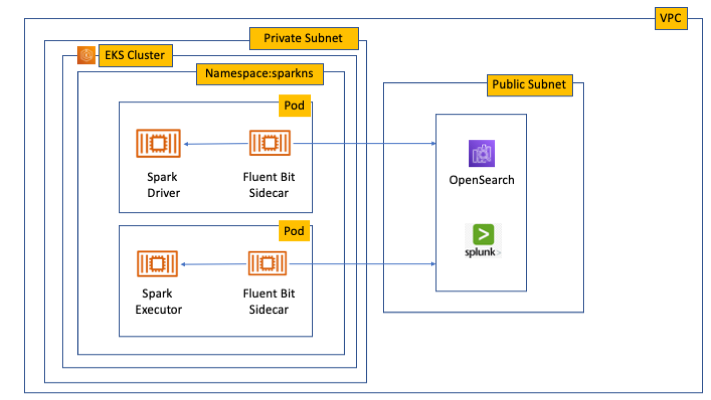

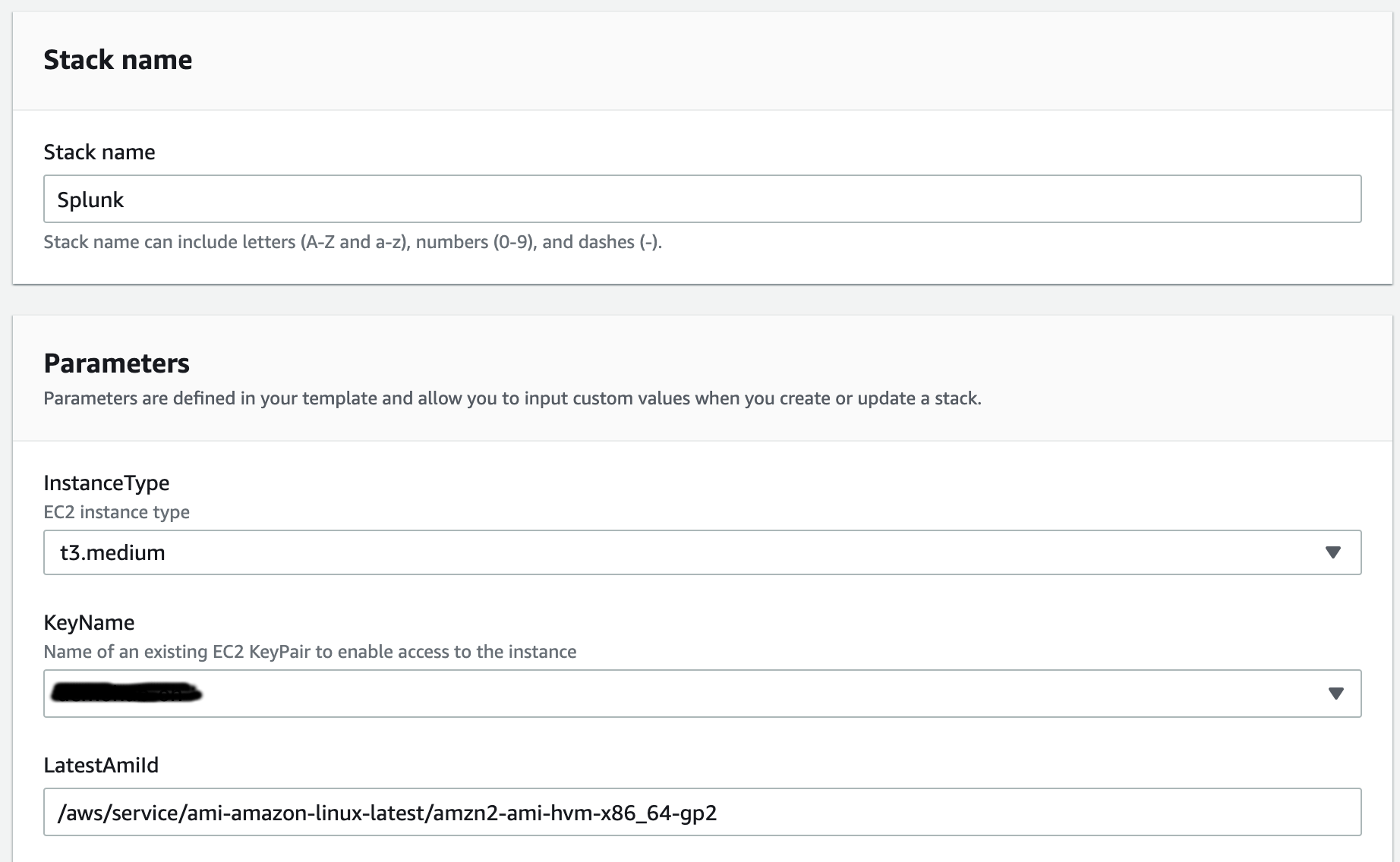

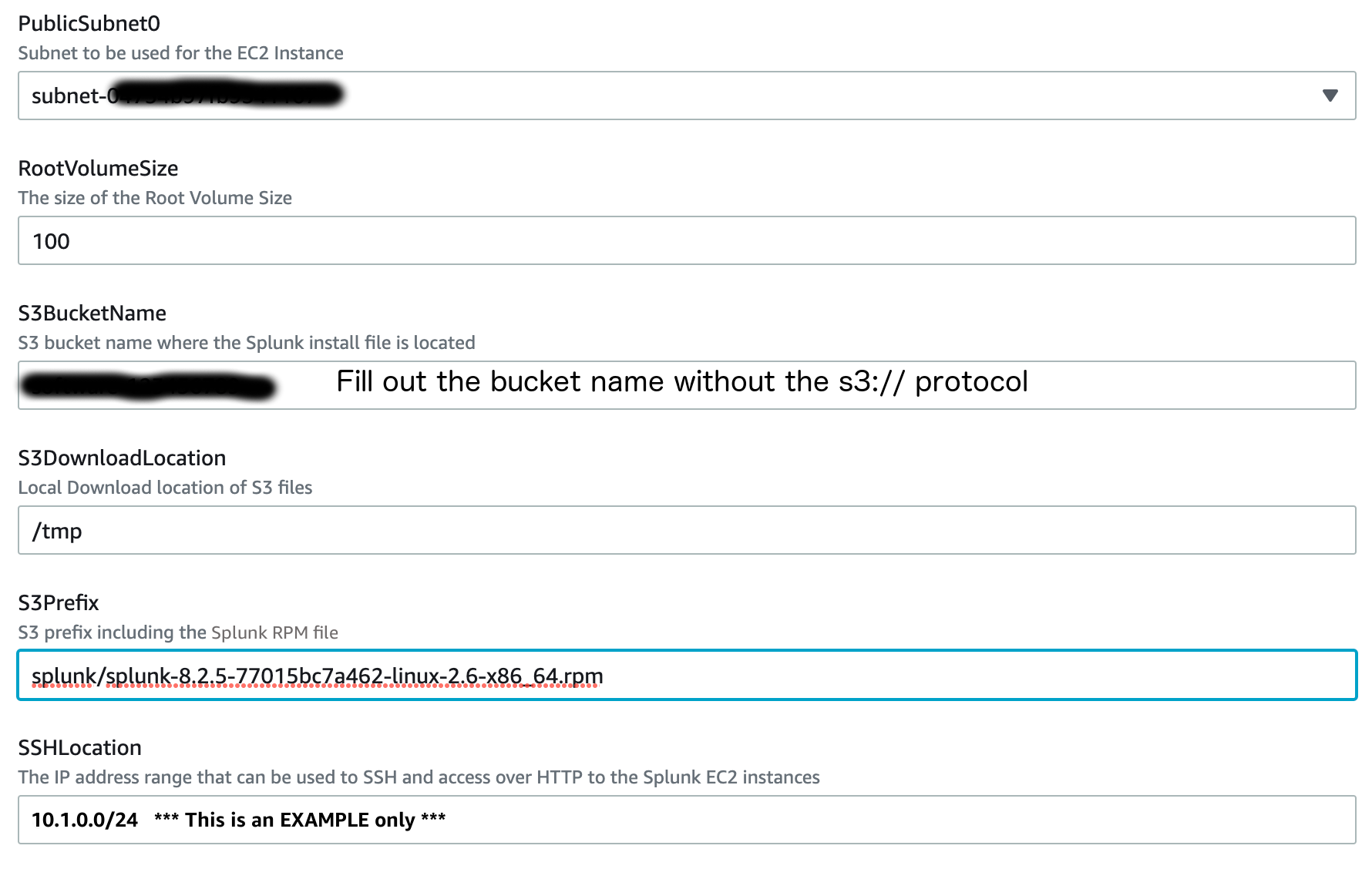

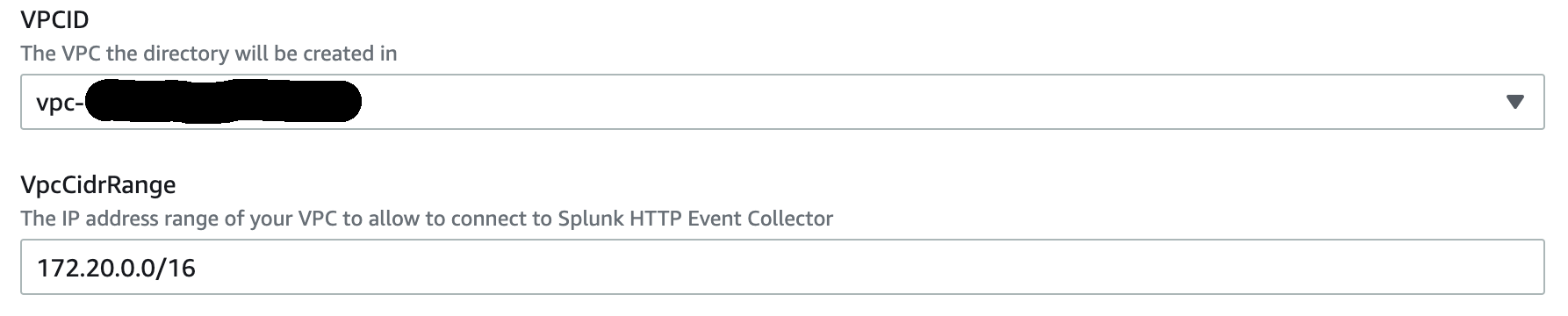

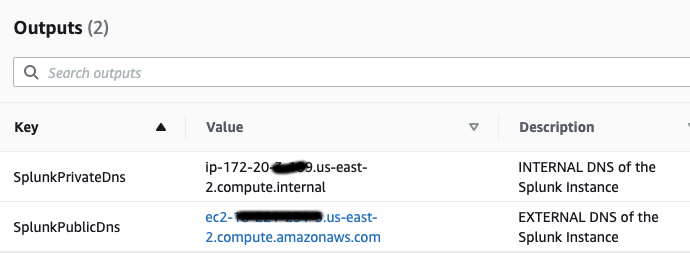

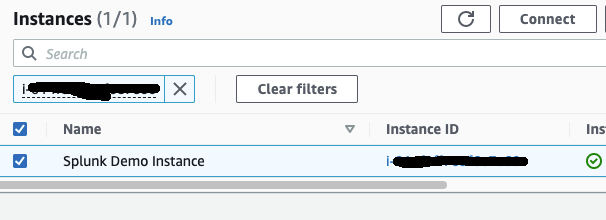

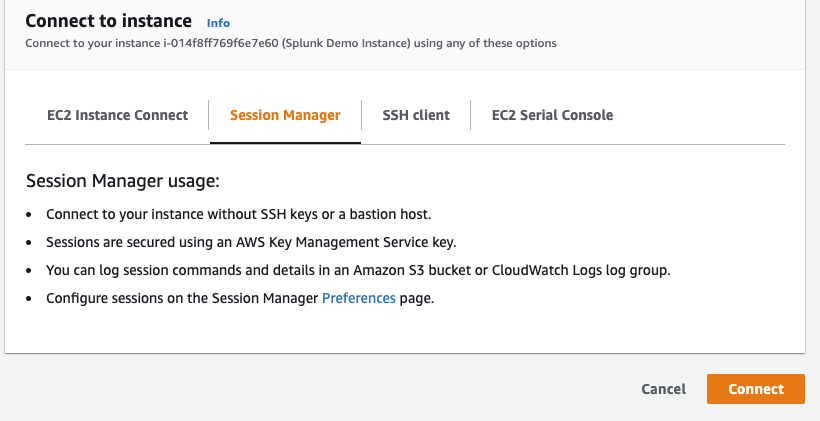

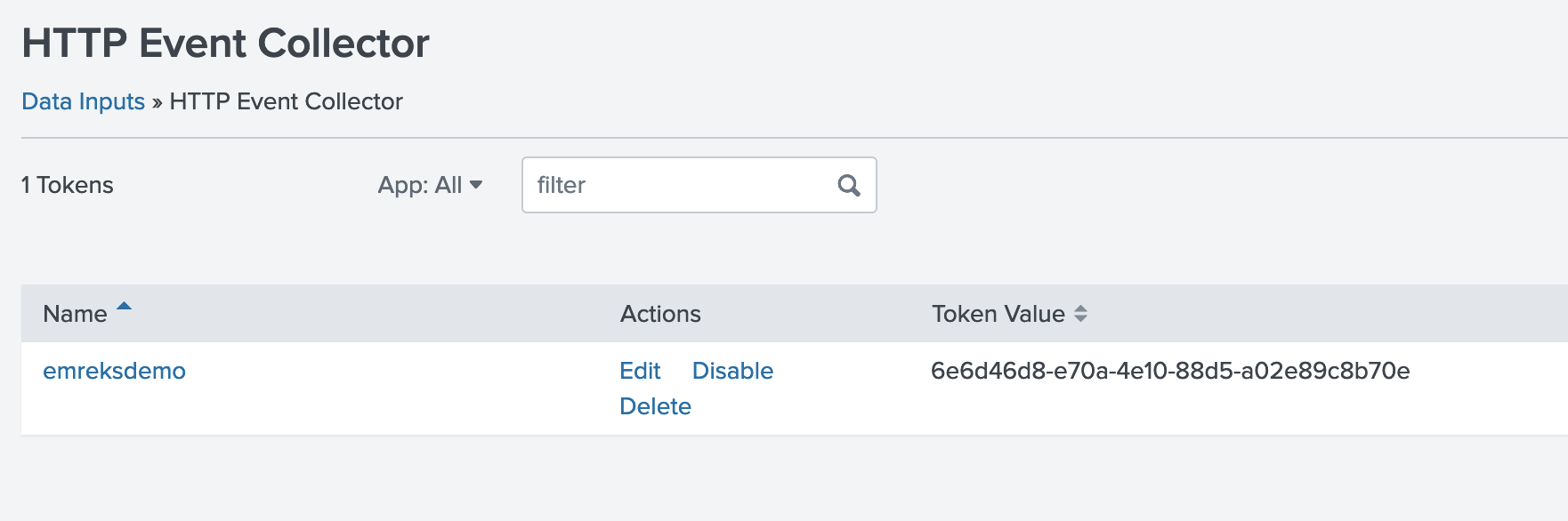

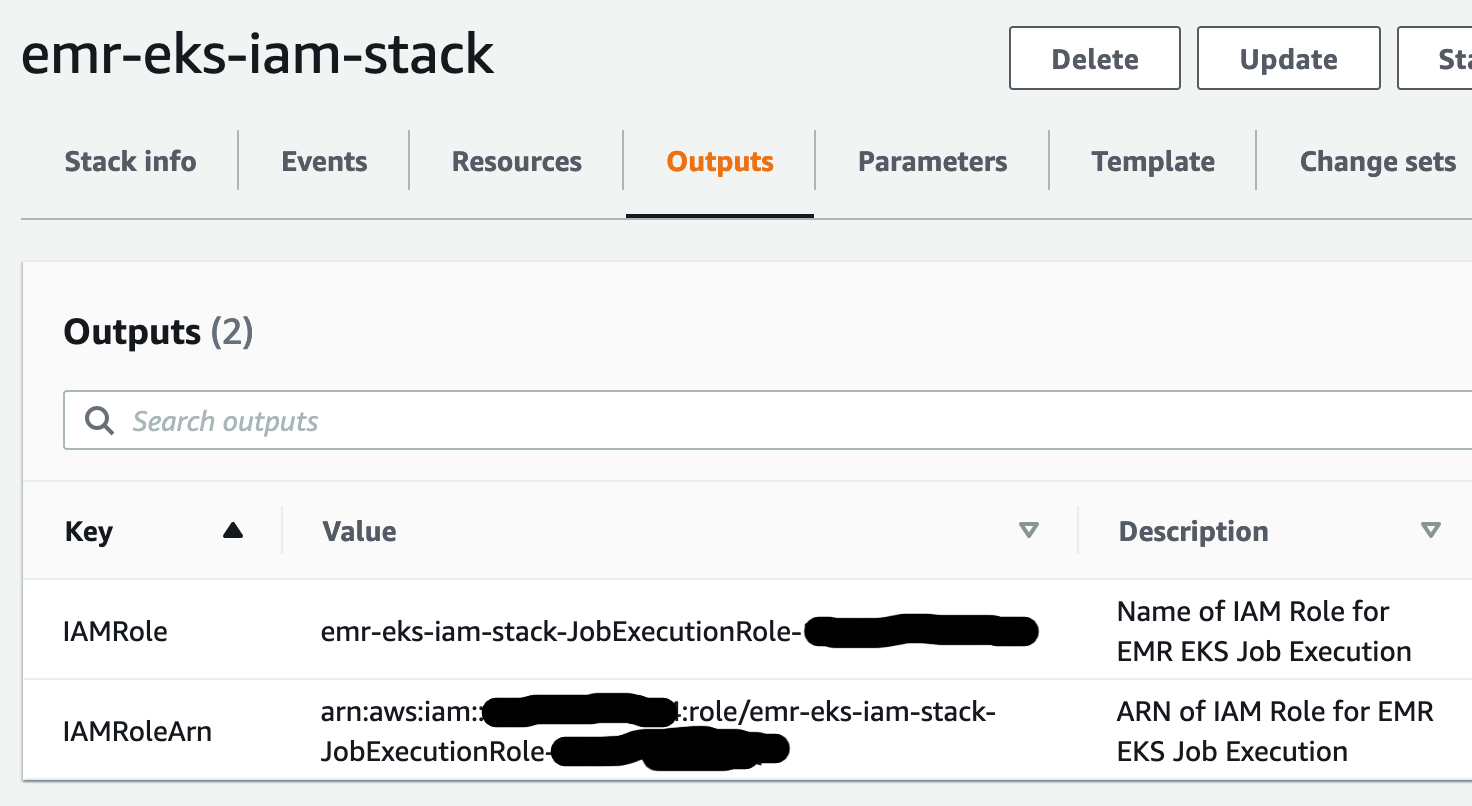

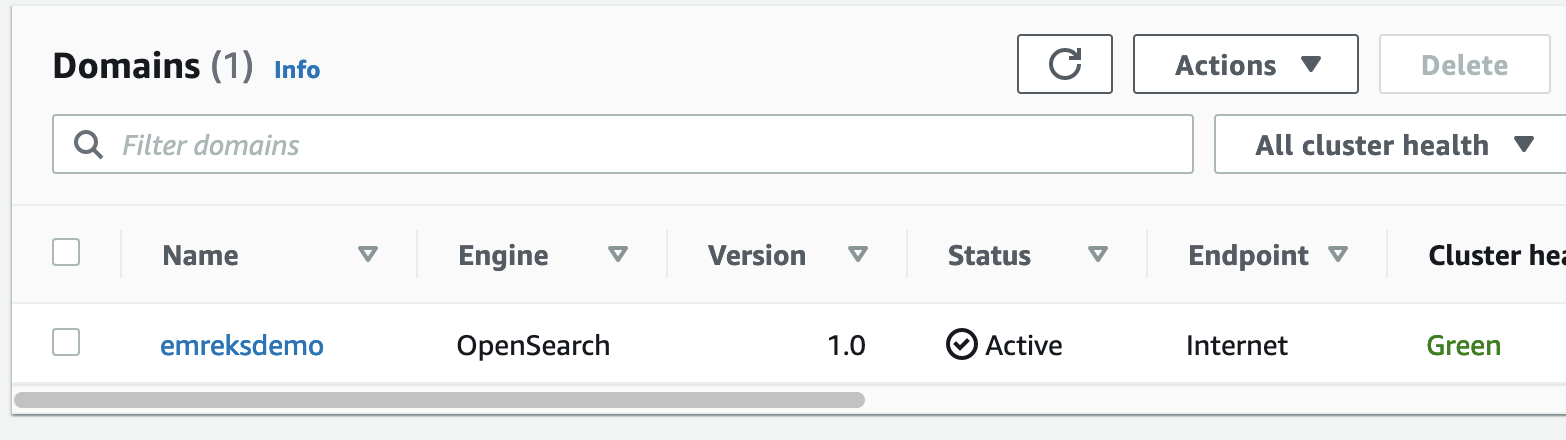

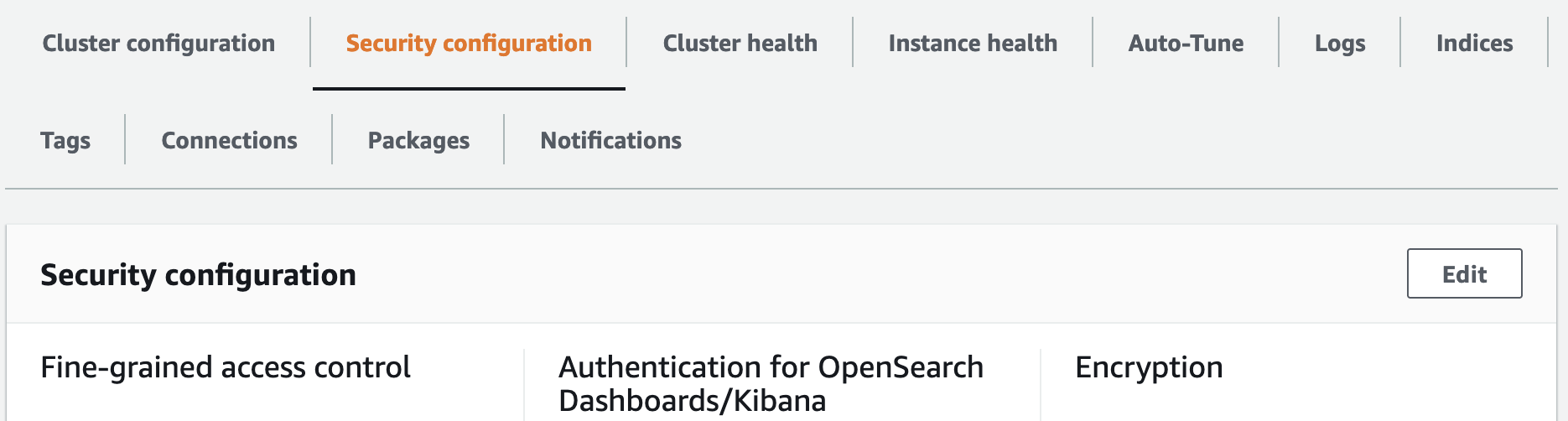

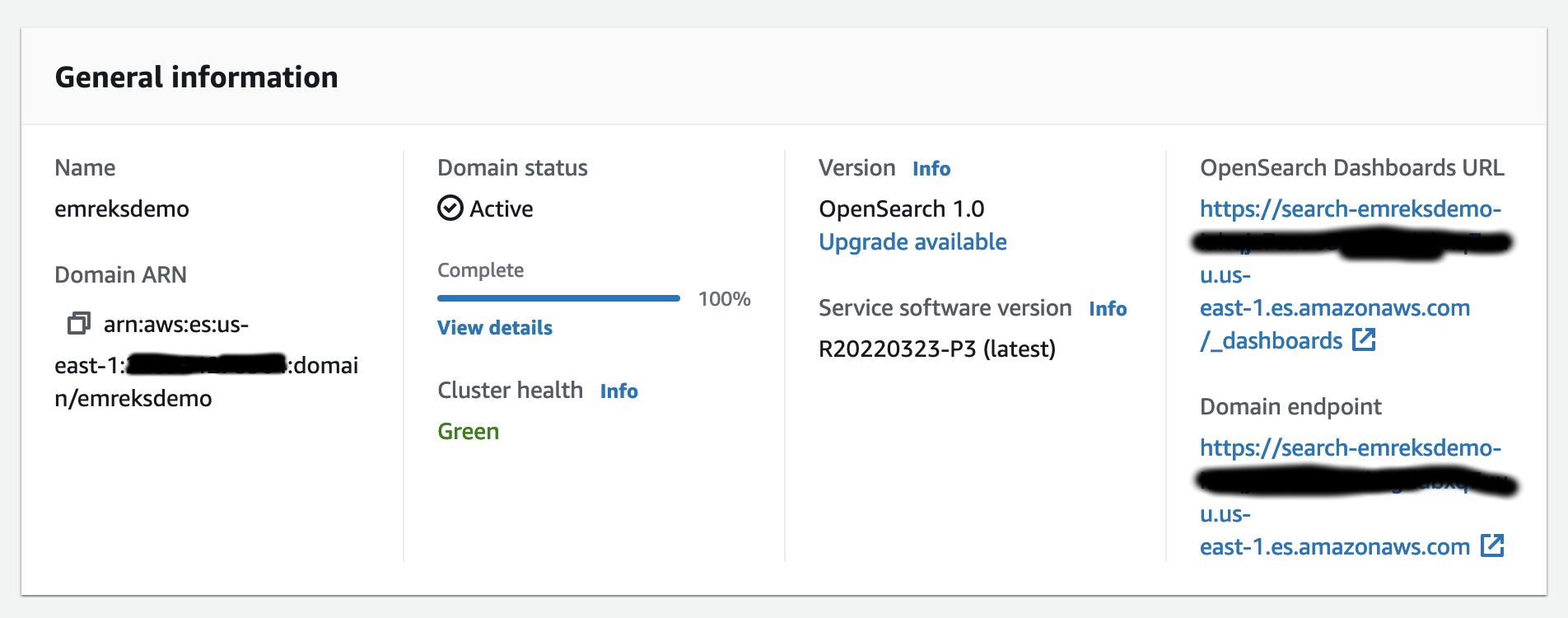

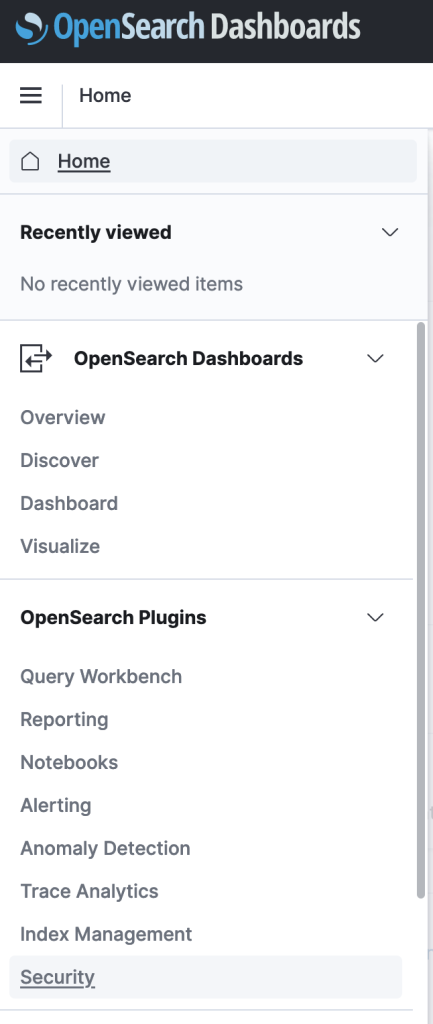

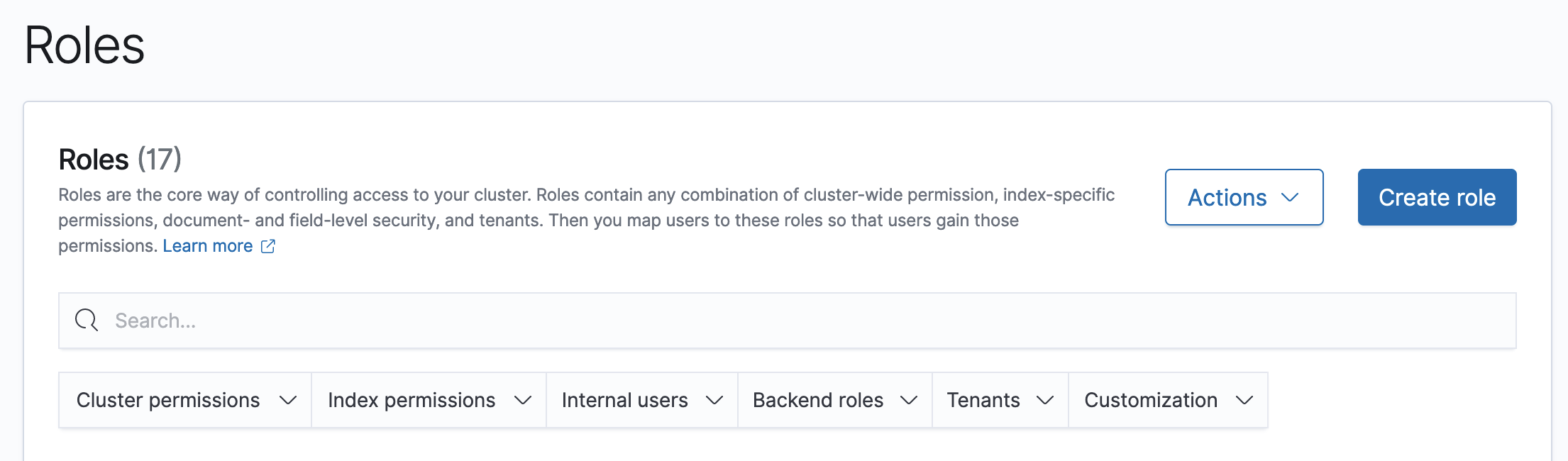

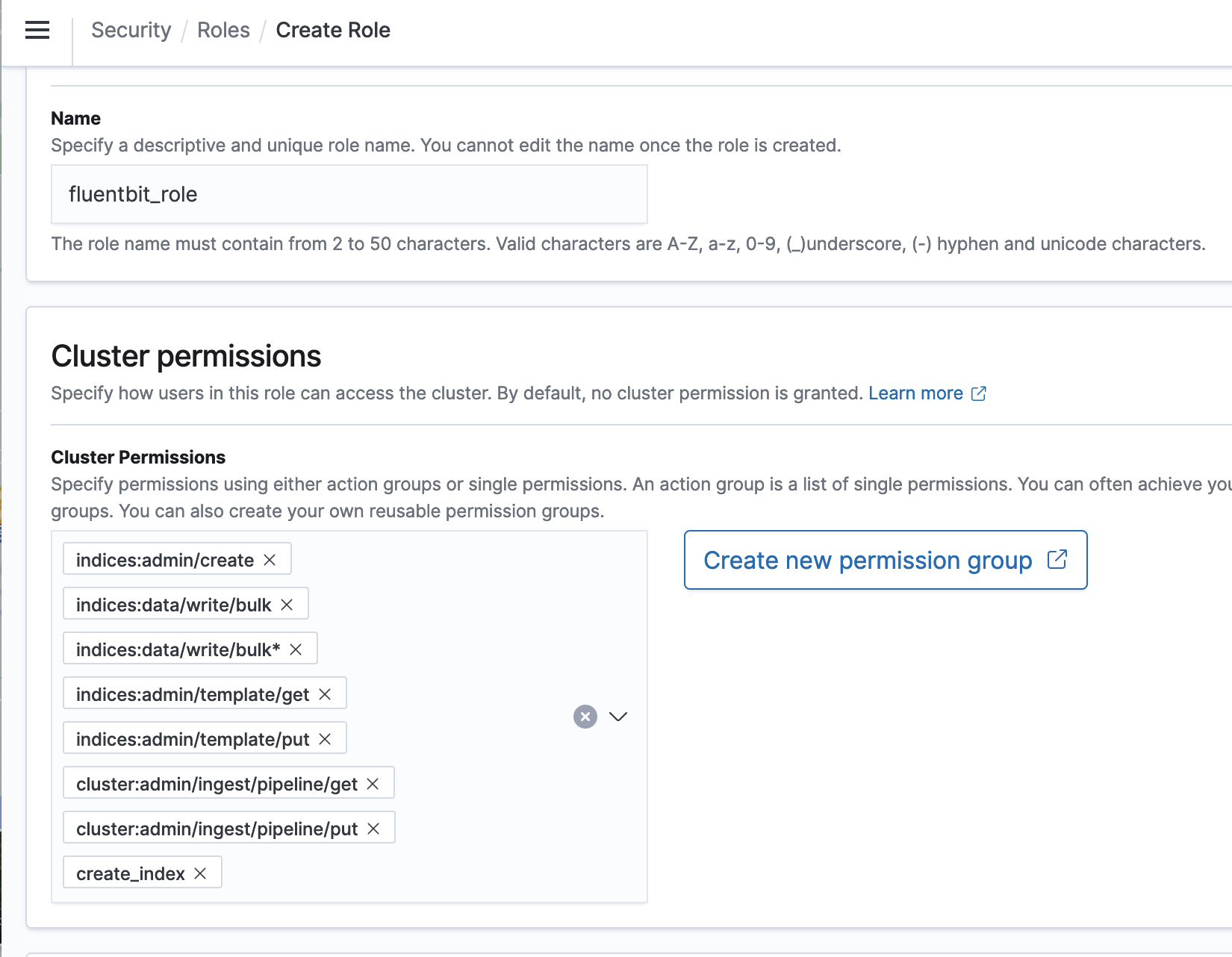

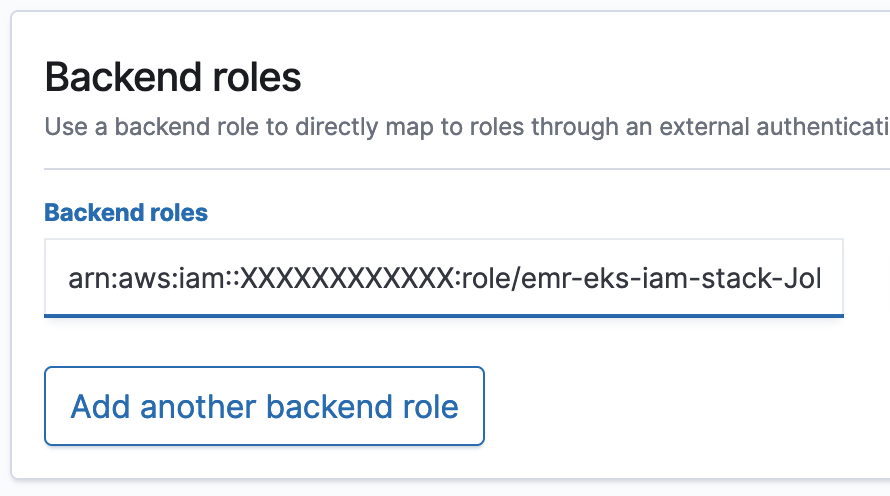

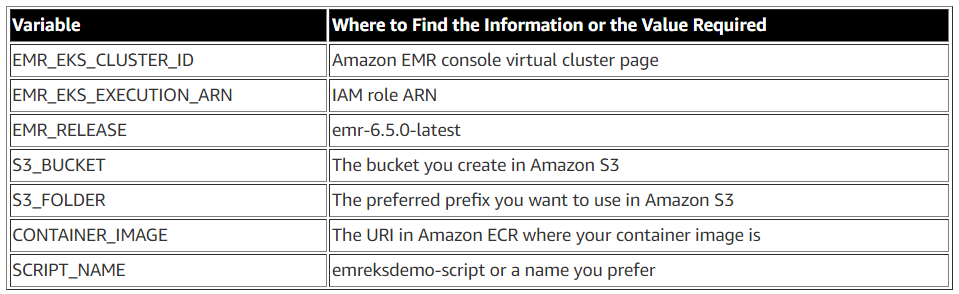

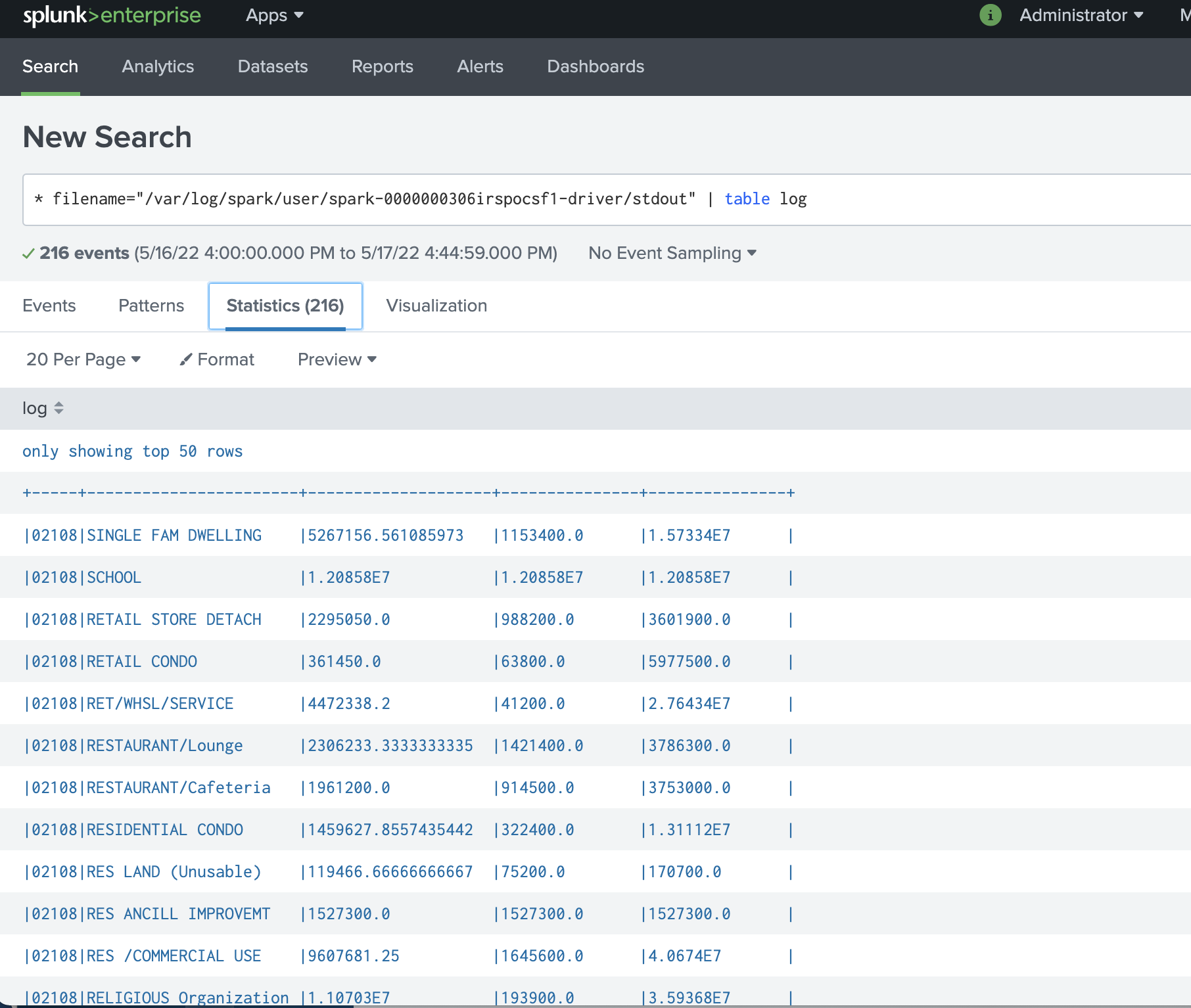

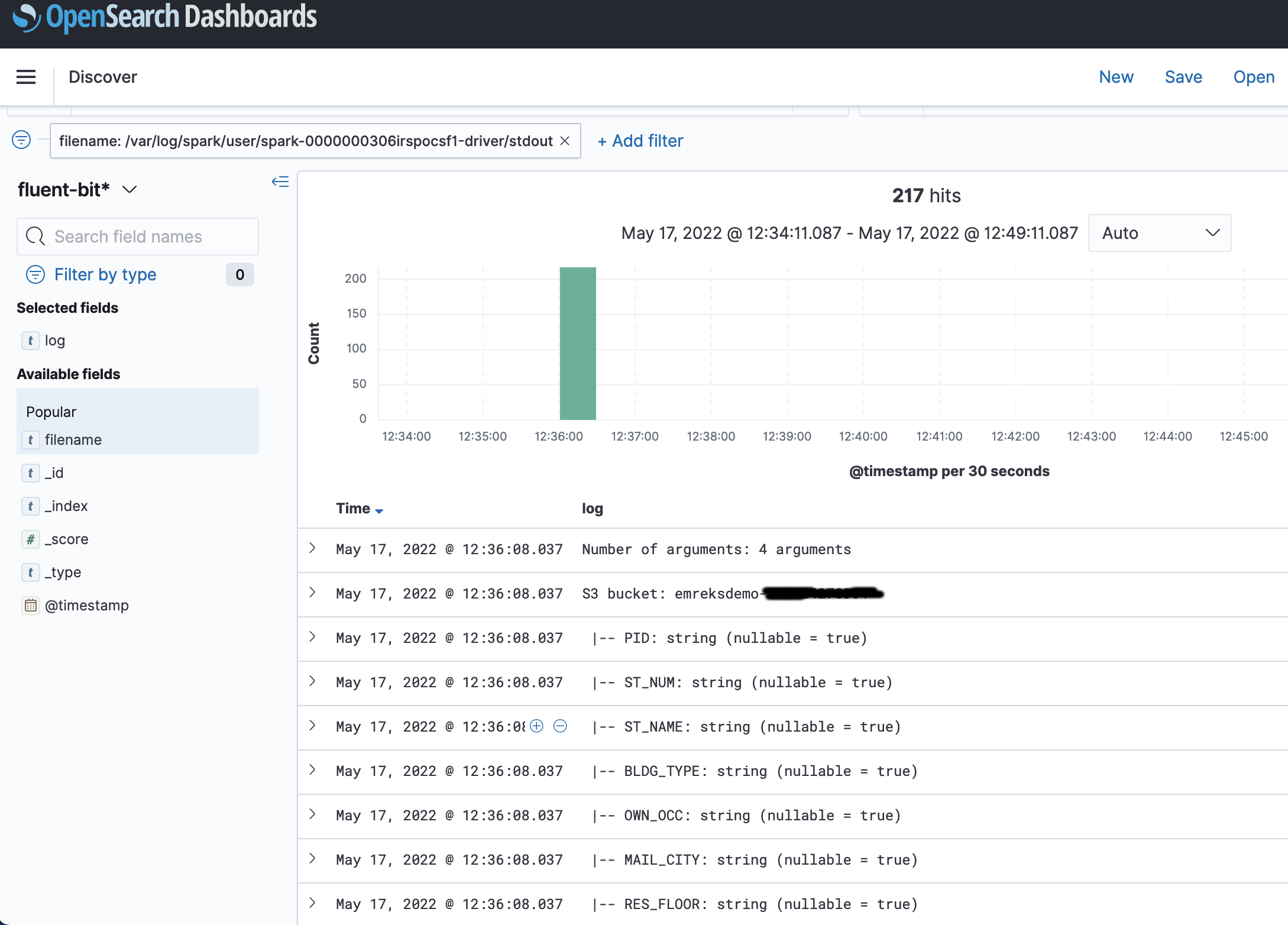

{"value":"Spark jobs running on [Amazon EMR on EKS](https://docs.aws.amazon.com/emr/latest/EMR-on-EKS-DevelopmentGuide/emr-eks.html) generate logs that are useful for identifying issues with Spark processes and for viewing Spark output. You can access these logs from a variety of sources. On the [Amazon EMR](http://aws.amazon.com/emr) virtual cluster console, you can access logs from the Spark History UI. You also have the flexibility to push logs into an [Amazon Simple Storage Service](http://aws.amazon.com/s3) (Amazon S3) bucket or [Amazon CloudWatch Logs](http://aws.amazon.com/cloudwatch). With each method, the logs are linked to the specific job in question. A common log management practice in DevOps culture is to centralize logging by forwarding logs to an enterprise log aggregation system like Splunk or [Amazon OpenSearch Service](https://aws.amazon.com/opensearch-service/) (successor to Amazon Elasticsearch Service). With all the applicable log data in one place, you can identify key trends, anomalies, and correlated events, troubleshoot problems faster, and notify the appropriate people in a timely fashion.\n\nEMR on EKS Spark logs are generated by Spark and can be accessed through the Kubernetes API and the kubectl CLI. It’s possible to install log forwarding agents in the [Amazon Elastic Kubernetes Service](https://aws.amazon.com/eks/) (Amazon EKS) cluster to forward all Kubernetes logs, including Spark logs. However, this can become expensive at scale because you also forward Kubernetes information that may not matter to Spark users. In addition, from a security point of view, the Spark user may not have access to the EKS cluster logs or to kubectl.\n\nTo solve this problem, this post proposes using pod templates to create a sidecar container alongside the Spark job pods. 
The sidecar containers can access the logs contained in the Spark pods and forward them to the log aggregator. This approach lets you manage the logs separately from the EKS cluster, and it uses few resources because the sidecar container runs only for the lifetime of the Spark job.\n\n\n#### **Implementing Fluent Bit as a sidecar container**\n\n\nFluent Bit is a lightweight, highly scalable, and high-speed logging and metrics processor and log forwarder. It collects event data from any source, enriches that data, and sends it to any destination. Its lightweight and efficient design, coupled with its many features, makes it attractive to those working in the cloud and in containerized environments. It has been deployed extensively, including in large and complex environments. Fluent Bit has zero dependencies and requires only about 650 KB of memory to operate, compared to Fluentd, which needs about 40 MB. This makes it an ideal option for forwarding logs generated by Spark jobs.\n\nWhen you submit a job to EMR on EKS, there are at least two Spark pods: the Spark driver and the Spark executor. The number of Spark executor pods depends on your job submission configuration: if you set ```spark.executor.instances``` to more than one, you get the corresponding number of Spark executor pods. The goal here is to run Fluent Bit as a sidecar container in the Spark driver and executor pods, as shown in the following figure. The Fluent Bit sidecar container reads the specified logs in the Spark driver and executor pods and forwards them directly to the target log aggregator.\n\n\n\n\n#### **Pod templates in EMR on EKS**\n\n\nA Kubernetes pod is a group of one or more containers with shared storage, network resources, and a specification for how to run the containers. Pod templates are specifications for creating pods. 
They’re part of the desired state of the workload resources used to run the application. Pod template files can define driver or executor pod configurations that aren’t supported in standard Spark configuration. However, Spark is opinionated about certain pod configurations, and Spark always overwrites some values in the pod template. A pod template only lets Spark start from a template pod instead of an empty pod during the pod building process. Pod templates are enabled in EMR on EKS when you configure the Spark properties ```spark.kubernetes.driver.podTemplateFile``` and ```spark.kubernetes.executor.podTemplateFile```. Spark downloads these pod templates to construct the driver and executor pods.\n\n\n#### **Forward logs generated by Spark jobs in EMR on EKS**\n\n\nYou need a log aggregation system, such as Amazon OpenSearch Service or Splunk, that can accept the logs forwarded by the Fluent Bit sidecar containers. If you don’t have one, this post provides scripts to help you launch an Amazon OpenSearch Service domain or a Splunk instance on an [Amazon Elastic Compute Cloud](http://aws.amazon.com/ec2) (Amazon EC2) instance.\n\nWe use several services to create and configure EMR on EKS. We use an [AWS Cloud9](https://aws.amazon.com/cloud9/) workspace to run all the scripts and to configure the EKS cluster. Because the sample job script requires Python libraries that are absent from the generic EMR images, we use [Amazon Elastic Container Registry](https://aws.amazon.com/ecr/) (Amazon ECR) to store a customized EMR container image.\n\n\n##### **Create an Amazon Web Services Cloud9 workspace**\n\n\nThe first step is to launch and configure the Amazon Web Services Cloud9 workspace by following the instructions in [Create a Workspace](https://www.eksworkshop.com/020_prerequisites/workspace/) in the EKS Workshop. 
After you create the workspace, set up the [Amazon Web Services Identity and Access Management](http://aws.amazon.com/iam) (IAM) resources: [create an IAM role](https://www.eksworkshop.com/020_prerequisites/iamrole/) for the workspace, [attach the role](https://www.eksworkshop.com/020_prerequisites/ec2instance/) to the workspace, and [update the workspace](https://www.eksworkshop.com/020_prerequisites/workspaceiam/) IAM settings.\n\n\n##### **Prepare the Amazon Web Services Cloud9 workspace**\n\n\nClone the [GitHub repository](https://github.com/aws-samples/aws-emr-eks-log-forwarding) and run the following script to prepare the Amazon Web Services Cloud9 workspace for installing and configuring Amazon EKS and EMR on EKS. The shell script ```prepare_cloud9.sh``` installs all the components that the Amazon Web Services Cloud9 workspace needs to build and manage the EKS cluster. These include the kubectl command line tool, the eksctl CLI tool, and jq. The script also updates the [Amazon Web Services Command Line Interface](http://aws.amazon.com/cli) (Amazon Web Services CLI).\n\nBash\n```\n$ sudo yum -y install git\n$ cd ~\n$ git clone https://github.com/aws-samples/aws-emr-eks-log-forwarding.git\n$ cd aws-emr-eks-log-forwarding\n$ cd emreks\n$ bash prepare_cloud9.sh\n```\n\nAll the scripts and configuration files needed to run this solution are in the cloned GitHub repository.\n\n\n##### **Create a key pair**\n\n\nThis deployment requires an EC2 key pair to create the EKS cluster. If you already have an EC2 key pair, you can use it. 
Otherwise, you can [create a key pair](https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/create-key-pairs.html).\n\n\n##### **Install Amazon EKS and EMR on EKS**\n\n\nAfter you configure the Amazon Web Services Cloud9 workspace, run the following deployment script in the same folder (```emreks```):\n\nBash\n```\n$ bash deploy_eks_cluster_bash.sh \nDeployment Script -- EMR on EKS\n-----------------------------------------------\n\nPlease provide the following information before deployment:\n1. Region (If your Cloud9 desktop is in the same region as your deployment, you can leave this blank)\n2. Account ID (If your Cloud9 desktop is running in the same Account ID as where your deployment will be, you can leave this blank)\n3. Name of the S3 bucket to be created for the EMR S3 storage location\nRegion: [xx-xxxx-x]: < Press enter for default or enter region > \nAccount ID [xxxxxxxxxxxx]: < Press enter for default or enter account # > \nEC2 Public Key name: < Provide your key pair name here >\nDefault S3 bucket name for EMR on EKS (do not add s3://): < bucket name >\nBucket created: XXXXXXXXXXX ...\nDeploying CloudFormation stack with the following parameters...\nRegion: xx-xxxx-x | Account ID: xxxxxxxxxxxx | S3 Bucket: XXXXXXXXXXX\n\n...\n\nEKS Cluster and Virtual EMR Cluster have been installed.\n```\n\nThe last line indicates that the installation was successful.\n\n\n#### **Log aggregation options**\n\n\nThis post covers two log aggregation options: Splunk Enterprise and Amazon OpenSearch Service.\n\n\n##### **Option 1: Install Splunk Enterprise**\n\n\nTo manually install Splunk on an EC2 instance, complete the following steps:\n\n1. [Launch an EC2 instance](https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ec2-launch-instance-wizard.html).\n2. [Install Splunk](https://docs.splunk.com/Documentation/Splunk/8.2.6/Installation/Beforeyouinstall).\n3. 
Configure the EC2 instance [security group](https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/security-group-rules-reference.html) to permit access to ports 22, 8000, and 8088.\n \nThis post, however, provides an automated way to install Splunk on an EC2 instance:\n\n1. Download the RPM install file and upload it to an accessible Amazon S3 location.\n2. Upload the ```splunk_cf.yaml``` CloudFormation template to Amazon Web Services CloudFormation.\n3. Provide the necessary parameters, as shown in the following screenshots.\n4. Choose **Next** and complete the steps to create your stack.\n\n\n\n\n\n\n\nAlternatively, run an Amazon Web Services CLI command like the following:\n\nBash\n```\naws cloudformation create-stack \\\n--stack-name \"splunk\" \\\n--template-body file://splunk_cf.yaml \\\n--parameters ParameterKey=KeyName,ParameterValue=\"< Name of EC2 Key Pair >\" \\\n ParameterKey=InstanceType,ParameterValue=\"t3.medium\" \\\n ParameterKey=LatestAmiId,ParameterValue=\"/aws/service/ami-amazon-linux-latest/amzn2-ami-hvm-x86_64-gp2\" \\\n ParameterKey=VPCID,ParameterValue=\"vpc-XXXXXXXXXXX\" \\\n ParameterKey=PublicSubnet0,ParameterValue=\"subnet-XXXXXXXXX\" \\\n ParameterKey=SSHLocation,ParameterValue=\"< CIDR Range for SSH access >\" \\\n ParameterKey=VpcCidrRange,ParameterValue=\"172.20.0.0/16\" \\\n ParameterKey=RootVolumeSize,ParameterValue=\"100\" \\\n ParameterKey=S3BucketName,ParameterValue=\"< S3 Bucket Name >\" \\\n ParameterKey=S3Prefix,ParameterValue=\"splunk/splunk-8.2.5-77015bc7a462-linux-2.6-x86_64.rpm\" \\\n ParameterKey=S3DownloadLocation,ParameterValue=\"/tmp\" \\\n--region < region > \\\n--capabilities CAPABILITY_IAM\n```\n\n5. After you build the stack, navigate to the stack’s **Outputs** tab on the Amazon Web Services CloudFormation console and note the internal and external DNS names for the Splunk instance.\n\nYou use these later to configure the Splunk instance and log forwarding.\n\n\n\n6. 
To configure Splunk, go to the **Resources** tab for the CloudFormation stack and locate the physical ID of ```EC2Instance```.\n7. Choose that link to go to the specific EC2 instance.\n8. Select the instance and choose **Connect**.\n\n\n\n9. On the **Session Manager** tab, choose **Connect**.\n\n\n\nYou’re redirected to the instance’s shell.\n\n10. Start Splunk and create the administrator account as follows:\n\nBash\n```\n$ sudo /opt/splunk/bin/splunk start --accept-license\n…\nPlease enter an administrator username: admin\nPassword must contain at least:\n * 8 total printable ASCII character(s).\nPlease enter a new password: \nPlease confirm new password:\n…\nDone\n [ OK ]\n\nWaiting for web server at http://127.0.0.1:8000 to be available......... Done\nThe Splunk web interface is at http://ip-xx-xxx-xxx-x.us-east-2.compute.internal:8000\n```\n\n11. Open the Splunk web interface using the ```SplunkPublicDns``` value from the stack outputs (for example, ```http://ec2-xx-xxx-xxx-x.us-east-2.compute.amazonaws.com:8000```). Note the port number, 8000.\n12. Log in with the user name and password you provided.\n\n\n\n\n##### **Configure HTTP Event Collector**\n\n\nTo enable Splunk to receive logs from Fluent Bit, configure the HTTP Event Collector data input:\n\n1. Go to **Settings** and choose **Data inputs**.\n2. Choose **HTTP Event Collector**.\n3. Choose **Global Settings**.\n4. Select **Enabled**, keep port number 8088, and then choose **Save**.\n5. Choose **New Token**.\n6. For **Name**, enter a name (for example, ```emreksdemo```).\n7. Choose **Next**.\n8. For **Available item(s) for Indexes**, add at least the main index.\n9. Choose **Review** and then **Submit**.\n10. 
In the list of HTTP Event Collector tokens, copy the token value for ```emreksdemo```.\n\nYou use it when configuring the Fluent Bit output.\n\n\n\n\n##### **Option 2: Set up Amazon OpenSearch Service**\n\n\nYour other log aggregation option is Amazon OpenSearch Service.\n\n**Provision an OpenSearch Service domain**\n\nProvisioning an OpenSearch Service domain is straightforward. In this post, we provide a simple script and configuration to provision a basic domain. To do it yourself, refer to [Creating and managing Amazon OpenSearch Service domains](https://docs.aws.amazon.com/opensearch-service/latest/developerguide/createupdatedomains.html).\n\nBefore you start, get the ARN of the IAM role that you use to run the Spark jobs. If you created the EKS cluster with the provided script, go to the CloudFormation stack ```emr-eks-iam-stack```. On the **Outputs** tab, locate the ```IAMRoleArn``` output and copy this ARN. You modify this IAM role later, after you create the OpenSearch Service domain.\n\n\n\nIf you’re using the provided ```opensearch.sh``` installer, modify the file before you run it.\n\nFrom the root folder of the GitHub repository, change to the ```opensearch``` directory and open ```opensearch.sh``` in vi (or your preferred editor):\n\nBash\n```\n[../aws-emr-eks-log-forwarding] $ cd opensearch\n[../aws-emr-eks-log-forwarding/opensearch] $ vi opensearch.sh\n```\n\nConfigure ```opensearch.sh``` to fit your environment, for example:\n\nBash\n```\n# Name of the Amazon OpenSearch Service domain\nexport ES_DOMAIN_NAME=\"emreksdemo\"\n\n# OpenSearch engine version\nexport ES_VERSION=\"OpenSearch_1.0\"\n\n# Instance Type\nexport INSTANCE_TYPE=\"t3.small.search\"\n\n# OpenSearch Dashboards admin user\nexport ES_DOMAIN_USER=\"emreks\"\n\n# OpenSearch Dashboards admin password\nexport ES_DOMAIN_PASSWORD='< ADD YOUR PASSWORD >'\n\n# Region\nexport REGION='us-east-1'\n```\n\nRun the script:\n\nBash\n```\n[../aws-emr-eks-log-forwarding/opensearch] $ bash opensearch.sh\n```\n\n\n##### 
**Configure your OpenSearch Service domain**\n\n\nAfter your OpenSearch Service domain is active, make the following configuration changes so that Amazon OpenSearch Service can ingest the logs:\n\n1. On the Amazon OpenSearch Service console, on the **Domains** page, choose your domain.\n\n\n\n2. On the **Security configuration** tab, choose **Edit**.\n\n\n\n3. For **Access Policy**, select **Only use fine-grained access control**.\n4. Choose **Save changes**.\n\nThe access policy should look like the following code:\n\nJson\n```\n{\n  \"Version\": \"2012-10-17\",\n  \"Statement\": [\n    {\n      \"Effect\": \"Allow\",\n      \"Principal\": {\n        \"AWS\": \"*\"\n      },\n      \"Action\": \"es:*\",\n      \"Resource\": \"arn:aws:es:xx-xxxx-x:xxxxxxxxxxxx:domain/emreksdemo/*\"\n    }\n  ]\n}\n```\n\n5. When the domain is active again, copy the domain ARN.\n\nYou use it to configure the Amazon EMR job IAM role mentioned earlier.\n\n6. Choose the link for **OpenSearch Dashboards URL** to open OpenSearch Dashboards.\n\n\n\n7. Sign in to OpenSearch Dashboards with the user name and password that you configured earlier in the ```opensearch.sh``` file.\n8. Choose the options icon and choose **Security** under **OpenSearch Plugins**.\n\n\n\n9. Choose **Roles**.\n10. Choose **Create role**.\n\n\n\n11. Enter the new role’s name, cluster permissions, and index permissions. For this post, name the role ```fluentbit_role``` and give cluster permissions to the following:\n\n    - ```indices:admin/create```\n    - ```indices:admin/template/get```\n    - ```indices:admin/template/put```\n    - ```cluster:admin/ingest/pipeline/get```\n    - ```cluster:admin/ingest/pipeline/put```\n    - ```indices:data/write/bulk```\n    - ```indices:data/write/bulk*```\n    - ```create_index```\n\n\n\n12. In the **Index permissions** section, give write permission to the index ```fluent-*```.\n13. On the **Mapped users** tab, choose **Manage mapping**.\n14. 
For **Backend roles**, enter the Amazon EMR job execution IAM role ARN to map it to the ```fluentbit_role``` role.\n15. Choose **Map**.\n\n\n\n16. To complete the security configuration, go to the IAM console and add the following inline policy to the EMR on EKS IAM role that you entered as the backend role. Replace the resource ARN with the ARN of your OpenSearch Service domain. Keep the trailing ```/*``` so that the policy applies to requests made to the domain’s indexes.\n\nJson\n```\n{\n  \"Version\": \"2012-10-17\",\n  \"Statement\": [\n    {\n      \"Sid\": \"VisualEditor0\",\n      \"Effect\": \"Allow\",\n      \"Action\": [\n        \"es:ESHttp*\"\n      ],\n      \"Resource\": \"arn:aws:es:us-east-2:XXXXXXXXXXXX:domain/emreksdemo/*\"\n    }\n  ]\n}\n```\n\nThe Amazon OpenSearch Service configuration is now complete, and the domain is ready to ingest logs from the Fluent Bit sidecar container.\n\n\n#### **Configure the Fluent Bit sidecar container**\n\n\nConfiguring a Fluent Bit sidecar container requires two configuration files. The first is the Fluent Bit configuration itself. The second is the Fluent Bit sidecar subprocess configuration, which makes sure that the sidecar stops when the main Spark job ends. The configuration suggested in this post is for Splunk and Amazon OpenSearch Service, but you can also configure Fluent Bit to send logs to other third-party log aggregators. 
For more information about configuring outputs, refer to [Outputs](https://docs.fluentbit.io/manual/pipeline/outputs).\n\n\n##### **Fluent Bit ConfigMap**\n\n\nThe following sample ConfigMap is from the [GitHub repo](https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/kube/configmaps/emr_configmap.yaml):\n\nYAML\n```\napiVersion: v1\nkind: ConfigMap\nmetadata:\n  name: fluent-bit-sidecar-config\n  namespace: sparkns\n  labels:\n    app.kubernetes.io/name: fluent-bit\ndata:\n  fluent-bit.conf: |\n    [SERVICE]\n        Flush 1\n        Log_Level info\n        Daemon off\n        Parsers_File parsers.conf\n        HTTP_Server On\n        HTTP_Listen 0.0.0.0\n        HTTP_Port 2020\n\n    @INCLUDE input-application.conf\n    @INCLUDE input-event-logs.conf\n    @INCLUDE output-splunk.conf\n    @INCLUDE output-opensearch.conf\n\n  input-application.conf: |\n    [INPUT]\n        Name tail\n        Path /var/log/spark/user/*/*\n        Path_Key filename\n        Buffer_Chunk_Size 1M\n        Buffer_Max_Size 5M\n        Skip_Long_Lines On\n        Skip_Empty_Lines On\n\n  input-event-logs.conf: |\n    [INPUT]\n        Name tail\n        Path /var/log/spark/apps/*\n        Path_Key filename\n        Buffer_Chunk_Size 1M\n        Buffer_Max_Size 5M\n        Skip_Long_Lines On\n        Skip_Empty_Lines On\n\n  output-splunk.conf: |\n    [OUTPUT]\n        Name splunk\n        Match *\n        Host < INTERNAL DNS of Splunk EC2 Instance >\n        Port 8088\n        TLS On\n        TLS.Verify Off\n        Splunk_Token < Token as provided by the HTTP Event Collector in Splunk >\n\n  output-opensearch.conf: |\n    [OUTPUT]\n        Name es\n        Match *\n        Host < HOST NAME of the OpenSearch Domain | No HTTP protocol >\n        Port 443\n        TLS On\n        AWS_Auth On\n        AWS_Region < Region >\n        Retry_Limit 6\n```\n\nIn your Amazon Web Services Cloud9 workspace, modify the ConfigMap by replacing the placeholder text with your values. The following commands open the file in the vi editor. 
If you prefer, you can use pico or another editor:\n\nBash\n```\n[../aws-emr-eks-log-forwarding] $ cd kube/configmaps\n[../aws-emr-eks-log-forwarding/kube/configmaps] $ vi emr_configmap.yaml\n\n# Modify emr_configmap.yaml as described above\n# Save the file when you’re done\n```\n\nComplete either the Splunk output configuration or the Amazon OpenSearch Service output configuration, depending on the log aggregator that you use.\n\nNext, run the following commands to create the Fluent Bit sidecar configuration ConfigMap and the subprocess script ConfigMap:\n\nBash\n```\n[../aws-emr-eks-log-forwarding/kube/configmaps] $ kubectl apply -f emr_configmap.yaml\n[../aws-emr-eks-log-forwarding/kube/configmaps] $ kubectl apply -f emr_entrypoint_configmap.yaml\n```\n\nYou don’t need to modify the second ConfigMap because it contains the subprocess script that runs inside the Fluent Bit sidecar container. To verify that the ConfigMaps have been created, run the following command:\n\nBash\n```\n$ kubectl get cm -n sparkns\nNAME DATA AGE\nfluent-bit-sidecar-config 6 15s\nfluent-bit-sidecar-wrapper 2 15s\n```\n\n##### **Set up a customized EMR container image**\n\n\nThe [sample PySpark script](https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/scripts/bostonproperty.py) requires the Boto3 package, which isn’t available in the standard EMR container images. If you run your own script and it doesn’t require a customized EMR container image, you can skip this step.\n\nRun the following script:\n\nBash\n```\n[../aws-emr-eks-log-forwarding] $ cd ecr\n[../aws-emr-eks-log-forwarding/ecr] $ bash create_custom_image.sh <region> <EMR container image account number>\n```\n\nYou can find the EMR container image account number, which is the ECR registry account number for your Region, in [How to select a base image URI](https://docs.aws.amazon.com/emr/latest/EMR-on-EKS-DevelopmentGuide/docker-custom-images-tag.html). 
For example, the registry account number for ```us-east-1``` is ```755674844232```.\n\nTo verify the repository and image, run the following commands:\n\nBash\n```\n$ aws ecr describe-repositories --region < region > | grep emr-6.5.0-custom\n \"repositoryArn\": \"arn:aws:ecr:xx-xxxx-x:xxxxxxxxxxxx:repository/emr-6.5.0-custom\",\n \"repositoryName\": \"emr-6.5.0-custom\",\n \"repositoryUri\": \"xxxxxxxxxxxx.dkr.ecr.xx-xxxx-x.amazonaws.com/emr-6.5.0-custom\",\n\n$ aws ecr describe-images --region < region > --repository-name emr-6.5.0-custom | jq '.imageDetails[0].imageTags'\n[\n \"latest\"\n]\n```\n\n\n#### **Prepare pod templates for Spark jobs**\n\n\nUpload the Spark driver and Spark executor pod templates to an S3 bucket and prefix. You can find both pod templates in the [GitHub repository](https://github.com/aws-samples/aws-emr-eks-log-forwarding/tree/main/kube/podtemplates):\n\n- **emr_driver_template.yaml** – Spark driver pod template\n- **emr_executor_template.yaml** – Spark executor pod template\n\nDon’t modify the pod templates provided here.\n\n\n#### **Submitting a Spark job with a Fluent Bit sidecar container**\n\n\nThis Spark job example uses the [bostonproperty.py](https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/scripts/bostonproperty.py) script. To use this script, upload it to an accessible S3 bucket and prefix, and complete the preceding steps to use a customized EMR container image. You also need to download the CSV file from the [GitHub repo](https://github.com/aws-samples/aws-emr-eks-log-forwarding/tree/main/data) and unzip it. 
Upload the unzipped file to the following location: ```s3://<your chosen bucket>/<first level folder>/data/boston-property-assessment-2021.csv```.\n\nThe following commands assume that you launched your EKS cluster and virtual EMR cluster with the parameters indicated in the [GitHub repo](https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/emreks/parameters.sh).\n\n\n\nAlternatively, use the [provided script](https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/emreks/scripts/run_emr_script.sh) to run the job. Change to the ```scripts``` folder in ```emreks``` and run the script as follows:\n\nBash\n```\n[../aws-emr-eks-log-forwarding] $ cd emreks/scripts\n[../aws-emr-eks-log-forwarding/emreks/scripts] $ bash run_emr_script.sh < S3 bucket name > < ECR container image > < script path >\n\n# Example:\n# bash run_emr_script.sh emreksdemo-123456 123456789012.dkr.ecr.us-east-2.amazonaws.com/emr-6.5.0-custom s3://emreksdemo-123456/scripts/scriptname.py\n```\n\nAfter you successfully submit the Spark job, you get a JSON response like the following:\n\nJSON\n```\n{\n \"id\": \"0000000305e814v0bpt\",\n \"name\": \"emreksdemo-job\",\n \"arn\": \"arn:aws:emr-containers:xx-xxxx-x:XXXXXXXXXXX:/virtualclusters/upobc00wgff5XXXXXXXXXXX/jobruns/0000000305e814v0bpt\",\n \"virtualClusterId\": \"upobc00wgff5XXXXXXXXXXX\"\n}\n```\n\n\n#### **What happens when you submit a Spark job with a sidecar container**\n\n\nAfter you submit a Spark job, you can see what is happening by viewing the generated pods and their logs. First, use kubectl to list the pods generated in the namespace where the EMR virtual cluster runs. In this case, it’s ```sparkns```. The first pod in the following output is the job controller for this particular Spark job. The second pod is the Spark executor. There can be more than one executor pod, depending on how many executor instances the Spark job settings request; we requested one here. 
The third pod is the Spark driver pod.\n\nBash\n```\n$ kubectl get pods -n sparkns\nNAME READY STATUS RESTARTS AGE\n0000000305e814v0bpt-hvwjs 3/3 Running 0 25s\nemreksdemo-script-1247bf80ae40b089-exec-1 0/3 Pending 0 0s\nspark-0000000305e814v0bpt-driver 3/3 Running 0 11s\n```\n\nTo see what happens in the sidecar container, follow the sidecar container’s logs in the Spark driver pod. The sidecar container launches with the Spark pods and runs until the file ```/var/log/fluentd/main-container-terminated``` no longer exists. For more information about how Amazon EMR controls the pod lifecycle, refer to [Using pod templates](https://docs.aws.amazon.com/emr/latest/EMR-on-EKS-DevelopmentGuide/pod-templates.html). The subprocess script ties the sidecar container to this same lifecycle: when the EMR-controlled pod lifecycle process removes that file, the script stops Fluent Bit and the sidecar exits.\n\nBash\n```\n$ kubectl logs spark-0000000305e814v0bpt-driver -n sparkns -c custom-side-car-container --follow=true\n\nWaiting for file /var/log/fluentd/main-container-terminated to appear...\nAmazon Web Services for Fluent Bit Container Image Version 2.24.0Start wait: 1652190909\nElapsed Wait: 0\nNot found count: 0\nWaiting...\nFluent Bit v1.9.3\n* Copyright (C) 2015-2022 The Fluent Bit Authors\n* Fluent Bit is a CNCF sub-project under the umbrella of Fluentd\n* https://fluentbit.io\n\n[2022/05/10 13:55:09] [ info] [fluent bit] version=1.9.3, commit=9eb4996b7d, pid=11\n[2022/05/10 13:55:09] [ info] [storage] version=1.2.0, type=memory-only, sync=normal, checksum=disabled, max_chunks_up=128\n[2022/05/10 13:55:09] [ info] [cmetrics] version=0.3.1\n[2022/05/10 13:55:09] [ info] [output:splunk:splunk.0] worker #0 started\n[2022/05/10 13:55:09] [ info] [output:splunk:splunk.0] worker #1 started\n[2022/05/10 13:55:09] [ info] [output:es:es.1] worker #0 started\n[2022/05/10 13:55:09] [ info] [output:es:es.1] worker #1 started\n[2022/05/10 13:55:09] [ info] [http_server] listen iface=0.0.0.0 
tcp_port=2020\n[2022/05/10 13:55:09] [ info] [sp] stream processor started\nWaiting for file /var/log/fluentd/main-container-terminated to appear...\nLast heartbeat: 1652190914\nElapsed Time since after heartbeat: 0\nFound count: 0\nlist files:\n-rw-r--r-- 1 saslauth 65534 0 May 10 13:55 /var/log/fluentd/main-container-terminated\nLast heartbeat: 1652190918\n\n…\n\n[2022/05/10 13:56:09] [ info] [input:tail:tail.0] inotify_fs_add(): inode=58834691 watch_fd=6 name=/var/log/spark/user/spark-0000000305e814v0bpt-driver/stdout-s3-container-log-in-tail.pos\n[2022/05/10 13:56:09] [ info] [input:tail:tail.1] inotify_fs_add(): inode=54644346 watch_fd=1 name=/var/log/spark/apps/spark-0000000305e814v0bpt\nOutside of loop, main-container-terminated file no longer exists\nls: cannot access /var/log/fluentd/main-container-terminated: No such file or directory\nThe file /var/log/fluentd/main-container-terminated doesn't exist anymore;\nTERMINATED PROCESS\nFluent-Bit pid: 11\nKilling process after sleeping for 15 seconds\nroot 11 8 0 13:55 ? 00:00:00 /fluent-bit/bin/fluent-bit -e /fluent-bit/firehose.so -e /fluent-bit/cloudwatch.so -e /fluent-bit/kinesis.so -c /fluent-bit/etc/fluent-bit.conf\nroot 114 7 0 13:56 ? 
00:00:00 grep fluent\nKilling process 11\n[2022/05/10 13:56:24] [engine] caught signal (SIGTERM)\n[2022/05/10 13:56:24] [ info] [input] pausing tail.0\n[2022/05/10 13:56:24] [ info] [input] pausing tail.1\n[2022/05/10 13:56:24] [ warn] [engine] service will shutdown in max 5 seconds\n[2022/05/10 13:56:25] [ info] [engine] service has stopped (0 pending tasks)\n[2022/05/10 13:56:25] [ info] [input:tail:tail.1] inotify_fs_remove(): inode=54644346 watch_fd=1\n[2022/05/10 13:56:25] [ info] [input:tail:tail.0] inotify_fs_remove(): inode=60917120 watch_fd=1\n[2022/05/10 13:56:25] [ info] [input:tail:tail.0] inotify_fs_remove(): inode=60917121 watch_fd=2\n[2022/05/10 13:56:25] [ info] [input:tail:tail.0] inotify_fs_remove(): inode=58834690 watch_fd=3\n[2022/05/10 13:56:25] [ info] [input:tail:tail.0] inotify_fs_remove(): inode=58834692 watch_fd=4\n[2022/05/10 13:56:25] [ info] [input:tail:tail.0] inotify_fs_remove(): inode=58834689 watch_fd=5\n[2022/05/10 13:56:25] [ info] [input:tail:tail.0] inotify_fs_remove(): inode=58834691 watch_fd=6\n[2022/05/10 13:56:25] [ info] [output:splunk:splunk.0] thread worker #0 stopping...\n[2022/05/10 13:56:25] [ info] [output:splunk:splunk.0] thread worker #0 stopped\n[2022/05/10 13:56:25] [ info] [output:splunk:splunk.0] thread worker #1 stopping...\n[2022/05/10 13:56:25] [ info] [output:splunk:splunk.0] thread worker #1 stopped\n[2022/05/10 13:56:25] [ info] [output:es:es.1] thread worker #0 stopping...\n[2022/05/10 13:56:25] [ info] [output:es:es.1] thread worker #0 stopped\n[2022/05/10 13:56:25] [ info] [output:es:es.1] thread worker #1 stopping...\n[2022/05/10 13:56:25] [ info] [output:es:es.1] thread worker #1 stopped\n```\n\n\n#### **View the forwarded logs in Splunk or Amazon OpenSearch Service**\n\n\nTo view the forwarded logs, do a search in Splunk or on the Amazon OpenSearch Service console. If you’re using a shared log aggregator, you may have to filter the results. 
In this configuration, the logs tailed by Fluent Bit are under ```/var/log/spark/*```. The following screenshots show the Spark driver ```stdout``` logs that were forwarded to the log aggregators. You can compare them with the logs retrieved using kubectl:\n\nBash\n```\n$ kubectl logs < Spark Driver Pod > -n < namespace > -c spark-kubernetes-driver --follow=true\n\n…\nroot\n |-- PID: string (nullable = true)\n |-- CM_ID: string (nullable = true)\n |-- GIS_ID: string (nullable = true)\n |-- ST_NUM: string (nullable = true)\n |-- ST_NAME: string (nullable = true)\n |-- UNIT_NUM: string (nullable = true)\n |-- CITY: string (nullable = true)\n |-- ZIPCODE: string (nullable = true)\n |-- BLDG_SEQ: string (nullable = true)\n |-- NUM_BLDGS: string (nullable = true)\n |-- LUC: string (nullable = true)\n…\n\n|02108|RETAIL CONDO |361450.0 |63800.0 |5977500.0 |\n|02108|RETAIL STORE DETACH |2295050.0 |988200.0 |3601900.0 |\n|02108|SCHOOL |1.20858E7 |1.20858E7 |1.20858E7 |\n|02108|SINGLE FAM DWELLING |5267156.561085973 |1153400.0 |1.57334E7 |\n+-----+-----------------------+--------------------+---------------+---------------+\nonly showing top 50 rows\n```\n\nThe following screenshot shows the Splunk logs.\n\n\n\nThe following screenshots show the Amazon OpenSearch Service logs.\n\n\n\n\n\n#### **Optional: Include a buffer between Fluent Bit and the log aggregators**\n\n\nIf you expect a high volume of logs because many concurrent Spark jobs open individual connections that could overwhelm your Amazon OpenSearch Service or Splunk log aggregation clusters, consider adding a buffer between the Fluent Bit sidecars and your log aggregator. One option is to use [Amazon Kinesis Data Firehose](https://aws.amazon.com/kinesis/data-firehose/) as the buffering service.\n\nKinesis Data Firehose has built-in delivery to both Amazon OpenSearch Service and Splunk. 
If using Amazon OpenSearch Service, refer to [Loading streaming data from Amazon Kinesis Data Firehose](https://docs.aws.amazon.com/opensearch-service/latest/developerguide/integrations.html). If using Splunk, refer to [Configure Amazon Kinesis Firehose to send data to the Splunk platform](https://docs.splunk.com/Documentation/AddOns/released/Firehose/ConfigureFirehose) and [Choose Splunk for Your Destination](https://docs.aws.amazon.com/firehose/latest/dev/create-destination.html).\n\nTo have Fluent Bit send logs to Kinesis Data Firehose, add the following output to your ConfigMap. Using the [GitHub ConfigMap example](https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/kube/configmaps/emr_configmap.yaml) as a reference, add the ```@INCLUDE``` line under the ```[SERVICE]``` section:\n\nApache Configuration\n```\n    @INCLUDE output-kinesisfirehose.conf\n…\n\n  output-kinesisfirehose.conf: |\n    [OUTPUT]\n        Name            kinesis_firehose\n        Match           *\n        region          < region >\n        delivery_stream < Kinesis Firehose Stream Name >\n```\n\n\n#### **Optional: Use data streams for Amazon OpenSearch Service**\n\n\nIf the number of documents grows rapidly and you don’t need to update older documents, managing the indexes in your OpenSearch Service cluster yourself involves steps like creating a rollover index alias, defining a write index, and defining common mappings and settings for the backing indexes. Consider using data streams to simplify this process and enforce a setup that best suits your time series data. For instructions on implementing data streams, refer to [Data streams](https://opensearch.org/docs/latest/opensearch/data-streams/).\n\n\n#### **Clean up**\n\n\nTo avoid incurring future charges, delete the resources by deleting the CloudFormation stacks that were created by the deployment script. This removes the EKS cluster. 
However, before you do that, remove the EMR virtual cluster by running the [delete-virtual-cluster](https://docs.aws.amazon.com/cli/latest/reference/emr-containers/delete-virtual-cluster.html) command. Then delete all the CloudFormation stacks generated by the deployment script.\n\nIf you launched an OpenSearch Service domain, you can delete the domain on the OpenSearch Service console. If you used the script to launch a Splunk instance, delete the CloudFormation stack that launched the Splunk instance. This removes the Splunk instance and associated resources.\n\nYou can also use the following scripts to clean up resources:\n\n- To remove an OpenSearch Service domain, run the [delete_opensearch_domain.sh](https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/opensearch/delete_opensearch_domain.sh) script\n- To remove the Splunk resources, run the [remove_splunk_deployment.sh](https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/splunk/remove_splunk_deployment.sh) script\n- To remove the EKS cluster, including the EMR virtual cluster, VPC, and other IAM roles, run the [remove_emr_eks_deployment.sh](https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/emreks/remove_emr_eks_deployment.sh) script\n\n\n#### **Conclusion**\n\n\nEMR on EKS facilitates running Spark jobs on Kubernetes to achieve very fast and cost-efficient Spark operations. This is made possible by scheduling transient pods that are launched and then deleted when the jobs are complete. To log all these operations within the same lifecycle as the Spark jobs, this post provides a lightweight and powerful solution using pod templates and Fluent Bit. This approach decouples log forwarding by handling it at the Spark application level rather than at the Kubernetes cluster level. It also avoids routing through intermediaries like CloudWatch, reducing cost and complexity. 
In this way, you can address security concerns and simplify DevOps and system administration while providing Spark users with insights into their Spark jobs in a cost-efficient and functional way.\n\nIf you have questions or suggestions, please leave a comment.\n\n\n#### **About the Author**\n\n\n\n\n**Matthew Tan** is a Senior Analytics Solutions Architect at Amazon Web Services and provides guidance to customers developing solutions with Amazon Web Services Analytics services on their analytics workloads. ","render":"<p>Spark jobs running on <a href=\"https://docs.aws.amazon.com/emr/latest/EMR-on-EKS-DevelopmentGuide/emr-eks.html\" target=\"_blank\">Amazon EMR on EKS</a> generate logs that are very useful for identifying issues with Spark processes and for viewing Spark output. You can access these logs from a variety of sources. On the <a href=\"http://aws.amazon.com/emr\" target=\"_blank\">Amazon EMR</a> virtual cluster console, you can access logs from the Spark History UI. You also have the flexibility to push logs into an <a href=\"http://aws.amazon.com/s3\" target=\"_blank\">Amazon Simple Storage Service</a> (Amazon S3) bucket or <a href=\"http://aws.amazon.com/cloudwatch\" target=\"_blank\">Amazon CloudWatch Logs</a>. In each method, these logs are linked to the specific job in question. The common practice of log management in DevOps culture is to centralize logging by forwarding logs to an enterprise log aggregation system like Splunk or <a href=\"https://aws.amazon.com/opensearch-service/\" target=\"_blank\">Amazon OpenSearch Service</a> (successor to Amazon Elasticsearch Service). This enables you to see all the applicable log data in one place. You can identify key trends, anomalies, and correlated events, troubleshoot problems faster, and notify the appropriate people in a timely fashion.</p>\n<p>EMR on EKS Spark logs are generated by Spark and can be accessed via the Kubernetes API and kubectl CLI. 
Therefore, although it’s possible to install log forwarding agents in the <a href=\"https://aws.amazon.com/eks/\" target=\"_blank\">Amazon Elastic Kubernetes Service</a> (Amazon EKS) cluster to forward all Kubernetes logs, which include Spark logs, this can become quite expensive at scale because you also collect Kubernetes information that may not be relevant to Spark users. In addition, from a security point of view, Spark users may not have access to the EKS cluster logs or to kubectl.</p>\n<p>To solve this problem, this post proposes using pod templates to create a sidecar container alongside the Spark job pods. The sidecar containers can access the logs contained in the Spark pods and forward these logs to the log aggregator. This approach allows the logs to be managed separately from the EKS cluster and uses few resources because the sidecar container runs only for the lifetime of the Spark job.</p>\n<h4><a id=\"Implementing_Fluent_Bit_as_a_sidecar_container_7\"></a><strong>Implementing Fluent Bit as a sidecar container</strong></h4>\n<p>Fluent Bit is a lightweight, highly scalable, and high-speed logging and metrics processor and log forwarder. It collects event data from any source, enriches that data, and sends it to any destination. Its lightweight and efficient design coupled with its many features makes it very attractive to those working in the cloud and in containerized environments. It has been deployed extensively and trusted by many, even in large and complex environments. Fluent Bit has zero dependencies and requires only 650 KB in memory to operate, as compared to Fluentd, which needs about 40 MB in memory. This makes it an ideal option for forwarding logs generated by Spark jobs.</p>\n<p>When you submit a job to EMR on EKS, there are at least two Spark containers: the Spark driver and the Spark executor. 
The number of Spark executor pods depends on your job submission configuration. If you set <code>spark.executor.instances</code> to more than one, you get the corresponding number of Spark executor pods. Here, we run Fluent Bit as a sidecar container in the Spark driver and executor pods, as shown in the following figure. The Fluent Bit sidecar container reads the indicated logs in the Spark driver and executor pods and forwards these logs directly to the target log aggregator.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/f5fe3123effb46a5afe99dfd50ce3e6d_image.png\" alt=\"image.png\" /></p>\n<h4><a id=\"Pod_templates_in_EMR_on_EKS_17\"></a><strong>Pod templates in EMR on EKS</strong></h4>\n<p>A Kubernetes pod is a group of one or more containers with shared storage, network resources, and a specification for how to run the containers. Pod templates are specifications for creating pods. They’re part of the desired state of the workload resources used to run the application. Pod template files can define driver or executor pod configurations that aren’t supported in standard Spark configuration. That said, Spark is opinionated about certain pod configurations, and some values in the pod template are always overwritten by Spark. A pod template only lets Spark start from a template pod instead of an empty pod during the pod-building process. Pod templates are enabled in EMR on EKS when you configure the Spark properties <code>spark.kubernetes.driver.podTemplateFile</code> and <code>spark.kubernetes.executor.podTemplateFile</code>. 
Spark downloads these pod templates to construct the driver and executor pods.</p>\n<h4><a id=\"Forward_logs_generated_by_Spark_jobs_in_EMR_on_EKS_23\"></a><strong>Forward logs generated by Spark jobs in EMR on EKS</strong></h4>\n<p>A log aggregation system such as Amazon OpenSearch Service or Splunk must be available to accept the logs forwarded by the Fluent Bit sidecar containers. If you don’t have one, this post provides scripts to help you launch an Amazon OpenSearch Service domain or Splunk installed on an <a href=\"http://aws.amazon.com/ec2\" target=\"_blank\">Amazon Elastic Compute Cloud</a> (Amazon EC2) instance.</p>\n<p>We use several services to create and configure EMR on EKS. We use an <a href=\"https://aws.amazon.com/cloud9/\" target=\"_blank\">AWS Cloud9</a> workspace to run all the scripts and to configure the EKS cluster. Because the job script requires certain Python libraries that are absent from the generic EMR images, we use <a href=\"https://aws.amazon.com/ecr/\" target=\"_blank\">Amazon Elastic Container Registry</a> (Amazon ECR) to store a customized EMR container image.</p>\n<h5><a id=\"Create_an_Amazon_Web_Services_Cloud9_workspace_31\"></a><strong>Create an Amazon Web Services Cloud9 workspace</strong></h5>\n<p>The first step is to launch and configure the Amazon Web Services Cloud9 workspace by following the instructions in <a href=\"https://www.eksworkshop.com/020_prerequisites/workspace/\" target=\"_blank\">Create a Workspace</a> in the EKS Workshop. After you create the workspace, create the <a href=\"http://aws.amazon.com/iam\" target=\"_blank\">Amazon Web Services Identity and Access Management</a> (IAM) resources. 
<a href=\"https://www.eksworkshop.com/020_prerequisites/iamrole/\" target=\"_blank\">Create an IAM role</a> for the workspace, <a href=\"https://www.eksworkshop.com/020_prerequisites/ec2instance/\" target=\"_blank\">attach the role</a> to the workspace, and <a href=\"https://www.eksworkshop.com/020_prerequisites/workspaceiam/\" target=\"_blank\">update the workspace</a> IAM settings.</p>\n<h5><a id=\"Prepare_the_Amazon_Web_Services__Cloud9_workspace_37\"></a><strong>Prepare the Amazon Web Services Cloud9 workspace</strong></h5>\n<p>Clone the following <a href=\"https://github.com/aws-samples/aws-emr-eks-log-forwarding\" target=\"_blank\">GitHub repository</a> and run the following script to prepare the Amazon Web Services Cloud9 workspace for installing and configuring Amazon EKS and EMR on EKS. The shell script <code>prepare_cloud9.sh</code> installs all the components that the Amazon Web Services Cloud9 workspace needs to build and manage the EKS cluster, including the kubectl command line tool, the eksctl CLI tool, and jq. It also updates the <a href=\"http://aws.amazon.com/cli\" target=\"_blank\">Amazon Web Services Command Line Interface</a> (Amazon Web Services CLI).</p>\n<p>Bash</p>\n<pre><code class=\"lang-\">$ sudo yum -y install git\n$ cd ~\n$ git clone https://github.com/aws-samples/aws-emr-eks-log-forwarding.git\n$ cd aws-emr-eks-log-forwarding\n$ cd emreks\n$ bash prepare_cloud9.sh\n</code></pre>\n<p>All the scripts and configuration needed to run this solution are in the cloned GitHub repository.</p>\n<h5><a id=\"Create_a_key_pair_55\"></a><strong>Create a key pair</strong></h5>\n<p>As part of this deployment, you need an EC2 key pair to create an EKS cluster. If you already have an EC2 key pair, you can use it. 
Otherwise, you can <a href=\"https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/create-key-pairs.html\" target=\"_blank\">create a key pair</a>.</p>\n<h5><a id=\"Install_Amazon_EKS_and_EMR_on_EKS_61\"></a><strong>Install Amazon EKS and EMR on EKS</strong></h5>\n<p>After you configure the Amazon Web Services Cloud9 workspace, run the following deployment script in the same folder (<code>emreks</code>):</p>\n<p>Bash</p>\n<pre><code class=\"lang-\">$ bash deploy_eks_cluster_bash.sh \nDeployment Script -- EMR on EKS\n-----------------------------------------------\n\nPlease provide the following information before deployment:\n1. Region (If your Cloud9 desktop is in the same region as your deployment, you can leave this blank)\n2. Account ID (If your Cloud9 desktop is running in the same Account ID as where your deployment will be, you can leave this blank)\n3. Name of the S3 bucket to be created for the EMR S3 storage location\nRegion: [xx-xxxx-x]: < Press enter for default or enter region > \nAccount ID [xxxxxxxxxxxx]: < Press enter for default or enter account # > \nEC2 Public Key name: < Provide your key pair name here >\nDefault S3 bucket name for EMR on EKS (do not add s3://): < bucket name >\nBucket created: XXXXXXXXXXX ...\nDeploying CloudFormation stack with the following parameters...\nRegion: xx-xxxx-x | Account ID: xxxxxxxxxxxx | S3 Bucket: XXXXXXXXXXX\n\n...\n\nEKS Cluster and Virtual EMR Cluster have been installed.\n</code></pre>\n<p>The last line indicates that the installation was successful.</p>\n<h4><a id=\"Log_aggregation_options_92\"></a><strong>Log aggregation options</strong></h4>\n<p>You can use either of the following options as your log aggregator: Splunk Enterprise installed on an EC2 instance, or Amazon OpenSearch Service.</p>\n<h5><a id=\"Option_1_Install_Splunk_Enterprise_98\"></a><strong>Option 1: Install Splunk Enterprise</strong></h5>\n<p>To manually install Splunk on an EC2 instance, complete the following steps:</p>\n<ol>\n<li><a 
href=\"https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ec2-launch-instance-wizard.html\" target=\"_blank\">Launch an EC2 instance</a>.</li>\n<li><a href=\"https://docs.splunk.com/Documentation/Splunk/8.2.6/Installation/Beforeyouinstall\" target=\"_blank\">Install Splunk</a>.</li>\n<li>Configure the EC2 instance <a href=\"https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/security-group-rules-reference.html\" target=\"_blank\">security group</a> to permit access to ports 22, 8000, and 8088.</li>\n</ol>\n<p>This post, however, provides an automated way to install Splunk on an EC2 instance:</p>\n<ol>\n<li>Download the RPM install file and upload it to an accessible Amazon S3 location.</li>\n<li>Upload the Splunk CloudFormation template (<code>splunk_cf.yaml</code>) into Amazon Web Services CloudFormation.</li>\n<li>Provide the necessary parameters, as shown in the screenshots below.</li>\n<li>Choose <strong>Next</strong> and complete the steps to create your stack.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/e4a5e2525e6b46079b32c5632b452676_image.png\" alt=\"image.png\" /></p>\n<p><img src=\"https://dev-media.amazoncloud.cn/bf4a09b17ee949d299426617859ac65c_image.png\" alt=\"image.png\" /></p>\n<p><img src=\"https://dev-media.amazoncloud.cn/447542502dcf40b9b3afc729359d4691_image.png\" alt=\"image.png\" /></p>\n<p>Alternatively, run an Amazon Web Services CLI script like the following:</p>\n<p>Bash</p>\n<pre><code class=\"lang-\">aws cloudformation create-stack \\\n--stack-name "splunk" \\\n--template-body file://splunk_cf.yaml \\\n--parameters ParameterKey=KeyName,ParameterValue="< Name of EC2 Key Pair >" \\\n ParameterKey=InstanceType,ParameterValue="t3.medium" \\\n ParameterKey=LatestAmiId,ParameterValue="/aws/service/ami-amazon-linux-latest/amzn2-ami-hvm-x86_64-gp2" \\\n ParameterKey=VPCID,ParameterValue="vpc-XXXXXXXXXXX" \\\n ParameterKey=PublicSubnet0,ParameterValue="subnet-XXXXXXXXX" \\\n ParameterKey=SSHLocation,ParameterValue="< CIDR Range for SSH access >" \\\n 
ParameterKey=VpcCidrRange,ParameterValue="172.20.0.0/16" \\\n ParameterKey=RootVolumeSize,ParameterValue="100" \\\n ParameterKey=S3BucketName,ParameterValue="< S3 Bucket Name >" \\\n ParameterKey=S3Prefix,ParameterValue="splunk/splunk-8.2.5-77015bc7a462-linux-2.6-x86_64.rpm" \\\n ParameterKey=S3DownloadLocation,ParameterValue="/tmp" \\\n--region < region > \\\n--capabilities CAPABILITY_IAM\n</code></pre>\n<ol start=\"5\">\n<li>After you build the stack, navigate to the stack’s <strong>Outputs</strong> tab on the Amazon Web Services CloudFormation console and note the internal and external DNS for the Splunk instance.</li>\n</ol>\n<p>You use these later to configure the Splunk instance and log forwarding.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/8bc8e59ac449484eb57287e7619c9eb6_image.png\" alt=\"image.png\" /></p>\n<ol start=\"6\">\n<li>To configure Splunk, go to the <strong>Resources</strong> tab for the CloudFormation stack and locate the physical ID of <code>EC2Instance</code>.</li>\n<li>Choose that link to go to the specific EC2 instance.</li>\n<li>Select the instance and choose <strong>Connect</strong>.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/82a62303d0634881a28f76b9830015ee_image.png\" alt=\"image.png\" /></p>\n<ol start=\"9\">\n<li>On the <strong>Session Manager</strong> tab, choose <strong>Connect</strong>.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/d386cd9adc484596b58baf3861a4f1b3_image.png\" alt=\"image.png\" /></p>\n<p>You’re redirected to the instance’s shell.</p>\n<ol start=\"10\">\n<li>Install and configure Splunk as follows:</li>\n</ol>\n<p>Bash</p>\n<pre><code class=\"lang-\">$ sudo /opt/splunk/bin/splunk start --accept-license\n…\nPlease enter an administrator username: admin\nPassword must contain at least:\n * 8 total printable ASCII character(s).\nPlease enter a new password: \nPlease confirm new password:\n…\nDone\n [ OK ]\n\nWaiting for web server at http://127.0.0.1:8000 to be available......... 
Done\nThe Splunk web interface is at http://ip-xx-xxx-xxx-x.us-east-2.compute.internal:8000\n</code></pre>\n<ol start=\"11\">\n<li>Open the Splunk site using the <code>SplunkPublicDns</code> value from the stack outputs (for example, <code>http://ec2-xx-xxx-xxx-x.us-east-2.compute.amazonaws.com:8000</code>). Note the port number, 8000.</li>\n<li>Log in with the user name and password you provided.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/9ff3d7cb101848c58f5308445a1ccf43_image.png\" alt=\"image.png\" /></p>\n<h5><a id=\"Configure_HTTP_Event_Collector_185\"></a><strong>Configure HTTP Event Collector</strong></h5>\n<p>To enable Splunk to receive logs from Fluent Bit, configure the HTTP Event Collector data input:</p>\n<ol>\n<li>Go to <strong>Settings</strong> and choose <strong>Data inputs</strong>.</li>\n<li>Choose <strong>HTTP Event Collector</strong>.</li>\n<li>Choose <strong>Global Settings</strong>.</li>\n<li>Select <strong>Enabled</strong>, keep port number 8088, then choose <strong>Save</strong>.</li>\n<li>Choose <strong>New Token</strong>.</li>\n<li>For <strong>Name</strong>, enter a name (for example, <code>emreksdemo</code>).</li>\n<li>Choose <strong>Next</strong>.</li>\n<li>For <strong>Available item(s) for Indexes</strong>, add at least the main index.</li>\n<li>Choose <strong>Review</strong> and then <strong>Submit</strong>.</li>\n<li>In the list of HTTP Event Collector tokens, copy the token value for <code>emreksdemo</code>.</li>\n</ol>\n<p>You use this token when configuring the Fluent Bit output.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/e4ac47285d5e4e1b90c63c3d792ae1c7_image.png\" alt=\"image.png\" /></p>\n<h4><a id=\"Option_2_Set_up_Amazon_OpenSearch_Service_206\"></a><strong>Option 2: Set up Amazon OpenSearch Service</strong></h4>\n<p>Your other log aggregation option is to use Amazon OpenSearch Service.</p>\n<p><strong>Provision an OpenSearch Service domain</strong></p>\n<p>Provisioning an OpenSearch Service 
domain is straightforward. In this post, we provide a simple script and configuration to provision a basic domain. To do it yourself, refer to <a href=\"https://docs.aws.amazon.com/opensearch-service/latest/developerguide/createupdatedomains.html\" target=\"_blank\">Creating and managing Amazon OpenSearch Service domains</a>.</p>\n<p>Before you start, get the ARN of the IAM role that you use to run the Spark jobs. If you created the EKS cluster with the provided script, go to the CloudFormation stack <code>emr-eks-iam-stack</code>. On the <strong>Outputs</strong> tab, locate the <code>IAMRoleArn</code> output and copy this ARN. You modify this IAM role later, after you create the OpenSearch Service domain.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/89a3f29b80f24aa0881c5e19f5715372_image.png\" alt=\"image.png\" /></p>\n<p>If you’re using the provided <code>opensearch.sh</code> installer, modify the file before you run it.</p>\n<p>From the root folder of the GitHub repository, <code>cd</code> to the <code>opensearch</code> folder and modify <code>opensearch.sh</code> with vi (or your preferred editor):</p>\n<p>Bash</p>\n<pre><code class=\"lang-\">[../aws-emr-eks-log-forwarding] $ cd opensearch\n[../aws-emr-eks-log-forwarding/opensearch] $ vi opensearch.sh\n</code></pre>\n<p>Configure opensearch.sh to fit your environment, for example:</p>\n<p>Bash</p>\n<pre><code class=\"lang-\"># Name of the Amazon OpenSearch Service domain\nexport ES_DOMAIN_NAME="emreksdemo"\n\n# OpenSearch version\nexport ES_VERSION="OpenSearch_1.0"\n\n# Instance Type\nexport INSTANCE_TYPE="t3.small.search"\n\n# OpenSearch Dashboards admin user\nexport ES_DOMAIN_USER="emreks"\n\n# OpenSearch Dashboards admin password\nexport ES_DOMAIN_PASSWORD='< ADD YOUR PASSWORD >'\n\n# Region\nexport REGION='us-east-1'\n</code></pre>\n<p>Run the script:</p>\n<p><code>[../aws-emr-eks-log-forwarding/opensearch] $ bash opensearch.sh</code></p>\n<h5><a id=\"Configure_your_OpenSearch_Service_domain_257\"></a><strong>Configure your 
OpenSearch Service domain</strong></h5>\n<p>After you set up your OpenSearch Service domain and it’s active, make the following configuration changes to allow logs to be ingested into Amazon OpenSearch Service:</p>\n<ol>\n<li>On the Amazon OpenSearch Service console, on the <strong>Domains</strong> page, choose your domain.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/7b4859e542624a57a60da0445eb12ff9_image.png\" alt=\"image.png\" /></p>\n<ol start=\"2\">\n<li>On the <strong>Security configuration</strong> tab, choose <strong>Edit</strong>.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/49fe860a30fc4576bfe7b5ae26be8669_image.png\" alt=\"image.png\" /></p>\n<ol start=\"3\">\n<li>For <strong>Access Policy</strong>, select <strong>Only use fine-grained access control</strong>.</li>\n<li>Choose <strong>Save changes</strong>.</li>\n</ol>\n<p>The access policy should look like the following code:</p>\n<p>Json</p>\n<pre><code class=\"lang-\">{\n  "Version": "2012-10-17",\n  "Statement": [\n    {\n      "Effect": "Allow",\n      "Principal": {\n        "AWS": "*"\n      },\n      "Action": "es:*",\n      "Resource": "arn:aws:es:xx-xxxx-x:xxxxxxxxxxxx:domain/emreksdemo/*"\n    }\n  ]\n}\n</code></pre>\n<ol start=\"5\">\n<li>When the domain is active again, copy the domain ARN.</li>\n</ol>\n<p>You use it to configure the Amazon EMR job execution IAM role mentioned earlier.</p>\n<ol start=\"6\">\n<li>Choose the link for <strong>OpenSearch Dashboards URL</strong> to open OpenSearch Dashboards.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/b71f44312a7b4efcbf1d687d38296460_image.png\" alt=\"image.png\" /></p>\n<ol start=\"7\">\n<li>In OpenSearch Dashboards, sign in with the user name and password that you configured earlier in the opensearch.sh file.</li>\n<li>Choose the options icon and choose <strong>Security</strong> under <strong>OpenSearch Plugins</strong>.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/3db30f980a524fd5a4a164a46c263d32_image.png\" 
alt=\"image.png\" /></p>\n<ol start=\"9\">\n<li>Choose <strong>Roles</strong>.</li>\n<li>Choose <strong>Create role</strong>.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/c1344444a965496b99ec4a1836bb4678_image.png\" alt=\"image.png\" /></p>\n<ol start=\"11\">\n<li>\n<p>Enter the new role’s name, cluster permissions, and index permissions. For this post, name the role <code>fluentbit_role</code> and give cluster permissions to the following:</p>\n<ul>\n<li><code>indices:admin/create</code></li>\n<li><code>indices:admin/template/get</code></li>\n<li><code>indices:admin/template/put</code></li>\n<li><code>cluster:admin/ingest/pipeline/get</code></li>\n<li><code>cluster:admin/ingest/pipeline/put</code></li>\n<li><code>indices:data/write/bulk</code></li>\n<li><code>indices:data/write/bulk*</code></li>\n<li><code>create_index</code></li>\n</ul>\n</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/1e51557ce50d4581b95291ca39fdf5ae_image.png\" alt=\"image.png\" /></p>\n<ol start=\"12\">\n<li>In the <strong>Index permissions</strong> section, give write permission to the index <code>fluent-*</code>.</li>\n<li>On the <strong>Mapped users</strong> tab, choose <strong>Manage mapping</strong>.</li>\n<li>For <strong>Backend roles</strong>, enter the Amazon EMR job execution IAM role ARN to be mapped to the <code>fluentbit_role</code> role.</li>\n<li>Choose <strong>Map</strong>.</li>\n</ol>\n<p><img src=\"https://dev-media.amazoncloud.cn/a7ce6dbde84542da90fc8dc2d8fb0c90_image.png\" alt=\"image.png\" /></p>\n<ol start=\"16\">\n<li>To complete the security configuration, go to the IAM console and add the following inline policy to the EMR on EKS IAM role that you entered as the backend role. 
Replace the resource ARN with the ARN of your OpenSearch Service domain.</li>\n</ol>\n<p>Json</p>\n<pre><code class=\"lang-\">{\n  "Version": "2012-10-17",\n  "Statement": [\n    {\n      "Sid": "VisualEditor0",\n      "Effect": "Allow",\n      "Action": [\n        "es:ESHttp*"\n      ],\n      "Resource": "arn:aws:es:us-east-2:XXXXXXXXXXXX:domain/emreksdemo"\n    }\n  ]\n}\n</code></pre>\n<p>Amazon OpenSearch Service is now configured and ready to ingest logs from the Fluent Bit sidecar container.</p>\n<h4><a id=\"Configure_the_Fluent_Bit_sidecar_container_352\"></a><strong>Configure the Fluent Bit sidecar container</strong></h4>\n<p>Configuring a Fluent Bit sidecar container requires two configuration files. The first is the Fluent Bit configuration itself, and the second is the Fluent Bit sidecar subprocess configuration, which makes sure that the sidecar stops when the main Spark job ends. The configuration suggested in this post is for Splunk and Amazon OpenSearch Service. However, you can configure Fluent Bit with other third-party log aggregators. 
For more information about configuring outputs, refer to <a href=\"https://docs.fluentbit.io/manual/pipeline/outputs\" target=\"_blank\">Outputs</a>.</p>\n<h5><a id=\"Fluent_Bit_ConfigMap_358\"></a><strong>Fluent Bit ConfigMap</strong></h5>\n<p>The following sample ConfigMap is from the <a href=\"https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/kube/configmaps/emr_configmap.yaml\" target=\"_blank\">GitHub repo</a>:</p>\n<p>Apache Configuration</p>\n<pre><code class=\"lang-\">apiVersion: v1\nkind: ConfigMap\nmetadata:\n  name: fluent-bit-sidecar-config\n  namespace: sparkns\n  labels:\n    app.kubernetes.io/name: fluent-bit\ndata:\n  fluent-bit.conf: |\n    [SERVICE]\n        Flush         1\n        Log_Level     info\n        Daemon        off\n        Parsers_File  parsers.conf\n        HTTP_Server   On\n        HTTP_Listen   0.0.0.0\n        HTTP_Port     2020\n\n    @INCLUDE input-application.conf\n    @INCLUDE input-event-logs.conf\n    @INCLUDE output-splunk.conf\n    @INCLUDE output-opensearch.conf\n\n  input-application.conf: |\n    [INPUT]\n        Name              tail\n        Path              /var/log/spark/user/*/*\n        Path_Key          filename\n        Buffer_Chunk_Size 1M\n        Buffer_Max_Size   5M\n        Skip_Long_Lines   On\n        Skip_Empty_Lines  On\n\n  input-event-logs.conf: |\n    [INPUT]\n        Name              tail\n        Path              /var/log/spark/apps/*\n        Path_Key          filename\n        Buffer_Chunk_Size 1M\n        Buffer_Max_Size   5M\n        Skip_Long_Lines   On\n        Skip_Empty_Lines  On\n\n  output-splunk.conf: |\n    [OUTPUT]\n        Name         splunk\n        Match        *\n        Host         < INTERNAL DNS of Splunk EC2 Instance >\n        Port         8088\n        TLS          On\n        TLS.Verify   Off\n        Splunk_Token < Token as provided by the HTTP Event Collector in Splunk >\n\n  output-opensearch.conf: |\n    [OUTPUT]\n        Name        es\n        Match       *\n        Host        < HOST NAME of the OpenSearch Domain | No HTTP protocol >\n        Port        443\n        TLS         On\n        AWS_Auth    On\n        AWS_Region  < Region >\n        Retry_Limit 6\n</code></pre>\n<p>In your Amazon Web Services Cloud9 workspace, modify the ConfigMap accordingly. Replace the placeholder text with your own values by opening the file in the vi editor with the following commands. 
If you prefer, you can use pico or another editor:</p>\n<p>Bash</p>\n<pre><code class=\"lang-\">[../aws-emr-eks-log-forwarding] $ cd kube/configmaps\n[../aws-emr-eks-log-forwarding/kube/configmaps] $ vi emr_configmap.yaml\n\n# Modify the emr_configmap.yaml as above\n# Save the file once it is completed\n</code></pre>\n<p>Complete either the Splunk output configuration or the Amazon OpenSearch Service output configuration.</p>\n<p>Next, run the following commands to add the two Fluent Bit sidecar and subprocess ConfigMaps:</p>\n<p>Bash</p>\n<pre><code class=\"lang-\">[../aws-emr-eks-log-forwarding/kube/configmaps] $ kubectl apply -f emr_configmap.yaml\n[../aws-emr-eks-log-forwarding/kube/configmaps] $ kubectl apply -f emr_entrypoint_configmap.yaml\n</code></pre>\n<p>You don’t need to modify the second ConfigMap because it’s the subprocess script that runs inside the Fluent Bit sidecar container. To verify that the ConfigMaps have been installed, run the following command:</p>\n<p>Bash</p>\n<pre><code class=\"lang-\">$ kubectl get cm -n sparkns\nNAME                         DATA   AGE\nfluent-bit-sidecar-config    6      15s\nfluent-bit-sidecar-wrapper   2      15s\n</code></pre>\n<h5><a id=\"Set_up_a_customized_EMR_container_image_461\"></a><strong>Set up a customized EMR container image</strong></h5>\n<p>The <a href=\"https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/scripts/bostonproperty.py\" target=\"_blank\">sample PySpark script</a> requires the Boto3 package, which isn’t available in the standard EMR container images. 
If you want to run your own script and it doesn’t require a customized EMR container image, you can skip this step.</p>\n<p>Run the following script:</p>\n<p>Bash</p>\n<pre><code class=\"lang-\">[../aws-emr-eks-log-forwarding] $ cd ecr\n[../aws-emr-eks-log-forwarding/ecr] $ bash create_custom_image.sh <region> <EMR container image account number>\n</code></pre>\n<p>You can find the EMR container image account number (the ECR registry account number) for your Region in <a href=\"https://docs.aws.amazon.com/emr/latest/EMR-on-EKS-DevelopmentGuide/docker-custom-images-tag.html\" target=\"_blank\">How to select a base image URI</a>. For example, the registry account number for <code>us-east-1</code> is <code>755674844232</code>.</p>\n<p>To verify the repository and image, run the following commands:</p>\n<p>Bash</p>\n<pre><code class=\"lang-\">$ aws ecr describe-repositories --region < region > | grep emr-6.5.0-custom\n "repositoryArn": "arn:aws:ecr:xx-xxxx-x:xxxxxxxxxxxx:repository/emr-6.5.0-custom",\n "repositoryName": "emr-6.5.0-custom",\n "repositoryUri": "xxxxxxxxxxxx.dkr.ecr.xx-xxxx-x.amazonaws.com/emr-6.5.0-custom",\n\n$ aws ecr describe-images --region < region > --repository-name emr-6.5.0-custom | jq '.imageDetails[0].imageTags'\n[\n "latest"\n]\n</code></pre>\n<h4><a id=\"Prepare_pod_templates_for_Spark_jobs_492\"></a><strong>Prepare pod templates for Spark jobs</strong></h4>\n<p>Upload the Spark driver and Spark executor pod templates to an S3 bucket and prefix. 
The two pod templates can be found in the <a href=\"https://github.com/aws-samples/aws-emr-eks-log-forwarding/tree/main/kube/podtemplates\" target=\"_blank\">GitHub repository</a>:</p>\n<ul>\n<li><strong>emr_driver_template.yaml</strong> – Spark driver pod template</li>\n<li><strong>emr_executor_template.yaml</strong> – Spark executor pod template</li>\n</ul>\n<p>The pod templates provided here should not be modified.</p>\n<h4><a id=\"Submitting_a_Spark_job_with_a_Fluent_Bit_sidecar_container_503\"></a><strong>Submitting a Spark job with a Fluent Bit sidecar container</strong></h4>\n<p>This Spark job example uses the <a href=\"https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/scripts/bostonproperty.py\" target=\"_blank\">bostonproperty.py</a> script. To use this script, upload it to an accessible S3 bucket and prefix, and complete the preceding steps to build a customized EMR container image. You also need to download the CSV file from the <a href=\"https://github.com/aws-samples/aws-emr-eks-log-forwarding/tree/main/data\" target=\"_blank\">GitHub repo</a>, unzip it, and upload the unzipped file to the following location: <code>s3://< your chosen bucket >/< first level folder >/data/boston-property-assessment-2021.csv</code>.</p>\n<p>The following commands assume that you launched your EKS cluster and EMR virtual cluster with the parameters indicated in the <a href=\"https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/emreks/parameters.sh\" target=\"_blank\">GitHub repo</a>.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/0939d0b56efd46a7914454e4a34acfea_image.png\" alt=\"image.png\" /></p>\n<p>Alternatively, use the <a href=\"https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/emreks/scripts/run_emr_script.sh\" target=\"_blank\">provided script</a> to run the job. 
Change the directory to the <code>scripts</code> folder in <code>emreks</code> and run the script as follows:</p>\n<p>Bash</p>\n<pre><code class=\"lang-\">[../aws-emr-eks-log-forwarding] $ cd emreks/scripts\n[../aws-emr-eks-log-forwarding/emreks/scripts] $ bash run_emr_script.sh < S3 bucket name > < ECR container image > < script path >\n\n# Example:\n# bash run_emr_script.sh emreksdemo-123456 123456789012.dkr.ecr.us-east-2.amazonaws.com/emr-6.5.0-custom s3://emreksdemo-123456/scripts/scriptname.py\n</code></pre>\n<p>After the Spark job is submitted successfully, you get a JSON response like the following:</p>\n<p>JSON</p>\n<pre><code class=\"lang-\">{\n "id": "0000000305e814v0bpt",\n "name": "emreksdemo-job",\n "arn": "arn:aws:emr-containers:xx-xxxx-x:XXXXXXXXXXX:/virtualclusters/upobc00wgff5XXXXXXXXXXX/jobruns/0000000305e814v0bpt",\n "virtualClusterId": "upobc00wgff5XXXXXXXXXXX"\n}\n</code></pre>\n<h4><a id=\"What_happens_when_you_submit_a_Spark_job_with_a_sidecar_container_535\"></a><strong>What happens when you submit a Spark job with a sidecar container</strong></h4>\n<p>After you submit a Spark job, you can see what is happening by viewing the generated pods and their logs. First, use kubectl to list the pods in the namespace where the EMR virtual cluster runs; in this case, it’s <code>sparkns</code>. The first pod in the following output is the job controller for this Spark job. The second pod is the Spark executor; there can be more than one executor pod, depending on how many executor instances the Spark job requests (this example requests one). 
The third pod is the Spark driver pod.</p>\n<p>Bash</p>\n<pre><code class=\"lang-\">$ kubectl get pods -n sparkns\nNAME                                        READY   STATUS    RESTARTS   AGE\n0000000305e814v0bpt-hvwjs                   3/3     Running   0          25s\nemreksdemo-script-1247bf80ae40b089-exec-1   0/3     Pending   0          0s\nspark-0000000305e814v0bpt-driver            3/3     Running   0          11s\n</code></pre>\n<p>To see what happens in the sidecar container, follow the logs of the sidecar container (<code>custom-side-car-container</code>) in the Spark driver pod. The sidecar container launches with the Spark pods and keeps running until the file <code>/var/log/fluentd/main-container-terminated</code> no longer exists. For more information about how Amazon EMR controls the pod lifecycle, refer to <a href=\"https://docs.aws.amazon.com/emr/latest/EMR-on-EKS-DevelopmentGuide/pod-templates.html\" target=\"_blank\">Using pod templates</a>. The subprocess script ties the sidecar container to this same lifecycle: when the EMR-controlled pod lifecycle process removes that file, the script stops Fluent Bit and the sidecar container exits.</p>\n<p>Bash</p>\n<pre><code class=\"lang-\">$ kubectl logs spark-0000000305e814v0bpt-driver -n sparkns -c custom-side-car-container --follow=true\n\nWaiting for file /var/log/fluentd/main-container-terminated to appear...\nAmazon Web Services for Fluent Bit Container Image Version 2.24.0Start wait: 1652190909\nElapsed Wait: 0\nNot found count: 0\nWaiting...\nFluent Bit v1.9.3\n* Copyright (C) 2015-2022 The Fluent Bit Authors\n* Fluent Bit is a CNCF sub-project under the umbrella of Fluentd\n* https://fluentbit.io\n\n[2022/05/10 13:55:09] [ info] [fluent bit] version=1.9.3, commit=9eb4996b7d, pid=11\n[2022/05/10 13:55:09] [ info] [storage] version=1.2.0, type=memory-only, sync=normal, checksum=disabled, max_chunks_up=128\n[2022/05/10 13:55:09] [ info] [cmetrics] version=0.3.1\n[2022/05/10 13:55:09] [ info] [output:splunk:splunk.0] worker #0 started\n[2022/05/10 13:55:09] [ info] [output:splunk:splunk.0] worker #1 started\n[2022/05/10 13:55:09] [ info] [output:es:es.1] worker #0 started\n[2022/05/10 13:55:09] [ info] 
[output:es:es.1] worker #1 started\n[2022/05/10 13:55:09] [ info] [http_server] listen iface=0.0.0.0 tcp_port=2020\n[2022/05/10 13:55:09] [ info] [sp] stream processor started\nWaiting for file /var/log/fluentd/main-container-terminated to appear...\nLast heartbeat: 1652190914\nElapsed Time since after heartbeat: 0\nFound count: 0\nlist files:\n-rw-r--r-- 1 saslauth 65534 0 May 10 13:55 /var/log/fluentd/main-container-terminated\nLast heartbeat: 1652190918\n\n…\n\n[2022/05/10 13:56:09] [ info] [input:tail:tail.0] inotify_fs_add(): inode=58834691 watch_fd=6 name=/var/log/spark/user/spark-0000000305e814v0bpt-driver/stdout-s3-container-log-in-tail.pos\n[2022/05/10 13:56:09] [ info] [input:tail:tail.1] inotify_fs_add(): inode=54644346 watch_fd=1 name=/var/log/spark/apps/spark-0000000305e814v0bpt\nOutside of loop, main-container-terminated file no longer exists\nls: cannot access /var/log/fluentd/main-container-terminated: No such file or directory\nThe file /var/log/fluentd/main-container-terminated doesn't exist anymore;\nTERMINATED PROCESS\nFluent-Bit pid: 11\nKilling process after sleeping for 15 seconds\nroot 11 8 0 13:55 ? 00:00:00 /fluent-bit/bin/fluent-bit -e /fluent-bit/firehose.so -e /fluent-bit/cloudwatch.so -e /fluent-bit/kinesis.so -c /fluent-bit/etc/fluent-bit.conf\nroot 114 7 0 13:56 ? 
00:00:00 grep fluent\nKilling process 11\n[2022/05/10 13:56:24] [engine] caught signal (SIGTERM)\n[2022/05/10 13:56:24] [ info] [input] pausing tail.0\n[2022/05/10 13:56:24] [ info] [input] pausing tail.1\n[2022/05/10 13:56:24] [ warn] [engine] service will shutdown in max 5 seconds\n[2022/05/10 13:56:25] [ info] [engine] service has stopped (0 pending tasks)\n[2022/05/10 13:56:25] [ info] [input:tail:tail.1] inotify_fs_remove(): inode=54644346 watch_fd=1\n[2022/05/10 13:56:25] [ info] [input:tail:tail.0] inotify_fs_remove(): inode=60917120 watch_fd=1\n[2022/05/10 13:56:25] [ info] [input:tail:tail.0] inotify_fs_remove(): inode=60917121 watch_fd=2\n[2022/05/10 13:56:25] [ info] [input:tail:tail.0] inotify_fs_remove(): inode=58834690 watch_fd=3\n[2022/05/10 13:56:25] [ info] [input:tail:tail.0] inotify_fs_remove(): inode=58834692 watch_fd=4\n[2022/05/10 13:56:25] [ info] [input:tail:tail.0] inotify_fs_remove(): inode=58834689 watch_fd=5\n[2022/05/10 13:56:25] [ info] [input:tail:tail.0] inotify_fs_remove(): inode=58834691 watch_fd=6\n[2022/05/10 13:56:25] [ info] [output:splunk:splunk.0] thread worker #0 stopping...\n[2022/05/10 13:56:25] [ info] [output:splunk:splunk.0] thread worker #0 stopped\n[2022/05/10 13:56:25] [ info] [output:splunk:splunk.0] thread worker #1 stopping...\n[2022/05/10 13:56:25] [ info] [output:splunk:splunk.0] thread worker #1 stopped\n[2022/05/10 13:56:25] [ info] [output:es:es.1] thread worker #0 stopping...\n[2022/05/10 13:56:25] [ info] [output:es:es.1] thread worker #0 stopped\n[2022/05/10 13:56:25] [ info] [output:es:es.1] thread worker #1 stopping...\n[2022/05/10 13:56:25] [ info] [output:es:es.1] thread worker #1 stopped\n</code></pre>\n<h4><a id=\"View_the_forwarded_logs_in_Splunk_or_Amazon_OpenSearch_Service_618\"></a><strong>View the forwarded logs in Splunk or Amazon OpenSearch Service</strong></h4>\n<p>To view the forwarded logs, do a search in Splunk or on the Amazon OpenSearch Service console. 
If you’re using a shared log aggregator, you may have to filter the results. In this configuration, the logs tailed by Fluent Bit are under <code>/var/log/spark/*</code>. The following screenshots show the logs from the Kubernetes Spark driver <code>stdout</code> that were forwarded to the log aggregators. You can compare these results with the logs retrieved using kubectl:</p>\n<p>Bash</p>\n<pre><code class=\"lang-\">kubectl logs < Spark Driver Pod > -n < namespace > -c spark-kubernetes-driver --follow=true\n\n…\nroot\n |-- PID: string (nullable = true)\n |-- CM_ID: string (nullable = true)\n |-- GIS_ID: string (nullable = true)\n |-- ST_NUM: string (nullable = true)\n |-- ST_NAME: string (nullable = true)\n |-- UNIT_NUM: string (nullable = true)\n |-- CITY: string (nullable = true)\n |-- ZIPCODE: string (nullable = true)\n |-- BLDG_SEQ: string (nullable = true)\n |-- NUM_BLDGS: string (nullable = true)\n |-- LUC: string (nullable = true)\n…\n\n|02108|RETAIL CONDO |361450.0 |63800.0 |5977500.0 |\n|02108|RETAIL STORE DETACH |2295050.0 |988200.0 |3601900.0 |\n|02108|SCHOOL |1.20858E7 |1.20858E7 |1.20858E7 |\n|02108|SINGLE FAM DWELLING |5267156.561085973 |1153400.0 |1.57334E7 |\n+-----+-----------------------+--------------------+---------------+---------------+\nonly showing top 50 rows\n</code></pre>\n<p>The following screenshot shows the Splunk logs.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/04bc8a8dc2554addae7b48748be35602_image.png\" alt=\"image.png\" /></p>\n<p>The following screenshot shows the Amazon OpenSearch Service logs.</p>\n<p><img src=\"https://dev-media.amazoncloud.cn/450f7ec06b1a4cf780bd17eae1691047_image.png\" alt=\"image.png\" /></p>\n<h4><a id=\"Optional_Include_a_buffer_between_Fluent_Bit_and_the_log_aggregators_660\"></a><strong>Optional: Include a buffer between Fluent Bit and the log aggregators</strong></h4>\n<p>If you expect to generate a lot of logs because highly concurrent Spark jobs create many individual connections that may overwhelm your Amazon OpenSearch Service or Splunk log aggregation clusters, consider employing a buffer between the Fluent Bit sidecars and your log aggregator. One option is to use <a href=\"https://aws.amazon.com/kinesis/data-firehose/\" target=\"_blank\">Amazon Kinesis Data Firehose</a> as the buffering service.</p>\n<p>Kinesis Data Firehose has built-in delivery to both Amazon OpenSearch Service and Splunk. If you use Amazon OpenSearch Service, refer to <a href=\"https://docs.aws.amazon.com/opensearch-service/latest/developerguide/integrations.html\" target=\"_blank\">Loading streaming data from Amazon Kinesis Data Firehose</a>. If you use Splunk, refer to <a href=\"https://docs.splunk.com/Documentation/AddOns/released/Firehose/ConfigureFirehose\" target=\"_blank\">Configure Amazon Kinesis Firehose to send data to the Splunk platform</a> and <a href=\"https://docs.aws.amazon.com/firehose/latest/dev/create-destination.html\" target=\"_blank\">Choose Splunk for Your Destination</a>.</p>\n<p>To configure Fluent Bit to send logs to Kinesis Data Firehose, add the following output to your ConfigMap. Refer to the <a href=\"https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/kube/configmaps/emr_configmap.yaml\" target=\"_blank\">GitHub ConfigMap example</a> and add the <code>@INCLUDE</code> under the <code>[SERVICE]</code> section:</p>\n<p>Apache Configuration</p>\n<pre><code class=\"lang-\">    @INCLUDE output-kinesisfirehose.conf\n…\n\n  output-kinesisfirehose.conf: |\n    [OUTPUT]\n        Name            kinesis_firehose\n        Match           *\n        region          < region >\n        delivery_stream < Kinesis Firehose Stream Name >\n</code></pre>\n<h4><a id=\"Optional_Use_data_streams_for_Amazon_OpenSearch_Service_683\"></a><strong>Optional: Use data streams for Amazon OpenSearch Service</strong></h4>\n<p>If the number of documents grows rapidly and you don’t need to update older documents, managing the indexes in your OpenSearch Service cluster takes extra work. 
This involves steps like creating a rollover index alias, defining a write index, and defining common mappings and settings for the backing indexes. Consider using data streams to simplify this process and enforce a setup that best suits your time series data. For instructions on implementing data streams, refer to <a href=\"https://opensearch.org/docs/latest/opensearch/data-streams/\" target=\"_blank\">Data streams</a>.</p>\n<h4><a id=\"Clean_up_689\"></a><strong>Clean up</strong></h4>\n<p>To avoid incurring future charges, delete the resources you created. First, remove the EMR virtual cluster by running the <a href=\"https://docs.aws.amazon.com/cli/latest/reference/emr-containers/delete-virtual-cluster.html\" target=\"_blank\">delete-virtual-cluster</a> command. Then delete all the CloudFormation stacks generated by the deployment script; this removes the EKS cluster.</p>\n<p>If you launched an OpenSearch Service domain, you can delete the domain from the OpenSearch Service console. If you used the script to launch a Splunk instance, delete the CloudFormation stack that launched the Splunk instance. 
This removes the Splunk instance and associated resources.</p>\n<p>You can also use the following scripts to clean up resources:</p>\n<ul>\n<li>To remove an OpenSearch Service domain, run the <a href=\"https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/opensearch/delete_opensearch_domain.sh\" target=\"_blank\">delete_opensearch_domain.sh</a> script</li>\n<li>To remove the Splunk resources, run the <a href=\"https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/splunk/remove_splunk_deployment.sh\" target=\"_blank\">remove_splunk_deployment.sh</a> script</li>\n<li>To remove the EKS cluster, including the EMR virtual cluster, VPC, and IAM roles, run the <a href=\"https://github.com/aws-samples/aws-emr-eks-log-forwarding/blob/main/emreks/remove_emr_eks_deployment.sh\" target=\"_blank\">remove_emr_eks_deployment.sh</a> script</li>\n</ul>\n<h4><a id=\"Conclusion_703\"></a><strong>Conclusion</strong></h4>\n<p>EMR on EKS makes it possible to run Spark jobs on Kubernetes quickly and cost-efficiently by scheduling transient pods that are launched for a job and deleted when the job is complete. To log these operations within the same lifecycle as the Spark jobs, this post provides a lightweight yet powerful solution based on pod templates and Fluent Bit. This approach decouples log forwarding from the Kubernetes cluster by operating at the Spark application level instead. It also avoids routing through intermediaries like CloudWatch, reducing cost and complexity. 
In this way, you can address security concerns and simplify management for DevOps teams and system administrators, while giving Spark users cost-efficient insight into their Spark jobs.</p>\n<p>If you have questions or suggestions, please leave a comment.</p>\n<h4><a id=\"About_the_Author_711\"></a><strong>About the Author</strong></h4>\n<p><img src=\"https://dev-media.amazoncloud.cn/27ffce745d524d0a9d7605d13848b097_image.png\" alt=\"image.png\" /></p>\n<p><strong>Matthew Tan</strong> is a Senior Analytics Solutions Architect at Amazon Web Services and provides guidance to customers developing solutions with Amazon Web Services Analytics services on their analytics workloads.</p>\n"}