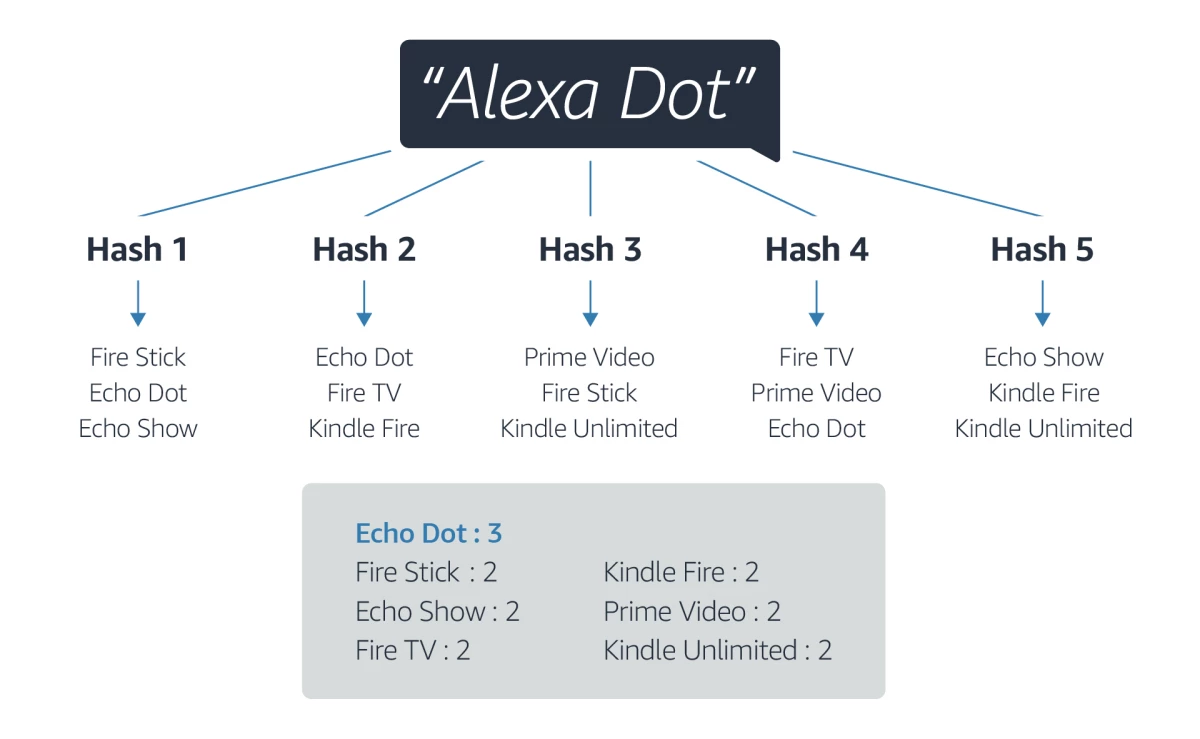

Locality-sensitive hashing enables a cache to hold more than three times as many query results.

One of the ways that Amazon makes online shopping more convenient for customers is by caching the results of popular product queries. If a lot of people are searching for X Brand shoes, then Amazon’s servers store the results of the query “X brand shoes” and serve them to anyone who types that query, without having to re-execute the product retrieval algorithm and fetch product data from the Amazon catalogue.

The problem is that if “X brand shoes” is a popular query, then “X brand shoe” probably is, too, and maybe “Shoes brand X”, “X brand footwear”, and other variations. If the servers fill the cache with different ways of describing the same product, they use its space inefficiently.

In a [paper](https://www.amazon.science/publications/rose-robust-caches-for-amazon-product-search) we presented last week at [the Web Conference](https://www.amazon.science/conferences-and-events/the-web-conference-2022), we describe a technique for using cache space more efficiently, by storing only one descriptor of every product. To route syntactically varied but semantically similar queries to that one descriptor, we use a technique called locality-sensitive hashing (LSH).

#### **Locality-sensitive hashing**

To people familiar with the concept of hashing from college computer science courses, LSH can seem counterintuitive. Like conventional hashing, LSH involves a hash function that maps an arbitrary string of symbols to a single location in an array, commonly known as a hash bucket.

But unlike conventional hashing, which attempts to distribute strings across buckets randomly and uniformly, LSH tries to map similar symbol strings to the same bucket. Where conventional hashing seeks to minimize collisions, or mappings to the same bucket, LSH encourages them.

Essentially, our idea is to map related product queries to the same bucket, which stores the location of the associated results. The catch is that, like a conventional hash function, a locality-sensitive hash function will sometimes map unrelated strings to the same bucket. “Brand X shoes”, “Brand X shoe”, and “Shoes Brand X” might all map to the same bucket, but “Brand A jeans” might, too.

Part of our solution to this problem is to store, in every bucket, one canonical query for each set of related queries that maps to that location. For the family of queries about Brand X shoes, for instance, we pick one at random (say, “Brand X shoes”) and store that in the associated bucket, as an index to the list of appropriate query results.

Of course, the same bucket might also contain an index for “Brand A jeans”, or an indefinite number of additional indices. If our query (say, “X Brand footwear”) maps to a bucket with multiple indices, how do we know which set of results to retrieve?

Our answer is to hash the same string multiple times, using slightly different locality-sensitive hash functions. One function might map “X Brand footwear” to a bucket that includes “Brand X shoes”, “Brand A jeans”, and “Brand Q cameras”; another might map it to a bucket that includes “Acme widgets”, “Brand X shoes”, and “Top-shelf pencils”; and so on. Across all these mappings, we tally how often each index appears and retrieve the results associated with the most frequent one.

![Counting matches for a new query across multiple hash functions](https://dev-media.amazoncloud.cn/832a44d953a14d95b468d0c2ece4b43f_%E4%B8%8B%E8%BD%BD.jpg)

To determine which canonical phrase corresponds to a new query, the new algorithm simply counts the number of matches across multiple hash functions.

In practice, we find that 36 different hash functions work well. Using that many reduces the likelihood of retrieving the wrong set of query results to near zero.

#### **Similarity functions**

To map similar inputs to the same buckets, locality-sensitive hash functions must, of course, encode some notion of similarity. For any standard similarity measure (Euclidean distance, L-norm, and so on), it’s possible to construct a locality-sensitive hash function that implements it.

For our purposes, we use weighted Jaccard similarity. Jaccard similarity is the ratio of the number of elements two data items have in common to the total number of elements they contain: it’s the ratio of the size of their intersection to the size of their union.

Weighted Jaccard similarity gives certain correspondences between data items greater weight than others. In our case, we assign weights using a machine learning model trained to do named-entity recognition.

The intuition is that customers looking for Brand X shoes will be more accepting of query results that include Brand Y shoes than of results that include Brand X T-shirts. So our weighted Jaccard measure gives greater weight to product category correspondences than to brand name correspondences. That weighting is all done offline and incorporated into the design of the hash function.

#### **Concept clusters**

In a second Web Conference paper, “[Massive text normalization via an efficient randomized algorithm](https://www.amazon.science/publications/massive-text-normalization-via-an-efficient-randomized-algorithm)”, we describe the process we use to identify clusters of related queries (“Brand X shoes”, “Brand X shoe”, “Brand X footwear”, and so on) and select one of them as the index query. (In fact, the paper describes a general procedure for assimilating varied expressions of the same concept to a single, canonical expression. But we adapt it to the problem of caching query results.)

Once we’ve constructed our family of 36 hash functions, we use them all to hash our complete list of popular product queries. Every time two queries are hashed to the same bucket, we increment the weight of the edge that connects them in a huge graph. So by the time we’ve completed the final hash, the maximum possible edge weight is 36, and the minimum weight of any edge in the graph is 1.

Then, we delete all the graph edges whose weights fall below some threshold. The result is a host of subgraphs of related terms. From each subgraph, we randomly pick one term as the index for that family of queries.

![Clusters of related concepts left after low-weight edges are deleted](https://dev-media.amazoncloud.cn/8c7e3b8c00084a259f06bcafd7b7d75d_%E4%B8%8B%E8%BD%BD.jpg)

Deleting low-weight edges from a graph of hash correspondences leaves clusters of related concepts.

To evaluate our approach, we selected 60 million popular Amazon Store product queries and divided them into three equal groups, according to frequency: normal queries, hard queries, and long-tail queries. Then we used four different techniques to cache the results of those queries in a fixed amount of storage space.

We measured performance using F1 score, which combines recall (the fraction of results that the method retrieves from the cache) and precision (the frequency with which the method retrieves the correct query results).

Compared to exact caching, which uses conventional hashing to map queries to results, the improvements in F1 score afforded by our method ranged from 33%, for the normal queries, to 250%, for the long-tail queries. Those improvements did come at the expense of an increase in retrieval time, from 0.1 milliseconds to 2.1 milliseconds. But in many cases, the increase in cache capacity will be worth it.

ABOUT THE AUTHOR

#### **[Chen Luo](https://www.amazon.science/author/chen-luo)**

Chen Luo is an applied scientist at Amazon.
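
The lookup described under “Locality-sensitive hashing” and “Similarity functions” can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation from the paper. The `TOKEN_WEIGHTS` table is a hypothetical stand-in for the weights produced by the named-entity-recognition model, whitespace tokenization is assumed, and the hash family (an exponential race in which each token's rate is its weight) is just one simple construction whose collision probability equals weighted Jaccard similarity when every token carries a fixed weight. All function names and the `min_votes` parameter are illustrative.

```python
import hashlib
import math
from collections import Counter

NUM_HASHES = 36  # the article reports that 36 hash functions work well

# Hypothetical stand-in for NER-derived weights: product-category tokens
# count more than brand tokens. Tokens not listed here get weight 1.0.
TOKEN_WEIGHTS = {"shoes": 3.0, "footwear": 3.0, "jeans": 3.0, "t-shirts": 3.0}


def tokens(query):
    """Assumed tokenization: lowercase, split on whitespace."""
    return set(query.lower().split())


def weight(token):
    return TOKEN_WEIGHTS.get(token, 1.0)


def weighted_jaccard(a, b):
    """Weight of the shared tokens divided by weight of all tokens."""
    ta, tb = tokens(a), tokens(b)
    union = sum(weight(t) for t in ta | tb)
    return sum(weight(t) for t in ta & tb) / union if union else 0.0


def uniform(seed, token):
    """Deterministic pseudo-random number in (0, 1] for a (seed, token) pair."""
    digest = hashlib.sha256(f"{seed}:{token}".encode()).digest()
    return (int.from_bytes(digest[:8], "big") + 1) / (2**64 + 1)


def lsh_bucket(seed, query):
    """Bucket key = token that wins an exponential race with rate = weight.

    Two non-empty queries get the same key with probability equal to their
    weighted Jaccard similarity. Raises ValueError on an empty query.
    """
    return min(tokens(query),
               key=lambda t: -math.log(uniform(seed, t)) / weight(t))


def build_tables(canonical_queries):
    """One table per hash function: bucket key -> set of canonical queries."""
    tables = [dict() for _ in range(NUM_HASHES)]
    for seed, table in enumerate(tables):
        for q in canonical_queries:
            table.setdefault(lsh_bucket(seed, q), set()).add(q)
    return tables


def lookup(tables, query, min_votes=1):
    """Tally canonical queries over all buckets the query lands in."""
    votes = Counter()
    for seed, table in enumerate(tables):
        votes.update(table.get(lsh_bucket(seed, query), ()))
    if not votes:
        return None  # cache miss
    best, count = votes.most_common(1)[0]
    return best if count >= min_votes else None


tables = build_tables(["x brand shoes", "a brand jeans", "q brand cameras"])
print(lookup(tables, "Shoes brand X"))
print(weighted_jaccard("x brand shoes", "y brand shoes"),
      weighted_jaccard("x brand shoes", "x brand t-shirts"))
```

Because “Shoes brand X” and “x brand shoes” contain the same tokens, they land in the same bucket under every hash function, so the canonical query collects all 36 votes. With the toy weights, a query that shares the product category scores higher than one that shares only the brand, which mirrors the intuition in the article. Raising `min_votes` would turn weak matches into cache misses.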

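The graph construction described under “Concept clusters” can be sketched the same way. The block below is a toy version under stated assumptions: it uses plain, unweighted MinHash over whitespace tokens as a stand-in hash family, it compares every pair of queries that share a bucket (quadratic in bucket size, so only suitable for small inputs), and the function names and `threshold` value are illustrative rather than taken from the paper.

```python
import hashlib
import random
from collections import Counter, defaultdict
from itertools import combinations

NUM_HASHES = 36  # size of the hash family used in the article


def bucket(seed, query):
    """Stand-in hash: plain MinHash over lowercase whitespace tokens."""
    toks = set(query.lower().split())
    return min(toks,
               key=lambda t: hashlib.sha256(f"{seed}:{t}".encode()).digest())


def edge_weights(queries):
    """For each pair of queries, count the hash functions that co-bucket them."""
    weights = Counter()
    for seed in range(NUM_HASHES):
        buckets = defaultdict(list)
        for q in queries:
            buckets[bucket(seed, q)].append(q)
        for members in buckets.values():
            for a, b in combinations(sorted(members), 2):
                weights[(a, b)] += 1
    return weights


def clusters(queries, threshold):
    """Drop edges lighter than `threshold`; return the connected components."""
    parent = {q: q for q in queries}

    def find(q):
        while parent[q] != q:
            parent[q] = parent[parent[q]]  # path halving
            q = parent[q]
        return q

    for (a, b), w in edge_weights(queries).items():
        if w >= threshold:
            parent[find(a)] = find(b)

    groups = defaultdict(list)
    for q in queries:
        groups[find(q)].append(q)
    return list(groups.values())


def pick_canonical(groups, rng=random):
    """Randomly choose one member of each cluster as its index query."""
    return {rng.choice(group): group for group in groups}


queries = ["x brand shoes", "shoes brand x", "acme widgets"]
groups = clusters(queries, threshold=18)
print(pick_canonical(groups))
```

In this toy input, the two shoe queries share every token, so their edge reaches the maximum weight of 36 and survives any threshold, while “acme widgets” shares no token with them and stays in its own cluster. At the scale described in the article, the pairwise comparison inside each bucket would need a more scalable replacement.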

“AWS” is an abbreviation of “Amazon Web Services” and is not displayed as a trademark on this website.
